Terraform vs. Helm for Kubernetes

Time for a competition! When it comes to Helm, Terraform, and K8s, which infrastructure provisioning tool beats the other out and why? Let's take both for a test drive.


I have been an avid user of Terraform and use it for many things in my infrastructure, be it provisioning machines or setting up my whole stack. When I started working with Helm, my first impression was, “Wow! This is like Terraform for Kubernetes resources!” What rekindled these thoughts was HashiCorp's announcement, a few weeks ago, of a Kubernetes provider for Terraform. With it, one can use Terraform both to provision infrastructure and to manage Kubernetes resources. So I decided to take both for a test drive and see what works better in one vs. the other. Before we get to the meat, here is a quick recap of their similarities and differences. For brevity, whenever I mention Terraform in this post, I am referring to the Terraform Kubernetes provider.


There are some key similarities:

- Both allow you to describe and maintain your Kubernetes objects as code. Helm uses standard manifests along with Go templates, whereas Terraform uses the HCL (or JSON) file format.

- Both allow the use of variables and overriding them at various levels, such as in files and on the command line; Terraform additionally supports environment variables.

- Both support modularity (Helm has subcharts, while Terraform has modules).

- Both provide a curated list of packages (Helm has the stable and incubator charts, while Terraform recently launched the Terraform Module Registry, though as of this post there are no modules in the registry that work with Kubernetes).

- Both allow installation from multiple sources, such as local directories and Git repositories.

- Both allow dry runs of actions before actually running them (Helm has a `--dry-run` flag, while Terraform has the `plan` subcommand).
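Variable overriding is where the two tools differ in detail. The Python sketch below models the precedence order described in Terraform's documentation, in which `TF_VAR_`-prefixed environment variables rank below values in `terraform.tfvars`, and `-var` flags on the command line win over both. It is a simplification (it ignores `*.auto.tfvars`, `-var-file`, and non-string types, and exact rules can vary by Terraform version); the function name and default values are hypothetical. Helm layers values in a similar way: the chart's `values.yaml` is overridden by `-f` files, which are in turn overridden by `--set`.

```python
from typing import Dict, List, Mapping


def resolve_terraform_vars(
    defaults: Mapping[str, str],
    env: Mapping[str, str],
    tfvars_file: Mapping[str, str],
    cli_vars: List[str],
) -> Dict[str, str]:
    """Resolve variables from lowest to highest precedence (later layers win)."""
    resolved: Dict[str, str] = dict(defaults)

    # 1. TF_VAR_<name> environment variables override declared defaults.
    prefix = "TF_VAR_"
    for key, value in env.items():
        if key.startswith(prefix):
            resolved[key[len(prefix):]] = value

    # 2. Values from terraform.tfvars override environment variables.
    resolved.update(tfvars_file)

    # 3. -var 'name=value' flags override everything, applied in the order given.
    for assignment in cli_vars:
        name, _, value = assignment.partition("=")
        resolved[name] = value

    return resolved


# Hypothetical inputs; these defaults do not come from the article's repository.
resolved = resolve_terraform_vars(
    defaults={"fe_replicas": "3", "fe_image": "example/gb-frontend:v1"},
    env={"TF_VAR_fe_replicas": "2", "HOME": "/home/user"},
    tfvars_file={"fe_replicas": "1"},
    cli_vars=["fe_image=harshals/gb-frontend:1.0"],
)
print(resolved)  # {'fe_replicas': '1', 'fe_image': 'harshals/gb-frontend:1.0'}
```

Note that `fe_replicas` ends up as `"1"`: the tfvars file beats the environment variable, which surprises people who expect environment variables to take priority.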

With this premise in mind, I set out to understand the differences between the two. I took a simple use case with the following objectives:

- Install a Kubernetes cluster (possible with Terraform only)

- Install Guestbook

- Upgrade Guestbook

- Roll back the upgrade

Setup: Provisioning the Kubernetes Cluster

In this step, we will create a Kubernetes cluster via Terraform using these steps:

- Clone this Git repo.

- The kubectl, terraform, ssh, and helm binaries should be available in the shell you are working in.

- Create a file called `terraform.tfvars` with the following content:

do_token = "your_digitalocean_access_token"

# You will need a token for kubeadm, which can be generated using the following command:

# python -c 'import random; print("%06x.%016x" % (random.SystemRandom().getrandbits(3*8), random.SystemRandom().getrandbits(8*8)))'

kubeadm_token = "token_from_above_python_command"

# private_key_file = "/c/users/harshal/id_rsa"

private_key_file = "path_to_your_private_key_file"

# public_key_file = "/c/users/harshal/id_rsa.pub"

public_key_file = "path_to_your_public_key_file"
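kubeadm bootstrap tokens have the form `<6 characters>.<16 characters>`, using only lowercase letters and digits. The one-liner above builds one from random bits; the zero-padded `%06x`/`%016x` formats matter, because plain `%x` drops leading zeros and can produce a token that is too short. As a sketch, the same thing can be written as a small Python 3.6+ script using the standard `secrets` module, with a format check added. The function names are hypothetical; kubeadm itself also offers a `kubeadm token generate` subcommand.

```python
import re
import secrets

# kubeadm token format: 6 chars, a dot, 16 chars, all from [a-z0-9].
TOKEN_PATTERN = re.compile(r"[a-z0-9]{6}\.[a-z0-9]{16}")


def generate_kubeadm_token() -> str:
    """Return a random token such as 'a1b2c3.0123456789abcdef'."""
    # token_hex(n) returns exactly 2*n lowercase hex characters.
    token_id = secrets.token_hex(3)       # 6 characters
    token_secret = secrets.token_hex(8)   # 16 characters
    return f"{token_id}.{token_secret}"


def is_valid_kubeadm_token(token: str) -> bool:
    """Check a token against the expected kubeadm format."""
    return TOKEN_PATTERN.fullmatch(token) is not None


if __name__ == "__main__":
    # Prints a line ready to paste into terraform.tfvars.
    print(f'kubeadm_token = "{generate_kubeadm_token()}"')
```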

Now we will run a set of commands to provision the cluster:

- `terraform get`, so Terraform picks up all modules

- `terraform init`, so Terraform pulls all required plugins

- `terraform plan`, to validate that everything will run as expected

- `terraform apply -target=module.do-k8s-cluster`

This will create a Kubernetes cluster with one master and three workers, and copy a file called `admin.conf` to the current directory; this file can be used to interact with the cluster via kubectl. Run `export KUBECONFIG=${PWD}/admin.conf` to use it for the current session, or copy it to `~/.kube/config` if required. Ensure your cluster is ready by running `kubectl get nodes`. The output should show all nodes in Ready status:

NAME      STATUS    AGE       VERSION

master    Ready     4m        v1.8.2

node1     Ready     2m        v1.8.2

node2     Ready     2m        v1.8.2

node3     Ready     2m        v1.8.2
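If you want to script that readiness check, here is a minimal Python sketch. It parses the whitespace-aligned table that `kubectl get nodes` prints (the sample output shown above is used as input) and reports whether every node's STATUS is exactly `Ready`. The function names are hypothetical, and splitting on whitespace is a simplification that assumes no cell contains spaces; for real automation, `kubectl get nodes -o json` gives structured output that is more robust to parse.

```python
from typing import Dict, List


def parse_kubectl_table(output: str) -> List[Dict[str, str]]:
    """Turn whitespace-aligned `kubectl get` output into one dict per row."""
    lines = [line for line in output.strip().splitlines() if line.strip()]
    if not lines:
        return []
    headers = lines[0].split()
    return [dict(zip(headers, line.split())) for line in lines[1:]]


def all_nodes_ready(output: str) -> bool:
    """True only if at least one node is listed and every STATUS is exactly 'Ready'."""
    rows = parse_kubectl_table(output)
    return bool(rows) and all(row.get("STATUS") == "Ready" for row in rows)


SAMPLE = """\
NAME      STATUS    AGE       VERSION
master    Ready     4m        v1.8.2
node1     Ready     2m        v1.8.2
node2     Ready     2m        v1.8.2
node3     Ready     2m        v1.8.2
"""
print(all_nodes_ready(SAMPLE))  # True
```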

Terraform Kubernetes Provider

Install Guestbook

Once you run

terraform apply -target=module.gb-app

verify that all pods and services are created by running

kubectl get all

You should now be able to access Guestbook on node port 31080. You will notice that we have implemented Guestbook using replication controllers and not deployments. That is because the Kubernetes provider in Terraform does not support beta resources; more discussion on this can be found here. Under the hood, we are using simple declaration files, mainly `rc.tf` and `services.tf` in the `gb-module` directory, which should be self-explanatory.

Upgrade Guestbook

Since the application is deployed via replication controllers, changing the image is not enough: a replication controller uses its pod template only when it creates new pods, so pods that are already running keep the old image. We therefore scale the RC down to 0 pods and then scale it up again with the new image. Run:

terraform apply -var 'fe_replicas=0' && terraform apply -var 'fe_image=harshals/gb-frontend:1.0' -var 'fe_replicas=3'

Verify the updated application at node port 31080.
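Why a plain image change is not enough can be illustrated with a toy model in Python. This is not Kubernetes code: it only mimics the behavior that a replication controller reconciles the number of pods and applies its template when creating pods, leaving running pods alone. The class and method names and the old image tag are hypothetical; `fe_image` and `fe_replicas` correspond to the Terraform variables used above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ReplicationController:
    """Toy model: an RC reconciles the *number* of pods, never their contents."""

    image: str     # image in the pod template (fe_image)
    replicas: int  # desired pod count (fe_replicas)
    pods: List[str] = field(default_factory=list)  # image each running pod was created with

    def reconcile(self) -> None:
        # Remove surplus pods, then create missing ones from the *current* template.
        del self.pods[self.replicas:]
        while len(self.pods) < self.replicas:
            self.pods.append(self.image)

    def apply(self, image: str, replicas: int) -> None:
        # Stand-in for a `terraform apply` that edits the RC in place.
        self.image = image
        self.replicas = replicas
        self.reconcile()


rc = ReplicationController(image="example/gb-frontend:v1", replicas=3)  # hypothetical old image
rc.reconcile()

rc.apply("harshals/gb-frontend:1.0", 3)  # change the image only
print(rc.pods)  # still three pods running example/gb-frontend:v1

rc.apply("harshals/gb-frontend:1.0", 0)  # fe_replicas=0
rc.apply("harshals/gb-frontend:1.0", 3)  # new fe_image, fe_replicas=3
print(rc.pods)  # three pods running harshals/gb-frontend:1.0
```

The model also makes the cost of this approach visible: between the two `apply` calls there are zero frontend pods, which is the gap a Deployment's rolling update avoids.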

Roll Back the Application

Again, without deployments, rolling back an RC is a little more tedious. We scale the RC down to 0 and then bring back the old image. Run:

terraform apply -var 'fe_replicas=0' && terraform apply

This will bring the pods back to their default version and replica count.

Helm

Install Guestbook

Now we will install Guestbook on the same cluster, in a different namespace, using Helm. Ensure you are pointing to the correct cluster by running `export KUBECONFIG=${PWD}/admin.conf`.

Since we are running Kubernetes 1.8.2 with RBAC, run the following commands to give Tiller the required privileges and initialize Helm:

kubectl -n kube-system create sa tiller

kubectl create clusterrolebinding tiller --clusterrole cluster-admin --serviceaccount=kube-system:tiller

helm init --service-account tiller --upgrade

(Courtesy: https://gist.github.com/mgoodness/bd887830cd5d483446cc4cd3cb7db09d)
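If you would rather keep Tiller's privileges in version control than create them imperatively, the same two objects can be expressed as a manifest. The Python sketch below builds a `v1` `List` containing the `tiller` ServiceAccount in `kube-system` and a ClusterRoleBinding to `cluster-admin`, mirroring the two `kubectl create` commands above, and prints it as JSON, which `kubectl apply -f` accepts. It assumes the `rbac.authorization.k8s.io/v1` API, which is available as of Kubernetes 1.8; the function name is hypothetical.

```python
import json


def tiller_rbac_manifest(namespace: str = "kube-system", name: str = "tiller") -> dict:
    """Declarative equivalent of creating the tiller service account and binding."""
    service_account = {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {"name": name, "namespace": namespace},
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRoleBinding",
        "metadata": {"name": name},  # ClusterRoleBindings are not namespaced
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "ClusterRole",
            "name": "cluster-admin",
        },
        "subjects": [{"kind": "ServiceAccount", "name": name, "namespace": namespace}],
    }
    # A v1 List lets both objects live in one file for `kubectl apply -f`.
    return {"apiVersion": "v1", "kind": "List", "items": [service_account, binding]}


if __name__ == "__main__":
    print(json.dumps(tiller_rbac_manifest(), indent=2))
```

Saved as, say, `tiller_rbac.py`, it could be used as `python tiller_rbac.py > tiller-rbac.json && kubectl apply -f tiller-rbac.json`, followed by the same `helm init --service-account tiller` step.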

Run the following command to install Guestbook in the namespace “helm-gb”:

helm install --name helm-gb-chart --namespace helm-gb ./helm_chart_guestbook

Verify that all pods and services are created by running

helm status helm-gb-chart

Upgrade Guestbook

To perform a similar upgrade via Helm, run the following command:

helm upgrade helm-gb-chart ./helm_chart_guestbook --set frontend.image=harshals/gb-frontend:1.0

Since the application uses deployments, the upgrade is a lot easier. To watch the upgrade take place and the old pods being replaced with new ones, run:

helm status helm-gb-chart
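`--set` takes a dotted path into the chart's values, so `frontend.image=...` overrides the `image` key nested under `frontend` while leaving sibling keys intact. The Python sketch below shows that merge. It is not Helm's parser: Helm also handles list indices, comma-separated assignments, escaping, and type inference for numbers and booleans, none of which is modeled here. The function name and the default values are hypothetical.

```python
import copy
from typing import Any, Dict


def apply_set(values: Dict[str, Any], assignment: str) -> Dict[str, Any]:
    """Return a copy of `values` with one `key.path=value` override merged in.

    Simplified compared with Helm: dotted keys only, value kept as a string.
    """
    path, _, value = assignment.partition("=")
    result = copy.deepcopy(values)  # never mutate the chart defaults
    *parents, leaf = path.split(".")
    node = result
    for key in parents:
        child = node.get(key)
        if not isinstance(child, dict):
            # Create (or replace) intermediate maps along the path.
            child = {}
            node[key] = child
        node = child
    node[leaf] = value
    return result


# Hypothetical chart defaults; the real chart's values may be laid out differently.
defaults = {"frontend": {"image": "example/gb-frontend:v1", "replicas": 3}}
upgraded = apply_set(defaults, "frontend.image=harshals/gb-frontend:1.0")
print(upgraded)  # {'frontend': {'image': 'harshals/gb-frontend:1.0', 'replicas': 3}}
```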

rollback

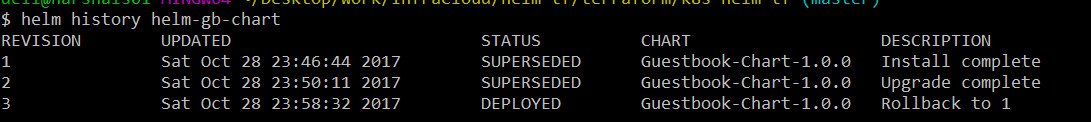

the revision history of the chart can be viewed via

helm history helm-gb-chart

run the following command to perform the rollback:

helm rollback helm-gb-chart 1

Run

helm history helm-gb-chart

again to confirm the rollback.
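One detail worth knowing when reading `helm history`: a rollback does not delete later revisions. Helm redeploys the chosen revision's configuration as a new revision, so after `helm rollback helm-gb-chart 1` the history shows a third revision. The toy Python model below illustrates that append-only bookkeeping; the class names and description strings are illustrative and are not Helm's implementation or exact output.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Revision:
    number: int
    values: Dict[str, str]
    description: str


@dataclass
class Release:
    """Toy model of a release's revision history (not Helm's implementation)."""

    name: str
    history: List[Revision] = field(default_factory=list)

    def _record(self, values: Dict[str, str], description: str) -> Revision:
        revision = Revision(len(self.history) + 1, dict(values), description)
        self.history.append(revision)
        return revision

    def install(self, values: Dict[str, str]) -> Revision:
        return self._record(values, "install")

    def upgrade(self, values: Dict[str, str]) -> Revision:
        return self._record(values, "upgrade")

    def rollback(self, target: int) -> Revision:
        if not 1 <= target <= len(self.history):
            raise ValueError(f"{self.name} has no revision {target}")
        # Redeploy the old configuration as a *new* revision; history is only appended to.
        return self._record(self.history[target - 1].values, f"rollback to {target}")


release = Release("helm-gb-chart")
release.install({"frontend.image": "example/gb-frontend:v1"})  # hypothetical original image
release.upgrade({"frontend.image": "harshals/gb-frontend:1.0"})
release.rollback(1)
for rev in release.history:
    print(rev.number, rev.description, rev.values["frontend.image"])
# 1 install example/gb-frontend:v1
# 2 upgrade harshals/gb-frontend:1.0
# 3 rollback to 1 example/gb-frontend:v1
```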

Cleanup

Be sure to clean up the cluster by running:

terraform destroy

Pros and Cons

We already saw the similarities between Helm and Terraform when it comes to managing Kubernetes resources. Now let's look at what works well, and what doesn't, with each of them.

Terraform Pros

- The same tool and code base can be used for infrastructure as well as cluster management, including Kubernetes resources. A team already comfortable with Terraform can easily extend it to Kubernetes.

- Terraform does not install any component inside the Kubernetes cluster, whereas Helm installs Tiller. This can be seen as a positive, but Tiller also does some real-time management of running pods, which we will discuss shortly.

Terraform Cons

- No support for beta resources. While many implementations already rely on beta resources such as Deployment, DaemonSet, and StatefulSet, not having these available via Terraform reduces the incentive to use it.

- When there is a dependency between two providers (module do-k8s-cluster creates admin.conf, and the Kubernetes provider of module gb-app refers to it), a single Terraform action will not work. The order of execution has to be maintained manually, in the following format: `terraform apply -target=module.do-k8s-cluster && terraform apply -target=module.gb-app`. This becomes tedious and hard to manage when there are multiple modules with provider-based dependencies. This is still an open issue within Terraform, and more details can be found here.

- Terraform's Kubernetes provider is still fairly new.
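With more than two modules, working out that order by hand is exactly the tedious part. As a sketch of the bookkeeping involved, the Python snippet below (3.9+, using the standard-library `graphlib`) topologically sorts a hypothetical map of module dependencies and emits the targeted `apply` commands in a safe order. The `monitoring` module and the function name are invented for illustration; this is a workaround pattern, not a Terraform feature.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+
from typing import Dict, List, Set


def targeted_apply_commands(depends_on: Dict[str, Set[str]]) -> List[str]:
    """Order `terraform apply -target=module.<name>` runs so dependencies go first.

    `depends_on` maps each module to the modules whose outputs its provider needs.
    Raises graphlib.CycleError if the dependencies are circular.
    """
    order = TopologicalSorter(depends_on).static_order()
    return [f"terraform apply -target=module.{name}" for name in order]


# gb-app's Kubernetes provider reads admin.conf produced by do-k8s-cluster;
# "monitoring" is a hypothetical third module with the same dependency.
commands = targeted_apply_commands({
    "gb-app": {"do-k8s-cluster"},
    "monitoring": {"do-k8s-cluster"},
})
print(" && ".join(commands))
```

Modules with no dependency between them (here `gb-app` and `monitoring`) may come out in either order; only the dependency edges are guaranteed.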

Helm Pros

- Since Helm makes API calls to Tiller, all Kubernetes resources are supported. Moreover, Helm templates have advanced constructs such as flow control and pipelines, resulting in much more flexible deployment templates.

- Upgrades and rollbacks are very well implemented and easy to handle in Helm. Also, running Tiller inside the cluster lets it manage runtime resources effectively.

- The Helm charts repository has a lot of useful charts for various applications.

Helm Cons

- Helm becomes an additional tool to maintain, apart from existing tools for infrastructure creation and configuration management.

Conclusion

In terms of sheer capabilities, Helm is far more mature as of today and makes it really simple for the end user to adopt and use. A great variety of charts also gives you a head start, so you don't have to reinvent the wheel. Helm's Tiller component provides many runtime capabilities that aren't present in Terraform, owing to the inherent nature of the way Terraform is used.

On the other hand, Terraform can provision machines and clusters and seamlessly manage resources, making it a single tool to learn and manage all of your infrastructure. That being said, managing the applications and resources inside a Kubernetes cluster with Terraform takes a lot of work, and the lack of support for beta objects makes it harder still.

Personally, I would use Terraform for provisioning cloud resources and Kubernetes, and Helm for deploying applications. Time will tell whether Terraform gets better at provisioning applications and resources in Kubernetes clusters.

Published at DZone with permission of Harshal Shah, DZone MVB. See the original article here.

