Troubleshooting AWS CodePipeline Artifacts

Learn how to use and troubleshoot Input and Output Artifacts in AWS CodePipeline for continuous integration, delivery, and deployment.

AWS CodePipeline is a managed service that orchestrates workflow for continuous integration, continuous delivery, and continuous deployment. With CodePipeline, you define a series of stages composed of actions that perform tasks in a release process from a code commit all the way to production. It helps teams deliver changes to users whenever there's a business need to do so.

One of the key benefits of CodePipeline is that you don't need to install, configure, or manage compute instances for your release workflow. It also integrates with other AWS and non-AWS services and tools such as version control, build, test, and deployment.

In this post, I describe the details of how to use and troubleshoot what's often a confusing concept in CodePipeline: Input and Output Artifacts. There are so many examples using these artifacts online that it can be easy to copy and paste them without understanding the underlying concepts, which makes it difficult to diagnose problems when they occur. My hope is that by going into the details of these artifact types, I'll save you some time the next time you experience an error in CodePipeline.

Artifact Store

The Artifact Store is an Amazon S3 bucket that CodePipeline uses to store artifacts used by pipelines. When you first use the CodePipeline console in a region to create a pipeline, CodePipeline automatically generates this S3 bucket in the AWS region. It stores artifacts for all pipelines in that region in this bucket. When you use the CLI, SDK, or CloudFormation to create a pipeline in CodePipeline, you must specify an S3 bucket to store the pipeline artifacts. If the CodePipeline bucket has already been created in S3, you can refer to this bucket when creating pipelines outside the console or you can create or reference another S3 bucket. All artifacts are securely stored in S3 using the default KMS key (aws/s3). Figure 1 shows an encrypted CodePipeline Artifact zip file in S3.

Figure 1: Encrypted CodePipeline Source Artifact in S3.

CodePipeline Artifacts

At the first stage in its workflow, CodePipeline obtains the source code, configuration, data, and other resources from a source provider. This source provider might include a Git repository (namely, GitHub and AWS CodeCommit) or S3. It stores a zipped version of the artifacts in the Artifact Store. In the example in this post, these artifacts are defined as Output Artifacts for the Source stage in CodePipeline. The next stage consumes these artifacts as Input Artifacts. This relationship is illustrated in Figure 2.

Figure 2: CodePipeline Artifacts and S3.

Launch an Example Solution

In order to learn about how CodePipeline artifacts are used, you'll walk through a simple solution by launching a CloudFormation stack. There are 4 steps to deploying the solution: preparing an AWS account, launching the stack, testing the deployment, and walking through CodePipeline and related resources in the solution. Each is described below.

Prepare an AWS Account

Create an AWS account, or log in to an existing one, at https://aws.amazon.com by following the instructions on the site.

Launch the Stack

"AWS CloudFormation provides a common language for you to describe and provision all the infrastructure resources in your cloud environment. CloudFormation allows you to use a simple text file to model and provision, in an automated and secure manner, all the resources needed for your applications across all regions and accounts. This file serves as the single source of truth for your cloud environment. AWS CloudFormation is available at no additional charge, and you pay only for the AWS resources needed to run your applications." [ source]

Click on the Launch Stack button below to launch the CloudFormation Stack that configures a simple deployment pipeline in CodePipeline.

Stack Assumptions: The pipeline stack assumes the stack is launched in the US East (N. Virginia) Region (us-east-1) and may not function properly if you do not use this region.

You can launch the same stack using the AWS CLI. Here's an example (you will need to modify the YOURGITHUBTOKEN and YOURGLOBALLYUNIQUES3BUCKET placeholder values):

aws cloudformation create-stack --stack-name YOURSTACKNAME \
  --template-body file:///home/ec2-user/environment/devops-essentials/samples/static/pipeline.yml \
  --parameters ParameterKey=GitHubToken,ParameterValue=YOURGITHUBTOKEN ParameterKey=SiteBucketName,ParameterValue=YOURGLOBALLYUNIQUES3BUCKET \
  --capabilities CAPABILITY_NAMED_IAM --no-disable-rollback

Parameters

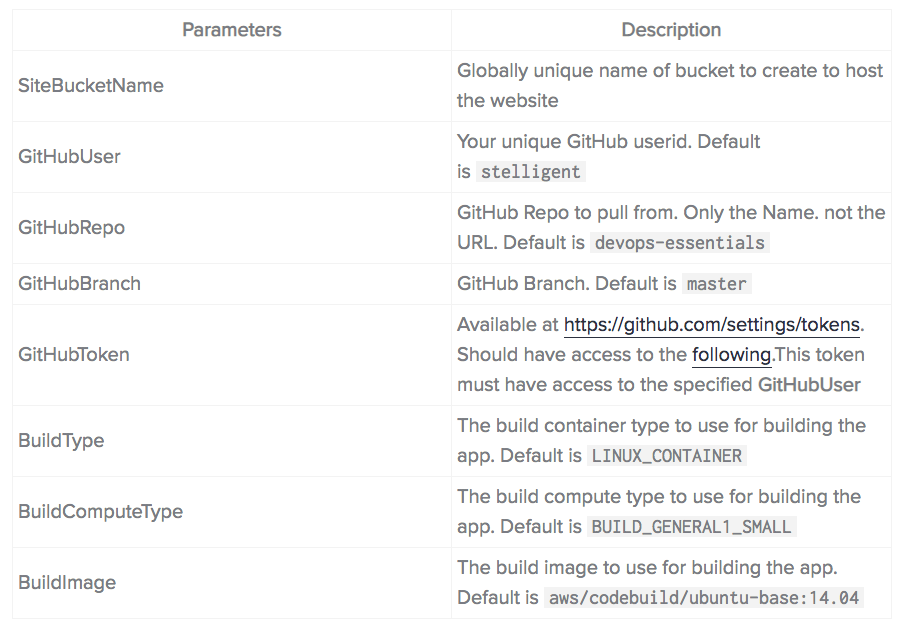

*The following, as mentioned above.

Test the Deployment

- Once the CloudFormation stack is successful, select the checkbox next to the stack and click the Outputs tab.

- From Outputs, click on the PipelineUrl output.

- Once the pipeline is complete, go to your CloudFormation Outputs and click on the SiteUrl Output. It should open a working website.

Once you've confirmed the deployment was successful, you'll walk through the solution below.

Solution Walkthrough

Click the URL from the step you ran before (from Outputs, click on the PipelineUrl output) or go to the AWS CodePipeline Console and find the pipeline and select it. After doing so, you'll see the two-stage pipeline that was generated by the CloudFormation stack.

Click the Edit button, then select the Edit pencil in the Source action of the Source stage as shown in Figure 3.

Figure 3: AWS CodePipeline Source Action with Output Artifact.

As shown in Figure 3, you see the name of Output artifact #1 is SourceArtifacts. This name is used by CodePipeline to store the Source artifacts in S3. The Output artifact (SourceArtifacts) is used as an Input artifact in the Deploy stage (in this example) as shown in Figure 4 (see Input artifacts #1).

Figure 4: Input and Output Artifact Names for Deploy Stage.

In Figure 4, you see there's an Output artifact called DeploymentArtifacts that's generated from the CodeBuild action that runs in this stage.

You can see examples of the S3 folders/keys that are generated in S3 by CodePipeline in Figure 5. CodePipeline automatically creates these keys/folders in S3 based on the name of the artifact as defined by CodePipeline users.

Figure 5: S3 Folders/Keys for CodePipeline Input and Output Artifacts.

Figure 6 shows the ZIP files (one for each CodePipeline revision) that contain all the source files downloaded from GitHub.

Figure 6: Compressed ZIP files of CodePipeline Source Artifacts in S3.

Figure 7 shows the ZIP files (one for each CodePipeline revision) that contain the deployment artifacts generated by CodePipeline via CodeBuild.

Figure 7: Compressed files of CodePipeline Deployment Artifacts in S3.

Unzip Files to Inspect Contents

The next set of commands provides access to the artifacts that CodePipeline stores in Amazon S3.

The example commands below were run from the AWS Cloud9 IDE.

The command below displays all of the S3 buckets in your AWS account.

aws s3api list-buckets --query "Buckets[].Name"

After running this command, you'll be looking for a bucket name that begins with the stack name you chose when launching the CloudFormation stack. Copy this bucket name and replace YOURBUCKETNAME with it in the command below.

aws s3api list-objects --bucket YOURBUCKETNAME --query 'Contents[].{Key: Key, Size: Size}'

This displays all the objects from this S3 bucket - namely, the CodePipeline Artifact folders and files.

Next, create a new directory. You'll use this to explode the ZIP file that you'll copy from S3 later. Here's an example:

mkdir /home/ec2-user/environment/cpl

Next, you'll copy the ZIP file from S3 for the Source Artifacts obtained from the Source action in CodePipeline. You'll use the S3 copy command to copy the zip to a local directory in Cloud9. If you're using something other than Cloud9, make the appropriate accommodations. Your S3 URL will be completely different than the location below.

aws s3 cp s3://YOURBUCKETNAME/FOLDER/SourceArti/m2UfrVo.zip /home/ec2-user/environment/cpl/codepipeline-source.zip

Then, unzip the file you copied from S3.

unzip /home/ec2-user/environment/cpl/codepipeline-source.zip -d /home/ec2-user/environment/cpl

The contents will look similar to Figure 8.

Figure 8: Exploded ZIP file locally from CodePipeline Source Input Artifact in S3.
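
If you would rather peek at an artifact without exploding it to disk, a short Python sketch using only the standard library can summarize what the ZIP holds. The function name and the sample file names are illustrative assumptions, not part of the original solution, and the demo builds a small ZIP in memory so it runs without AWS access. In practice you would pass the path of the file copied from S3.

```python
import io
import zipfile


def top_level_entries(zip_source):
    """Return the sorted top-level files and folders inside an artifact ZIP.

    zip_source may be a file path or a binary file-like object.
    """
    with zipfile.ZipFile(zip_source) as archive:
        return sorted({name.split("/", 1)[0] for name in archive.namelist()})


if __name__ == "__main__":
    # Stand-in for the downloaded codepipeline-source.zip (hypothetical contents).
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("html/index.html", "<h1>hello</h1>")
        archive.writestr("samples/static/pipeline.yml", "Resources: {}")
    buffer.seek(0)
    for entry in top_level_entries(buffer):
        print(entry)
```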

JSON

You can also inspect all the resources of a particular pipeline using the AWS CLI. This includes the Input and Output Artifacts. For example, if you run the command below (modify the YOURPIPELINENAME placeholder value):

aws codepipeline get-pipeline --name YOURPIPELINENAME

it will generate a JSON object that looks similar to the snippet below:

{
  "pipeline": {
    "roleArn": "arn:aws:iam::123456789012:role/pmd-static-0919-CodePipelineRole-7E9WOAAGV4CL",
    "stages": [
      {
        "name": "Source",
        "actions": [
          {
            "inputArtifacts": [],
            "name": "Source",
            "actionTypeId": {
              ...
            },
            "outputArtifacts": [
              {
                "name": "SourceArtifacts"
              }
            ],
            "configuration": {
              ...
            },
            "runOrder": 1
          }
        ]
      },
      {
        "name": "Deploy",
        "actions": [
          {
            "inputArtifacts": [
              {
                "name": "SourceArtifacts"
              }
            ],
            "name": "Artifact",
            "actionTypeId": {
              ...
              "provider": "CodeBuild"
            },
            "outputArtifacts": [
              {
                "name": "DeploymentArtifacts"
              }
            ],
            "configuration": {
              "ProjectName": "pmd-static-0919-DeploySite"
            },
            "runOrder": 1
          }
        ]
      }
    ],
    "artifactStore": {
      "type": "S3",
      "location": "pmd-static-0919-pipelinebucket-xpraag4sxtku"
    },
    "name": "pmd-static-0919-Pipeline-Z7X1AALTTHH3",
    "version": 2
  },
  "metadata": {
    "pipelineArn": "arn:aws:codepipeline:us-east-1:123456789012:pmd-static-0919-Pipeline-Z7X1AALTTHH3",
    "updated": 1536067775.356,
    "created": 1536067724.213
  }
}

You can use the information from this JSON object to learn and modify the configuration of the pipeline using the AWS Console, CLI, SDK, or CloudFormation.

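To see the artifact wiring at a glance, you could feed the get-pipeline output to a few lines of Python. The sketch below is only an illustration under stated assumptions: the function names are hypothetical, the embedded JSON is a trimmed copy of the output in this section, and the "declared earlier" check is a simplification that visits actions in stage order and then by runOrder, without distinguishing actions that share a runOrder.

```python
import json


def artifact_wiring(pipeline):
    """Return (stage, action, inputs, outputs) tuples in pipeline order."""
    rows = []
    for stage in pipeline["stages"]:
        actions = sorted(stage["actions"], key=lambda a: a.get("runOrder", 1))
        for action in actions:
            inputs = [a["name"] for a in action.get("inputArtifacts", [])]
            outputs = [a["name"] for a in action.get("outputArtifacts", [])]
            rows.append((stage["name"], action["name"], inputs, outputs))
    return rows


def undeclared_inputs(pipeline):
    """Return (stage, action, artifact) for inputs no earlier action outputs."""
    declared = set()
    problems = []
    for stage, action, inputs, outputs in artifact_wiring(pipeline):
        for name in inputs:
            if name not in declared:
                problems.append((stage, action, name))
        declared.update(outputs)
    return problems


if __name__ == "__main__":
    # Trimmed copy of the get-pipeline output; only the artifact fields are kept.
    document = json.loads("""
    {"pipeline": {"stages": [
      {"name": "Source", "actions": [
        {"name": "Source", "inputArtifacts": [],
         "outputArtifacts": [{"name": "SourceArtifacts"}], "runOrder": 1}]},
      {"name": "Deploy", "actions": [
        {"name": "Artifact", "inputArtifacts": [{"name": "SourceArtifacts"}],
         "outputArtifacts": [{"name": "DeploymentArtifacts"}], "runOrder": 1}]}
    ]}}
    """)
    for stage, action, inputs, outputs in artifact_wiring(document["pipeline"]):
        print(f"{stage}/{action}: in={inputs} out={outputs}")
    print("undeclared inputs:", undeclared_inputs(document["pipeline"]))
```

Running the same check against a definition you are about to submit can surface a mistyped artifact name before CodePipeline rejects it.
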
AWS CloudFormation

In this section, you will walk through the essential code snippets from a CloudFormation template that generates a pipeline in CodePipeline. These resources include S3, CodePipeline, and CodeBuild.

S3

In the snippet below, you see how a new S3 bucket is provisioned for this pipeline using the AWS::S3::Bucket resource.

PipelineBucket:
  Type: AWS::S3::Bucket
  DeletionPolicy: Delete

In the snippet below, you see how the ArtifactStore is referenced as part of the AWS::CodePipeline::Pipeline resource.

ArtifactStore:
  Type: S3
  EncryptionKey:
    Id: !Ref CodePipelineKeyArn
    Type: KMS
  Location: !Ref PipelineBucket

CodePipeline

The snippet below is part of the AWS::CodePipeline::Pipeline CloudFormation definition. It shows where to define the InputArtifacts and OutputArtifacts within a CodePipeline action which is part of a CodePipeline stage.

- Name: Deploy
  Actions:
  - Name: Artifact
    ActionTypeId:
      Category: Build
      Owner: AWS
      Version: '1'
      Provider: CodeBuild
    InputArtifacts:
    - Name: SourceArtifacts
    OutputArtifacts:
    - Name: DeploymentArtifacts
    Configuration:
      ProjectName: !Ref CodeBuildDeploySite
    RunOrder: 1

CodeBuild

The ./samples and ./html folders in the CloudFormation AWS::CodeBuild::Project resource code snippet below implicitly refer to folders in the CodePipeline Input Artifacts (i.e., SourceArtifacts as previously defined).

BuildSpec: !Sub |
  version: 0.2
  phases:
    post_build:
      commands:
        - aws s3 cp --recursive --acl public-read ./samples s3://${SiteBucketName}/samples
        - aws s3 cp --recursive --acl public-read ./html s3://${SiteBucketName}/
  artifacts:
    type: zip
    files:
      - ./html/index.html

Other CodePipeline Providers

Artifacts work similarly for other CodePipeline providers including AWS OpsWorks, AWS Elastic Beanstalk, AWS CloudFormation, and Amazon ECS. All of these services can consume zip files.

For example, when using CloudFormation as a CodePipeline Deploy provider for a Lambda function, your CodePipeline action configuration might look something like this:

ActionTypeId:
  Category: Deploy
  Owner: AWS
  Version: '1'
  Provider: CloudFormation
OutputArtifacts: []
Configuration:
  ActionMode: CHANGE_SET_REPLACE
  ChangeSetName: pipeline-changeset
  RoleArn:
    Fn::GetAtt:
    - CloudFormationTrustRole
    - Arn
  Capabilities: CAPABILITY_IAM
  StackName:
    Fn::Join:
    - ''
    - - ""
      - Ref: AWS::StackName
      - "-"
      - Ref: AWS::Region
      - ""
  TemplatePath: lambdatrigger-BuildArtifact::template-export.json
RunOrder: 1

In the case of the TemplatePath property above, it's referring to the lambdatrigger-BuildArtifact InputArtifact, which is an OutputArtifact from the previous stage in which an AWS Lambda function was built using CodeBuild. A product of being built in CodePipeline is that the built function is stored in S3 as a zip file. This enables the next step to consume this zip file and act on it. In this case, there's a single file in the zip file called template-export.json, which is a SAM template that deploys the Lambda function on AWS.
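
The TemplatePath value packs two things into one string: an artifact name and a file inside that artifact, joined by a double colon. A minimal Python sketch of that split follows. The helper names are hypothetical, and the membership check assumes the action lists lambdatrigger-BuildArtifact under InputArtifacts, which the snippet above does not show.

```python
def split_template_path(template_path):
    """Split 'ArtifactName::FileName' into (artifact_name, file_name)."""
    artifact_name, separator, file_name = template_path.partition("::")
    if not separator or not artifact_name or not file_name:
        raise ValueError(
            f"expected 'ArtifactName::FileName', got {template_path!r}"
        )
    return artifact_name, file_name


def template_artifact_is_input(template_path, input_artifact_names):
    """Check that the artifact named in TemplatePath is among the action's inputs."""
    artifact_name, _ = split_template_path(template_path)
    return artifact_name in input_artifact_names


if __name__ == "__main__":
    path = "lambdatrigger-BuildArtifact::template-export.json"
    print(split_template_path(path))
    # Assumed InputArtifacts list for the Deploy action (not shown in the snippet).
    print(template_artifact_is_input(path, ["lambdatrigger-BuildArtifact"]))
```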

Troubleshooting

In this section, you'll learn of some of the common CodePipeline errors along with how to diagnose and resolve them.

Referring to an Artifact that Does Not Exist in S3

Below, the command run from the buildspec for the CodeBuild resource refers to a folder that does not exist in S3: samples-wrong.

version: 0.2
phases:
  post_build:
    commands:
      - aws s3 cp --recursive --acl public-read ./samples-wrong s3://${SiteBucketName}/samples
      - aws s3 cp --recursive --acl public-read ./html s3://${SiteBucketName}/
artifacts:
  type: zip
  files:
    - ./html/index.html

When provisioning this CloudFormation stack, you will not see the error. You only see it when CodePipeline runs the Deploy action that uses CodeBuild. The error you receive when accessing the CodeBuild logs will look similar to the snippet below:

[Container] 2018/09/05 17:57:56 Running command aws s3 cp --recursive --acl public-read ./samples-wrong s3://pmd-static-0919/samples
The user-provided path ./samples-wrong does not exist.

This is why it's important to understand which artifacts are being referenced from your code. In this case, it's referring to the SourceArtifacts as defined as OutputArtifacts of the Source action. To troubleshoot, you might go into S3, download and inspect the contents of the exploded zip file managed by CodePipeline. You'd see a similar error when referring to an individual file.
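
That inspection can be partly automated. The sketch below, which uses only the Python standard library, reports which paths referenced by a buildspec are absent from a downloaded artifact ZIP. The function name, the path list, and the in-memory sample ZIP are assumptions made for illustration; you would pass the real ZIP file copied from S3 instead.

```python
import io
import posixpath
import zipfile


def missing_paths(zip_source, referenced_paths):
    """Return the referenced paths that match no file or folder in the ZIP."""
    with zipfile.ZipFile(zip_source) as archive:
        entries = [name.rstrip("/") for name in archive.namelist()]
    missing = []
    for path in referenced_paths:
        wanted = posixpath.normpath(path)  # "./samples" becomes "samples"
        found = any(
            entry == wanted or entry.startswith(wanted + "/") for entry in entries
        )
        if not found:
            missing.append(path)
    return missing


if __name__ == "__main__":
    # Stand-in for the source artifact (hypothetical contents).
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("html/index.html", "<h1>hello</h1>")
        archive.writestr("samples/static/pipeline.yml", "Resources: {}")
    buffer.seek(0)
    print(missing_paths(buffer, ["./samples-wrong", "./html"]))
```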

Referring to an Artifact Not Defined in CodePipeline

Below, you see a code snippet from a CloudFormation template that defines an AWS::CodePipeline::Pipeline resource in which the value of the InputArtifacts property does not match the OutputArtifacts from the previous stage. Each name in InputArtifacts must match the name of an OutputArtifacts entry declared by one of the preceding actions.

        ActionTypeId:
          Category: Source
          Owner: ThirdParty
          Version: '1'
          Provider: GitHub
        OutputArtifacts:
        - Name: SourceArtifacts
        Configuration:
          Owner: !Ref GitHubUser
          Repo: !Ref GitHubRepo
          Branch: !Ref GitHubBranch
          OAuthToken: !Ref GitHubToken
        RunOrder: 1
    - Name: Deploy
      Actions:
      - Name: Artifact
        ActionTypeId:
          Category: Build
          Owner: AWS
          Version: '1'
          Provider: CodeBuild
        InputArtifacts:
        - Name: SourceWrong

When provisioning this CloudFormation stack, you will see an error that looks similar to the snippet below for the AWS::CodePipeline::Pipeline resource:

Input Artifact Bundle SourceWrong in action Artifact is not declared as Output Artifact Bundle in any of the preceding actions (Service: AWSCodePipeline; Status Code: 400; Error Code: InvalidActionDeclarationException; Request ID: ca5g909f-b137-11e8-ab83-61aafa2fb099)

Artifact Name Validation Errors

It's not obviously documented anywhere I could find, but CodePipeline Artifacts only allow certain characters and have a maximum length. Names must be 100 characters or fewer and may contain only the following characters: a-zA-Z0-9_\-

This also means no spaces. You can get a general idea of the naming requirements at Limits in AWS CodePipeline, although it doesn't specifically mention Artifacts.
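
You can check names against these two rules before touching CloudFormation. The Python sketch below encodes the 100-character limit and the character class stated above; the function and constant names are hypothetical, and the rules are taken from this post rather than from an official specification.

```python
import re

# Limits as described in this post (assumed, not from official documentation).
ARTIFACT_NAME_PATTERN = re.compile(r"[a-zA-Z0-9_\-]+")
MAX_ARTIFACT_NAME_LENGTH = 100


def artifact_name_problems(name):
    """Return a list of reasons the name would be rejected (empty if none)."""
    problems = []
    if len(name) > MAX_ARTIFACT_NAME_LENGTH:
        problems.append(f"longer than {MAX_ARTIFACT_NAME_LENGTH} characters")
    if not ARTIFACT_NAME_PATTERN.fullmatch(name):
        problems.append(f"does not match {ARTIFACT_NAME_PATTERN.pattern}")
    return problems


if __name__ == "__main__":
    for candidate in ["SourceArtifacts", "Source Artifacts", "x" * 101]:
        print(repr(candidate[:20]), artifact_name_problems(candidate))
```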

If you violate the naming requirements, you'll get errors similar to what's shown below when provisioning the CodePipeline resource:

at 'pipeline.stages.1.member.actions.1.member.outputArtifacts.1.member.name' failed to satisfy constraint: Member must have length less than or equal to 100;

2 validation errors detected: Value '2G&&IkRLpUfg#q$J!Axhdatoa^Mo' at 'pipeline.stages.1.member.actions.1.member.outputArtifacts.1.member.name' failed to satisfy constraint: Member must satisfy regular expression pattern: [a-zA-Z0-9_\-]+; Value '2G&&IkRLpUfg#q$J!Axhdatoa^Mo' at 'pipeline.stages.2.member.actions.1.member.inputArtifacts.1.member.name' failed to satisfy constraint: Member must satisfy regular expression pattern: [a-zA-Z0-9_\-]+ (Service: AWSCodePipeline; Status Code: 400; Error Code: ValidationException; Request ID: 09b55c3a-b13a-11e8-9035-4b50aabcf590)

Summary

In this post, you learned how to manage artifacts throughout an AWS CodePipeline workflow. Moreover, you learned how to troubleshoot common errors that can occur when working with these artifacts.

Published at DZone with permission of Paul Duvall, DZone MVB. See the original article here.
