Troubleshooting Problems With Native (Off-Heap) Memory in Java Applications

Are you having trouble with native (off-heap) memory in your Java applications? Check out this post on how to troubleshoot those problems.


Almost every Java application uses some native (off-heap) memory. For most apps, this amount is relatively modest. However, in some situations, you may discover that your app's RSS (resident set size, i.e., the physical memory used by the process) is much bigger than its heap size. If this is not something that you anticipated, and you want to understand what's going on, do you know where to start? If not, read on.

In this article, we will not cover "mixed" applications that contain both Java and native code. We assume that, if you write native code, you already know how to debug it. However, it turns out that even pure Java apps can sometimes use a significant amount of off-heap memory, and when it happens, it's not easy to understand what's going on.

In pure Java applications, the most common native memory consumers are instances of java.nio.DirectByteBuffer. When such an object is created, it makes an internal call that allocates an amount of native memory equal to the buffer capacity. This memory is released when the buffer's cleanup routine (this class's equivalent of a Java finalize() method) is called, either automatically, after the DirectByteBuffer instance is GCed, or manually, which is rare.
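To see this accounting from inside a running JVM, one option is the standard java.lang.management.BufferPoolMXBean. Its "direct" pool reports the count and total capacity of live direct buffers. The sketch below allocates a heap buffer and a direct buffer of the same size and reports how much the "direct" pool grew in each case. The class and method names are our own, for illustration only. We'd expect zero growth for the heap buffer and growth equal to the capacity for the direct one, assuming no other thread allocates or frees direct buffers at the same moment.

```java
import java.lang.management.BufferPoolMXBean;
import java.lang.management.ManagementFactory;
import java.nio.ByteBuffer;

public class DirectVsHeapDemo {

    // Total capacity (bytes) of all live direct buffers, as reported by the "direct" buffer pool.
    static long directPoolCapacity() {
        for (BufferPoolMXBean pool : ManagementFactory.getPlatformMXBeans(BufferPoolMXBean.class)) {
            if ("direct".equals(pool.getName())) {
                return pool.getTotalCapacity();
            }
        }
        throw new IllegalStateException("no 'direct' buffer pool found");
    }

    // Allocates one buffer of the requested kind and returns how much the direct pool grew.
    static long growthAfterAllocating(int capacity, boolean direct) {
        long before = directPoolCapacity();
        ByteBuffer buf = direct ? ByteBuffer.allocateDirect(capacity) : ByteBuffer.allocate(capacity);
        long growth = directPoolCapacity() - before;
        // Use the buffer after measuring so it stays reachable while we measure.
        buf.put(0, (byte) 1);
        return growth;
    }

    public static void main(String[] args) {
        int capacity = 16 * 1024 * 1024; // 16 MB
        System.out.println("heap buffer:   direct pool grew by " + growthAfterAllocating(capacity, false));
        System.out.println("direct buffer: direct pool grew by " + growthAfterAllocating(capacity, true));
    }
}
```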

Other than that, in rare situations, native memory might be over-consumed by JVM internals, such as class metadata, or the OS may essentially give an app more memory than it needs.

Below, we will discuss all these situations in greater detail. If you have little time, you can go straight to the checklist in section 5, and then read only the most relevant part(s) of this article.

1. I/O Threads That Use java.nio.HeapByteBuffers

The Java NIO APIs use ByteBuffers to read and write data. java.nio.ByteBuffer is an abstract class. Its concrete subclasses are HeapByteBuffer, which simply wraps a byte[] array, and DirectByteBuffer, which allocates off-heap memory. They are created by ByteBuffer.allocate() and ByteBuffer.allocateDirect() calls, respectively. Each buffer kind has its pros and cons, but what's important is that the OS can read and write bytes only from or to native memory. Thus, if your code (or some I/O library, such as Netty) uses a HeapByteBuffer for I/O, its contents are always copied to or from a temporary DirectByteBuffer that's created by the JDK under the hood.

Furthermore, the JDK caches one or more DirectByteBuffers per thread, and by default, there is no limit on the number or size of these buffers. As a result, the JVM process may end up using a lot of additional native memory that looks like a leak. This happens if a Java app creates many threads that perform I/O using HeapByteBuffers, and/or if these buffers are big. Native memory regions used by a given thread are released only after the thread terminates and the GC subsequently collects that thread's DirectByteBuffer instance(s). This blog post explains the problem in more detail and provides an example that reproduces it. It also links to the relevant (old) JDK source code for those who are really interested.
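Here is a minimal sketch of the effect. It assumes a JDK whose NIO implementation copies heap buffers through per-thread temporary direct buffers, as described above. The class name, thread count, and sizes are hypothetical. Several threads each write a HeapByteBuffer to a temporary file through a FileChannel and then stay alive. While they are still running, the main thread samples the "direct" BufferPoolMXBean. The code never calls allocateDirect(). Even so, on the JDK versions we're aware of, the direct pool should grow by roughly threads × size.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.lang.management.BufferPoolMXBean;
import java.lang.management.ManagementFactory;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.concurrent.CountDownLatch;

public class TempBufferCacheDemo {

    static final class Result {
        final long bytesWritten;  // total bytes written by all threads
        final long directGrowth;  // growth of the "direct" pool while the threads were alive
        Result(long bytesWritten, long directGrowth) {
            this.bytesWritten = bytesWritten;
            this.directGrowth = directGrowth;
        }
    }

    static long directPoolCapacity() {
        for (BufferPoolMXBean pool : ManagementFactory.getPlatformMXBeans(BufferPoolMXBean.class)) {
            if ("direct".equals(pool.getName())) {
                return pool.getTotalCapacity();
            }
        }
        return 0;
    }

    // Writes a HeapByteBuffer of 'size' bytes to a temp file. We never call allocateDirect(),
    // but the JDK copies the data through a temporary DirectByteBuffer.
    static long writeHeapBuffer(int size) throws IOException {
        Path file = Files.createTempFile("heapbuf", ".bin");
        try {
            try (FileChannel ch = FileChannel.open(file, StandardOpenOption.WRITE)) {
                ByteBuffer heap = ByteBuffer.allocate(size);
                long total = 0;
                while (heap.hasRemaining()) {
                    total += ch.write(heap);
                }
                return total;
            }
        } finally {
            Files.deleteIfExists(file);
        }
    }

    static Result run(int threads, int size) throws InterruptedException {
        long before = directPoolCapacity();
        long[] written = new long[threads];
        CountDownLatch done = new CountDownLatch(threads);
        CountDownLatch release = new CountDownLatch(1);
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            final int idx = i;
            workers[i] = new Thread(() -> {
                try {
                    written[idx] = writeHeapBuffer(size);
                } catch (IOException e) {
                    throw new UncheckedIOException(e);
                } finally {
                    done.countDown();
                }
                try {
                    release.await(); // keep the thread, and its cached buffer, alive
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            workers[i].start();
        }
        done.await();
        long growth = directPoolCapacity() - before; // sampled while all threads are still alive
        release.countDown();
        long total = 0;
        for (int i = 0; i < threads; i++) {
            workers[i].join();
            total += written[i];
        }
        return new Result(total, growth);
    }

    public static void main(String[] args) throws InterruptedException {
        Result r = run(8, 8 * 1024 * 1024);
        System.out.println("bytes written: " + r.bytesWritten);
        System.out.println("direct pool growth with threads alive: " + r.directGrowth);
    }
}
```

The threads are kept alive on purpose because the cached buffers belong to them. Sampling after they exit could show a different picture, depending on the JDK version and on when the GC runs.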

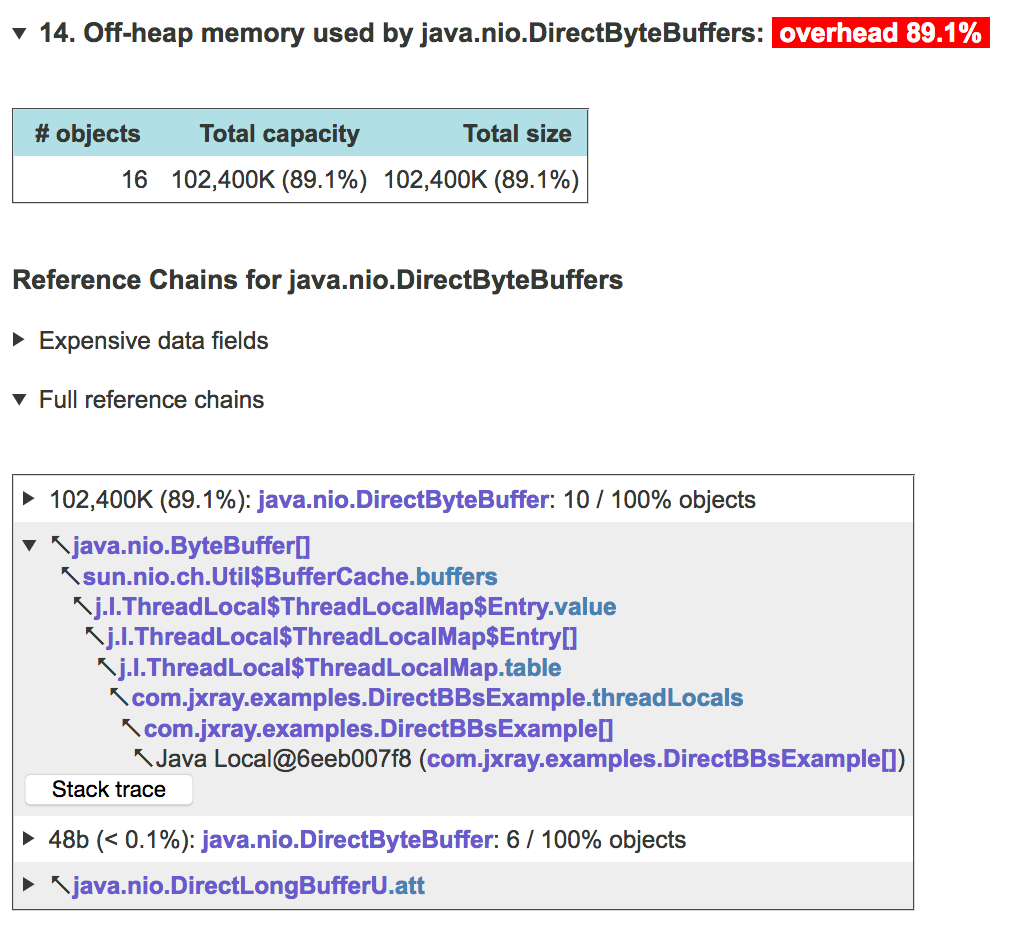

To diagnose this problem, you can take a JVM heap dump and analyze it using the JXRay memory analysis tool (www.jxray.com). The memory in question is allocated outside the heap, but the heap dump still has enough information. Each DirectByteBuffer has a capacity data field that specifies the amount of native memory reserved by this buffer. The figure above shows how the JXRay report presents the DirectByteBuffer cache maintained by a group of threads running identical I/O code.
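Attaching a tool such as jmap (jmap -dump:live,format=b,file=heap.hprof <pid>) is not always convenient. In that case, a HotSpot JVM can also dump its own heap through com.sun.management.HotSpotDiagnosticMXBean. A sketch follows; the class name and file location are illustrative. Note the live flag. With true, only reachable objects are written. With false, unreachable DirectByteBuffers that are still waiting to be collected are kept as well, which can matter for the situation described in section 2.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.io.IOException;
import java.lang.management.ManagementFactory;
import java.nio.file.Files;
import java.nio.file.Path;

public class HeapDumper {

    // Writes an .hprof heap dump of the current JVM to 'file' (the file must not exist yet).
    // live = true dumps only reachable objects; live = false dumps everything still on the heap.
    static void dumpHeap(Path file, boolean live) throws IOException {
        HotSpotDiagnosticMXBean diag = ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        diag.dumpHeap(file.toString(), live);
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("dumps");
        Path file = dir.resolve("app-heap.hprof");
        dumpHeap(file, true);
        System.out.println("Heap dump written to " + file + " (" + Files.size(file) + " bytes)");
    }
}
```

The resulting .hprof file can then be opened in JXRay or another heap analyzer.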

When this issue occurs in code that you cannot change, such as a third-party library, the only ways to address this problem are:

Reduce the number of I/O threads, if possible

In JDK version 1.8u102 or newer, use the -Djdk.nio.maxCachedBufferSize JVM property to limit the size of the per-thread cached DirectByteBuffers.

The Oracle documentation explains the above property as follows:

Ability to limit the capacity of buffers that can be held in the temporary buffer cache:

"The system property jdk.nio.maxCachedBufferSize has been introduced in 8u102 to limit the memory used by the "temporary buffer cache." The temporary buffer cache is a per-thread cache of direct memory used by the NIO implementation to support applications that do I/O with buffers backed by arrays in the Java heap. The value of the property is the maximum capacity of a direct buffer that can be cached. If the property is not set, then no limit is put on the size of buffers that are cached. Applications with certain patterns of I/O usage may benefit from using this property. In particular, an application that does I/O with large multi-megabyte buffers at startup but does I/O with small buffers may see a benefit to using this property. Applications that perform I/O using direct buffers will not see any benefit to using this system property."

Here are several important observations from experimenting with this property and reading the JDK source code:

You cannot use “M,” “GB,” and so on when specifying the value. Only a plain number of bytes works, e.g. -Djdk.nio.maxCachedBufferSize=1000000

The value is indeed “per thread.” So, if you want to limit the total off-heap part of the RSS to R, you should estimate the number of I/O threads T and use R/T as the value of this property.

Setting -Djdk.nio.maxCachedBufferSize doesn't prevent allocation of a big DirectByteBuffer; it only prevents this buffer from being cached and reused. Suppose each of 10 threads allocates a 1GB HeapByteBuffer and then invokes some I/O operation simultaneously. There will be a temporary RSS spike of up to 10GB, due to 10 temporary direct buffers. However, each of these DirectByteBuffers will be deallocated immediately after the I/O operation. In contrast, any direct buffers no larger than the threshold will stay in memory until their owner thread terminates.
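The two rules above can be sketched in Java. The effectiveLimit method is our own re-implementation of how we understand the JDK 8u102+ code in sun.nio.ch.Util to read the property. As we read it, a non-negative plain number is used as-is. Anything else, including "1M", appears to be silently ignored, which leaves the cache unlimited. This is not a JDK API. perThreadLimit is just the R/T estimate, here with a hypothetical budget of 512 MB spread over 64 I/O threads.

```java
public class CachedBufferSizeCheck {

    // Mirrors, as we understand it, how the JDK interprets -Djdk.nio.maxCachedBufferSize:
    // a plain non-negative long is used; anything else is ignored (no limit).
    static long effectiveLimit(String propertyValue) {
        if (propertyValue != null) {
            try {
                long m = Long.parseLong(propertyValue);
                if (m >= 0) {
                    return m;
                }
            } catch (NumberFormatException e) {
                // values like "1M" or "1g" end up here and are ignored
            }
        }
        return Long.MAX_VALUE; // no limit: temporary buffers of any size may be cached
    }

    // Rough per-thread value for a total off-heap budget R spread over T I/O threads.
    static long perThreadLimit(long totalBudgetBytes, int ioThreads) {
        if (ioThreads <= 0) {
            throw new IllegalArgumentException("ioThreads must be positive");
        }
        return totalBudgetBytes / ioThreads;
    }

    public static void main(String[] args) {
        System.out.println(effectiveLimit("1000000")); // 1000000
        System.out.println(effectiveLimit("1M"));      // 9223372036854775807 (unlimited)
        long r = 512L * 1024 * 1024;                   // 512 MB budget
        int t = 64;                                    // 64 I/O threads
        System.out.println("-Djdk.nio.maxCachedBufferSize=" + perThreadLimit(r, t)); // 8388608
        System.out.println("current setting: "
                + effectiveLimit(System.getProperty("jdk.nio.maxCachedBufferSize")));
    }
}
```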

2. OutOfMemoryError: Direct Buffer Memory

Internally, the JVM keeps track of the amount of native memory that is allocated and released by DirectByteBuffers, and puts a limit on this amount. If your application fails with a stack trace like:

    Exception in thread … java.lang.OutOfMemoryError: Direct buffer memory
        at java.nio.Bits.reserveMemory(Bits.java:694)
        at java.nio.DirectByteBuffer.<init>(DirectByteBuffer.java:123)
        at java.nio.ByteBuffer.allocateDirect(ByteBuffer.java:311)
        ...

Then it's a signal that the JVM has exceeded this limit. Note that the internal limit has nothing to do with the available RAM. An application may fail with the above exception when there is plenty of free memory. Conversely, if the limit is too high and RAM is exhausted, the OS may start swapping memory, and/or the JVM may crash.
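One quick way to see that this limit is independent of free RAM is to run a small program with an artificially low limit, e.g. -XX:MaxDirectMemorySize=16m (the flag is described below). The sketch keeps every buffer reachable, so the GC cannot release anything. It also stops at maxBuffers as a safety cap in case no low limit is set. The class and method names are hypothetical.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.List;

public class DirectMemoryLimitDemo {

    // Allocates up to maxBuffers direct buffers of bufferSize bytes, keeping them all reachable,
    // and returns how many were allocated before "Direct buffer memory" OOM (or the cap) was hit.
    static int allocateUntilLimit(int bufferSize, int maxBuffers) {
        List<ByteBuffer> retained = new ArrayList<>();
        try {
            while (retained.size() < maxBuffers) {
                retained.add(ByteBuffer.allocateDirect(bufferSize));
            }
        } catch (OutOfMemoryError e) {
            System.out.println("Stopped after " + retained.size() + " buffers: " + e.getMessage());
        }
        return retained.size();
    }

    public static void main(String[] args) {
        // With -XX:MaxDirectMemorySize=16m, expect failure after about 16 buffers of 1 MB,
        // no matter how much free RAM the machine has.
        int n = allocateUntilLimit(1024 * 1024, 64);
        System.out.println("Allocated " + n + " direct buffers of 1 MB");
    }
}
```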

The internal JVM limit is set as follows:

By default, it's equal to -Xmx. Yes, the JVM heap and off-heap memory are two different memory areas, but by default, they have the same maximum size.

The limit can be changed using the -XX:MaxDirectMemorySize flag. This flag accepts suffixes like “g” or “G” for gigabytes, etc.

Thus, to find out how much native memory a given JVM is allowed to allocate, you should first check its -XX:MaxDirectMemorySize flag. If this flag is not set, or its value is <= 0, then the JVM uses the default value equal to -Xmx. For those who are curious, the relevant JDK code is in java.nio.Bits, which obtains the limit and later throws the OOM, and in sun.misc.VM, where the limit is defined. Note that the latter class is public (in JDK 8), so the native memory limit can be obtained programmatically:

System.out.println("Max native mem = " + sun.misc.VM.maxDirectMemory());

What can you do to determine why your app ran out of its native memory allowance, and/or how to reduce its RSS? For that, you need to find out where the (biggest) DirectByteBuffers come from, i.e., which Java data structures they are attached to. Again, this can be done by taking a heap dump and analyzing it with JXRay, as explained in the previous section.
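The sun.misc.VM call above works only on JDK 8. In JDK 9 and newer, that class moved to jdk.internal.misc.VM, which isn't exported, so the line won't compile there. A more portable sketch, assuming a HotSpot JVM, reads the MaxDirectMemorySize flag through com.sun.management.HotSpotDiagnosticMXBean. It reads the current direct buffer usage through BufferPoolMXBean. The effectiveLimit rule is the one stated above: use the flag if it is positive, otherwise use the maximum heap size. Here the maximum heap size is approximated with Runtime.getRuntime().maxMemory(). The class name is our own.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.BufferPoolMXBean;
import java.lang.management.ManagementFactory;

public class DirectMemoryReport {

    // Effective direct-memory limit per the rule above: a positive flag wins, otherwise max heap.
    static long effectiveLimit(long maxDirectMemorySizeFlag, long maxHeap) {
        return maxDirectMemorySizeFlag > 0 ? maxDirectMemorySizeFlag : maxHeap;
    }

    // Raw value of -XX:MaxDirectMemorySize (0 when the flag was not given).
    static long maxDirectMemorySizeFlag() {
        HotSpotDiagnosticMXBean diag = ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        return Long.parseLong(diag.getVMOption("MaxDirectMemorySize").getValue());
    }

    public static void main(String[] args) {
        long flag = maxDirectMemorySizeFlag();
        // Runtime.maxMemory() approximates -Xmx (it can be slightly smaller with some collectors).
        long limit = effectiveLimit(flag, Runtime.getRuntime().maxMemory());
        System.out.println("-XX:MaxDirectMemorySize = " + flag + ", effective limit ~ " + limit + " bytes");
        for (BufferPoolMXBean pool : ManagementFactory.getPlatformMXBeans(BufferPoolMXBean.class)) {
            // The "direct" pool covers DirectByteBuffers; "mapped" covers memory-mapped files.
            System.out.printf("%s: count=%d, capacity=%d, used=%d%n",
                    pool.getName(), pool.getCount(), pool.getTotalCapacity(), pool.getMemoryUsed());
        }
    }
}
```

This report shows how close the app is to its allowance. Finding out which data structures hold the buffers still requires a heap dump.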

This story can take an unusual twist when the JVM runs with a heap that is big enough for GCs (at least in the Old Gen) to occur infrequently. In this situation, some DirectByteBuffer instances that have become unreachable are not garbage-collected for a long time. But such objects still hold native memory until the cleanup method below is called:

((DirectBuffer)buf).cleaner().clean();

The JVM always runs this cleanup automatically once the GC finds the buffer unreachable, but, as explained above, that may happen too late. If the code that manages direct buffers is under your control, you may be able to call the above method explicitly. Note that DirectBuffer here is the JDK-internal interface sun.nio.ch.DirectBuffer, so this line works as-is only on JDK 8. Otherwise, the only way to prevent over-consumption of native memory would be to reduce the heap size and to make GCs more frequent.
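On JDK 9 and newer, the cast above no longer compiles against the public API. One widely used workaround is sun.misc.Unsafe.invokeCleaner(ByteBuffer), shown here as a sketch rather than a recommendation. This method exists since JDK 9 in the jdk.unsupported module and is reached here via reflection. It rejects slices and duplicates with IllegalArgumentException, which arrives wrapped in an InvocationTargetException when called reflectively. Any access to the buffer after it is freed can crash the JVM, so this is only safe when your code fully owns the buffer's lifecycle. The class and method names are hypothetical.

```java
import java.lang.reflect.Field;
import java.lang.reflect.Method;
import java.nio.ByteBuffer;

public class DirectBufferFreer {

    // Frees a direct buffer's native memory immediately (JDK 9+), via sun.misc.Unsafe.invokeCleaner.
    // After this call the buffer MUST NOT be used again: touching it may crash the JVM.
    static void free(ByteBuffer buffer) throws ReflectiveOperationException {
        if (!buffer.isDirect()) {
            return; // heap buffers hold no native memory
        }
        Class<?> unsafeClass = Class.forName("sun.misc.Unsafe");
        Field theUnsafe = unsafeClass.getDeclaredField("theUnsafe");
        theUnsafe.setAccessible(true);
        Object unsafe = theUnsafe.get(null);
        Method invokeCleaner = unsafeClass.getMethod("invokeCleaner", ByteBuffer.class);
        invokeCleaner.invoke(unsafe, buffer);
    }

    public static void main(String[] args) throws ReflectiveOperationException {
        ByteBuffer buf = ByteBuffer.allocateDirect(16 * 1024 * 1024);
        buf.putLong(0, 42L); // stand-in for real I/O work
        free(buf);
        // Do not touch buf after this point.
        System.out.println("freed 16 MB of native memory");
    }
}
```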

3. Linux GLIBC Allocator Side-Effects: RSS Grows Big in 64MB Increments

This is the least obvious problem with native memory. It's known to occur in Red Hat Enterprise Linux 6 (RHEL 6), running glibc version 2.10 or newer on 64-bit machines. It manifests itself as RSS growth while only a fraction of that memory is actually used. This article describes it in detail, but a quick summary is provided below.

The glibc memory allocator, at least in some versions of Linux, has an optimization to improve speed by avoiding contention when a process has a large number of concurrent threads. The speedup is achieved by maintaining multiple memory pools, so that different threads rarely compete for the same one. Essentially, with this optimization, the allocator grabs memory for a given process in pretty big same-size (64MB) chunks called arenas. These arenas are clearly visible when process memory is analyzed with pmap. Each arena is protected by its own lock, so no more than one thread at a time can allocate from it, while threads that use different arenas don't block each other. Then, individual malloc() calls reserve memory within these arenas. By default on 64-bit systems, the allocator may create up to 8 arenas per CPU core. It looks like this maximum is reached when the number of threads is high and/or threads are created and destroyed frequently. The actual amount of memory utilized by the application within these arenas can be quite small. However, if an application has a large number of threads, and the machine has a large number of CPU cores, the total amount of memory reserved in this way can grow really high. For example, on a machine with 16 cores, this number would be 16 * 8 * 64MB = 8GB.
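The sketch below encodes the arithmetic above. It also gives a rough way to spot arena-like regions from inside the JVM on Linux. The detection is purely a heuristic of ours. It reads /proc/self/maps and counts anonymous mappings that span exactly 64MB. A match is either a single rw-p region or a rw-p region directly followed by a ---p (reserved, not yet usable) region, which is how we understand glibc lays out an arena's memory. Other 64MB anonymous regions, such as a 64MB Java heap, would be counted too. Treat the number as a hint that complements pmap, not a diagnosis. The class and method names are hypothetical.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;

public class ArenaCheck {

    // 64 MB: the arena size discussed above (64-bit glibc).
    static final long ARENA_SIZE = 64L * 1024 * 1024;

    // Worst-case reservation: cores x arenas per core x 64 MB.
    static long worstCaseArenaBytes(int cores, int arenasPerCore) {
        return (long) cores * arenasPerCore * ARENA_SIZE;
    }

    // Heuristic (ours): count anonymous /proc/<pid>/maps entries that look like 64 MB arenas,
    // i.e. a 64 MB rw-p region, or a rw-p region immediately followed by a ---p region
    // at the next address, the two together spanning exactly 64 MB.
    static int countArenaLikeMappings(List<String> mapsLines) {
        int count = 0;
        for (int i = 0; i < mapsLines.size(); i++) {
            String[] cur = fields(mapsLines.get(i));
            if (!isAnonymous(cur) || !cur[1].equals("rw-p")) {
                continue;
            }
            long start = startOf(cur);
            long end = endOf(cur);
            if (end - start == ARENA_SIZE) {
                count++;
            } else if (i + 1 < mapsLines.size()) {
                String[] next = fields(mapsLines.get(i + 1));
                if (isAnonymous(next) && next[1].equals("---p")
                        && startOf(next) == end && endOf(next) - start == ARENA_SIZE) {
                    count++;
                    i++; // the ---p part belongs to the same arena; skip it
                }
            }
        }
        return count;
    }

    private static String[] fields(String line) {
        return line.trim().split("\\s+");
    }

    // Anonymous mappings have no pathname: address, perms, offset, dev, inode.
    private static boolean isAnonymous(String[] f) {
        return f.length == 5;
    }

    private static long startOf(String[] f) {
        return Long.parseUnsignedLong(f[0].substring(0, f[0].indexOf('-')), 16);
    }

    private static long endOf(String[] f) {
        return Long.parseUnsignedLong(f[0].substring(f[0].indexOf('-') + 1), 16);
    }

    public static void main(String[] args) throws IOException {
        // 16 cores x 8 arenas x 64 MB = 8589934592 bytes (8 GB)
        System.out.println("worst case: " + worstCaseArenaBytes(16, 8) + " bytes");
        Path maps = Paths.get("/proc/self/maps");
        if (Files.exists(maps)) {
            List<String> lines = Files.readAllLines(maps, StandardCharsets.ISO_8859_1);
            System.out.println("arena-like 64 MB mappings: " + countArenaLikeMappings(lines));
        }
    }
}
```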

Fortunately, the maximum number of arenas can be adjusted via the MALLOC_ARENA_MAX environment variable. Because of this, some Java applications use a script like the one below to prevent this problem:

    # Some versions of glibc use an arena memory allocator that causes
    # virtual memory usage to explode. Tune the variable down to prevent
    # vmem explosion.
    export MALLOC_ARENA_MAX=${MALLOC_ARENA_MAX:-4}

So, in principle, if you suspect this problem, it's always possible to set the above env variable and/or check it using the cat /proc/<JVM_PID>/environ command. But, unfortunately, the story doesn't end here: it turns out that sometimes the adjustment above doesn't work!
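Inside the JVM itself, System.getenv("MALLOC_ARENA_MAX") answers the same question. For another process, the cat command above reads NUL-separated KEY=VALUE entries, and the sketch below does the same from Java. The class and method names are ours. It needs permission to read the target's /proc/<pid>/environ, and that file shows the environment the process started with.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Optional;

public class ArenaMaxCheck {

    // /proc/<pid>/environ holds the process's initial environment as NUL-separated KEY=VALUE entries.
    static Optional<String> findVariable(byte[] environ, String name) {
        String prefix = name + "=";
        for (String entry : new String(environ, StandardCharsets.UTF_8).split("\0")) {
            if (entry.startsWith(prefix)) {
                return Optional.of(entry.substring(prefix.length()));
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) throws IOException {
        String pid = args.length > 0 ? args[0] : "self"; // a JVM PID, or this process
        Path environ = Paths.get("/proc", pid, "environ");
        Optional<String> value = findVariable(Files.readAllBytes(environ), "MALLOC_ARENA_MAX");
        System.out.println("MALLOC_ARENA_MAX = " + value.orElse("(not set: glibc default applies)"));
    }
}
```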

When I investigated one such case in the past, I found this Linux bug that seems to suggest that adjusting MALLOC_ARENA_MAX may not always work. It looks like the bug was subsequently fixed in one of the glibc 2.12 updates (see the following Linux update release notes mentioning BZ#769594). But chances are that some users still run unpatched versions of RHEL.

The simplest and most straightforward way to check whether we are observing the above problem is to set MALLOC_CHECK_=1. This makes the process use an entirely different memory allocator. But this allocator is potentially slower (the article above says it's intended to be used mainly for debugging). Yet another allocator, jemalloc, can be used as well. Simply switching to a different Linux distribution, such as CentOS, may be the quickest way to address this problem.

4. Native Memory Tracking (NMT) JVM Feature

Native Memory Tracking (NMT) is a HotSpot JVM feature that tracks the JVM's internal memory usage. It is enabled via the -XX:NativeMemoryTracking=[summary | detail] flag. This flag on its own does not result in any extra output from the JVM. Instead, one has to invoke the jcmd utility separately (for example, jcmd <pid> VM.native_memory summary) to obtain the information accumulated by the JVM so far. Either detailed information (all the allocation events) or a summary (how much memory is currently allocated by category, such as Class, Thread, Internal) is available. Memory allocated by DirectByteBuffers is tracked under the “Internal” category. The documentation says that enabling NMT results in a 5-10 percent JVM performance drop and slightly increased memory consumption. And again, NMT does not track memory allocated by non-JVM code.

It looks like the main advantage of NMT is the ability to track memory used by JVM internals and metadata that would otherwise be hard or impossible to distinguish, for example, classes, thread stacks, the compiler, symbols, etc. So, it can be used as a last resort if the previous troubleshooting methods don't explain where memory goes, and you know that the problematic memory allocations don't come from custom native code.
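The report that jcmd <pid> VM.native_memory summary prints can also be requested from inside the JVM, through the HotSpot DiagnosticCommand MBean (com.sun.management:type=DiagnosticCommand). As far as we know, VM.native_memory is exposed there as the operation vmNativeMemory. A sketch follows, assuming a HotSpot JVM started with -XX:NativeMemoryTracking=summary. Without that flag, the command just reports that tracking is not enabled. When reading the output, keep in mind that newer JDKs, we believe, report DirectByteBuffer memory under "Other" rather than "Internal". The class name is our own.

```java
import java.lang.management.ManagementFactory;
import javax.management.JMException;
import javax.management.MBeanServer;
import javax.management.ObjectName;

public class NmtSummary {

    // Equivalent of "jcmd <pid> VM.native_memory summary" for the current JVM,
    // invoked through the HotSpot DiagnosticCommand MBean.
    static String nativeMemorySummary() throws JMException {
        MBeanServer server = ManagementFactory.getPlatformMBeanServer();
        ObjectName dcmd = new ObjectName("com.sun.management:type=DiagnosticCommand");
        Object result = server.invoke(dcmd, "vmNativeMemory",
                new Object[] {new String[] {"summary"}},
                new String[] {String[].class.getName()});
        return (String) result;
    }

    public static void main(String[] args) throws JMException {
        // Start the JVM with -XX:NativeMemoryTracking=summary to get real numbers.
        System.out.println(nativeMemorySummary());
    }
}
```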

5. Step-By-Step Checklist

If the JVM fails with java.lang.OutOfMemoryError: Direct buffer memory, then the problem is with the java.nio.DirectByteBuffers. They are too big, too numerous, or not GCed promptly, and thus hold too much native memory. You will need to collect a JVM heap dump and analyze it with JXRay (www.jxray.com). The report will tell you where these direct byte buffers come from. For more details, see section 2 above.

If the JVM’s RSS grows much higher than the maximum heap size, then, most likely, this is again a problem with DirectByteBuffers. They may be created explicitly by the app (though this code may be in some third-party library), or they may be automatically created and cached by internal JDK code for I/O threads that use HeapByteBuffers (some versions of Netty, for example, do that). Again, take a JVM heap dump and analyze it with JXRay. If there is a java.lang.ThreadLocal in the reference chain that keeps the buffers in memory, refer to section 1 above; otherwise, return to section 2.

The heap dump may not show enough DirectByteBuffers to explain the high RSS. In that case, there are three possibilities. Some custom native code may be leaking memory. Some JVM internals, such as a very large number of classes, may be over-consuming memory (not very likely). Or you may be facing the OS issue described in section 3 above. The latter would be indirectly signaled by numerous 64MB allocations reported by pmap. If that's not the case, check for custom native code and/or see section 4 above on how to enable Native Memory Tracking (NMT) for the JVM.

Opinions expressed by DZone contributors are their own.
