Understanding the Differences Between Rate Limiting, Debouncing, and Throttling

Learn the differences between rate limiting, debouncing, and throttling, with examples and best practices for managing function execution.

When developing, we often encounter the concepts of rate limiting, debouncing, and throttling: debouncing and throttling in the front end with event listeners, and rate limiting when working with third-party APIs. These three concepts are at the core of any queueing system, enabling us to configure how often functions are invoked over a given period.

While this definition sounds simple, the distinction between the three approaches can take time to grasp. If my Inngest function is calling the OpenAI API, should I use rate limiting or throttling? Similarly, should I use debouncing or rate limiting if my Inngest function is performing some costly operation?

Let's answer these questions with some examples and comparisons!

Rate Limiting

Let's start with the most widespread one: rate limiting. You've probably encountered this term while working with public APIs such as the OpenAI or Shopify APIs. Both use rate limiting to control external usage by capping the number of requests over a period (for example, per hour or day); requests above this limit are rejected.

The same reasoning applies when developing your Inngest functions. For example, consider an Inngest function in a CRM product that frequently scrapes and stores company information. Companies might be updated often, and scraping is a costly operation whose result does not depend on the order of events.

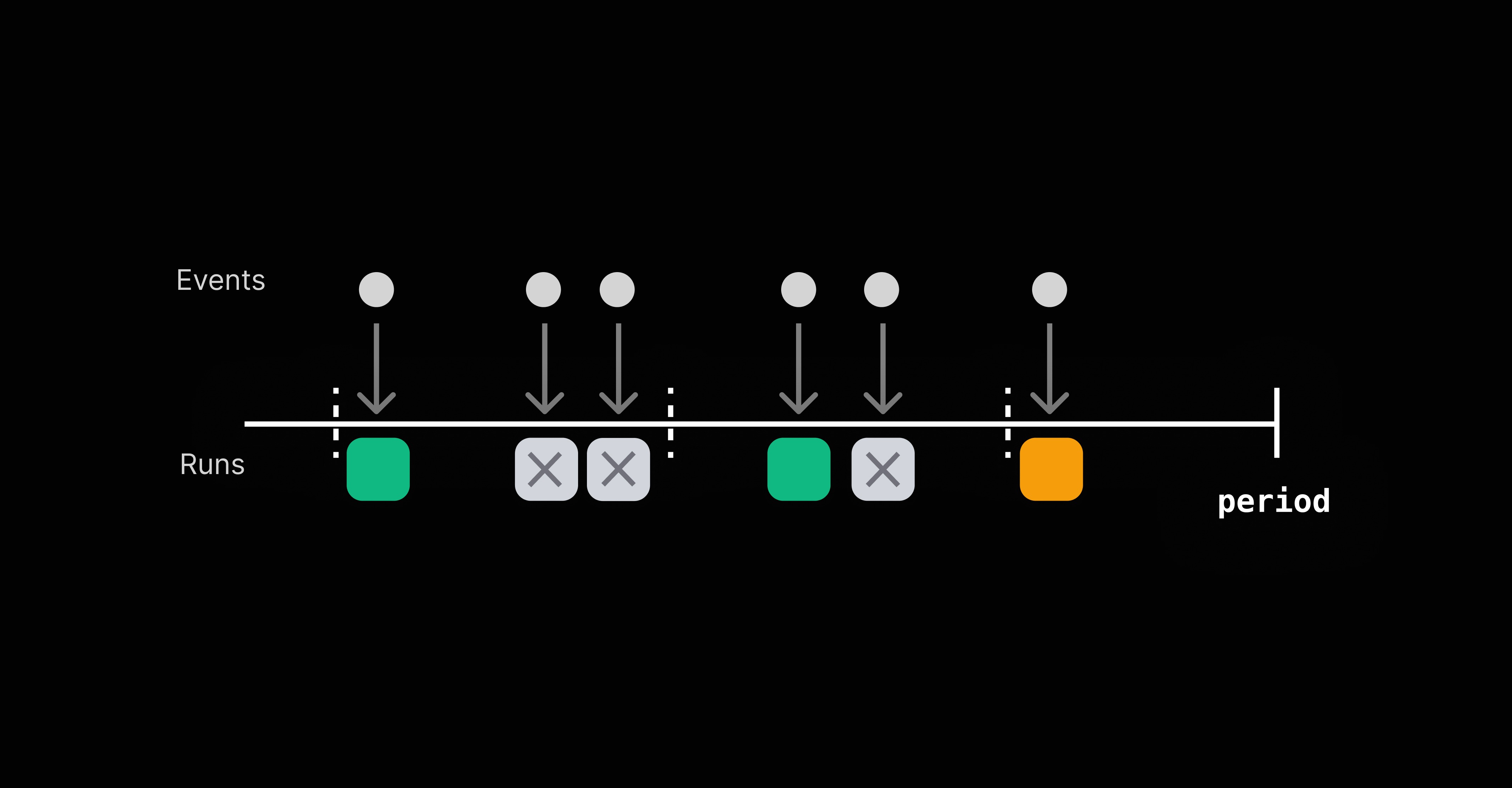

Here is how rate limiting operates for a maximum of 1 call per minute:

In case of a surge of company updates, the extra events are rate-limited (they do not trigger a run), preventing costly and unnecessary scraping operations.

Adding a rate limit configuration guarantees that our company scraping function only runs when a company gets updated, and at most once every 4 hours per company:

```tsx
import { inngest } from "./client"; // assumed path to your Inngest client

export default inngest.createFunction(
  {
    id: "scrape-company",
    rateLimit: {
      limit: 1,
      period: "4h",
      key: "event.data.company_id",
    },
  },
  { event: "intercom/company.updated" },
  async ({ event, step }) => {
    // This function is rate limited: it runs at most once every 4 hours
    // for events whose payload has a matching `company_id`.
  }
);
```

The Many Flavors of Rate Limiting

Rate limiting is implemented following an algorithm. The simplest one is a “fixed window” approach where you can make a set number of requests (say 100) during a given time window (like one minute). If you exceed that limit within the window, you're blocked. Once the time resets, you get a fresh set of 100 requests.
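To make the fixed window idea concrete, here is a minimal sketch. It is only an illustration, not how any particular API implements it: the `createFixedWindowLimiter` name and its parameters are made up for this example, and time is passed in explicitly (in milliseconds) so the behavior is easy to follow.

```typescript
// Illustrative fixed-window limiter (hypothetical helper, not a library API).
// Windows are aligned to fixed boundaries: [0, windowMs), [windowMs, 2 * windowMs), ...
function createFixedWindowLimiter(limit: number, windowMs: number) {
  let windowStart = 0;
  let count = 0;

  // Returns true when the request is allowed, false when it is rejected.
  return function tryAcquire(nowMs: number): boolean {
    const currentWindow = Math.floor(nowMs / windowMs) * windowMs;
    if (currentWindow !== windowStart) {
      // A new window started: grant a fresh allowance.
      windowStart = currentWindow;
      count = 0;
    }
    if (count >= limit) return false;
    count += 1;
    return true;
  };
}

// Usage: 2 requests per 60-second window.
const allowTwoPerMinute = createFixedWindowLimiter(2, 60_000);
const fixedWindowResults = [0, 1_000, 2_000, 61_000].map((t) => allowTwoPerMinute(t));
// fixedWindowResults is [true, true, false, true]:
// the third request exceeds the limit, the fourth lands in a fresh window.
```

A known downside of this approach is that a client can send a full allowance at the very end of one window and another at the start of the next, which is part of why other algorithms exist.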

Public APIs like the Shopify API rely on the Leaky Bucket algorithm. Picture a bucket with a small hole at the bottom. You can add water (requests) as fast as you want, but it only leaks out (processes) steadily. The excess is discarded if the bucket overflows (too many requests).
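The bucket picture can be sketched in a few lines. This is a simplified model under stated assumptions, not Shopify's actual implementation: `createLeakyBucket`, `capacity`, and `leakPerSecond` are hypothetical names, and timestamps are expected to be non-decreasing.

```typescript
// Illustrative leaky bucket (hypothetical helper, not a library API).
// Each request adds one unit of "water"; the bucket drains at a steady rate.
function createLeakyBucket(capacity: number, leakPerSecond: number) {
  let level = 0;
  let lastMs = 0;

  // Returns true when the request fits in the bucket, false when it overflows.
  // Assumes nowMs never goes backwards between calls.
  return function tryAdd(nowMs: number): boolean {
    const leaked = ((nowMs - lastMs) / 1000) * leakPerSecond;
    level = Math.max(0, level - leaked);
    lastMs = nowMs;
    if (level + 1 > capacity) return false; // overflow: the request is discarded
    level += 1;
    return true;
  };
}

// Usage: a bucket holding 2 requests that drains 1 request per second.
const bucket = createLeakyBucket(2, 1);
const bucketResults = [0, 0, 0, 1_000, 1_000].map((t) => bucket(t));
// bucketResults is [true, true, false, true, false]:
// after one second, exactly one slot has drained and can be reused.
```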

Inngest functions benefit from a more flexible algorithm that allows bursts but still enforces a steady overall rate: the Generic Cell Rate Algorithm (GCRA). This algorithm is like a hybrid of fixed window and Leaky Bucket algorithms. It controls traffic by spacing out requests, ensuring they happen regularly, resulting in more fairness in rate limiting.
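For the curious, here is a rough sketch of GCRA in its textbook "virtual scheduling" form. It is not Inngest's implementation: the `createGcraLimiter` helper and its `burst` parameter are assumptions made for this example. The idea is to track a theoretical arrival time (TAT) for the next request and to reject any request that arrives too far ahead of it.

```typescript
// Illustrative GCRA limiter (hypothetical helper, textbook form of the algorithm).
function createGcraLimiter(limit: number, periodMs: number, burst: number) {
  // Ideal spacing between two requests at the steady rate.
  const emissionIntervalMs = periodMs / limit;
  // How far ahead of schedule a request may arrive (allows `burst` requests at once).
  const toleranceMs = emissionIntervalMs * (burst - 1);
  let theoreticalArrivalMs = 0;

  // Returns true when the request conforms to the rate, false otherwise.
  return function tryAcquire(nowMs: number): boolean {
    if (nowMs < theoreticalArrivalMs - toleranceMs) return false;
    theoreticalArrivalMs = Math.max(nowMs, theoreticalArrivalMs) + emissionIntervalMs;
    return true;
  };
}

// Usage: 60 requests per minute (one per second on average), bursts of up to 2.
const gcra = createGcraLimiter(60, 60_000, 2);
const gcraResults = [0, 0, 0, 1_000, 1_500, 2_000].map((t) => gcra(t));
// gcraResults is [true, true, false, true, false, true]:
// a burst of 2 is accepted, then requests are spaced one second apart.
```

Unlike a fixed window, there is no reset boundary to exploit: capacity comes back gradually, one emission interval at a time.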

When Should I Use Rate Limiting?

Rate limiting is a good fit for protecting Inngest functions that routinely perform costly operations. Good examples are functions that are a poor fit for a CRON because they only need to run when something happens (though not at each occurrence) and are costly to run (for example, AI summaries, aggregations, data synchronization, or scraping).

Debouncing

Debouncing can be seen as the mirror image of rate limiting: it provides the same protection against spikes of invocations, but it always keeps the last occurrence in the window (with Inngest, the last event) rather than the first.

For example, an Inngest function computing AI suggestions based on a document change is costly and likely to be triggered often. In that scenario, we might want to avoid unnecessary runs and limit our AI work to run every minute with the latest Event received:

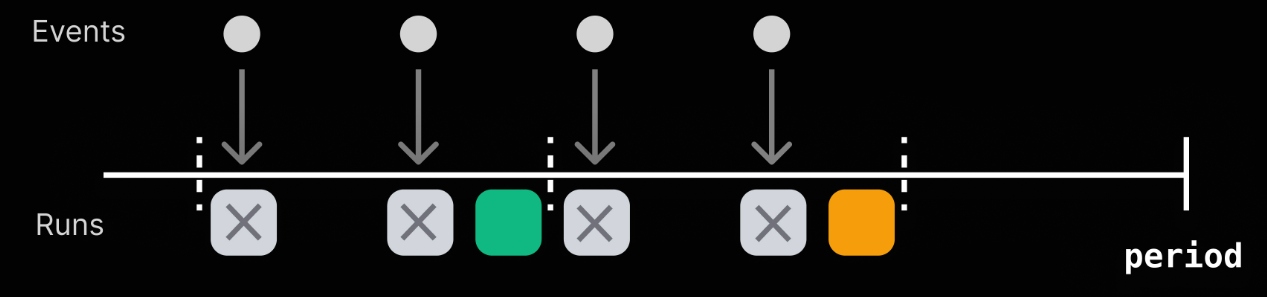

Here is how debouncing operates for a maximum of 1 call per minute:

Our Inngest function runs only once triggering events have stopped arriving for a full minute. You will notice that, contrary to rate limiting, only the last received event triggers a function run.

Adding a debouncing window of 1 minute ensures that our AI workflow will only be triggered by the last event received in a 1-minute window of document updates:

```tsx
import { inngest } from "./client"; // assumed path to your Inngest client

export default inngest.createFunction(
  {
    id: "handle-document-suggestions",
    debounce: {
      key: "event.data.id",
      period: "1m",
    },
  },
  { event: "document.updated" },
  async ({ event, step }) => {
    // This function will only be scheduled 1 minute after events
    // are no longer received with the same `event.data.id` field.
    //
    // `event` will be the last event in the series received.
  }
);
```
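To see which events actually trigger a run, here is a small simulation of the debouncing behavior described above. It is a simplified model rather than Inngest's internals: the `DocEvent` type and the `simulateDebounce` helper are hypothetical, and timestamps are plain milliseconds.

```typescript
// Illustrative debounce simulation (hypothetical types and helper).
type DocEvent = { atMs: number; id: string; payload: string };
type DebouncedRun = { runAtMs: number; event: DocEvent };

// For each `id`, a run fires `periodMs` after the last event of a series,
// and it receives that last event.
function simulateDebounce(events: DocEvent[], periodMs: number): DebouncedRun[] {
  const sorted = events.slice().sort((a, b) => a.atMs - b.atMs);
  const pending: Record<string, DocEvent | undefined> = {};
  const runs: DebouncedRun[] = [];

  for (const ev of sorted) {
    const previous = pending[ev.id];
    // The quiet period elapsed before this event arrived: the pending run already fired.
    if (previous !== undefined && ev.atMs - previous.atMs >= periodMs) {
      runs.push({ runAtMs: previous.atMs + periodMs, event: previous });
    }
    pending[ev.id] = ev; // every new event resets the timer for its key
  }

  // Whatever is still pending fires once its quiet period elapses.
  for (const key of Object.keys(pending)) {
    const last = pending[key];
    if (last !== undefined) runs.push({ runAtMs: last.atMs + periodMs, event: last });
  }
  return runs.sort((a, b) => a.runAtMs - b.runAtMs);
}

// Usage: document "a" is edited three times in quick succession, then once much later.
const debouncedRuns = simulateDebounce(
  [
    { atMs: 0, id: "a", payload: "v1" },
    { atMs: 10_000, id: "b", payload: "b1" },
    { atMs: 20_000, id: "a", payload: "v2" },
    { atMs: 40_000, id: "a", payload: "v3" },
    { atMs: 200_000, id: "a", payload: "v4" },
  ],
  60_000,
);
const debouncedPayloads = debouncedRuns.map((run) => run.event.payload);
// debouncedPayloads is ["b1", "v3", "v4"]: v1 and v2 never trigger a run.
```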

When Should I Use Debouncing?

Popular use cases are webhooks, any Inngest function reacting to user actions, or AI workflows.

The above scenarios occur in usage-intensive environments where the event triggering the function matters for the function's execution (e.g., the AI workflow needs the most up-to-date occurrence).

Throttling

Interestingly, throttling is the Flow Control feature for dealing with third-party API rate limiting. Instead of dropping unwanted function runs like rate limiting and debouncing, throttling will buffer the queued function runs to match the configured frequency and time window.

A good example would be an Inngest function performing some AI enrichment as part of an ETL pipeline. As events arrive, our Inngest Function needs to match OpenAI's API rate limit by reducing the frequency of runs.

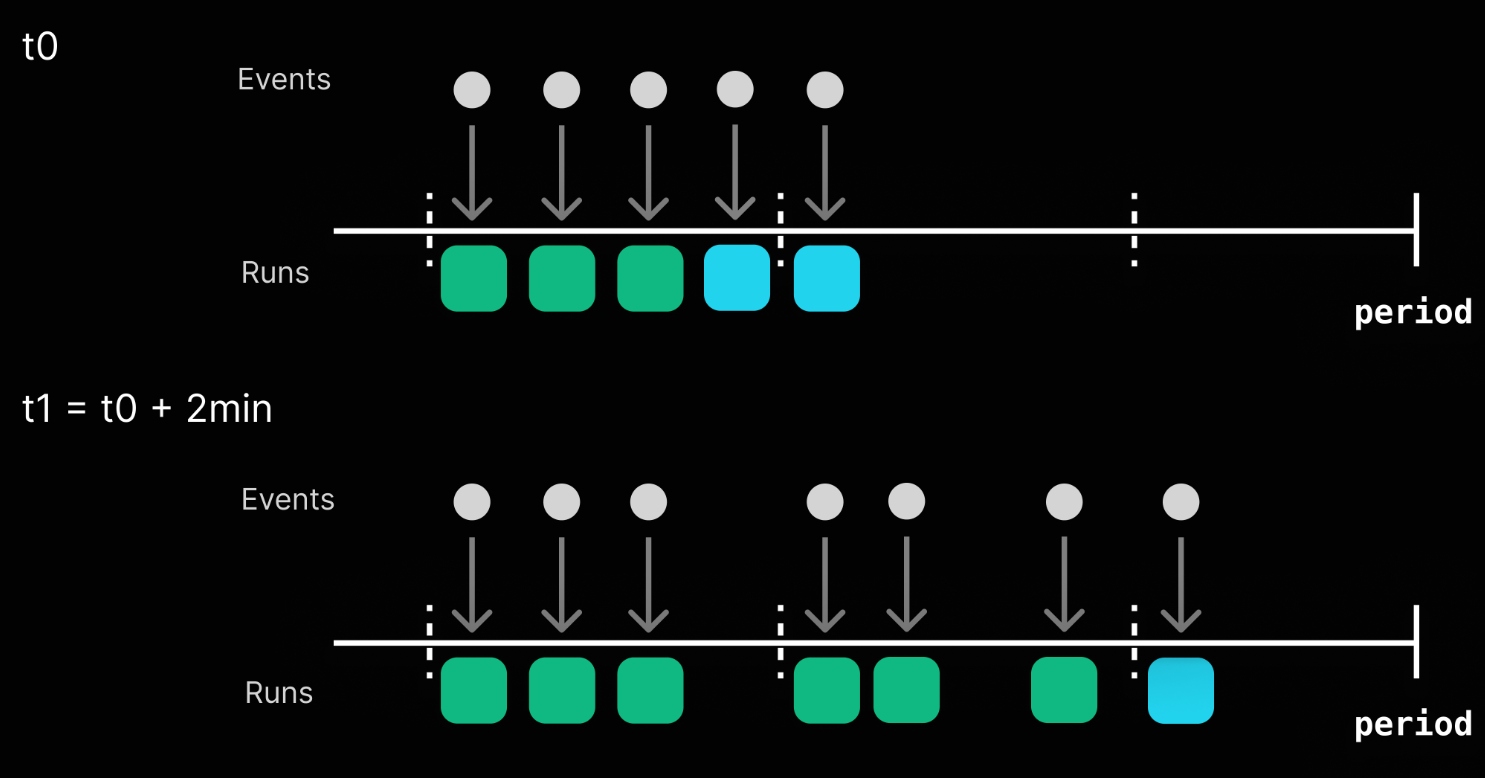

Here is how Throttling operates for a maximum of 3 calls per minute:

Throttling keeps function runs that exceed the configured frequency and time window in the queue and distributes them smoothly as soon as capacity is available.
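The key difference with rate limiting is that nothing is dropped. The following sketch models that with evenly spaced starts; it is a simplification (no burst allowance) and not Inngest's actual scheduler, and the `simulateThrottle` helper is hypothetical.

```typescript
// Illustrative throttle simulation (hypothetical helper).
// Returns the time at which each queued run would start, in arrival order.
function simulateThrottle(arrivalsMs: number[], limit: number, periodMs: number): number[] {
  // Spread `limit` runs evenly across the period.
  const intervalMs = periodMs / limit;
  let nextFreeMs = Number.NEGATIVE_INFINITY;
  const starts: number[] = [];

  for (const arrival of arrivalsMs.slice().sort((a, b) => a - b)) {
    // A run starts when it arrives, or waits in the queue until capacity frees up.
    const start = Math.max(arrival, nextFreeMs);
    starts.push(start);
    nextFreeMs = start + intervalMs;
  }
  return starts;
}

// Usage: 3 runs per minute, with four events arriving almost at once and one later.
const throttledStarts = simulateThrottle([0, 1_000, 2_000, 3_000, 90_000], 3, 60_000);
// throttledStarts is [0, 20000, 40000, 60000, 90000]:
// all five runs execute, the burst is simply delayed and spread out.
```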

When Should I Use Throttling?

Throttling is the go-to solution when dealing with third-party API rate limits. You can configure Throttling by matching its configuration with the target API's rate limit. For example, an Inngest Function relying on Resend might benefit from a Throttling of 2 calls per second.
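As a sketch of what the Resend case could look like, the configuration below uses Inngest's `throttle` option with a limit of 2 per second. The client id, function id, and event name are placeholders invented for this example, so adapt them to your app and double-check the option names against the Inngest documentation for your SDK version.

```typescript
import { Inngest } from "inngest";

// Placeholder client id, for illustration only.
const inngest = new Inngest({ id: "my-app" });

export default inngest.createFunction(
  {
    id: "send-welcome-email", // hypothetical function id
    throttle: {
      limit: 2,
      period: "1s",
    },
  },
  { event: "app/user.created" }, // hypothetical event name
  async ({ event, step }) => {
    // Runs beyond 2 per second stay queued and start as capacity frees up,
    // so the call to the email API placed here stays under the provider's limit.
  }
);
```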

Conclusion: A Takeaway Cheatsheet

Congrats, you are now an expert in rate limiting, debouncing, and throttling!

The following is a good rule of thumb to keep in mind:

Throttling helps you deal with external API limitations, while debouncing and rate limiting help prevent abuse from bad actors and keep resource-intensive work from running too often.

Please find below a takeaway cheatsheet that will help you evaluate the best way to optimize your Inngest Functions:

| Use cases/Feature | Throttling | Debouncing | Rate Limiting |
|---|---|---|---|
| Expensive computation (ex: AI) | ✅ | ✅ | |
| 3rd party rate-limited API calls | ✅ | | |
| Processing large amounts of real-time events | ✅ | | |
| 2FA or magic sign-in emails | ✅ | | |
| Smoothing out bursts in volume | ✅ | | |

Published at DZone with permission of Charly Poly. See the original article here.
