Understanding Softmax Activation Function for AI/ML Engineers

The article explains the softmax function's role in turning neural network outputs into probabilities for classification in machine learning.

In the realm of machine learning and deep learning, activation functions play a pivotal role in neural networks' ability to make complex decisions and predictions. Among these, the softmax activation function stands out, especially in classification tasks where outcomes are mutually exclusive. This article delves into the softmax function, offering insights into its workings, applications, and significance in the field of artificial intelligence (AI).

Softmax Activation Function

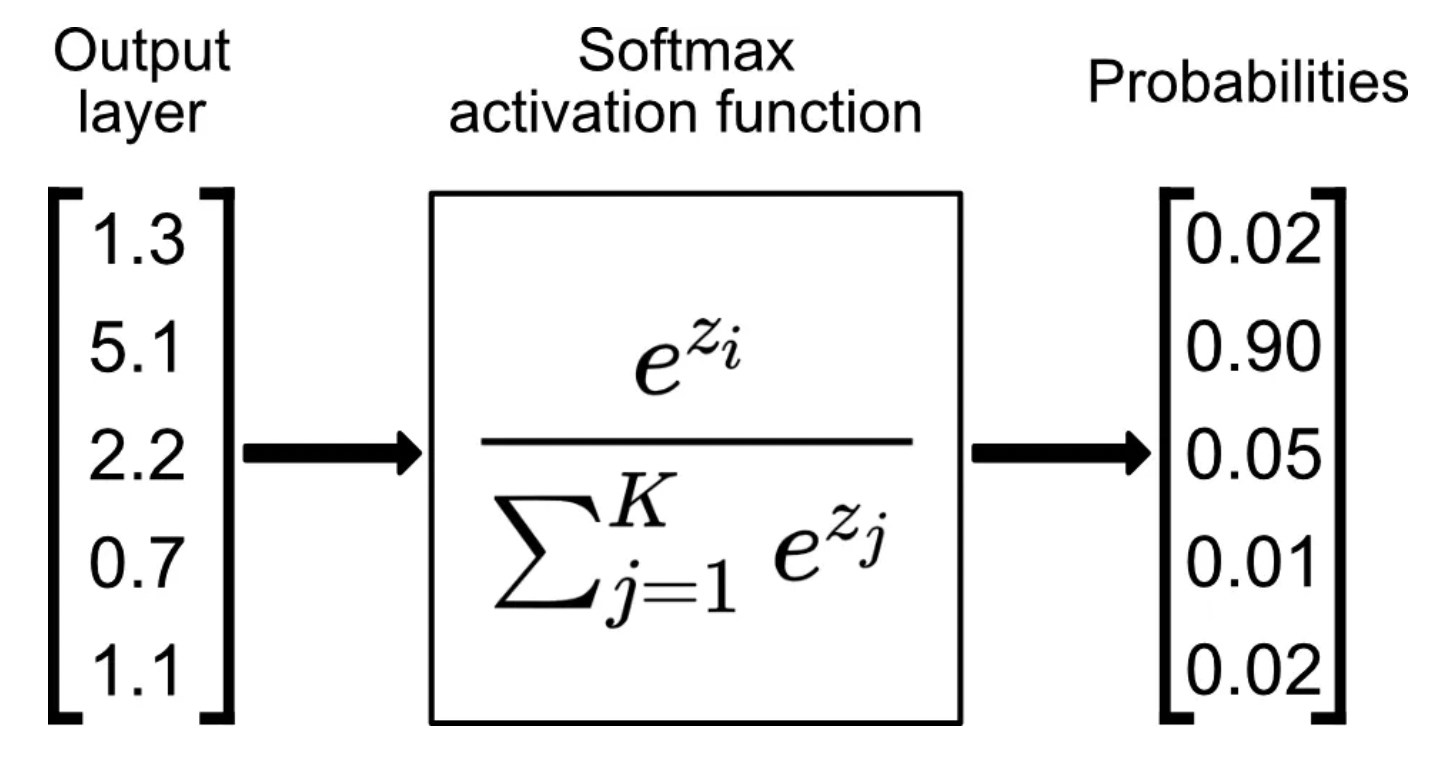

Image credits: Towards Data Science

The softmax function, often used in the final layer of a neural network model for classification tasks, converts raw output scores — also known as logits — into probabilities by taking the exponential of each output and normalizing these values by dividing by the sum of all the exponentials. This process ensures the output values are in the range (0,1) and sum up to 1, making them interpretable as probabilities.

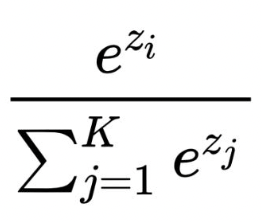

The mathematical expression for the softmax function is as follows:

$$\mathrm{softmax}(z_i) = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}$$

Here, $z_i$ represents the input to the softmax function for class $i$, $K$ is the number of classes, and the denominator is the sum of the exponentials of all the raw class scores in the output layer.
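As a worked illustration of the formula, consider three classes with raw scores z = (2.0, 1.0, 0.1). These values are chosen for the example and are not taken from a trained model; the results are rounded to three decimal places.

```latex
\begin{aligned}
e^{2.0} &\approx 7.389, \quad e^{1.0} \approx 2.718, \quad e^{0.1} \approx 1.105 \\
\sum_{j} e^{z_j} &\approx 7.389 + 2.718 + 1.105 = 11.212 \\
\mathrm{softmax}(z) &\approx \left( \tfrac{7.389}{11.212},\ \tfrac{2.718}{11.212},\ \tfrac{1.105}{11.212} \right) \approx (0.659,\ 0.242,\ 0.099)
\end{aligned}
```

The largest score receives the largest probability, and the three results add up to 1.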

Imagine a neural network tasked with classifying images of handwritten digits (0-9). The final layer might output a vector with 10 numbers, each corresponding to a digit. However, these numbers don't directly represent probabilities. The softmax function steps in to convert this vector into a probability distribution for each digit (class).

Here’s How Softmax Achieves This Magic

- Input: The softmax function takes a vector z of real numbers, representing the outputs from the final layer of the neural network.

- Exponentiation: Each element in z is exponentiated using the mathematical constant e (approximately 2.718). This ensures all values become positive.

- Normalization: The exponentiated values are then divided by the sum of all exponentiated values. This normalization step guarantees the output values sum to 1, a crucial property of a probability distribution.
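The three steps above can be sketched in plain Python for the handwritten-digit case. The logits below are made up for illustration (they are an assumption, not output from a real model), and the variable names are only for this sketch.

```python
from math import exp

# Input: hypothetical logits for the digits 0-9 (made up for illustration).
logits = [1.2, 0.3, -0.8, 2.5, 0.0, -1.1, 0.7, 4.0, 1.9, -0.4]

# Exponentiation: every value becomes strictly positive.
exponentiated = [exp(z) for z in logits]

# Normalization: divide each value by the total so the results sum to 1.
total = sum(exponentiated)
probabilities = [value / total for value in exponentiated]

# The digit with the highest probability is the network's prediction.
predicted_digit = max(range(len(probabilities)), key=probabilities.__getitem__)

for digit, p in enumerate(probabilities):
    print(f"digit {digit}: {p:.3f}")
print("sum of probabilities:", round(sum(probabilities), 6))
print("most likely digit:", predicted_digit)
```

Because exponentiation preserves order, the digit with the largest logit (here, digit 7) also ends up with the largest probability.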

Properties of the Softmax Function

- Output range: The softmax function guarantees that the output values lie between 0 and 1, satisfying the definition of probabilities.

- The sum of probabilities: As mentioned earlier, the sum of all outputs from the softmax function always equals 1.

- Interpretability: Softmax transforms the raw outputs into probabilities, making the network's predictions easier to understand and analyze.
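The output-range and sum properties can be checked empirically. The sketch below is one way to do so, assuming a small helper named `softmax` written for this example and randomly generated logits in a moderate range; it is a sanity check, not a proof.

```python
import math
import random

def softmax(logits):
    """Plain-Python softmax; subtracts the max logit before exponentiating."""
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

random.seed(0)  # fixed seed so the check is repeatable
for _ in range(100):
    logits = [random.uniform(-5.0, 5.0) for _ in range(4)]
    probs = softmax(logits)
    # Output range: every value lies strictly between 0 and 1.
    assert all(0.0 < p < 1.0 for p in probs)
    # Sum of probabilities: the values add up to 1 (within rounding error).
    assert math.isclose(sum(probs), 1.0, abs_tol=1e-9)

print("range and sum checks passed")
```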

Applications of Softmax Activation

Softmax is predominantly used in multi-class classification problems. From image recognition and Natural Language Processing (NLP) to recommendation systems, its ability to handle multiple classes efficiently makes it indispensable. For instance, in a neural network model predicting types of fruits, softmax would help determine the probability of an image being an apple, orange, or banana, ensuring the sum of these probabilities equals one.

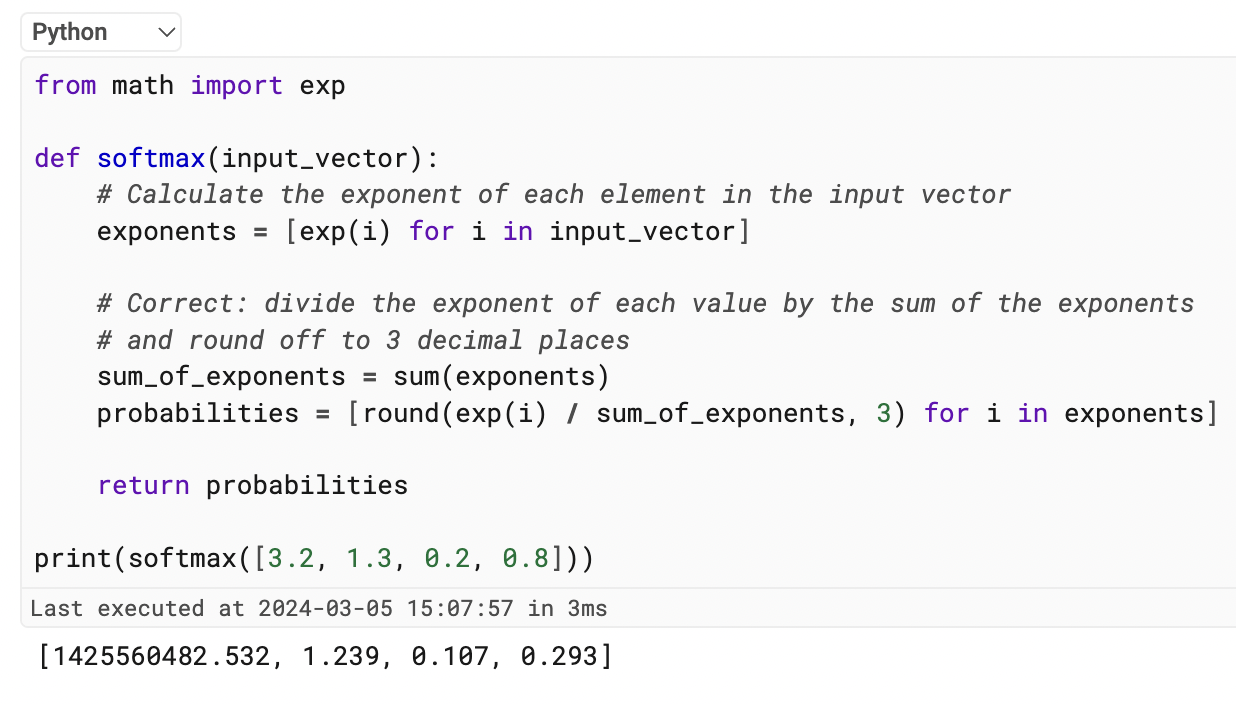

In Python, we can implement Softmax as follows:

    from math import exp

    def softmax(input_vector):
        # Calculate the exponent of each element in the input vector
        exponents = [exp(value) for value in input_vector]
        # Divide each exponent by the sum of the exponents
        # and round off to 3 decimal places
        sum_of_exponents = sum(exponents)
        probabilities = [round(exponent / sum_of_exponents, 3) for exponent in exponents]
        return probabilities

    print(softmax([3.2, 1.3, 0.2, 0.8]))

The output will be as follows:

    [0.775, 0.116, 0.039, 0.07]

Comparison With Other Activation Functions

Sigmoid and ReLU (Rectified Linear Unit) serve different purposes from softmax. ReLU is typically used in hidden layers as a non-linear transformation, and sigmoid is commonly used for binary classification outputs, whereas softmax is suited to the output layer in multi-class scenarios. Sigmoid squashes each output independently into the range between 0 and 1, but it doesn't ensure that the outputs sum to 1, which makes softmax more appropriate when the classes are mutually exclusive. ReLU, known for mitigating the vanishing gradient problem, doesn't produce probabilities at all, which highlights softmax's role in classification contexts.
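The difference is easy to see by applying all three functions to the same scores. The following is a minimal sketch in plain Python; the helper functions and the score values are written for this illustration only.

```python
import math

def sigmoid(x):
    # Squashes a single value into (0, 1), independently of other values.
    return 1.0 / (1.0 + math.exp(-x))

def relu(x):
    # Passes positive values through and clips negative values to 0.
    return max(0.0, x)

def softmax(values):
    # Normalizes a whole vector so its entries sum to 1.
    shift = max(values)
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

scores = [2.0, 1.0, -1.0]  # illustrative values

sigmoid_out = [sigmoid(s) for s in scores]
relu_out = [relu(s) for s in scores]
softmax_out = softmax(scores)

print("sigmoid:", [round(v, 3) for v in sigmoid_out], "sum =", round(sum(sigmoid_out), 3))
print("relu:   ", relu_out, "sum =", sum(relu_out))
print("softmax:", [round(v, 3) for v in softmax_out], "sum =", round(sum(softmax_out), 3))
```

Only the softmax output forms a probability distribution over the three scores; the sigmoid outputs each fall between 0 and 1 but their sum exceeds 1, and the ReLU outputs are not bounded above.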

Softmax in Action: Multi-Class Classification

Softmax shines in multi-class classification problems where the input can belong to one of several discrete categories. Here are some real-world examples:

- Image recognition: Classifying images of objects, animals, or scenes, where each image can belong to a specific class (e.g., cat, dog, car).

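- Spam detection: Classifying emails as spam or not spam. This is the two-class case, where softmax over two outputs is equivalent to a sigmoid applied to a single output.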

- Sentiment analysis: Classifying text into categories like positive, negative, or neutral sentiment.

In these scenarios, the softmax function provides a probabilistic interpretation of the network's predictions. For instance, in image recognition, the softmax output might indicate a 70% probability of the image being a cat and a 30% probability of it being a dog.
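A short sketch of how such an output might be read in code is shown below. The class names and logits are hypothetical, chosen so that the first class clearly dominates; a real model would supply its own scores.

```python
import math

def softmax(logits):
    # Subtract the largest logit before exponentiating to avoid overflow.
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

class_names = ["cat", "dog", "car"]  # hypothetical labels
logits = [2.1, 1.25, -3.0]           # hypothetical raw scores from a model

probabilities = softmax(logits)

# Rank the classes from most to least likely.
ranked = sorted(zip(class_names, probabilities), key=lambda pair: pair[1], reverse=True)
for name, p in ranked:
    print(f"{name}: {p:.1%}")

predicted_class = ranked[0][0]
print("prediction:", predicted_class)
```

Reporting the full ranked list, rather than only the top class, keeps the network's confidence visible to whoever consumes the prediction.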

Advantages of Using Softmax

Using the softmax activation function has several advantages:

- Probability distribution: Softmax provides a well-defined probability distribution for each class, enabling us to assess the network's confidence in its predictions.

- Interpretability: Probabilities are easier to understand and communicate compared to raw output values. This allows for better evaluation and debugging of the neural network.

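- Numerical stability: Softmax can be computed in a numerically stable way by subtracting the largest logit from every input before exponentiating. This leaves the output unchanged and avoids the overflow that a naive implementation can hit on large logits.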
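On the numerical stability point, it helps to compare a naive implementation with one that subtracts the largest logit first, which is the same shift the NumPy tutorial later in this article applies. The sketch below assumes plain Python floats and deliberately extreme logits; the function names are hypothetical.

```python
import math

def naive_softmax(logits):
    # Exponentiates the raw logits directly; large inputs can overflow.
    exps = [math.exp(z) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def stable_softmax(logits):
    # Shifting by the max logit keeps every exponent at or below 0.
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

large_logits = [1000.0, 999.0, 998.0]  # deliberately extreme values

try:
    naive_softmax(large_logits)
    naive_overflowed = False
except OverflowError:
    # math.exp(1000.0) exceeds the range of a Python float.
    naive_overflowed = True

stable_result = stable_softmax(large_logits)
print("naive overflowed:", naive_overflowed)
print("stable result:", [round(p, 3) for p in stable_result])
```

The shift does not change the result mathematically, because the common factor cancels between numerator and denominator; on small inputs both functions return the same probabilities up to rounding.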

Softmax Activation Function Tutorial

Let’s see how the softmax activation function works through a simple tutorial.

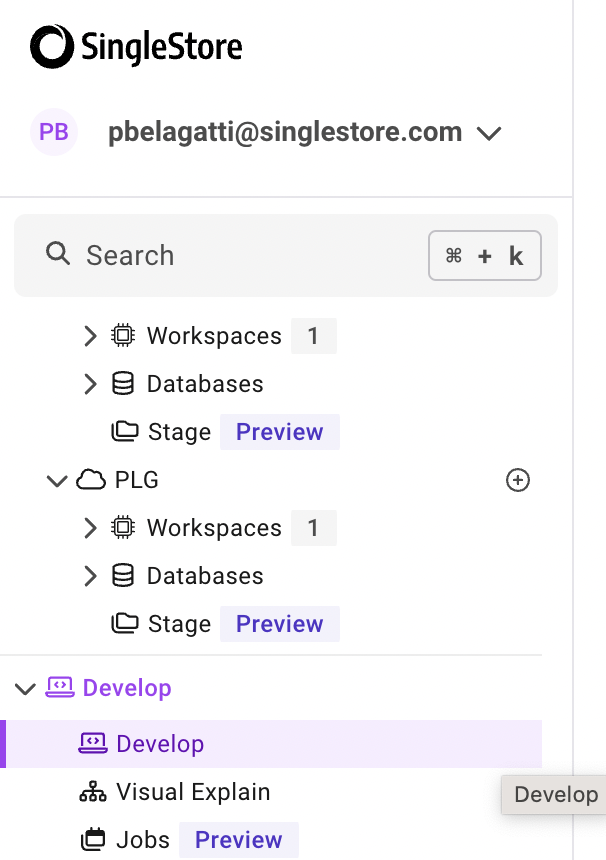

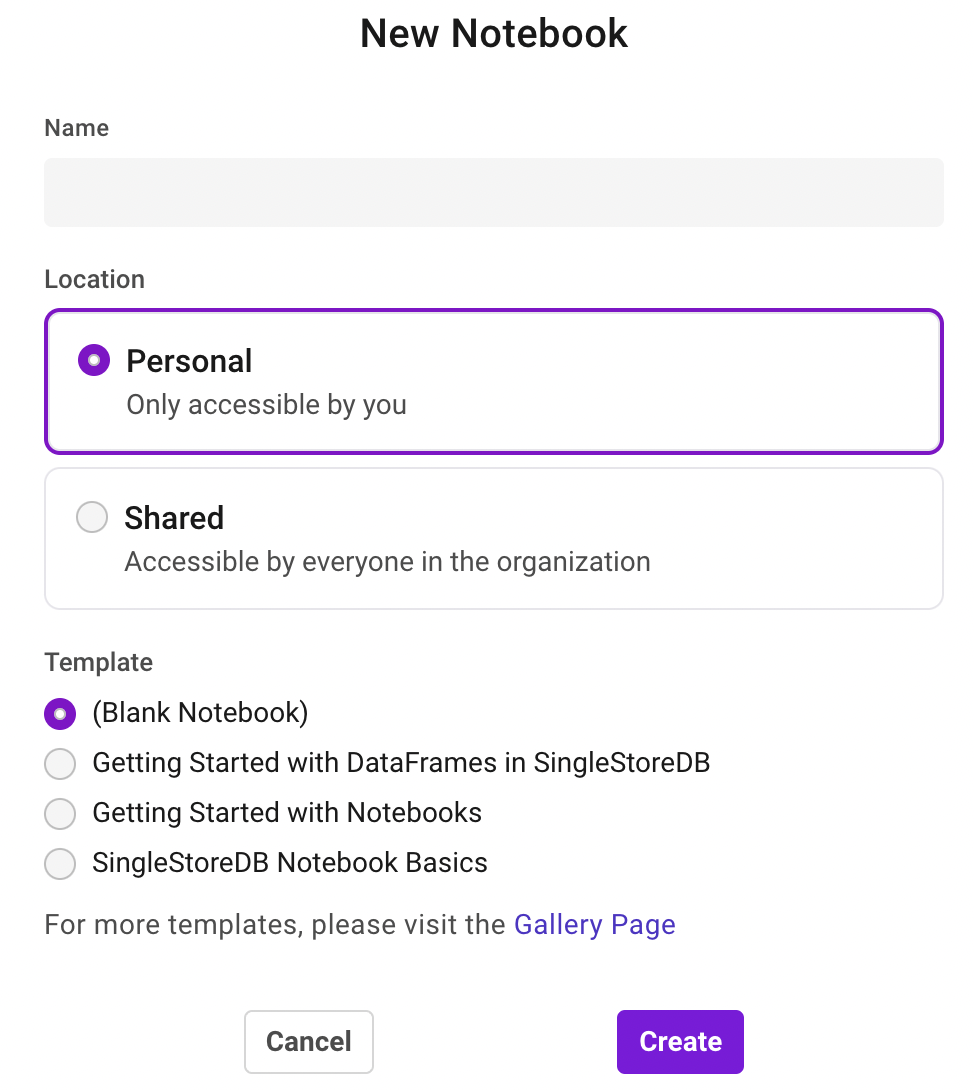

Let’s use the SingleStore Notebook feature to carry out this tutorial. If you haven’t already, activate your free SingleStore trial to start using Notebooks.

Once you sign up, go to the ‘Develop’ option and create a blank Notebook.

Name your Notebook and start adding the following instructions.

The tutorial illustrates the calculation of softmax probabilities from a set of logits, showcasing its application in converting raw scores into probabilities that sum to 1.

Step 1: Install NumPy and Matplotlib Libraries

    !pip install numpy
    !pip install matplotlib

Step 2: Import Libraries

    import numpy as np
    import matplotlib.pyplot as plt

Step 3: Implement the Softmax Function

Implement the softmax function using NumPy. This function takes a vector of raw scores (logits) and returns a vector of probabilities.

    def softmax(logits):
        exp_logits = np.exp(logits - np.max(logits))  # Improve numerical stability
        probabilities = exp_logits / np.sum(exp_logits)
        return probabilities

Step 4: Create a Set of Logits

Define a set of logits as a NumPy array. These logits can be raw scores from any model output you want to convert to probabilities.

    logits = np.array([2.0, 1.0, 0.1])

Step 5: Apply the Softmax Function

Use the softmax function defined earlier to convert the logits into probabilities.

    probabilities = softmax(logits)
    print("Probabilities:", probabilities)

Step 6: Visualize the Results

To better understand the softmax function's effect, visualize the logits and the resulting probabilities using Matplotlib.

    # Plotting
    labels = ['Class 1', 'Class 2', 'Class 3']
    x = range(len(labels))

    plt.figure(figsize=(10, 5))

    plt.subplot(1, 2, 1)
    plt.bar(x, logits, color='red')
    plt.title('Logits')
    plt.xticks(x, labels)

    plt.subplot(1, 2, 2)
    plt.bar(x, probabilities, color='green')
    plt.title('Probabilities after Softmax')
    plt.xticks(x, labels)

    plt.show()

The complete tutorial code can be found here in this repository.

The softmax function is an essential component of neural networks for multi-class classification tasks. It empowers networks to make probabilistic predictions, enabling a more nuanced understanding of their outputs. As deep learning continues to evolve, the softmax function will remain a cornerstone, providing a bridge between the raw computations of neural networks and the world of interpretable probabilities.

Published at DZone with permission of Pavan Belagatti, DZone MVB. See the original article here.
