Understanding the Deployment of Deep Learning Algorithms on Embedded Platforms

Deploying deep learning on embedded platforms involves optimizing models and meeting real-time performance requirements so that inference runs efficiently and enables new applications.


Embedded platforms have become an integral part of daily life, changing how we interact with technology. Equipped with deep learning algorithms, they enable smart devices, autonomous systems, and intelligent applications. Deploying deep learning on these platforms means optimizing and adapting models to run efficiently on resource-constrained systems such as microcontrollers, FPGAs, and CPUs. This often requires model compression, quantization, and related techniques that reduce model size and computational requirements with minimal loss of accuracy.

The global market for embedded systems has expanded rapidly and is expected to reach USD 170.04 billion in 2023. According to Precedence Research, it is projected to reach approximately USD 258.6 billion by 2032, a compound annual growth rate (CAGR) of around 4.77% from 2023 to 2032. In 2022, North America was the dominant region, accounting for 51% of total revenue, while Asia Pacific held 24%. By hardware platform, the ASIC segment held a 31.5% market share, and the microprocessor segment captured 22.3% of revenue in 2022.

Embedded platforms have limited memory, processing power, and energy resources compared to traditional computing systems. Therefore, deploying deep learning algorithms on these platforms necessitates careful consideration of hardware constraints and trade-offs between accuracy and resource utilization.

The deployment includes converting the trained deep learning model into a format compatible with the target embedded platform. This involves converting the model to a framework-specific format or optimizing it for specific hardware accelerators or libraries.

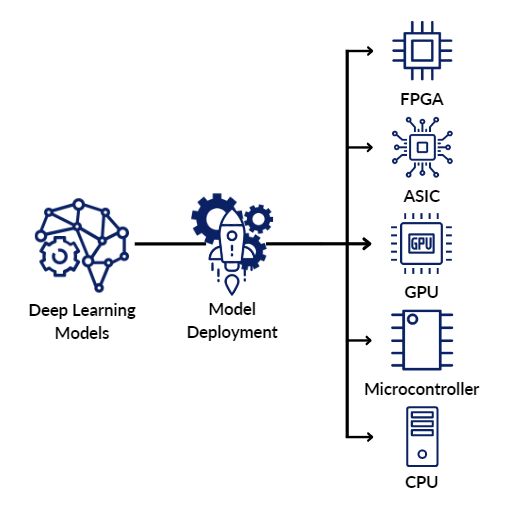

Additionally, deploying deep learning algorithms on embedded platforms often involves hardware acceleration, using GPUs, specialized neural network accelerators, or custom hardware designs such as FPGAs or ASICs. These accelerators can significantly improve the inference speed and energy efficiency of deep learning algorithms on embedded platforms. Deployment typically involves the considerations described in the sections below.

Deep learning model deployment on various embedded platforms.

Optimizing Deep Learning Models for Embedded Deployment

Deploying deep learning algorithms on embedded platforms requires careful optimization and adaptation. Model compression, quantization, and pruning reduce the model's size and computational requirements, ideally with little loss of accuracy.
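To make quantization and pruning concrete, here is a minimal pure-Python sketch of two common variants: affine 8-bit quantization and magnitude-based pruning. It is an illustration under simplifying assumptions (a flat list of weights, one scale for the whole list), not the procedure used by any particular framework, and the function names are hypothetical.

```python
def quantize_int8(weights):
    """Affine-quantize a list of floats to int8 values plus (scale, zero_point)."""
    # Extend the range to include 0.0 so that zero is exactly representable.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    span = w_max - w_min
    scale = span / 255.0 if span > 0 else 1.0  # avoid division by zero
    zero_point = round(-128 - w_min / scale)
    # Map each weight to an integer and clamp it to the int8 range.
    q = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Recover approximate float values from quantized integers."""
    return [(v - zero_point) * scale for v in q]


def prune_by_magnitude(weights, sparsity):
    """Zero out the given fraction of weights with the smallest absolute value."""
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


# Hypothetical weights, chosen only for illustration
weights = [-0.8, -0.1, 0.0, 0.3, 1.2]
q, scale, zp = quantize_int8(weights)
restored = dequantize(q, scale, zp)
print("int8 values:", q)
print("max round-trip error:", max(abs(a - b) for a, b in zip(weights, restored)))
print("50% pruned:", prune_by_magnitude([0.5, -0.01, 0.2, -0.9], 0.5))
```

Each quantized weight takes one byte instead of four, at the cost of a round-trip error on the order of one quantization step. Whether that error is acceptable depends on the model and has to be checked against its accuracy on real data.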

Hardware Considerations for Embedded Deployment

Understanding the unique hardware constraints of embedded platforms is crucial for successful deployment. Factors such as available memory, processing power, and energy limitations need to be carefully analyzed. Selecting deep learning models and architectures that effectively utilize the resources of the target embedded platform is essential for optimal performance and efficiency.
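A quick back-of-the-envelope check can show early on whether a model has any chance of fitting a target. The sketch below estimates weight storage from the parameter count and bit width and compares it, together with an assumed peak activation size, against a single memory budget. All figures and names are hypothetical, and a single budget is a simplification: many microcontrollers keep weights in flash and activations in RAM, which would be checked separately.

```python
def model_size_bytes(num_params, bits_per_param):
    """Storage needed for the weights alone, rounded up to whole bytes."""
    return (num_params * bits_per_param + 7) // 8


def fits_in_budget(num_params, bits_per_param, activation_bytes, budget_bytes):
    """True if weights plus peak activation memory fit in the given budget."""
    total = model_size_bytes(num_params, bits_per_param) + activation_bytes
    return total <= budget_bytes


# Hypothetical example: 250,000 parameters, 64 KiB of activations, 512 KiB budget
PARAMS = 250_000
ACTIVATIONS = 64 * 1024
BUDGET = 512 * 1024

for bits in (32, 8):
    size = model_size_bytes(PARAMS, bits)
    ok = fits_in_budget(PARAMS, bits, ACTIVATIONS, BUDGET)
    print(f"{bits}-bit weights: {size} bytes, fits: {ok}")
```

In this made-up case the 32-bit model exceeds the budget while the 8-bit version fits, which is the kind of result that motivates quantization before any on-device testing.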

Converting and Adapting Models for Embedded Systems

Converting trained deep learning models into formats compatible with embedded platforms is a critical step in the deployment process. Formats such as TensorFlow Lite, which is tied to its framework, and ONNX, an open interchange format, are commonly used. Additionally, adapting models to leverage specialized hardware, such as GPUs, neural network accelerators, or custom FPGA or ASIC designs, can significantly enhance inference speed and energy efficiency on embedded platforms.
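On microcontrollers without a filesystem, one common pattern is to embed the converted model file directly in firmware as a C byte array. The sketch below shows the idea in pure Python. The function name, the default array name `g_model_data`, and the placeholder bytes are assumptions made for illustration; in practice the input would be the bytes of a model file produced by the framework's own converter.

```python
def bytes_to_c_array(data, name="g_model_data", per_line=12):
    """Render raw model bytes as C source defining a const byte array."""
    lines = []
    for i in range(0, len(data), per_line):
        chunk = data[i:i + per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    body = "\n".join(lines)
    return (
        f"const unsigned char {name}[] = {{\n{body}\n}};\n"
        f"const unsigned int {name}_len = {len(data)};\n"
    )


# Placeholder bytes standing in for a real serialized model file
placeholder_model = bytes(range(6))
print(bytes_to_c_array(placeholder_model, per_line=4))
```

The generated source can be compiled into the firmware image, so the inference runtime reads the model from memory rather than from storage.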

Real-Time Performance and Latency Constraints

In the domain of embedded systems, real-time performance and low latency are crucial. Deep learning algorithms must meet the timing requirements of specific applications, ensuring prompt and efficient execution of the inference process. Balancing real-time demands with the limited resources of embedded platforms requires careful optimization and fine-tuning.
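Timing requirements are easier to reason about when they are measured. The following sketch times repeated calls to an inference function and checks a tail percentile against a deadline. The `fake_infer` function, the 33.3 ms deadline, and the choice of the 99th percentile are assumptions for illustration. Timings taken on a development machine say little about the target device, so the same measurement would need to run on the actual hardware.

```python
import math
import time


def measure_latency_ms(infer, sample, runs=100, warmup=10):
    """Return sorted per-call latencies in milliseconds for infer(sample)."""
    for _ in range(warmup):
        infer(sample)  # warm caches before timing
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    return sorted(timings)


def percentile(sorted_values, pct):
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(len(sorted_values) * pct / 100))
    return sorted_values[rank - 1]


def meets_deadline(sorted_latencies_ms, deadline_ms, pct=99):
    """True if the chosen percentile latency is within the deadline."""
    return percentile(sorted_latencies_ms, pct) <= deadline_ms


def fake_infer(x):
    """Stand-in for a real model call; just does some arithmetic."""
    return sum(v * v for v in x)


latencies = measure_latency_ms(fake_infer, [0.1] * 256)
print("p99 latency (ms):", percentile(latencies, 99))
print("meets 33.3 ms deadline:", meets_deadline(latencies, deadline_ms=33.3))
```

Checking a high percentile rather than the mean reflects the fact that a real-time system is judged by its slowest responses, not its average ones.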

If the deployed model doesn't meet the desired performance or resource constraints, an iterative refinement process may be necessary. This could involve further model optimization, hardware tuning, or algorithmic changes to improve the performance or efficiency of the deployed deep learning algorithm.

Throughout the deployment process, it is important to consider factors such as real-time requirements, latency constraints, and the specific needs of the application to ensure that the deployed deep learning algorithm functions effectively on the embedded platform.

Frameworks and Tools for Deploying Deep Learning Algorithms

Several frameworks and tools have emerged to facilitate the deployment of deep learning algorithms on embedded platforms. TensorFlow Lite, PyTorch Mobile, Caffe2, OpenVINO, and the Arm CMSIS-NN library are among the popular choices, providing optimized libraries and runtime environments for efficient execution on embedded devices.

Let us see a few use cases where deep learning model deployment on embedded edge platforms is suitable.

- Autonomous Vehicles: Autonomous vehicles rely heavily on computer vision algorithms trained using deep learning techniques such as Convolutional Neural Networks (CNNs) or Recurrent Neural Networks (RNNs). These systems process images from cameras mounted on the vehicle to detect objects such as pedestrians crossing the street, cars parked along the curb, and cyclists. The vehicle then acts on these detections.

- Healthcare and Remote Monitoring: Deep learning is rapidly gaining traction in the healthcare industry. For instance, wearable sensors and devices use patient data to offer real-time insights into health metrics such as overall health status, blood sugar levels, blood pressure, and heart rate. These technologies use deep learning algorithms to analyze and interpret the collected data, providing valuable information for monitoring and managing patient conditions.

Future Trends and Advancements

The future holds exciting advancements in deploying deep learning algorithms on embedded platforms. Edge computing, with AI running at the edge, enables real-time decision-making and reduced latency. The integration of deep learning with Internet of Things (IoT) devices further extends the possibilities of embedded AI. Custom hardware designs tailored for deep learning algorithms on embedded platforms are also anticipated, offering enhanced efficiency and performance.

Deploying deep learning algorithms on embedded platforms involves a structured process that optimizes models, considers hardware constraints, and addresses real-time performance requirements. By following this process, businesses can harness the power of AI on resource-constrained systems, driving innovation, streamlining operations, and delivering exceptional products and services. Embracing this technology empowers businesses to unlock new possibilities, leading to sustainable growth and success in today's AI-driven world.

Real-time performance requirements and latency constraints remain critical considerations throughout, because the deployed algorithm is only useful if inference completes within the time the application allows.

