How to Use Python to Loop Through HTML Tables and Scrape Tabular Data

Iterating through HTML tables is tricky, so we've created this guide to help you understand how to use Python to extract tabular data from public HTML tables.

Join the DZone community and get the full member experience.

Join For FreeTabular data is one of the best sources of data on the web. They can store a massive amount of useful information without losing its easy-to-read format, making it gold mines for data-related projects.

Whether it is to scrape football data or extract stock market data, we can use Python to quickly access, parse and extract data from HTML tables, thanks to Requests and Beautiful Soup.

Also, we have a little black and white surprise for you at the end, so keep reading!

Understanding HTML Table’s Structure

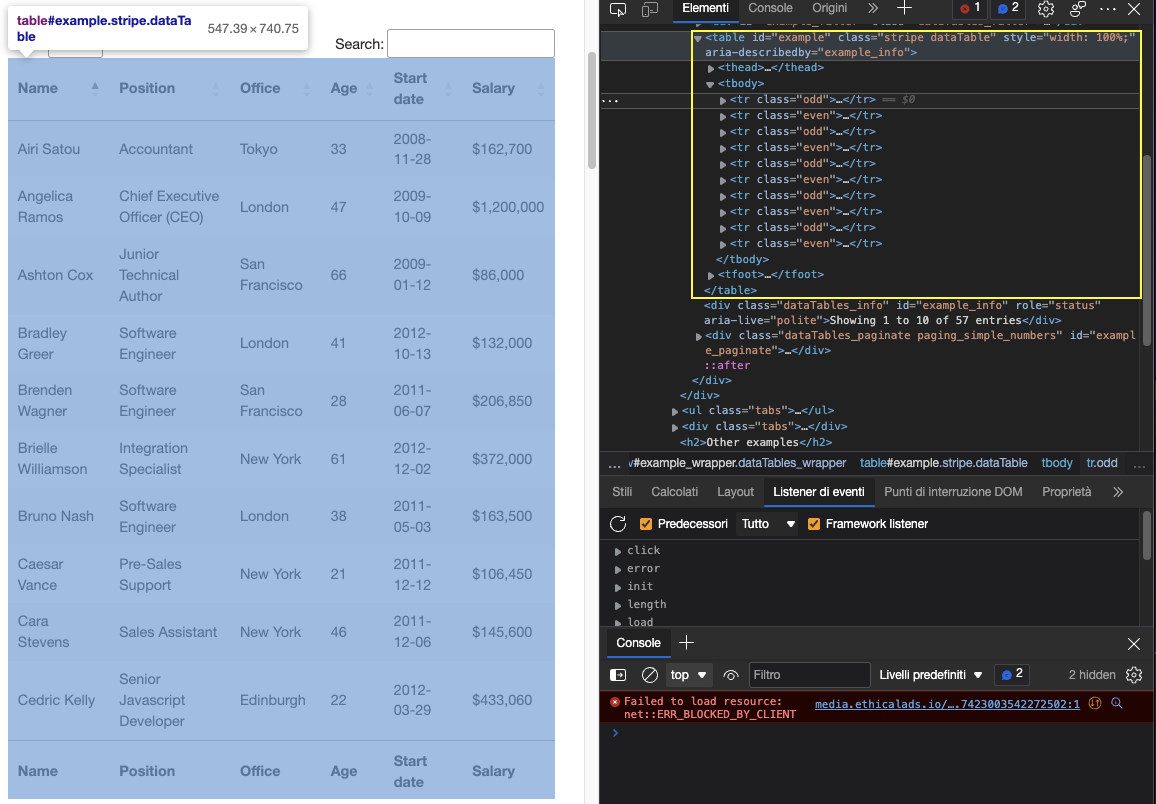

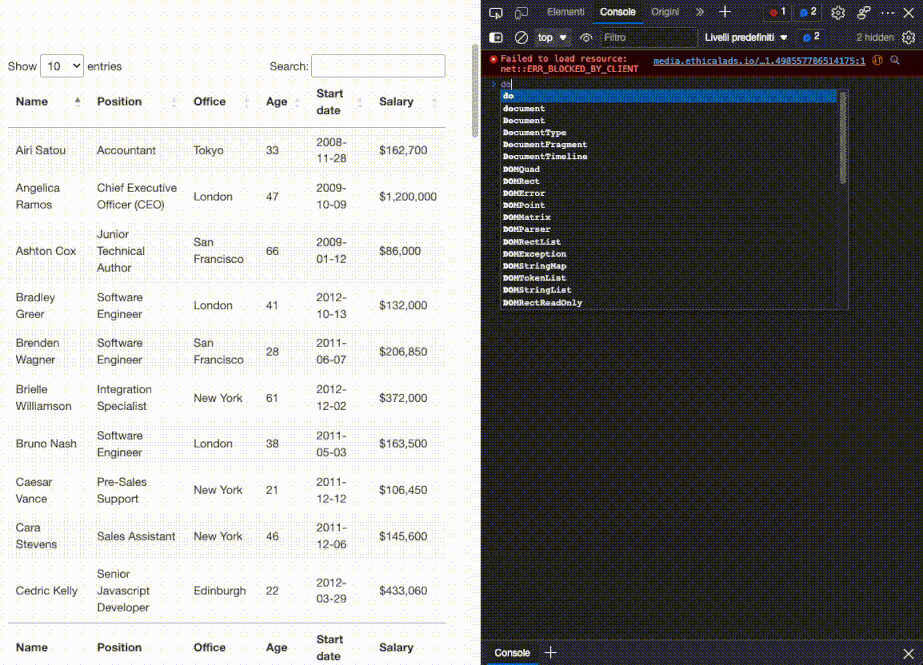

Visually, an HTML table is a set of rows and columns displaying information in a tabular format. For this tutorial, we’ll be scraping the table above:

To be able to scrape the data contained within this table, we’ll need to go a little deeper into its coding.

Generally speaking, HTML tables are actually built using the following HTML tags:

- <table>: It marks the start of an HTML table

- <th> or <thead>: Defines a row as the heading of the table

- <tbody>: Indicates the section where the data is

- <tr>: Indicates a row in the table

- <td>: Defines a cell in the table

However, as we’ll see in real-life scenarios, not all developers respect these conventions when building their tables, making some projects harder than others. Still, understanding how they work is crucial for finding the right approach.

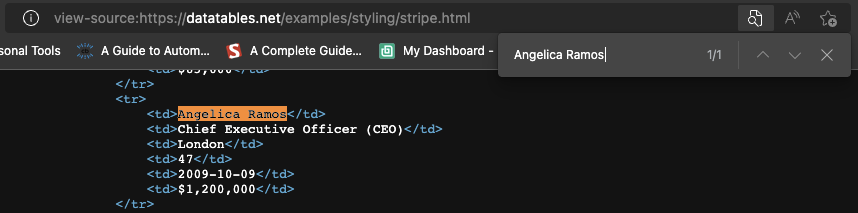

Let’s enter the table’s URL (https://datatables.net/examples/styling/stripe.html) in our browser and inspect the page to see what’s happening under the hood.

This is why this is a great page to practice scraping tabular data with Python. There’s a clear <table> tag pair opening and closing the table and all the relevant data is inside the <tbody> tag. It only shows ten rows which matches the number of entries selected on the front-end.

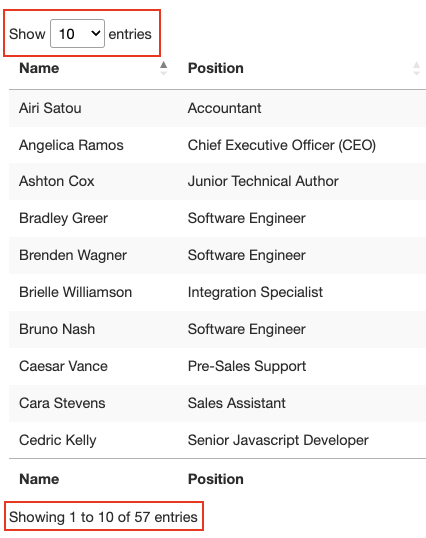

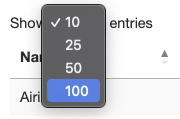

A few more things to know about this table is that it has a total of 57 entries we’ll want to scrape and there seems to be two solutions to access the data. The first is clicking the drop-down menu and selecting “100” to show all entries:

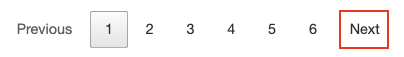

Or clicking on the next button to move through the pagination.

So which one is gonna be? Either of these solutions will add extra complexity to our script, so instead, let’s check where’s the data getting pulled from first.

Of course, because this is an HTML table, all the data should be on the HTML file itself without the need for an AJAX injection. To verify this, Right Click > View Page Source. Next, copy a few cells and search for them in the Source Code.

We did the same thing for a couple more entries from different paginated cells and yes, it seems like all our target data is in there even though the front-end doesn’t display it.

And with this information, we’re ready to move to the code!

Scraping HTML Tables Using Python’s Beautiful Soup

Because all the employee data we’re looking to scrape is on the HTML file, we can use the Requests library to send the HTTP request and parse the respond using Beautiful Soup.

Note: If you’re new to web scraping, we’ve created a web scraping in Python tutorial for beginners. Although you’ll be able to follow along without experience, it’s always a good idea to start from the basics.

1. Sending Our Main Request

Let’s create a new directory for the project named python-html-table, then a new folder named bs4-table-scraper and finally, create a new python_table_scraper.py file.54

From the terminal, let’s pip3 install requests beautifulsoup4 and import them to our project as follows:

import requests

from bs4 import BeautifulSoupTo send an HTTP requests with Requests, all we need to do is set an URL and pass it through requests.get(), store the returned HTML inside a response variable and print response.status_code.

Note: If you’re totally new to Python, you can run your code from the terminal with the command python3 python_table_scraper.py.

url = 'https://datatables.net/examples/styling/stripe.html'

response = requests.get(url)

print(response.status_code)If it’s working, it’s going to return a 200 status code. Anything else means that your IP is getting rejected by the anti-scraping systems the website has in placed. A potential solution is adding custom headers to your script to make your script look more human – but that might not be sufficient. Another solution is using an web scraping API to handle all these complexities for you.

2. Building the Parser Using Beautiful Soup

Before we can extract the data, we need to turn the raw HTML into formatted or parsed data. We’ll store this parsed HTML into a soup object like this:

soup = BeautifulSoup(response.text, 'html.parser')From here, we can traverse the parse tree using the HTML tags and their attributes.

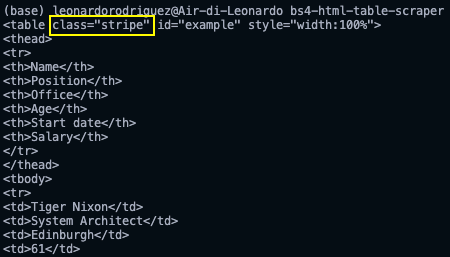

If we go back to the table on the page, we’ve already seen that the table is enclosed between <table> tags with the class stripe dataTable, which we can use to select the table.

table = soup.find('table', class_ = 'stripe')

print(table)Note: After testing, adding the second class (dataTable) didn’t return the element. In fact, in the return elements, the table’s class is only stripe. You can also use id = ‘example’.

Here’s what it returns:

Now that we grabbed the table, we can loop through the rows and grab the data we want.

3. Looping Through the HTML Table

Thinking back to the table’s structure, every row is represented by a <tr> element, and within them there’s <td> element containing data, all of this is wrapped between a <tbody> tag pair.

To extract the data, we’ll create two for looks, one to grab the <tbody> section of the table (where all rows are) and another to store all rows into a variable we can use:

for employee_data in table.find_all('tbody'):

rows = employee_data.find_all('tr')

print(rows)In rows we’ll store all the <tr> elements found within the body section of the table. If you’re following our logic, the next step is to store each individual row into a single object and loop through them to find the desired data.

For starters, let’s try to pick the first employee’s name on our browser’s console using the .querySelectorAll() method. A really usuful feature of this method is that we can go deeper and deeper into the hierarchy implementing the greater than (>) symbol to define the parent element (on the left) and the child we want to grab (on the right).

document.querySelectorAll('table.stripe &amp;amp;amp;gt; tbody &amp;amp;amp;gt; tr &amp;amp;amp;gt; td')[0]

That couldn’t work any better. As you see, once we grab all <td> elements, these become a nodelist. Because we can’t rely on a class to grab each cell, all we need to know is their position in the index and the first one, name, is 0.

From there, we can write our code like this:

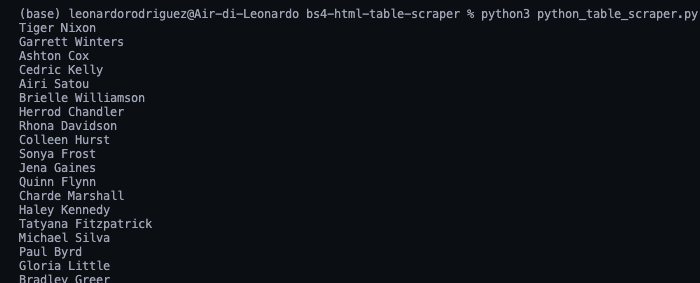

for row in rows:

name = row.find_all('td')[0].text

print(name)In simple terms, we’re taking each row, one by one, and finding all the cells inside, once we have the list, we grab only the first one in the index (position 0) and finish with the .text method to only grab the element’s text, ignoring the HTML data we don’t need.

There they are, a list with all the names employees names! For the rest, we just follow the same logic:

position = row.find_all('td')[1].text

office = row.find_all('td')[2].text

age = row.find_all('td')[3].text

start_date = row.find_all('td')[4].text

salary = row.find_all('td')[5].textHowever, having all this data printed on our console isn’t super helpful. Instead, let’s store this data into a new, more useful format.

4. Storing Tabular Data Into a JSON File

Although we could easily create a CSV file and send our data there, that wouldn’t be the most manageable format if we can to create something new using the scraped data.

Still, here’s a project we did a few months ago explaining how to create a CSV file to store scraped data.

The good news is that Python has its own JSON module for working with JSON objects, so we don’t need to install anything, just import it.

import jsonBut, before we can go ahead and create our JSON file, we’ll need to turn all this scraped data into a list. To do so, we’ll create an empty array outside of our loop.

employee_list = []And then append the data to it, with each loop appending a new object to the array.

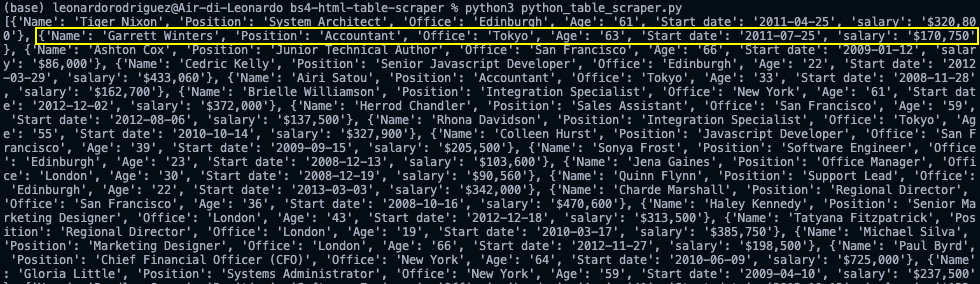

employee_list.append({ 'Name': name, 'Position': position, 'Office': office, 'Age': age, 'Start date': start_date, 'salary': salary })If we print(employee_list), here’s the result:

Still a little messy, but we have a set of objects ready to be transformed into JSON.

Note: As a test, we printed the length of employee_list and it returned 57, which is the correct number of rows we scraped (rows now being objects within the array).

Importing a list to JSON just requires two lines of code:

with open('json_data', 'w') as json_file:

json.dump(employee_list, json_file, indent=2)- First, we open a new file passing in the name we want for the file

(json_data)and ‘w’ as we want to write data to it. - Next, we use the

.dump()function to, well, dump the data from the array(employee_list)andindent=2so every object has it’s own line instead of everything being in one unreadable line.

5. Running the Script and Full Code

If you’ve been following along, your codebase should look like this:

#dependencies

import requests

from bs4 import BeautifulSoup

import json

url = 'http://api.scraperapi.com?api_key=51e43be283e4db2a5afbxxxxxxxxxxx&url=https://datatables.net/examples/styling/stripe.html'

#empty array

employee_list = []

#requesting and parsing the HTML file

response = requests.get(url)

soup = BeautifulSoup(response.text, 'html.parser')

#selecting the table

table = soup.find('table', class_ = 'stripe')

#storing all rows into one variable

for employee_data in table.find_all('tbody'):

rows = employee_data.find_all('tr')

#looping through the HTML table to scrape the data

for row in rows:

name = row.find_all('td')[0].text

position = row.find_all('td')[1].text

office = row.find_all('td')[2].text

age = row.find_all('td')[3].text

start_date = row.find_all('td')[4].text

salary = row.find_all('td')[5].text

#sending scraped data to the empty array

employee_list.append({

'Name': name,

'Position': position,

'Office': office,

'Age': age,

'Start date': start_date,

'salary': salary

})

#importing the array to a JSON file

with open('employee_data', 'w') as json_file:

json.dump(employee_list, json_file, indent=2)Note: We added some comments for context.

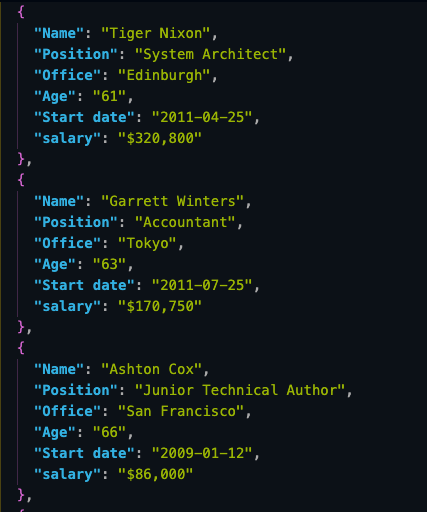

And here’s a look at the first three objects from the JSON file:

Storing scraped data in JSON format allow us to repurpose the information for new applications or

Scraping HTML Tables Using Pandas

Before you leave the page, we want to explore a second approach to scrape HTML tables. In a few lines of code, we can scrape all tabular data from an HTML document and store it into a dataframe using Pandas.

Create a new folder inside the project’s directory (we named it pandas-html-table-scraper) and create a new file name pandas_table_scraper.py.

Let’s open a new terminal and navigate to the folder we just created (cd pandas-html-table-scraper) and from there install pandas:

pip install pandasAnd we import it at the top of the file.

import pandas as pdPandas has a function called read_html() which basically scrape the target URL for us and returns all HTML tables as a list of DataFrame objects.

However, for this to work, the HTML table needs to be structured at least somewhat decently, as the function will look for elements like <table> to identify the tables on the file.

To use the function, let’s create a new variable and pass the URL we used previously to it:

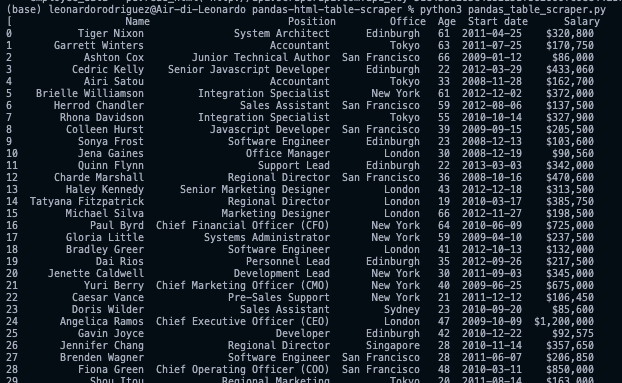

employee_data = pd.read_html('http://api.scraperapi.com?api_key=51e43be283e4db2a5afbxxxxxxxxxxxx&url=https://datatables.net/examples/styling/stripe.html')When printing it, it’ll return a list of HTML tables within the page.

If we compare the first three rows in the DataFrame they’re a perfect match to what we scraped with Beautiful Soup.

To work with JSON, Pandas can has a built-in .to_json() fuction. It’ll convert a list of DataFrame objects into a JSON string

All we need to do is calling the method on our DataFrame and pass in the path, the format (split, data, records, index, etc.) and add the indent to make it more readable:

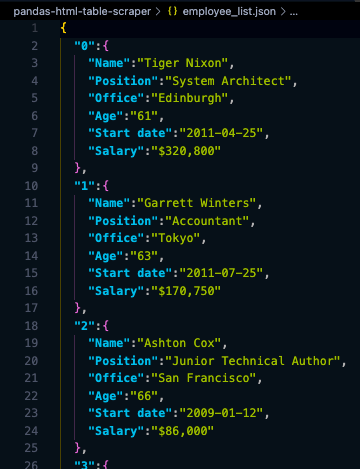

employee_data[0].to_json('./employee_list.json', orient='index', indent=2)If we run our code now, here’s the resulting file:

Notice that we needed to select our table from the index ([0])because .read_html() returns a list not a single object.

Here’s the Full Code for Your Reference

import pandas as pd

employee_data = pd.read_html('http://api.scraperapi.com?api_key=51e43be283e4db2a5afbxxxxxxxxxxxx&url=https://datatables.net/examples/styling/stripe.html')

employee_data[0].to_json('./employee_list.json', orient='index', indent=2)Armed with this new knowledge, you’re ready to start scraping virtually any HTML table on the web. Just remember that if you understand how the website is structured and the logic behind it, there’s nothing you can’t scrape.

That said, these methods will only work as long as the data is inside the HTML file. If you encounter a dynamically generated table, you’ll need to find a new approach.

Published at DZone with permission of Zoltan Bettenbuk. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments