We Turned Off AWS Config

This alternative to AWS Config rules saved this team time, money, and complexity.

Wait, what?

That’s right. It wasn’t a typo. After enabling AWS Config across five of our AWS accounts, we decided to remove all but two of our Config rules. But why?

A little background first. If you are not familiar with AWS Config, it is a service provided by AWS that can be used to evaluate the configuration settings of your AWS resources. This is achieved by enabling AWS Config rules in one or more of your AWS accounts to check your configuration settings against best practices or your desired/approved settings.

There are just over 80 managed rules available at the time of this writing. These rules provide a good baseline for a configuration audit. Not all the rules are applicable, depending on your environments and use cases. For what we needed internally at LifeOmic, we chose 21 out of those and enabled them last year.

Now onto the reasons why we disabled most of them last week.

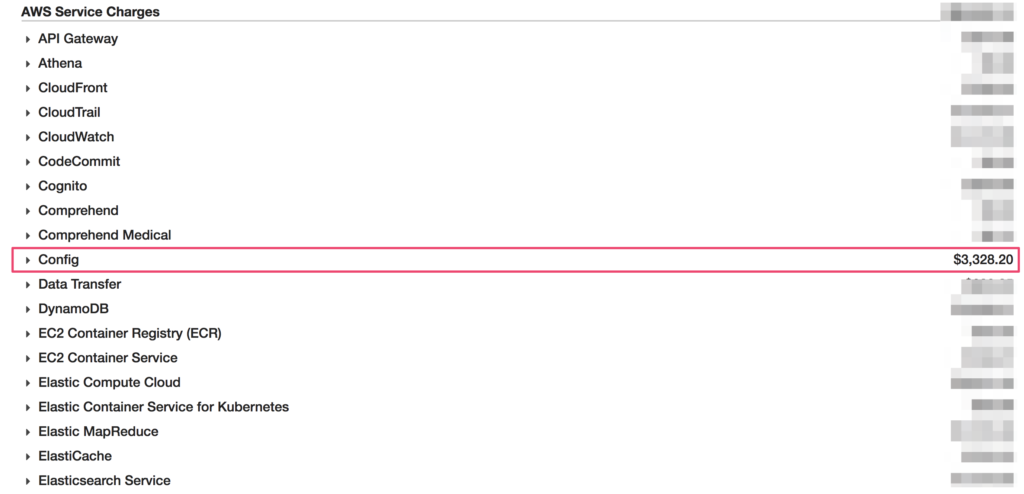

90% Cost Reduction of AWS Config

For the five AWS accounts on which we’ve enabled Config, the service was costing us over $3,300 per month, or around $40,000 a year. This was much higher than anticipated, especially for a lean startup. Had we enabled it across all ten AWS accounts, we would likely have doubled that.
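The arithmetic behind those figures is easy to check. The sketch below uses only the numbers quoted in this article (the $3,300 monthly bill, five of ten accounts, and the 90% reduction in the section title); the linear per-account scaling is an assumption made for illustration, not a statement about how AWS Config is actually billed.

```python
# Figures quoted in the article; everything else is derived from them.
MONTHLY_COST_USD = 3_300   # "over $3,300 per month" across five accounts
ACCOUNTS_ENABLED = 5
ACCOUNTS_TOTAL = 10
SAVINGS_FRACTION = 0.90    # "90% cost reduction"

# Annualize the monthly bill.
annual_cost = MONTHLY_COST_USD * 12

# Assumption: cost scales linearly with the number of accounts.
per_account_monthly = MONTHLY_COST_USD / ACCOUNTS_ENABLED
projected_annual_all_accounts = per_account_monthly * ACCOUNTS_TOTAL * 12

# What is left of the monthly bill after a 90% reduction.
remaining_monthly = MONTHLY_COST_USD * (1 - SAVINGS_FRACTION)

print(f"annual cost, five accounts:     ${annual_cost:,.0f}")
print(f"projected annual, ten accounts: ${projected_annual_all_accounts:,.0f}")
print(f"monthly bill after 90% savings: ${remaining_monthly:,.0f}")
```

Since the article says "over" $3,300 and "over" 90%, these results are rough bounds rather than exact amounts.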

Luckily, we’ve started using JupiterOne. With J1QL queries and the latest alerts capability, we decided to configure the equivalent of AWS Config evaluations in JupiterOne instead. Of the 21 Config rules we had previously enabled, 19 can already be replaced.

| AWS Config Rule | Supported by J1 query/alert |
| --- | --- |
| acm-certificate-expiration-check | Yes |
| ec2-instances-in-vpc | Yes |
| ec2-volume-inuse-check | Yes |
| encrypted-volumes | Yes |
| restricted-ssh | Yes |
| iam-root-access-key-check | Yes |
| iam-password-policy | Yes |
| iam-user-no-policies-check | Yes |
| lambda-function-settings-check | Yes |
| db-instance-backup-enabled | Yes |
| rds-snapshots-public-prohibited | Yes |
| rds-storage-encrypted | Yes |
| dynamodb-throughput-limit-check | No |
| s3-bucket-public-read-prohibited | Yes |
| s3-bucket-public-write-prohibited | Yes |
| s3-bucket-replication-enabled | Yes |
| s3-bucket-server-side-encryption-enabled | Yes |
| s3-bucket-ssl-requests-only | No |
| s3-bucket-logging-enabled | Yes |
| s3-bucket-versioning-enabled | Yes |
| cloudtrail-enabled | Yes |

See more details on the documentation page, and the alerts rule pack on GitHub.

We kept the two rules that are not yet supported by JupiterOne J1QL queries and alerts at this point. But because JupiterOne already integrates with AWS Config, we’ve added a “fallback” J1QL alert rule to check for non-compliant Config rule evaluations:

| JupiterOne Alert Rule | |
| --- | --- |
| name | config-rule-noncompliant |
| description | AWS Config rule evaluation found non-compliant resource configurations. |
| query | Find aws_config_rule with complianceState='NON_COMPLIANT' |
| condition | When query result count > 0 |

This allowed us to minimize the use of AWS Config rules to only those necessary while having consistent and centralized configuration alerts within one place – JupiterOne. And this resulted in over 90% savings to our AWS bill for the Config service.
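For readers who want to see what the fallback condition ("query result count > 0") amounts to outside JupiterOne, here is a minimal sketch in Python. It is not how JupiterOne evaluates the rule. It assumes a response shaped like the one returned by boto3's `describe_compliance_by_config_rule` call on the AWS Config client; the helper names and the sample data are hypothetical.

```python
from typing import Any


def noncompliant_rule_names(response: dict[str, Any]) -> list[str]:
    """Return the names of Config rules reported as NON_COMPLIANT."""
    return [
        item["ConfigRuleName"]
        for item in response.get("ComplianceByConfigRules", [])
        if item.get("Compliance", {}).get("ComplianceType") == "NON_COMPLIANT"
    ]


def should_alert(response: dict[str, Any]) -> bool:
    """Mirror the alert condition: fire when the result count is > 0."""
    return len(noncompliant_rule_names(response)) > 0


# Hand-written sample in the shape of the boto3 response; not real account
# data. With credentials available, a live response would come from
# boto3.client("config").describe_compliance_by_config_rule().
sample_response = {
    "ComplianceByConfigRules": [
        {
            "ConfigRuleName": "dynamodb-throughput-limit-check",
            "Compliance": {"ComplianceType": "COMPLIANT"},
        },
        {
            "ConfigRuleName": "s3-bucket-ssl-requests-only",
            "Compliance": {"ComplianceType": "NON_COMPLIANT"},
        },
    ]
}

print(noncompliant_rule_names(sample_response))
print(should_alert(sample_response))
```

The sample uses the two rules we kept in AWS Config; the compliance states shown are made up for the example.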

Did we go through this trouble just for cost savings? The savings sure are nice, but they are not the only reason.

Much More Flexible Tuning

Previously, with AWS Config rules, it was not easy to add contextual filters to the rule configuration in order to reduce false positives.

For example, the “s3-bucket-public-read-prohibited” rule from AWS Config does not take any additional parameters.

What if I have certain S3 buckets that are hosting public resources and therefore are meant to be publicly readable?

With JupiterOne’s query, it is very simple to tune those out by adding the classification property/tag filter to the J1QL query:

Find aws_s3_bucket with classification != 'public'
  that ALLOWS as grant Everyone where grant.permission='READ'

Similarly, if we want to take into account additional contexts such as production status and classification label for “s3-bucket-replication-enabled” and “s3-bucket-versioning-enabled,” we can add those filters easily to the J1QL query:

// s3-bucket-replication-enabled
// find production buckets that do not have replication enabled
Find aws_s3_bucket with tag.Production = true
  and (replicationEnabled != true or destinationBuckets = undefined)

// s3-bucket-versioning-enabled
// find production buckets classified as critical without versioning or
// mfaDelete enabled
Find aws_s3_bucket
  with tag.Production = true and classification = 'critical'
  and (versioningEnabled != true or mfaDelete != true)

We can apply the same pattern to easily tune any rule to reduce false positives. We did the same for the “fallback” config-rule-noncompliant rule:

Find aws_config_rule with compliant = false

that evaluates * with

tag.Production = true or

classification = ‘critical’ or

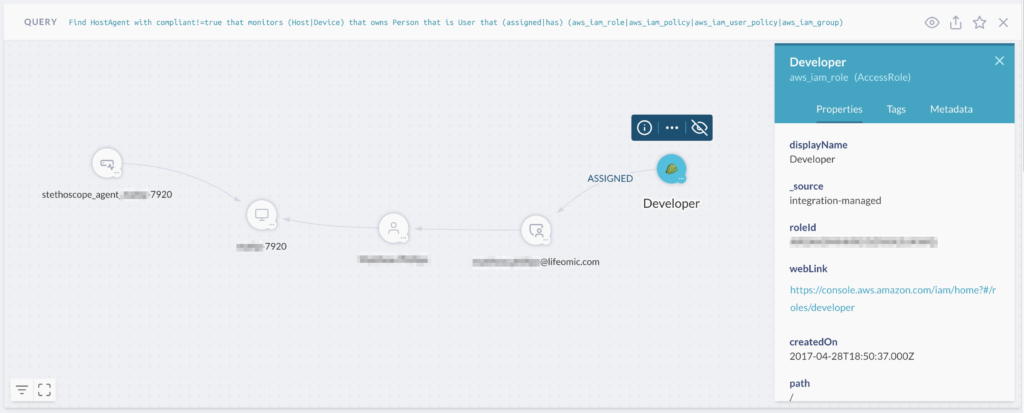

criticality >= 9Additionally, we leverage the power of relationships from the JupiterOne knowledge graph to create more precise targets. For example, we can correlate users who have been assigned access to our production AWS accounts via SAML single sign-on, the user’s endpoint devices, and the compliance status on those devices to create a query/alert only when those privileged users have endpoints that fall out of compliance:

Find HostAgent with compliant!=true
  that monitors (Host|Device)
  that owns Person
  that is User
  that (assigned|has)
    (aws_iam_role|aws_iam_policy|aws_iam_user_policy|aws_iam_group)
    with tag.Production=true

Oh right, if you are interested, here’s what the graph from the above query looks like:

Reduced Complexity

Because of abstract class labeling assigned by the JupiterOne data model, we can use abstract rules to reduce the number of rules we have to manage and the number of alerts to analyze. Here is an example:

If we want to check to see if our production data stores have enabled encryption across the board, we may need one AWS Config rule to cover each data store type:

- S3 buckets

- RDS instances

- DynamoDB tables

- EBS volumes

Within JupiterOne, all of the above entities have been assigned an abstract class label: DataStore. This allows us to use one alert rule instead of four to cover everything. And keep in mind we can apply the same tag/property filter as needed to reduce false positives.

Find DataStore with
  tag.Production = true and
  classification = 'critical' and
  encrypted != true

Here’s another example. With thousands of security findings from various types of security scanners, agents, and monitoring tools, how do we set up alerts for the most important ones? Combining the abstract entity labeling and graph relationships in JupiterOne, we can do something like:

Find (Device|Host|Database|Application|CodeRepo) with
  tag.Production = true or classification = 'critical'
  that has Finding with severity = 'critical' or numericSeverity > 7

This one query covers alerting for findings from AWS Inspector, GuardDuty, application code scanning tools like Veracode and WhiteHat, and endpoint monitoring tools like Carbon Black and SentinelOne. And JupiterOne has out-of-the-box integrations with all of them.
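To make the abstract-class idea concrete, here is a rough Python sketch of the DataStore encryption check described in this section: one filter keyed on a shared class label instead of one rule per resource type. It is an illustration only and not JupiterOne's data model or API. The `Entity` type, the field names, and the sample inventory (including every type name other than `aws_s3_bucket`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Entity:
    name: str
    type: str                  # concrete type, e.g. "aws_s3_bucket"
    classes: set[str]          # abstract class labels, e.g. {"DataStore"}
    production: bool = False   # stands in for tag.Production
    classification: str = ""
    encrypted: bool = False


def unencrypted_critical_datastores(entities: list[Entity]) -> list[Entity]:
    """One check for every data store type, keyed on the abstract class."""
    return [
        e for e in entities
        if "DataStore" in e.classes
        and e.production
        and e.classification == "critical"
        and not e.encrypted
    ]


# Hypothetical inventory covering the four data store types listed above,
# plus one entity that is not a data store at all.
inventory = [
    Entity("reports", "aws_s3_bucket", {"DataStore"}, True, "critical", False),
    Entity("orders", "example_rds_instance", {"DataStore"}, True, "critical", True),
    Entity("sessions", "example_dynamodb_table", {"DataStore"}, False, "critical", False),
    Entity("scratch", "example_ebs_volume", {"DataStore"}, True, "internal", False),
    Entity("web-1", "example_instance", {"Host"}, True, "critical", False),
]

for entity in unencrypted_critical_datastores(inventory):
    print(entity.type, entity.name)
```

The production and classification conditions play the same role as the tag/property filters in the query: they narrow the result to the entities worth alerting on, whatever their concrete type.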

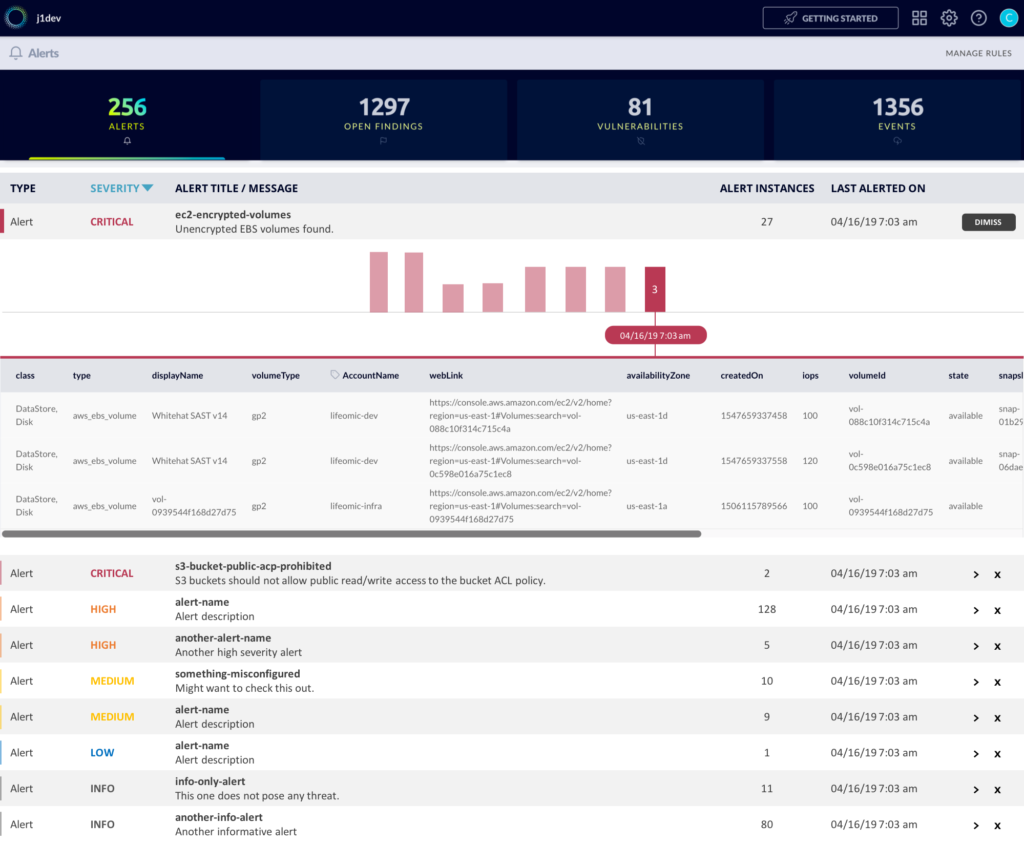

Centralized Management and Notification

In order to receive alerts from AWS Config evaluations, we had to set up CloudWatch to receive the findings, then set up alarms, and finally, configure SNS and/or SES to send out notifications. That’s a lot of services to configure. And this is only for alerts and notifications in one environment; we would have to do the same for half a dozen other security controls we have implemented. And when the alerts fire, we would have to visit the dashboard/console of each individual system to see them.

Instead, we have centralized all of the alerts in JupiterOne. In the Alerts app, we have access to alerts, findings, and vulnerabilities from all integrated sources. We can set up notifications for them and analyze them all in one place. This is still early in development. More to come, but here is a sneak peek.

That’s it. We’ve moved on from AWS Config and do not regret it a bit.

Published at DZone with permission of Ryan Hilliard. See the original article here.
