What Are LangChain and Large Language Models?

In this comprehensive article, we explore both LangChain and Large Language Models. We will go through a simple tutorial to understand both.


If you're a developer or simply someone passionate about technology, you've likely encountered AI tools such as ChatGPT. These tools are powered by advanced large language models (LLMs). Interested in taking it up a notch by building your own LLM-based applications? If so, LangChain is the framework for you.

Let's set everything else aside and start by understanding LLMs. Then we'll go over LangChain with a simple tutorial. Sounds interesting? Let's get going.

What Are Large Language Models (LLMs)?

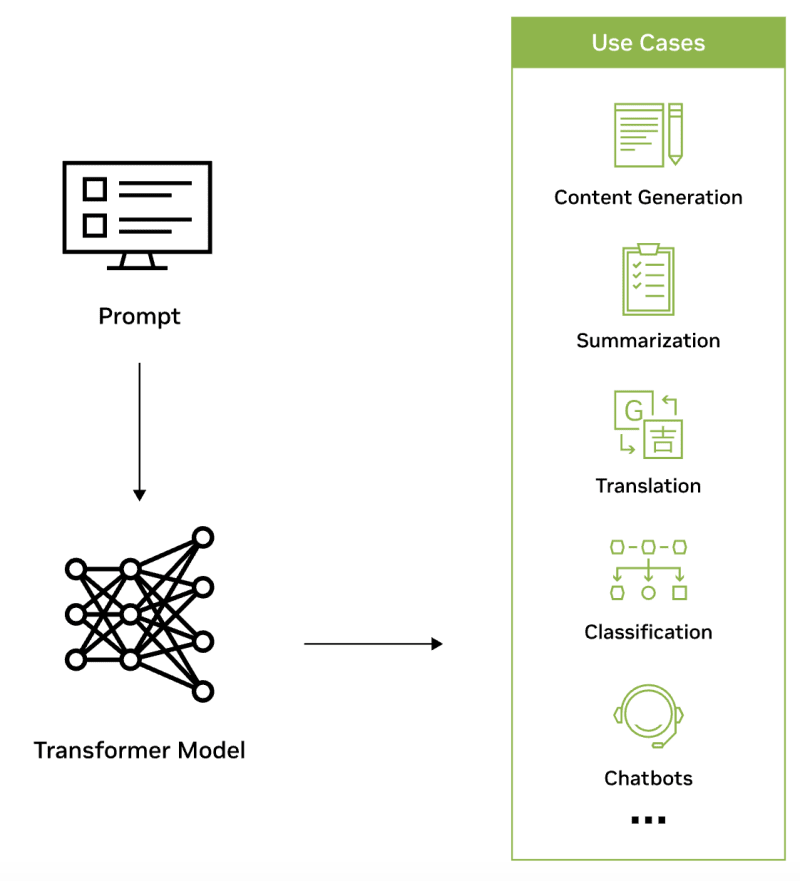

Large language models (LLMs) like GPT-3 and GPT-4 from OpenAI are machine learning models designed to understand and generate human-like text based on the data they've been trained on. These models are built using neural networks with millions or even billions of parameters, which makes them capable of complex tasks such as translation, summarization, question answering, and even creative writing.

Trained on diverse and extensive datasets, often encompassing parts of the internet, books, and other texts, LLMs analyze the patterns and relationships between words and phrases to generate coherent and contextually relevant output. While they can perform a wide range of linguistic tasks, they are not conscious and don't possess understanding or emotions, despite their ability to mimic such qualities in the text they generate.

Source Credits: NVIDIA

Large language models primarily belong to a category of deep learning architectures known as transformer networks. A transformer is a type of neural network that learns context and meaning by identifying the relationships between elements in a sequence, such as the words in a sentence. It does this with a mechanism called self-attention.
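To make "identifying the relationships between elements in a sequence" more concrete, here is a minimal plain-Python sketch of scaled dot-product self-attention, the core operation inside a transformer: softmax(Q K^T / sqrt(d)) V. The query, key, and value vectors for the three tokens are made-up numbers; real models learn these projections, use many attention heads, and run on optimized tensor libraries, so treat this only as an illustration of the idea.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How strongly this token's query matches every token's key
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional vectors for a three-token sentence
tokens = ["the", "cat", "sat"]
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

outputs, weights = self_attention(Q, K, V)
for token, w in zip(tokens, weights):
    print(token, [round(x, 2) for x in w])
```

Each printed row shows how much one token attends to every token in the sentence. For example, the row for "the" comes out to roughly [0.4, 0.2, 0.4], because its query matches the keys of "the" and "sat" equally and "cat" less.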

What Is LangChain?

Developed by Harrison Chase and first released in October 2022, LangChain is an open-source framework for building robust applications powered by large language models, such as ChatGPT-style chatbots and various custom applications.

LangChain aims to equip data engineers with a comprehensive toolkit for using LLMs in diverse use cases, such as chatbots, automated question answering, text summarization, and more.

LangChain is composed of 6 modules explained below:

Image credits: ByteByteGo

Large Language Models:

LangChain serves as a standard interface that allows for interactions with a wide range of Large Language Models (LLMs).

Prompt Construction:

LangChain offers a variety of classes and functions designed to simplify the process of creating and handling prompts.

Conversational Memory:

LangChain incorporates memory modules that enable the management and alteration of past chat conversations, a key feature for chatbots that need to recall previous interactions.

Intelligent Agents:

LangChain equips agents with a comprehensive toolkit. These agents can choose which tools to utilize based on user input.

Indexes:

Indexes in LangChain are methods for organizing documents in a manner that facilitates effective interaction with LLMs.

Chains:

While using a single LLM may be sufficient for simpler tasks, LangChain provides a standard interface and some commonly used implementations for chaining LLMs together for more complex applications, either among themselves or with other specialized modules.

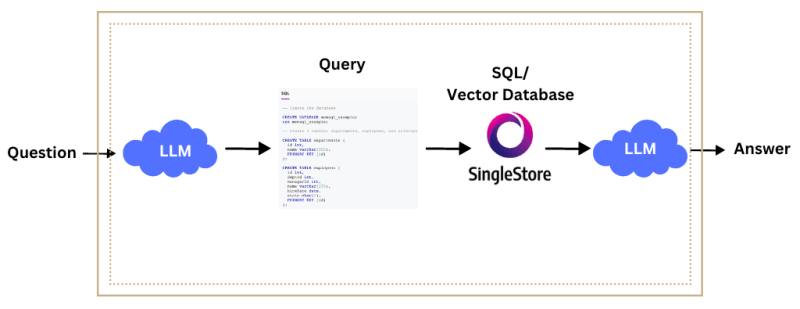
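To make the agent idea concrete, here is a minimal plain-Python sketch of the control flow: the agent receives user input, decides which tool fits, and calls it. In LangChain the LLM itself makes that decision; in this sketch a simple keyword rule stands in for the LLM, and the tools (`calculator`, `word_count`) and helper functions are hypothetical, so treat it as an illustration of the pattern rather than LangChain's API.

```python
from typing import Callable, Dict

def calculator(expression: str) -> str:
    """Hypothetical tool: add up numbers separated by '+'."""
    return str(sum(float(part) for part in expression.split("+")))

def word_count(text: str) -> str:
    """Hypothetical tool: count the words in a piece of text."""
    return str(len(text.split()))

TOOLS: Dict[str, Callable[[str], str]] = {
    "calculator": calculator,
    "word_count": word_count,
}

def choose_tool(user_input: str) -> str:
    """Stand-in for the LLM's decision: pick a tool name based on the input."""
    if "+" in user_input and any(ch.isdigit() for ch in user_input):
        return "calculator"
    return "word_count"

def run_agent(user_input: str) -> str:
    """Choose a tool, run it on the input, and report which tool was used."""
    tool_name = choose_tool(user_input)
    result = TOOLS[tool_name](user_input)
    return f"{tool_name} -> {result}"

print(run_agent("2 + 3 + 4.5"))
print(run_agent("LangChain agents choose tools"))
```

Keeping the tools in a dictionary keyed by name mirrors the general idea that an agent picks a tool by name and the framework dispatches the call.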

How Does LangChain Work?

LangChain can take large amounts of data, break that data into smaller chunks, and embed those chunks into a vector store. Then, with the help of LLMs, we can retrieve only the information that is needed.

When a user enters a prompt, LangChain queries the vector store for relevant information. When exact or closely matching information is found, that information is passed to the LLM along with the prompt so it can generate the answer the user is looking for.
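The retrieval flow described above (chunk the data, embed the chunks, find the chunks closest to a prompt, and hand them to the LLM) can be sketched without any external services. In this sketch, a bag-of-words vector and cosine similarity stand in for a real embedding model and vector store, sentence splitting stands in for a real text splitter, and the final LLM call is replaced by building the augmented prompt string. All function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List

def chunk_text(text: str) -> List[str]:
    """Split text into sentence-sized chunks (real splitters also cap chunk length)."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

document = (
    "SingleStore is a distributed SQL database. "
    "LangChain is a framework for building LLM applications. "
    "Vector stores hold embeddings so similar text can be found quickly."
)

# Toy "vector store": each chunk kept alongside its vector
store = [(chunk, embed(chunk)) for chunk in chunk_text(document)]

def retrieve(query: str, k: int = 1) -> List[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(store, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]

question = "What is LangChain?"
context = retrieve(question)

# A real pipeline would send this augmented prompt to the LLM
prompt = f"Answer using this context:\n{context[0]}\n\nQuestion: {question}"
print(prompt)
```

Only the most relevant chunk is placed in the prompt, which is the point of the design: the LLM sees a small amount of targeted context instead of the whole data set.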

Get Started With LangChain

Let's use SingleStore's Notebooks feature (it is free to use) as our development environment for this tutorial.

The SingleStore Notebook extends the capabilities of Jupyter Notebook so that data professionals can easily work and experiment with their data.

What Is SingleStore?

SingleStore is a distributed, in-memory SQL database management system designed for high-performance, high-velocity applications. It offers real-time analytics and combines the capabilities of a traditional operational database with those of an analytical database, so that transactions and analytics can be performed in a single system.

Sign up for SingleStore to use the Notebooks.

Once you sign up to SingleStore, you will also receive $600 worth of free computing resources. So why not use this opportunity?

Click on 'Notebooks' and start with a blank Notebook.

Name it something like 'LangChain-Tutorial', or whatever you prefer.

Let's start working with the Notebook we just created.

Follow this step-by-step guide: add the code shown in each step to your Notebook and execute it. Let's start!

Now, to use LangChain, let's first install it with pip.

```
!pip install -q langchain
```

To work with LangChain, you need integrations with one or more model providers, such as OpenAI or Hugging Face. In this example, we'll use OpenAI's APIs, so let's install the OpenAI Python package.

```
!pip install -q openai
```

Next, we need to set the `OPENAI_API_KEY` environment variable, which LangChain's OpenAI integration reads to authenticate requests.

Let's do that.

```python
import os

os.environ["OPENAI_API_KEY"] = "Your-API-Key"
```

Replace `Your-API-Key` with your own key. If you don't have one yet, you can create one from the API keys page of your OpenAI account.

[Note: Make sure your API key still has quota available.]
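Hard-coding the key in a notebook makes it easy to leak when the notebook is shared. A minimal alternative, assuming you set `OPENAI_API_KEY` outside the notebook (for example in your shell or your environment's secrets settings), is to read it from the environment and fail early with a clear message if it is missing. The helper name `require_env` is hypothetical.

```python
import os

def require_env(name: str) -> str:
    """Return a non-empty environment variable or raise a clear error."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"Set the {name} environment variable before running this notebook.")
    return value

# Example (assumes OPENAI_API_KEY was set outside the notebook):
# api_key = require_env("OPENAI_API_KEY")
```

In an interactive notebook, the standard library's `getpass.getpass()` is another option: it lets you type the key without echoing it to the screen.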

Next, let's load an OpenAI LLM and get a prediction from it.

Let's ask our model for the 5 most populated cities in the world.

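```python
from langchain.llms import OpenAI

# temperature controls how random the output is (higher = more varied)
llm = OpenAI(temperature=0.7)

text = "what are the 5 most populated cities in the world?"

# Send the prompt to the model and print its completion
print(llm(text))
```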

As you can see, our model made a prediction and printed the 5 most populated cities in the world.

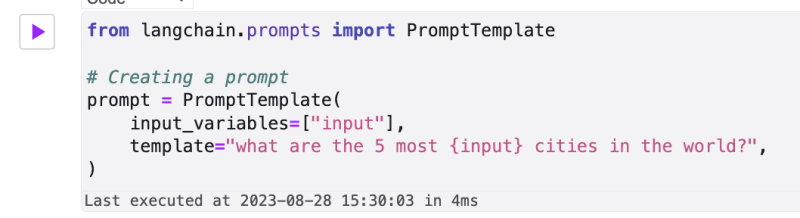
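The `temperature=0.7` argument controls how random the generated text is. Conceptually, the model's scores for candidate next tokens are divided by the temperature before a softmax turns them into probabilities: lower values sharpen the distribution toward the top choice, and higher values flatten it. The sketch below shows that effect with made-up scores for three candidate words; it illustrates the idea and is not OpenAI's implementation.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by temperature (must be > 0)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next words
candidates = ["Tokyo", "Delhi", "Shanghai"]
logits = [2.0, 1.5, 1.0]

for t in (0.2, 0.7, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, {w: round(p, 3) for w, p in zip(candidates, probs)})
```

As the temperature drops, the top candidate's probability grows, so sampling becomes more predictable. The formula divides by the temperature, so a setting of 0 (used later in the chat example) is treated as a special case that always favors the most likely token.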

Prompt Templates

Let’s first define the prompt template.

```python
from langchain.prompts import PromptTemplate

# Creating a prompt
prompt = PromptTemplate(
    input_variables=["input"],
    template="what are the 5 most {input} cities in the world?",
)
```

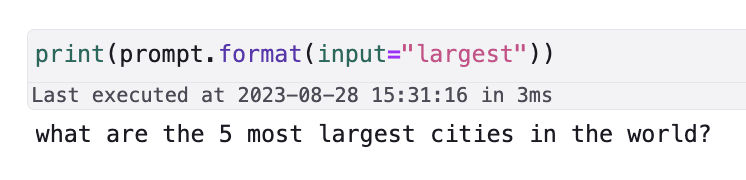

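We've created our prompt. To get a prediction, call the template's `format` method with a value for `input` and pass the resulting string to the LLM, for example `llm(prompt.format(input="populated"))`.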
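Under the hood, a prompt template is essentially a string with named placeholders plus a list of the variables it expects. The plain-Python sketch below mimics that idea with `str.format`, including a check that every declared input is supplied. The class name `SimplePromptTemplate` is hypothetical; this is not LangChain's implementation.

```python
from dataclasses import dataclass

@dataclass
class SimplePromptTemplate:
    """Hypothetical stand-in for a prompt template: placeholders plus declared inputs."""
    input_variables: list
    template: str

    def format(self, **kwargs) -> str:
        # Fail loudly if a declared variable was not provided
        missing = [name for name in self.input_variables if name not in kwargs]
        if missing:
            raise KeyError(f"Missing input variables: {missing}")
        return self.template.format(**kwargs)

prompt = SimplePromptTemplate(
    input_variables=["input"],
    template="what are the 5 most {input} cities in the world?",
)

# One template, reused with different inputs
print(prompt.format(input="populated"))
print(prompt.format(input="visited"))
```

The benefit of a template is reuse: the fixed wording lives in one place and only the variable part changes between calls.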

Creating Chains

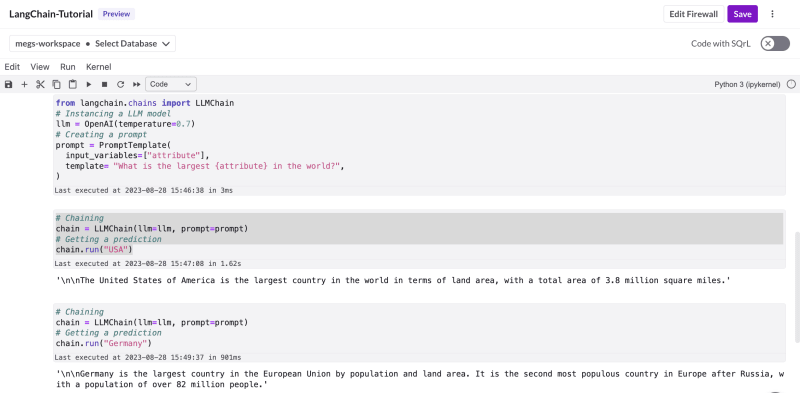

So far, we've seen how to initialize an LLM and how to get a prediction from it. Now, let's take a step further and chain these steps together using the LLMChain class.

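```python
from langchain.chains import LLMChain
from langchain.llms import OpenAI
from langchain.prompts import PromptTemplate

# Instantiating an LLM
llm = OpenAI(temperature=0.7)

# Creating a prompt
prompt = PromptTemplate(
    input_variables=["attribute"],
    template="What is the largest {attribute} in the world?",
)

# Combining the LLM and the prompt into a chain
chain = LLMChain(llm=llm, prompt=prompt)

# Running the chain; "ocean" is just an example value for {attribute}
print(chain.run("ocean"))
```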

You can see the prediction of the model.
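Conceptually, a chain like LLMChain pipes one step's output into the next: fill in the template, send the text to the model, and return the result. The sketch below composes those steps in plain Python. `make_chain`, `fill_template`, and `strip_output` are hypothetical helpers, and `fake_llm` is a stub that returns canned text so the example runs without an API key.

```python
from typing import Callable

def make_chain(*steps: Callable) -> Callable:
    """Compose steps left to right: each step receives the previous step's output."""
    def run(value):
        for step in steps:
            value = step(value)
        return value
    return run

def fill_template(attribute: str) -> str:
    """Fill the 'largest {attribute}' template with a value."""
    return f"What is the largest {attribute} in the world?"

def fake_llm(prompt: str) -> str:
    """Hypothetical model stub: a real chain would call the LLM here."""
    canned = {"What is the largest ocean in the world?": " The Pacific Ocean. "}
    return canned.get(prompt, "I don't know.")

def strip_output(text: str) -> str:
    """Post-process the model output by trimming whitespace."""
    return text.strip()

chain = make_chain(fill_template, fake_llm, strip_output)
print(chain("ocean"))
```

Because every step takes one value and returns one value, steps can be swapped or added (for example, a different model or an extra parser) without changing the others.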

Developing An Application Using LangChain LLM

Again, we'll use SingleStore's Notebooks as the development environment.

Let's develop a very simple chat application.

Start with a blank Notebook and name it whatever you like.

- First, install the dependencies.

```
!pip install -q langchain openai
```

- Next, import the installed dependencies.

```python
from langchain import LLMChain, OpenAI, PromptTemplate
from langchain.memory import ConversationBufferWindowMemory
```

- Get your OpenAI API key and save it safely.

- Add and customize the LLM template.

```python
# Customize the LLM template
template = """Assistant is a large language model trained by OpenAI.

{history}
Human: {human_input}
Assistant:"""

prompt = PromptTemplate(input_variables=["history", "human_input"], template=template)
```

- Load the ChatGPT chain with the API key you saved. Set the human input to 'What is SingleStore?'; you can change it to anything you like.

```python
chatgpt_chain = LLMChain(
    llm=OpenAI(openai_api_key="YOUR-API-KEY", temperature=0),
    prompt=prompt,
    verbose=True,
    # Keep only the last 2 exchanges in {history}
    memory=ConversationBufferWindowMemory(k=2),
)

# Predict a sentence using the chatgpt chain
output = chatgpt_chain.predict(
    human_input="What is SingleStore?"
)

# Display the model's response
print(output)
```

The script initializes the LLM chain with the OpenAI API key and the prompt defined above, and attaches a memory that keeps the last two exchanges. It then passes the human input to the chain and prints the model's response.

The expected output is shown below:

Experiment by changing the `human_input` text.
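`ConversationBufferWindowMemory(k=2)` keeps only the most recent exchanges in the `{history}` slot of the template, so the prompt does not grow without bound. A plain-Python sketch of that sliding-window idea, using `collections.deque` with a maximum length, is below. The `WindowMemory` class and the `fake_reply` stub are hypothetical and do not reproduce LangChain's internals or its exact history formatting.

```python
from collections import deque

TEMPLATE = """Assistant is a large language model trained by OpenAI.

{history}
Human: {human_input}
Assistant:"""

class WindowMemory:
    """Hypothetical sliding-window memory: keeps only the last k exchanges."""

    def __init__(self, k: int = 2):
        # A deque with maxlen drops the oldest exchange automatically
        self.turns = deque(maxlen=k)

    def history(self) -> str:
        return "\n".join(f"Human: {h}\nAI: {a}" for h, a in self.turns)

    def save(self, human: str, assistant: str) -> None:
        self.turns.append((human, assistant))

def fake_reply(prompt: str) -> str:
    """Hypothetical model stub so the example runs offline."""
    return f"(reply to a {len(prompt)}-character prompt)"

memory = WindowMemory(k=2)
for question in ["What is SingleStore?", "What are Notebooks?", "How do I sign up?"]:
    prompt = TEMPLATE.format(history=memory.history(), human_input=question)
    memory.save(question, fake_reply(prompt))

# Only the two most recent exchanges remain
print(memory.history())
```

After three questions, the first exchange has been dropped, which is the trade-off a window memory makes: bounded prompt size in exchange for forgetting older context.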

The complete execution steps in code format are available on GitHub.

LangChain has emerged as an indispensable framework for data engineers and developers building cutting-edge applications powered by large language models. Instead of wiring model calls, prompts, memory, and data retrieval together by hand, developers can compose LangChain's building blocks (model interfaces, prompt templates, memory, agents, indexes, and chains) into complex AI applications. That makes LangChain more than just another tool in a developer's arsenal; it changes what is practical to build with AI.

If you're working on GenAI applications with LLMs, it's highly recommended that you learn about vector databases. I recently wrote a complete overview of vector databases, and you might like to go through that article.

Published at DZone with permission of Pavan Belagatti, DZone MVB. See the original article here.
