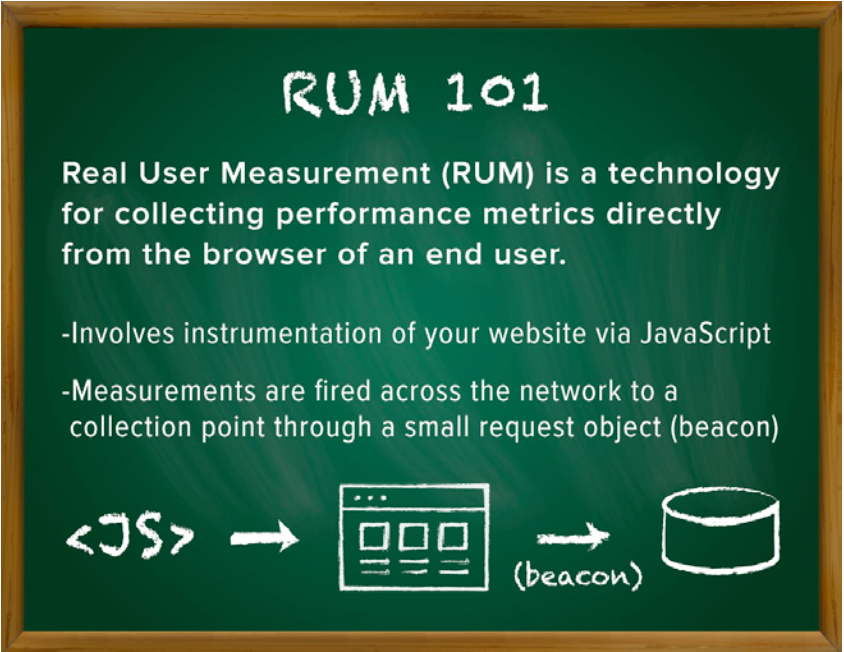

Since the dawn of performance testing, script generation has been an inexact science: a guessing game. No one really knew how their real users or, even more importantly, their real customers were using their website or mobile application. With the advent of real user monitoring (RUM), that has changed. The user experience, or UX, is now at the forefront of performance testing best practices. Why? Building test cases from RUM data gives you more confidence that the tests you run accurately reflect how your user community actually uses your website or mobile application.

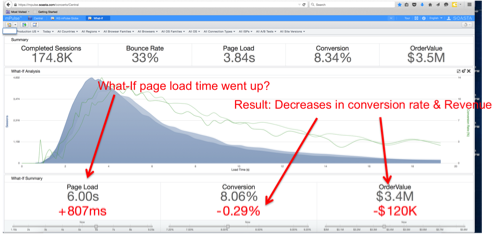

Let’s walk through a real-world example from a retail site that was having performance issues almost weekly, in the same time period every week, despite running performance tests nightly at numerous stages of the development and testing lifecycle (e.g., Dev => Test => QA) and in various scenarios, both behind the firewall and in production from the cloud. In addition, performance tests were being executed at over 150% of the current peak user volume across the site on a release-by-release basis. Still, performance was a problem, ranging from a dramatic slowdown in page load time all the way to site outages and crashes, complete with the obvious hit to the bottom line: revenue.

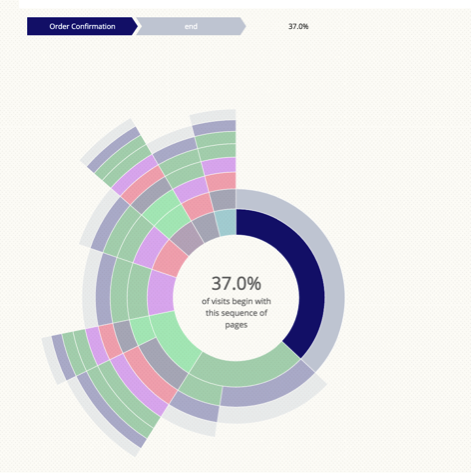

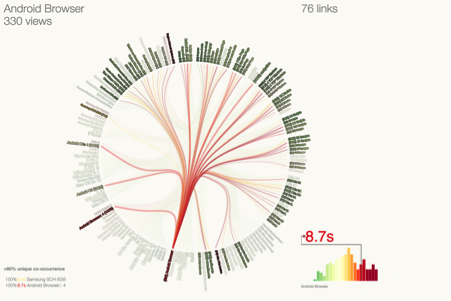

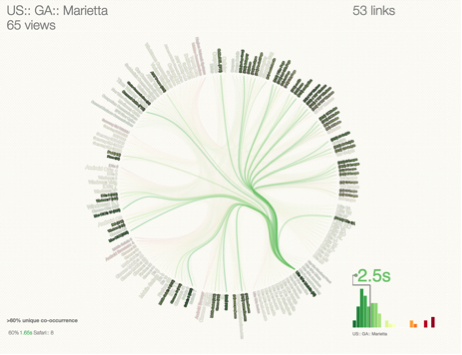

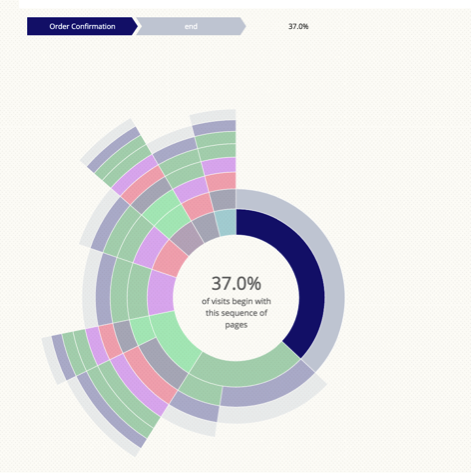

In our example, the analysis of RUM data yielded one finding in particular that jumped out at the whole team. The real users had a pattern of visiting the site that we had not taken into account in any of the performance testing. In the visualization of user paths, there was a clear click-to-conversion path.

As shown in the previous image, 37% of visits to this retailer’s website started on the order confirmation page. Let that sink in for a minute: 37% of visits began at “order confirmation” and went straight to “submit”. No product searches. No adding items to the cart. Those users had already completed those steps. How does this make sense? Simple.

Enter marketing.

It turns out this makes perfect sense. Why? Let’s turn our gaze to our friends over in the marketing organization. They run a discount promotion on Facebook every Sunday. Every Sunday around noon, marketing puts up the “sale of the week” coupon for “now you see it, now you don’t” discounts. The coupon is valid the following Friday, from 8 AM EDT until noon EDT. Discounts start at 40% and drop by 10 percentage points every hour until everything goes back to normal retail pricing at noon EDT. (So, in this sale, from 8:00–8:59 the coupon is good for 40% off retail pricing; from 9:00–9:59 it is good for 30% off retail pricing, and so on. You get the idea.)
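To make the arithmetic of the promotion concrete, here is a minimal sketch of the discount schedule described above. The function and constant names are our own, chosen for illustration; only the 8 AM to noon window, the 40% starting discount, and the hourly 10-point step come from the example.

```python
from datetime import time

# Values taken from the promotion described in the text.
SALE_START_HOUR = 8    # sale opens at 8:00 AM EDT
SALE_END_HOUR = 12     # normal pricing resumes at noon EDT
START_DISCOUNT = 40    # percent off during the first hour
HOURLY_STEP = 10       # percentage points lost each hour


def discount_at(t: time) -> int:
    """Return the percent discount in effect at time t on sale day.

    Hypothetical helper: returns 0 outside the sale window.
    """
    if not (SALE_START_HOUR <= t.hour < SALE_END_HOUR):
        return 0
    hours_elapsed = t.hour - SALE_START_HOUR
    return START_DISCOUNT - HOURLY_STEP * hours_elapsed


if __name__ == "__main__":
    for hour in range(7, 13):
        print(f"{hour:02d}:00 -> {discount_at(time(hour, 0))}% off")
```

Because the best price is only available during the first hour, a schedule like this gives shoppers a strong reason to submit their orders right at 8:00.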

Fast forward to Friday at 8 AM. This is exactly the time period when all the performance drop-offs and outages were happening. See why? Shoppers who had already filled their carts were all hitting “submit” as soon as the biggest discount kicked in, and marketing and the IT Operations/Performance Teams were not in alignment. In fact, IT had no idea that social media was being used for sales and marketing campaigns! (That in itself is astonishing!) So, once we were able to gather and analyze this data, the QA teams generated test cases around these new use cases and tested accordingly. And, not surprisingly, several issues were found and fixed.
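As a rough illustration of how RUM data can feed test design, the sketch below derives entry-page shares from session click paths and splits a pool of virtual users across test scenarios in the same proportions. Everything here is an assumption made for illustration: the session format, the page paths, the function names, and the sample data, which is synthetic and sized only to mirror the 37% figure mentioned earlier. It is not the team's actual tooling.

```python
from collections import Counter


def entry_page_shares(sessions):
    """Map each entry page to the fraction of sessions that started there.

    `sessions` is assumed to be a list of click paths, each a list of page names.
    """
    entries = Counter(pages[0] for pages in sessions if pages)
    total = sum(entries.values())
    if total == 0:
        return {}
    return {page: count / total for page, count in entries.items()}


def scenario_user_counts(shares, total_virtual_users):
    """Split virtual users across scenarios in proportion to entry-page share."""
    return {page: round(share * total_virtual_users) for page, share in shares.items()}


# Synthetic sample: 100 sessions, 37 of which start on order confirmation.
sample_sessions = (
    [["/order-confirmation", "/submit"]] * 37
    + [["/home", "/search", "/cart"]] * 40
    + [["/search", "/product"]] * 23
)

if __name__ == "__main__":
    shares = entry_page_shares(sample_sessions)
    print(shares)
    print(scenario_user_counts(shares, 1000))
```

The point of weighting scenarios this way is that a test run at 150% of peak volume still misses the problem if none of that volume starts where real users start.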

The promotion never caused a problem again. The two teams, marketing and IT, are now in alignment on every campaign, and real user data is used to ensure that every possible test path is covered and tested at its peak.