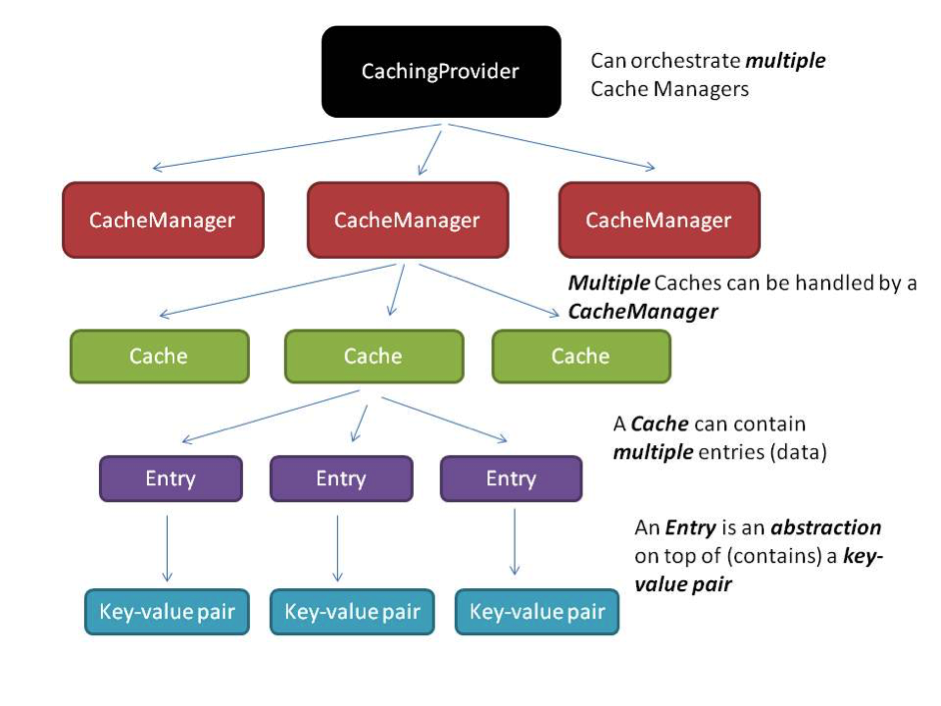

Caching strategies are methodologies one might adopt while implementing a caching solution as part of an application. The drivers/use cases behind the requirement of a caching layer vary based on the application’s requirements.

Let’s look at some of the commonly adopted caching strategies and how JCache fits into the picture. The following table provides a quick snapshot followed by some details:

Cache Topology

Which caching topology/setup is best suited for your application? Does your application need a single-node cache or a collaborative cache with multiple nodes?

Strategies/Options

From a specification perspective, JCache does not include any details or presumptions with regards to the cache topology.

Standalone: As the name suggests, this setup consists of a single node containing all the cached data. It’s equivalent to a single-node cluster and does not collaborate with other running instances.

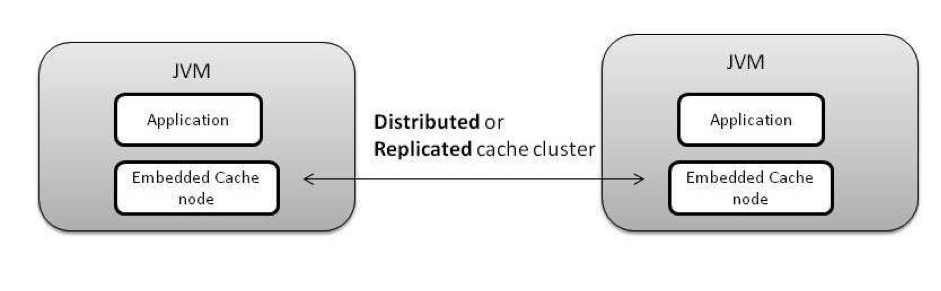

Distributed: Data is spread across multiple nodes in a cache such that only a single node is responsible for fetching a particular entry. This is possible by distributing/partitioning the cluster in a balanced manner (i.e., all the nodes have the same number of entries and are hence load balanced). Failover is handled via configurable backups on each node.
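The idea that exactly one node owns a given entry can be sketched with simple hash partitioning. This is only an illustration: `PartitionSketch`, `ownerIndex`, and `ownerOf` are hypothetical names, and real caching products typically use more elaborate schemes (fixed partition tables, consistent hashing, backup replicas) than the plain modulo shown here.

```java
import java.util.List;

/** Hypothetical helper: maps a cache key to the single node that owns it. */
final class PartitionSketch {

    /** Returns the index of the owning node for the given key. */
    static int ownerIndex(Object key, int nodeCount) {
        if (nodeCount <= 0) {
            throw new IllegalArgumentException("nodeCount must be positive");
        }
        // floorMod keeps the result non-negative even for negative hash codes.
        return Math.floorMod(key.hashCode(), nodeCount);
    }

    /** Returns the name of the owning node from a fixed list of node names. */
    static String ownerOf(Object key, List<String> nodes) {
        return nodes.get(ownerIndex(key, nodes.size()));
    }
}
```

Because the owner is derived from the key alone, every client that knows the node list routes the same key to the same node. A plain modulo reshuffles most keys when the node count changes, which is one reason real implementations tend to avoid it.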

Replicated: Data is copied to multiple nodes in a cache such that each node holds the complete cache data. Since each cluster node contains all the data, failover is not a concern.
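A replicated topology can be pictured as a write that fans out to every node, so that any node can answer any read. The sketch below models nodes as in-memory maps inside one process; `ReplicatedCacheSketch` and its methods are hypothetical names, and network transport, ordering, and failure handling are deliberately left out.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical model of a replicated cache: every node holds every entry. */
final class ReplicatedCacheSketch<K, V> {
    private final List<Map<K, V>> nodes = new ArrayList<>();

    ReplicatedCacheSketch(int nodeCount) {
        for (int i = 0; i < nodeCount; i++) {
            nodes.add(new HashMap<>());
        }
    }

    /** Writes the entry to all nodes so that each one has a full copy. */
    void put(K key, V value) {
        for (Map<K, V> node : nodes) {
            node.put(key, value);
        }
    }

    /** Reads the entry from one specific node; any node can serve it. */
    V getFrom(int nodeIndex, K key) {
        return nodes.get(nodeIndex).get(key);
    }
}
```

The trade-off is visible in `put`: reads stay local, but every write costs one update per node.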

Cache Modes

Do you want the cache to run in the same process as your application, or would you want it to exist independently (as-a-service style) and execute in a client-server mode?

Strategies/Options

JCache does not mandate anything specific as far as cache modes are concerned. It embraces these principles by providing flexible APIs that are designed in a cache-mode agnostic manner.

The following modes are common across caches in general:

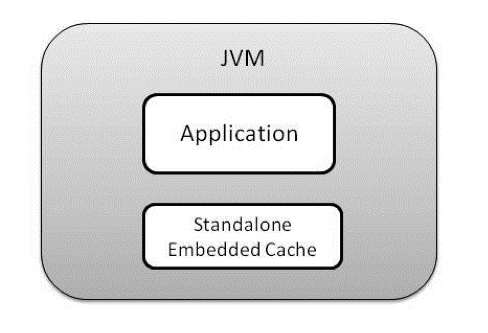

Embedded mode: When the cache and the application co-exist within the same JVM, the cache can be said to be operating in embedded mode. The cache lives and dies with the application JVM. This strategy should be used when:

- Tight coupling between your application and the cache is not a concern

- The application host has enough capacity (memory) to accommodate the demands of the cache

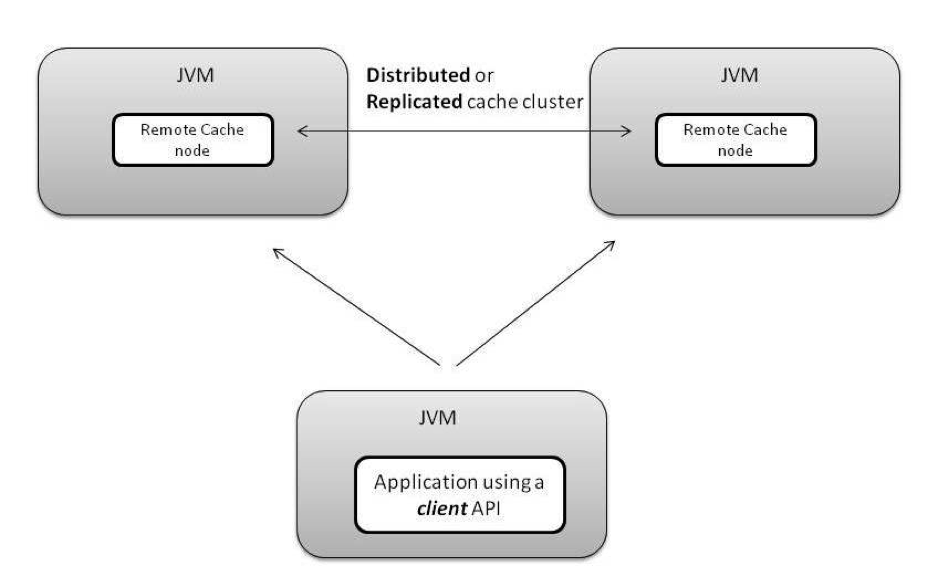

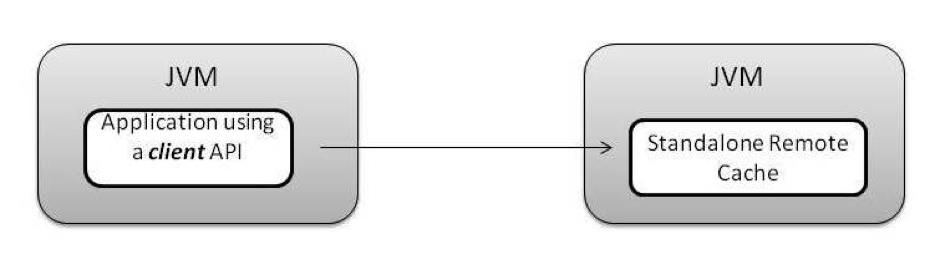

Client-Server mode: In this setup, the application acts as the client to a standalone (remote) caching layer. This should be leveraged when:

- The caching infrastructure and application need to evolve independently

- Multiple applications use a unified caching layer which can be scaled up without affecting client applications

Multiple Combinations to Choose From

Different cache modes and topologies make it possible to have multiple options to choose from, depending upon specific requirements.

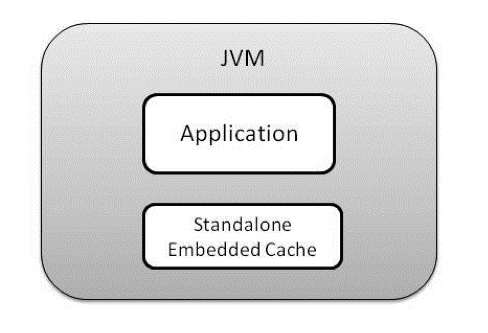

Standalone Embedded Cache: A single cache node within the same JVM as the application itself

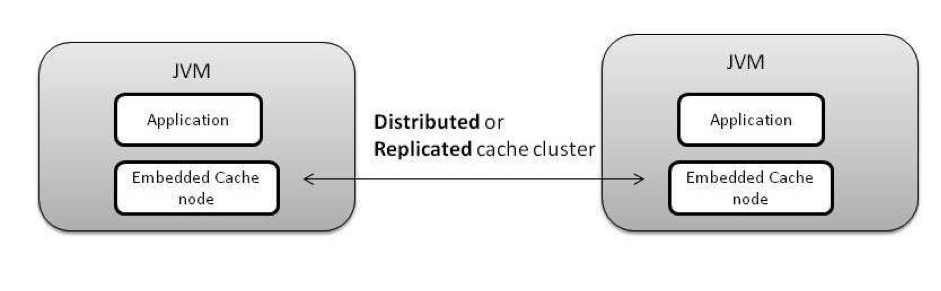

Distributed Embedded Cache: Multiple cache (clustered) nodes, each of which is co-located within the application JVM and is responsible for a specific cache entry only

Replicated Embedded Cache: Multiple cache (clustered) nodes, each of which is co-located within the application JVM; here the cached data is replicated to all the nodes

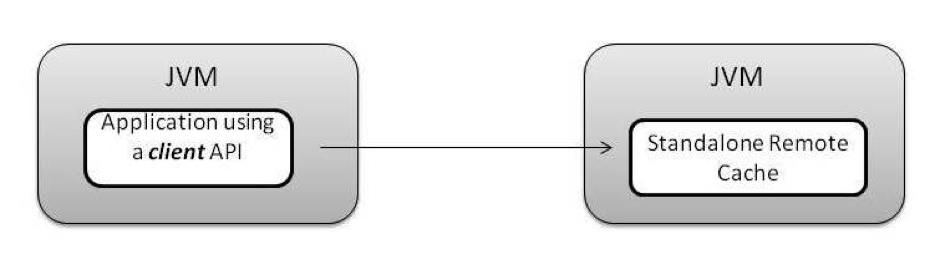

Standalone Client-Server Cache: A single cache node running in a separate process from the application

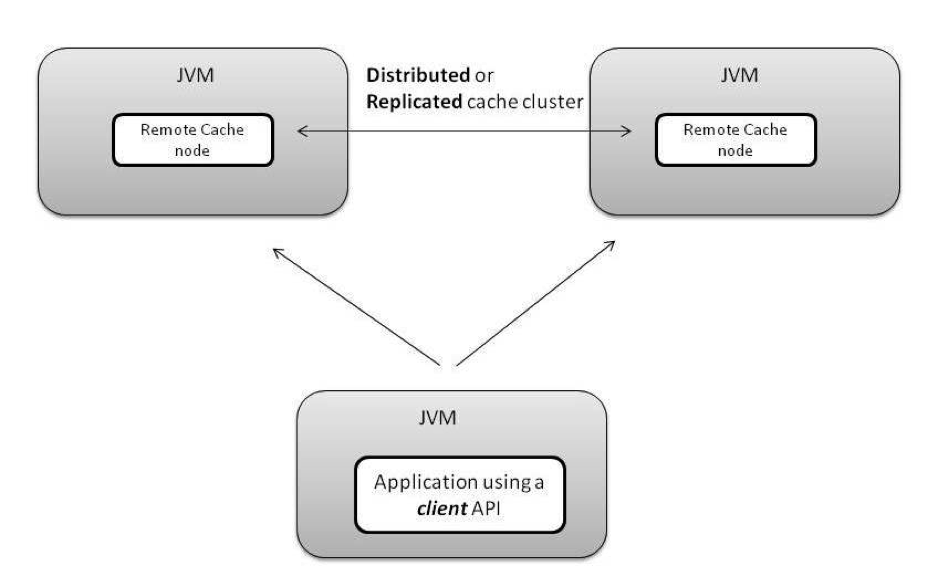

Distributed Client-Server Cache: Multiple cache (clustered) nodes, collaborating in a distributed fashion and executing in isolation from the client application

Replicated Client-Server Cache: Multiple cache (clustered) nodes, where a full copy of the cache data is present on each node, and the cache itself runs in a separate process from the application

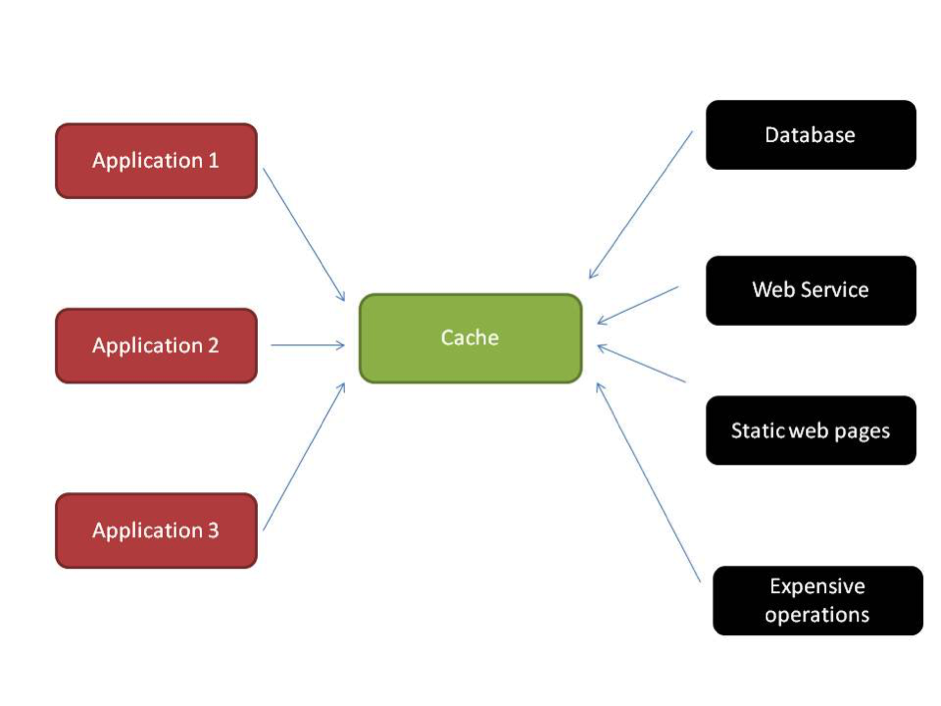

Transparent Cache Access

You are designing a generic caching layer for heterogeneous applications. Ideally, you would want your application to access the cache transparently without polluting its core logic with the specifics of the caching implementation.

Strategies/Options

As already stated in the JCache Deep Dive section, integration with external systems (such as databases, file stores, and LDAP) is already abstracted via the CacheLoader and CacheWriter mechanisms, which help you implement Read-Through and Write-Through strategies respectively.

Read-Through: A process by which a missing cache entry is fetched from the integrated backend store.

Write-Through: A process by which changes to a cache entry (create, update, delete) are pushed into the backend data store.

It is important to note that the business logic for Read-Through and Write-Through operations for a specific cache is confined within the caching layer itself. Hence, your application remains insulated from the specifics of the cache and its backing system-of-record.
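The mechanics of both strategies can be sketched without any caching library. In the sketch below, a `Function` stands in for the role a CacheLoader plays and a `BiConsumer` stands in for a CacheWriter; `ThroughCacheSketch` is a hypothetical class and does not use the JCache API, where the equivalent wiring is done through the cache configuration.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.BiConsumer;
import java.util.function.Function;

/** Hypothetical cache showing read-through and write-through behavior. */
final class ThroughCacheSketch<K, V> {
    private final Map<K, V> entries = new HashMap<>();
    private final Function<K, V> loader;   // stands in for a CacheLoader
    private final BiConsumer<K, V> writer; // stands in for a CacheWriter

    ThroughCacheSketch(Function<K, V> loader, BiConsumer<K, V> writer) {
        this.loader = loader;
        this.writer = writer;
    }

    /** Read-through: on a miss, fetch from the backend and keep the result. */
    V get(K key) {
        V value = entries.get(key);
        if (value == null) {
            value = loader.apply(key);
            if (value != null) {
                entries.put(key, value);
            }
        }
        return value;
    }

    /** Write-through: push the change to the backend synchronously. */
    void put(K key, V value) {
        // Write to the backend first so a failed write leaves the cache unchanged.
        writer.accept(key, value);
        entries.put(key, value);
    }
}
```

The caller only ever sees `get` and `put`; whether a value came from memory or from the backend is invisible to it, which is the transparency this section describes.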

Other Strategies (Non-JCache)

Write-Behind: This strategy takes a more efficient approach in which cache updates are batched (queued) and written to the backend store asynchronously, instead of the eager, synchronous policy adopted by the Write-Through strategy. For example, Hazelcast supports the Write-Behind strategy via its com.hazelcast.core.MapStore interface when the write-delay-seconds configuration property is greater than 0. Please note that this is purely a Hazelcast Map feature and is not supported through the ICache extension.
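The batching behind Write-Behind can be sketched as a cache that records pending changes and hands them to the backend in one call. This is a single-threaded illustration with hypothetical names (`WriteBehindSketch`, `flush`); it is not the Hazelcast API, and a real implementation would trigger the flush from a background thread after the configured delay rather than on demand.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Consumer;

/** Hypothetical write-behind cache: updates are queued and written in batches. */
final class WriteBehindSketch<K, V> {
    private final Map<K, V> entries = new HashMap<>();
    // Keyed by entry so repeated updates to one key collapse into a single write.
    private final Map<K, V> pending = new LinkedHashMap<>();
    private final Consumer<Map<K, V>> batchWriter;

    WriteBehindSketch(Consumer<Map<K, V>> batchWriter) {
        this.batchWriter = batchWriter;
    }

    /** Updates the cache immediately and defers the backend write. */
    void put(K key, V value) {
        entries.put(key, value);
        pending.put(key, value);
    }

    V get(K key) {
        return entries.get(key);
    }

    /** Writes all pending changes as one batch; returns the batch size. */
    int flush() {
        if (pending.isEmpty()) {
            return 0;
        }
        Map<K, V> batch = new LinkedHashMap<>(pending);
        pending.clear();
        batchWriter.accept(batch);
        return batch.size();
    }
}
```

Until `flush` runs, the backend lags behind the cache, so a crash in that window loses the queued updates. That risk is the price of the lower write latency.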

Refresh-Ahead: This is another interesting strategy where a caching implementation allows you to refresh the cache data from the backend store depending upon a specific factor, which can be expressed in terms of an entry’s expiration time. The reload process is asynchronous in nature. For example, Oracle Coherence supports this strategy, which is driven by a configuration element known as the refresh-ahead factor, which is a percentage of the expiration time of a cache entry.
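The role of the factor can be sketched as a simple check on an entry's age. The class and method names below are hypothetical, and the rule shown (refresh once the entry's age passes factor × expiry time but before it actually expires) is a simplified reading of the description above, not Oracle Coherence's implementation.

```java
/** Hypothetical check for whether an entry falls in the refresh-ahead window. */
final class RefreshAheadSketch {

    /**
     * Returns true when the entry is old enough to be reloaded in the
     * background but has not yet expired.
     */
    static boolean shouldRefresh(long ageMillis, long expiryMillis, double factor) {
        if (factor <= 0.0 || factor >= 1.0) {
            throw new IllegalArgumentException("factor must be between 0 and 1");
        }
        long threshold = (long) (expiryMillis * factor);
        return ageMillis >= threshold && ageMillis < expiryMillis;
    }
}
```

With an expiry time of 60 seconds and a factor of 0.5, an access at 20 seconds is served as-is, an access at 45 seconds would also schedule an asynchronous reload, and an access at 60 seconds or later finds the entry expired.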

Cache Data Quality

Cache expiry/eviction policies are vital from a cache data quality perspective. If a cache is not tuned to expire its contents, then it will not get a chance to refresh/reload/sync up with its master repo/system-of-record and might end up returning stale or outdated data.

You need to take into account the data volatility in the backing data store (behind the cache) and tune your cache accordingly to maintain quality data.

Strategies/Options

In the JCache Deep Dive section, you came across the default expiry policies available in JCache: AccessedExpiryPolicy, CreatedExpiryPolicy, EternalExpiryPolicy, ModifiedExpiryPolicy, and TouchedExpiryPolicy. In addition to these policies, JCache allows you to define custom expiry policies by implementing the javax.cache.expiry.ExpiryPolicy interface.
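The practical difference between a creation-based and an access-based policy can be sketched with a small cache that takes the current time as a parameter. `ExpirySketch` is a hypothetical class that does not use the JCache API, and it simplifies matters by treating every `put` as a creation.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical cache contrasting creation-based and access-based expiry. */
final class ExpirySketch<K, V> {

    private static final class Entry<V> {
        final V value;
        long expiresAt;

        Entry(V value, long expiresAt) {
            this.value = value;
            this.expiresAt = expiresAt;
        }
    }

    private final Map<K, Entry<V>> entries = new HashMap<>();
    private final long ttlMillis;
    private final boolean resetOnAccess;

    ExpirySketch(long ttlMillis, boolean resetOnAccess) {
        this.ttlMillis = ttlMillis;
        this.resetOnAccess = resetOnAccess;
    }

    /** Stores the entry; its lifetime starts at nowMillis. */
    void put(K key, V value, long nowMillis) {
        entries.put(key, new Entry<>(value, nowMillis + ttlMillis));
    }

    /** Returns the value, or null if the entry is missing or has expired. */
    V get(K key, long nowMillis) {
        Entry<V> entry = entries.get(key);
        if (entry == null) {
            return null;
        }
        if (nowMillis >= entry.expiresAt) {
            entries.remove(key);
            return null;
        }
        if (resetOnAccess) {
            // Access-based expiry: each successful read extends the lifetime.
            entry.expiresAt = nowMillis + ttlMillis;
        }
        return entry.value;
    }
}
```

With `resetOnAccess` set to false, an entry is forced back to the system-of-record at a fixed interval, which suits volatile data. With it set to true, frequently read entries can stay cached indefinitely, which favors hit rate over freshness.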

Flexi-Eviction (Non-JCache)

The JCache API allows you to enforce an expiry policy on a specific cache; as a result, the policy applies to all the entries in that cache. The Hazelcast JCache implementation lets you fine-tune this further by specifying the ExpiryPolicy per entry in a cache. This is powered by the com.hazelcast.cache.ICache interface.
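The contrast between a cache-wide policy and a per-entry override can be sketched as follows. The class `PerEntryExpirySketch` and its `putWithTtl` method are hypothetical names chosen for illustration; the sketch does not use the Hazelcast API, and it reduces an expiry policy to a single time-to-live value.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical cache with a default lifetime and optional per-entry override. */
final class PerEntryExpirySketch<K, V> {
    private final Map<K, V> values = new HashMap<>();
    private final Map<K, Long> deadlines = new HashMap<>();
    private final long defaultTtlMillis;

    PerEntryExpirySketch(long defaultTtlMillis) {
        this.defaultTtlMillis = defaultTtlMillis;
    }

    /** Stores the entry with the cache-wide default lifetime. */
    void put(K key, V value, long nowMillis) {
        putWithTtl(key, value, defaultTtlMillis, nowMillis);
    }

    /** Stores the entry with its own lifetime, overriding the default. */
    void putWithTtl(K key, V value, long ttlMillis, long nowMillis) {
        values.put(key, value);
        deadlines.put(key, nowMillis + ttlMillis);
    }

    /** Returns the value, or null if the entry is missing or has expired. */
    V get(K key, long nowMillis) {
        Long deadline = deadlines.get(key);
        if (deadline == null) {
            return null;
        }
        if (nowMillis >= deadline) {
            values.remove(key);
            deadlines.remove(key);
            return null;
        }
        return values.get(key);
    }
}
```

A per-entry lifetime is useful when one cache holds data with mixed volatility, for example short-lived session tokens next to rarely changing reference data.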
