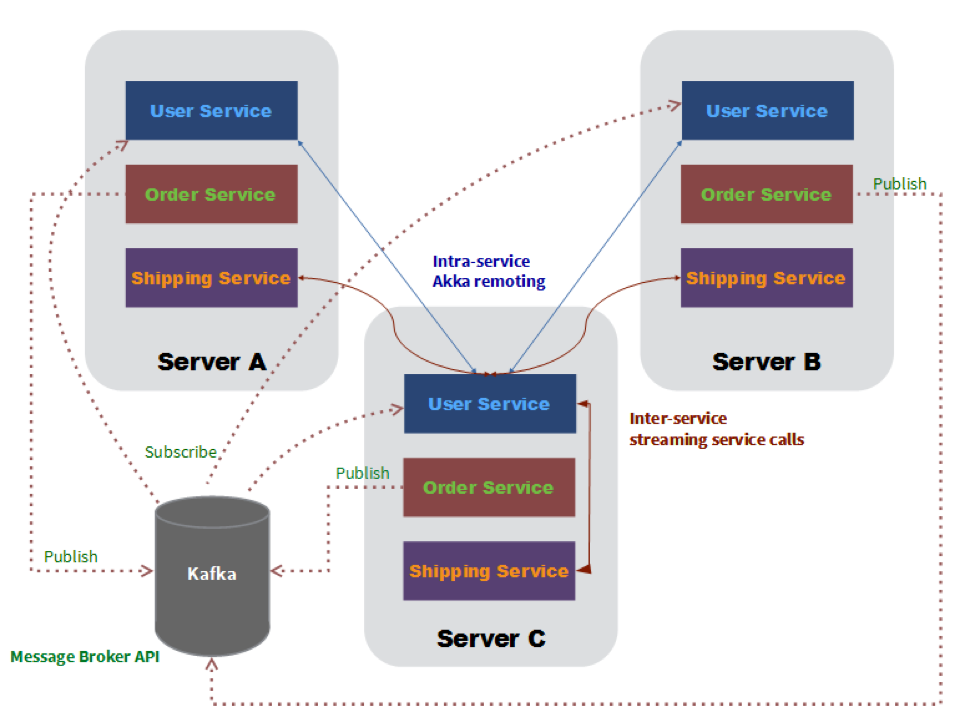

Although they look similar, inter-service and intra-service communication have very different needs, so you need more than one implementation option. Inter-service communication must use loosely coupled protocols and message formats to maintain isolation and autonomy, while intra-service communication can take advantage of mechanisms that have less overhead and better performance.

Service calls, either synchronous or asynchronous (streaming), allow services to communicate with each other using published APIs and standard protocols (HTTP and WebSockets). Lagom services are described by an interface, known as a service descriptor. This interface not only defines how the service is invoked and implemented, but also defines the metadata that describes how the interface is mapped down onto an underlying transport protocol. Generally, the service descriptor, its implementation, and its consumption should remain agnostic to the transport being used, whether that’s REST, WebSockets, or some other transport.

When you use call, namedCall, or pathCall, Lagom makes a best-effort attempt to map the call down to REST in a semantic fashion: if there is a request message, it uses POST; if there is none, it uses GET.

Every service call in Lagom has a request message type and a response message type. When the request or response message isn’t used, akka.NotUsed can be used in its place. Request and response message types fall into two categories: strict and streamed. A strict message is a single message that can be represented by a simple Java object. The message is buffered into memory and then parsed, for example, as JSON. The service calls described so far use strict messages.
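The GET/POST rule described above can be modeled in a few lines of plain Java. This is only a sketch of the rule as stated in the text, not Lagom's actual mapping code; `RestMappingSketch`, its nested `NotUsed` marker (a stand-in for akka.NotUsed), and `httpMethodFor` are hypothetical names introduced for illustration.

```java
// Simplified model of the mapping rule described in the text; not Lagom's implementation.
final class RestMappingSketch {

    /** Hypothetical stand-in for akka.NotUsed: marks a call that carries no request message. */
    static final class NotUsed {}

    /** No request message means GET; any other request type means POST. */
    static String httpMethodFor(Class<?> requestType) {
        return requestType == NotUsed.class ? "GET" : "POST";
    }

    public static void main(String[] args) {
        System.out.println(httpMethodFor(NotUsed.class)); // call without a request message
        System.out.println(httpMethodFor(String.class));  // call with a String request message
    }
}
```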

A streamed message is a message of the type Source. Source is part of the Akka Streams API and allows asynchronous streaming and handling of messages.

Consider a tick service call that has a strict request type and a streamed response type. An implementation of it might return a Source that sends the input tick message, a String, at the specified interval.

Services are implemented by providing an implementation of the service descriptor interface, implementing each call specified by that descriptor.

The sayHello() method is implemented using a lambda. An important thing to realize here is that invoking sayHello() does not execute the call; it only returns the call to be executed. The advantage is that composing the call with cross-cutting concerns, such as authentication, can easily be done using ordinary function-based composition.
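The difference between returning a call and executing it can be shown without the framework. The sketch below assumes a hypothetical `Call` interface as a stand-in for a strict service call, and a hypothetical `authenticated` wrapper as the cross-cutting concern; neither is a Lagom type.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CompletionStage;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.BooleanSupplier;

// Framework-free sketch: Call and authenticated(...) are hypothetical stand-ins, not Lagom types.
final class DeferredCallSketch {

    /** A strict call: one request in, one asynchronous response out. */
    @FunctionalInterface
    interface Call<Req, Res> {
        CompletionStage<Res> invoke(Req request);
    }

    /** Counts how often the sayHello body actually runs. */
    static final AtomicInteger executions = new AtomicInteger();

    /** Returns the call without running it; the lambda body runs only on invoke(). */
    static Call<String, String> sayHello() {
        return name -> {
            executions.incrementAndGet();
            return CompletableFuture.completedFuture("Hello " + name);
        };
    }

    /** Wraps any call with a check that runs before the wrapped call is invoked. */
    static <Req, Res> Call<Req, Res> authenticated(BooleanSupplier isAllowed, Call<Req, Res> inner) {
        return request -> {
            if (!isAllowed.getAsBoolean()) {
                CompletableFuture<Res> denied = new CompletableFuture<>();
                denied.completeExceptionally(new SecurityException("not authenticated"));
                return denied;
            }
            return inner.invoke(request);
        };
    }

    public static void main(String[] args) {
        // Composing the call executes nothing yet.
        Call<String, String> call = authenticated(() -> true, sayHello());
        System.out.println("executions before invoke: " + executions.get());
        System.out.println(call.invoke("Alice").toCompletableFuture().join());
    }
}
```

Because the wrapper takes a call and returns a call, further concerns such as logging or metrics could be stacked in the same way.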

Having provided an implementation of the service, we can now register it with the Lagom framework. Lagom is built on top of the Play Framework, and so uses Play’s Guice-based dependency injection support to register components. To register a service, you’ll need to implement a Guice module. This is done by creating a class called Module in the root package.

Working with streamed messages requires the use of Akka Streams. The tick service call returns a Source that sends messages at the specified interval. Akka Streams has a helpful factory method for such a stream, Source.tick, which takes three arguments.

The first two arguments are the delay before messages should be sent, and the interval at which they should be sent. The third argument is the message that should be sent on each tick. Calling this service call with an interval of 1000 and a request message of tick returns a stream that sends a tick message every second.
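The three arguments (initial delay, interval, message) can be illustrated with a plain-Java analogue built on `ScheduledExecutorService`. This is not Akka Streams and has no backpressure; `TickSketch` and `collectTicks` are hypothetical names, and the `count` parameter exists only so that the example terminates.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

// Plain-Java analogue of a ticking source; an illustration only, not Akka Streams.
final class TickSketch {

    /** Emits message after initialDelayMs, then every intervalMs, and stops once count ticks arrived. */
    static List<String> collectTicks(long initialDelayMs, long intervalMs, String message, int count) {
        List<String> received = new CopyOnWriteArrayList<>();
        CountDownLatch done = new CountDownLatch(count);
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        try {
            scheduler.scheduleAtFixedRate(() -> {
                // Ignore ticks that fire after the requested number has been collected.
                if (done.getCount() > 0) {
                    received.add(message);
                    done.countDown();
                }
            }, initialDelayMs, intervalMs, TimeUnit.MILLISECONDS);
            done.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for ticks", e);
        } finally {
            scheduler.shutdownNow();
        }
        return received;
    }

    public static void main(String[] args) {
        // Same argument order as described in the text: delay, interval, message.
        System.out.println(collectTicks(1000, 1000, "tick", 3));
    }
}
```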

If you want to read from the request header or add something to the response header, you can use ServerServiceCall. If you’re implementing the service call directly, you can simply change the return type to HeaderServiceCall.

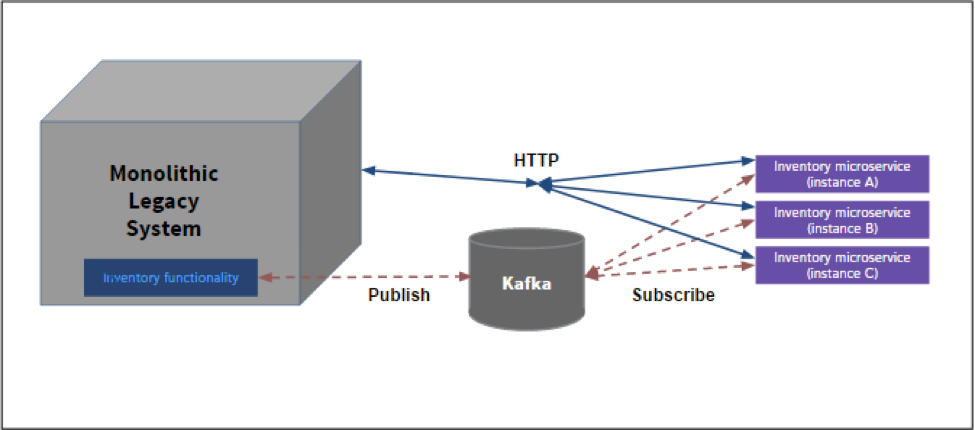

Publishing messages to a broker, such as Apache Kafka, decouples communication even further. Lagom’s Message Broker API provides at-least-once semantics and uses Kafka. If a new instance starts publishing information, its messages are appended to the events previously emitted. If a new instance subscribes to a topic, it will receive all events: past, present, and future. Topics are strongly typed, so both the subscriber and the producer know in advance what data to expect on the topic.

To publish data to a topic, a service needs to declare the topic in its service descriptor.

The syntax for declaring a topic resembles the syntax already used to define a service’s endpoints. The Descriptor.publishing method accepts a sequence of topic calls; each topic call can be defined via the Service.topic static method. The latter takes a topic name and a reference to a method that returns a Topic instance. Data flowing through a topic is serialized to JSON by default. It is possible to use a different serialization format by passing a different message serializer for each topic defined in a service descriptor.

The primary source of messages that Lagom is designed to produce is persistent entity events. Rather than publishing events in an ad-hoc fashion in response to things that happen, it is better to take the stream of events from your persistent entities and adapt that to a stream of messages sent to the message broker. This way, you can ensure that events are processed at least once by both publishers and consumers, which allows you to guarantee a very strong level of consistency throughout your system.

Lagom’s TopicProducer helper provides two methods for publishing a persistent entity’s event stream: singleStreamWithOffset for use with non-sharded read-side event streams, and taggedStreamWithOffset for use with sharded read-side event streams. Both methods take a callback that receives the last offset the topic producer published, so the event stream can be resumed from that offset via the PersistentEntityRegistry.eventStream method, which returns a read-side stream.

Here’s an example of publishing a single, non-sharded event stream:
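As a framework-free sketch of the resumption idea behind singleStreamWithOffset, the following plain-Java model keeps the last published offset and asks a callback for everything after it. All names here (`OffsetResumeSketch`, `Stamped`, `Producer`, `publishPending`) are hypothetical, offsets are assumed to be simple sequence numbers starting at 1, and the offset is held in memory where a real producer would store it durably.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.LongFunction;

// Simplified model of offset-based resumption; not Lagom's TopicProducer.
final class OffsetResumeSketch {

    /** An event paired with its position in the event log. */
    static final class Stamped {
        final long offset;
        final String event;
        Stamped(long offset, String event) { this.offset = offset; this.event = event; }
    }

    /** Stand-in for a read-side event stream: every event stored after fromOffset. */
    static List<Stamped> eventStream(List<String> log, long fromOffset) {
        List<Stamped> out = new ArrayList<>();
        for (int i = (int) fromOffset; i < log.size(); i++) {
            out.add(new Stamped(i + 1, log.get(i))); // the event at index i has offset i + 1
        }
        return out;
    }

    /** Publishes events to an in-memory "topic" and remembers how far it got. */
    static final class Producer {
        final List<String> topic = new ArrayList<>();
        private long lastPublishedOffset = 0; // 0 means nothing has been published yet
        private final LongFunction<List<Stamped>> streamFrom;

        Producer(LongFunction<List<Stamped>> streamFrom) { this.streamFrom = streamFrom; }

        /** Hands the last offset to the callback and publishes whatever comes back. */
        void publishPending() {
            for (Stamped s : streamFrom.apply(lastPublishedOffset)) {
                topic.add(s.event);
                lastPublishedOffset = s.offset;
            }
        }
    }

    public static void main(String[] args) {
        List<String> log = new ArrayList<>(List.of("greeted Alice", "greeted Bob"));
        Producer producer = new Producer(from -> eventStream(log, from));
        producer.publishPending();
        log.add("greeted Carol");
        producer.publishPending(); // resumes after the second event, so nothing is republished
        System.out.println(producer.topic);
    }
}
```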

To subscribe to a topic, a service just needs to call Topic.subscribe() on the topic of interest. For instance, if a service wants to collect all greeting messages published by the earlier HelloService, all it needs to do is @Inject the HelloService and subscribe to the greetings topic.

When calling Topic.subscribe(), you will get back a Subscriber instance. In the above code snippet, we have subscribed to the greetings topic using at-least-once delivery semantics. That means each message published to the greetings topic is received at least once. The subscriber also offers an atMostOnceSource that gives you at-most-once delivery semantics. If in doubt, default to using at-least-once delivery semantics.
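The practical difference between the two delivery semantics comes down to when the consumer's position is committed relative to handling the message. The plain-Java simulation below is a sketch under that assumption, not Lagom or Kafka code; `DeliverySketch` and `consume` are hypothetical names, and the crash is simulated once, between the two steps.

```java
import java.util.ArrayList;
import java.util.List;

// Simulation of commit ordering; an illustration of the semantics, not broker code.
final class DeliverySketch {

    /**
     * Consumes messages, crashing once on crashOn between the two steps (commit and handle),
     * then restarting from the committed position. Returns every message the handler processed.
     */
    static List<String> consume(List<String> messages, String crashOn, boolean commitBeforeHandling) {
        List<String> handled = new ArrayList<>();
        int committed = 0;       // survives the simulated restart
        boolean crashed = false; // ensures the crash happens only once
        while (committed < messages.size()) {
            try {
                for (int i = committed; i < messages.size(); i++) {
                    String msg = messages.get(i);
                    if (commitBeforeHandling) {
                        committed = i + 1;  // at-most-once: commit first
                    } else {
                        handled.add(msg);   // at-least-once: handle first
                    }
                    if (msg.equals(crashOn) && !crashed) {
                        crashed = true;
                        throw new IllegalStateException("simulated crash");
                    }
                    if (commitBeforeHandling) {
                        handled.add(msg);
                    } else {
                        committed = i + 1;
                    }
                }
            } catch (IllegalStateException restart) {
                // Restart: the loop resumes from the last committed position.
            }
        }
        return handled;
    }

    public static void main(String[] args) {
        List<String> messages = List.of("a", "b", "c");
        System.out.println("at-most-once:  " + consume(messages, "b", true));
        System.out.println("at-least-once: " + consume(messages, "b", false));
    }
}
```

In this model the at-most-once consumer loses the message it crashed on, while the at-least-once consumer handles it twice, which is why at-least-once consumers are usually paired with idempotent handlers.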

Finally, subscribers are grouped together via Subscriber.withGroupId. A subscriber group allows many nodes in your cluster to consume a message stream while ensuring that each message is delivered to only one node in the group rather than to all of them. Without subscriber groups, every node of a service would get every message in the stream, so the processing would be duplicated. By default, Lagom uses a group id that has the same name as the service consuming the topic.
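The effect of a shared group id can be modeled in plain Java. In the sketch below, each group receives every message once, and the members of a group split the stream between them. Distributing messages round-robin is a simplifying assumption (Kafka balances partitions across group members instead), and `SubscriberGroupSketch` and `deliver` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Simplified model of subscriber groups; round-robin distribution is an assumption.
final class SubscriberGroupSketch {

    /** Maps each node to the messages it receives, given each node's group id. */
    static Map<String, List<String>> deliver(List<String> messages, Map<String, String> groupIdByNode) {
        Map<String, List<String>> membersByGroup = new LinkedHashMap<>();
        Map<String, List<String>> received = new LinkedHashMap<>();
        for (Map.Entry<String, String> e : groupIdByNode.entrySet()) {
            membersByGroup.computeIfAbsent(e.getValue(), g -> new ArrayList<>()).add(e.getKey());
            received.put(e.getKey(), new ArrayList<>());
        }
        for (int i = 0; i < messages.size(); i++) {
            // Every group sees the message, but only one member of each group handles it.
            for (List<String> members : membersByGroup.values()) {
                String node = members.get(i % members.size());
                received.get(node).add(messages.get(i));
            }
        }
        return received;
    }

    public static void main(String[] args) {
        List<String> messages = List.of("m1", "m2", "m3", "m4");

        Map<String, String> sameGroup = new LinkedHashMap<>();
        sameGroup.put("node-a", "hello-consumer");
        sameGroup.put("node-b", "hello-consumer");
        System.out.println("shared group id:    " + deliver(messages, sameGroup));

        Map<String, String> separateGroups = new LinkedHashMap<>();
        separateGroups.put("node-a", "group-1");
        separateGroups.put("node-b", "group-2");
        System.out.println("separate group ids: " + deliver(messages, separateGroups));
    }
}
```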