13 API Metrics That Every Platform Team Should Be Tracking

The most important API metrics every API product manager and engineer should know, especially when you are looking into API analytics and reporting.

Join the DZone community and get the full member experience.

Join For Free

Identifying Key API Metrics

Infrastructure/DevOps

Application Engineering/Platform

Product Management

Business/Growth

Infrastructure API Metrics

1: Uptime

What is Synthetic Monitoring? As name implies, it is a predefined set of API calls that a server (usually by one of the providers of Monitoring services) triggers to call your service. While it doesn’t reflect real world experience of your users, it is useful to see the sequence of these APIs are as expected.

2: CPU Usage

3: Memory Usage

Application API Metrics

4: Request Per Minute (RPM)

5: Average and Max Latency

Because problematic slow endpoints may be hidden when looking only at aggregate latency, it’s critical to look at breakdowns of latency by route, by geography, and other fields to segment upon. For example, you may have a POST /checkout endpoint that’s slowly been increasing in latency over time which could be due to an ever-increasing SQL table size that’s not correctly indexed; however, due to a low volume of calls to POST /checkout, this issue is masked by your GET /items endpoint which is called for more than the checkout endpoint. Similarly, if you have a GraphQL API, you’ll want to look at the average latency per GraphQL operation.

We put latency under application/engineering even though many DevOps/Infrastructure teams will also look at latency. Usually, an infrastructure person looks at aggregate latency over a set of VMs to make sure the VMs are not overloaded, but they don’t drill down into application-specific metrics like per route.

6: Errors Per Minute

Similar to RPM, Errors per Minute (or error rate) are the number of API calls with non 200 families of status codes per minute and are critical for measuring how buggy and error-prone your API is. To track errors per minute, it’s important to understand what type of errors are happening. 500 errors could imply bad things are happening with your code whereas many 400 errors could imply user errors from a poorly designed or documented API. This means when designing your API, it’s important to use the appropriate HTTP status code.

You can further drill down into seeing where these errors come from. Many 401 Unauthorized errors from one specific geo region could imply bots are attempting to hack your API.

API Product Metrics

APIs are no longer just an engineering term associated with microservices and SOA. APIs as a product is becoming far more common especially among B2B companies who want to one-up their competition with new partners and revenue channels.

API-driven companies need to look at more than just engineering metrics like errors and latency to understand how their APIs are used (or why they are not being adopted as fast as planned). The role of ensuring the right features are built lies on the API product manager, a new role that many B2B companies are rushing to fill.

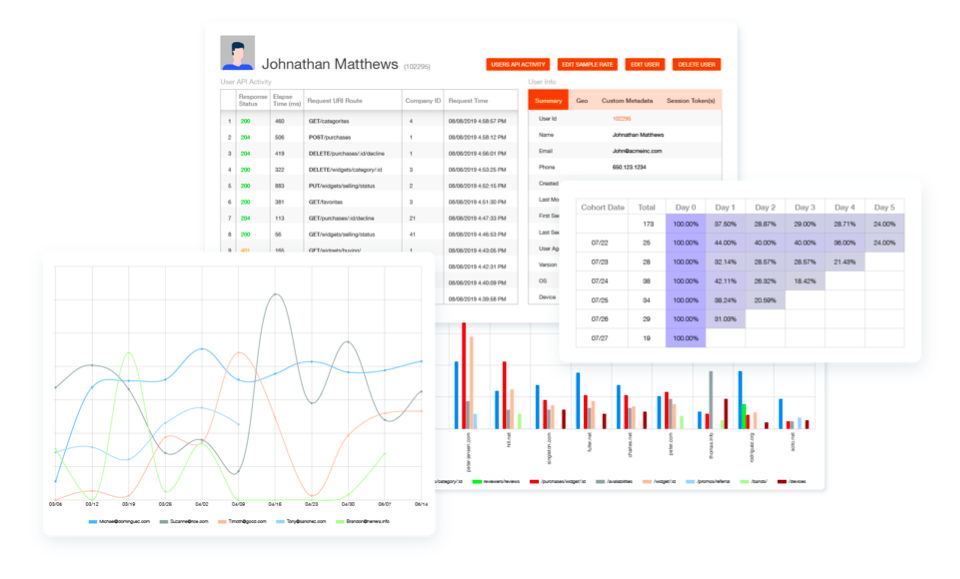

What is Moesif? Moesif is the most advanced API analytics platform used by Thousands of platforms to understand what your most loyal customers are doing with your APIs, how they’re accessing them, and from where. Moesif focuses on analyzing real customer data vs just synthetic tests to ensure you’re building the best possible API platform for your customers.

7: API Usage Growth

For many product managers, API usage (along with unique consumers) is the gold standard to measure API adoption. An API should not be just error-free, but growing month over month. Unlike requests per minute, API usage should be measured in longer intervals like days or months to understand real trends.

If measuring month-over-month API growth, we recommend choosing 28-days instead as it removes any bias due to weekend vs weekday usage and also differences in several days per month. For example, February may have only 28 days whereas the month before has a full 31 days causing February to appear to have lower usage.

8: Unique API Consumers

Because a month’s increase in API usage may be attributed to just a single customer account, it’s important to measure API DAU (Monthly Active Users) or unique consumers of an API. This metric can give you the overall health of new customer acquisition and growth. Many API platform teams correlate API MAU to their web MAU, to get full product health.

If web MAU is growing far faster than API MAU, then this could imply a leaky funnel during integration or implementation of a new solution. This is especially true when the core product of the company is an API such as for many B2B/SaaS companies. On the other hand, API MAU can be correlated to API usage to understand where that increased API usage came from (New vs. existing customers).

Tools like Moesif can track both individual users calling and API and also link them to companies or organizations.

9: Top Customers by API Usage

For any company with a focus on B2B, tracking the top API consumers can give you a huge advantage when it comes to understanding how your API is used and where to upsell opportunities exist. Many experienced product leaders know that many products exhibit power-law dynamics with a handful of power users having a disproportionate amount of usage compared to everyone else. Not surprisingly, these are the same power users that generally bring your company the most revenue and organic referrals.

This means its critical to track what your top 10 customers are doing with your API. You can further break this down by what endpoints they are calling and how they’re calling them. Do they use a specific endpoint much more than your non-power users? Maybe they found their ah-ha moment with your API.

10: API Retention

Should you spend more money on your product and engineering or put more money into growth? Retention and churn (the opposite of retention) can tell you which path to take. A product with high product retention is closer to product-market fit than a product with a churn issue. Unlike subscription retention, product retention tracks the actual usage of a product such as an API.

While the two are correlated, they are not the same. In general, product churn is a leading indicator of subscription churn since customers who don’t find value in an API may be stuck with a yearly contract while not actively using the API. API retention should be higher than web retention as web retention will include customers who logged in but didn’t necessarily integrate the platform yet. Whereas API retention looks at post-integrated customers.

11: Time to First Hello World (TTFHW)

TTFHW is an important KPI for not just tracking your API product health, but your overall developer experience aka DX. Especially if your API is an open platform attracting 3rd party developers and partners, you want to ensure they can get up and running as soon as possible to their first ah-ha moment. TTFHW measures how long it takes from the first visit to your landing page to an MVP integration that makes the first transaction through your API platform. This is a cross-functional metric tracking marketing, documentation, and tutorials, to the API itself.

12: API Calls per Business Transaction

While more equals better for many product and business metrics, it is important to keep the number of calls per business transactions as low as possible. This metric directly reflects the design of the API. If a new customer has to make three different calls and piece the data together, this can mean the API does not have the correct endpoints available. When designing an API, it’s important to think in terms of a business transaction or what the customer is trying to achieve rather than just features and endpoints. It may also mean your API is not flexible enough when it comes to filtering and pagination.

13: SDK and Version Adoption

Many API platform teams may also have a bunch of SDKs and integrations they maintain. Unlike mobile where you just have iOS and Android as the core mobile operating systems, you may have 10’s or even hundreds of SDKs. This can become a maintenance nightmare when rolling out new features.

You may selectively roll out critical features to your most popular SDKs whereas less critical features may be rolled out to less popular SDKs. Measuring API or SDK versions is also important when it comes to deprecating certain endpoints and features. You wouldn’t want to deprecate the endpoint that your highest paying customer is using without some consultation on why they are using it.

Business/Growth

Business/growth metrics can be similar to product metrics but focused on revenue, adoption, and customer success. For example, instead of looking at the top 10 customers by API usage, you may want to look at the top 10 customers by revenue, then by their endpoint usage. For tracking business growth, analytics tools like Moesif, support enriching user profiles with customer data from your CRM or other analytics services to have a better idea of who your API users are.

Conclusion

For anyone building and working with APIs, its critical to track the correct API metrics. Most companies would not launch a new web or mobile product without having the correct instrumentation for engineering and product. Similarly, you wouldn’t want to launch a new API without a way to the instrument and track the correct API metrics. Sometimes the KPIs for one team can blend into another team as we saw with the API usage metrics.

There can be different ways of looking at the same underlying metric. However, teams should stay focused on looking at the right metrics for their team. For example, product managers shouldn’t worry about CPU usage just like infrastructure teams shouldn’t worry about API retention. Tools like Moesif API Analytics can help you get started measuring these metrics with just a quick SDK installation.

Published at DZone with permission of Derric Gilling. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments