Apache RocketMQ: How We Lowered Latency

See how latency was lowered in Apache RocketMQ.

Apache RocketMQ

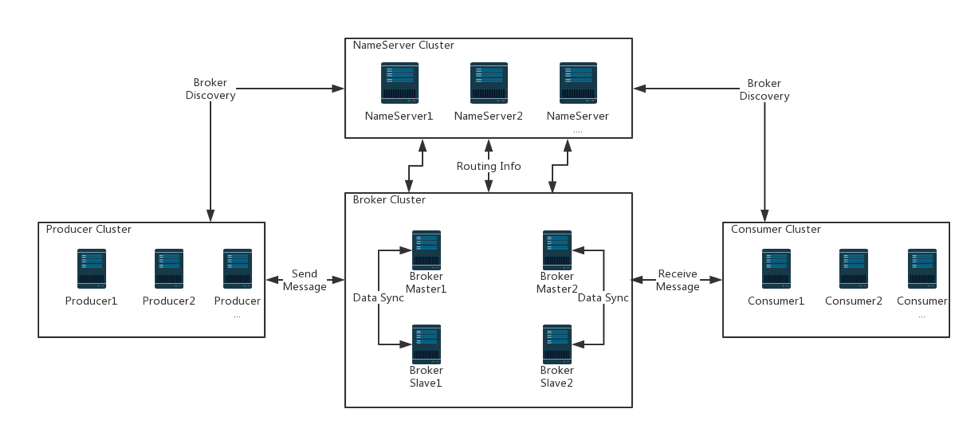

Apache RocketMQ is a distributed messaging and streaming data platform open sourced by Alibaba.

Here is the typical deployment topology:

At first glance, it looks pretty similar to other products, such as Kafka.

In fact, even the NameServer in the above diagram plays a role similar to the one ZooKeeper plays for Kafka.

To end the confusion, I would like to share a couple of lessons we learned in the process of shaping this project.

In this article, I’ll start with one of the major features: low latency.

Little’s Law

Little’s law states that occupancy = latency × throughput.

If occupancy is fixed, the lower the latency, the higher the throughput. To maximize throughput, we have to minimize latency.
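To make the relationship concrete, here is a minimal sketch that rearranges Little’s law into throughput = occupancy / latency. The class name, method name, and the figure of 100 in-flight requests are assumptions chosen for illustration, not numbers from RocketMQ.

```java
// Little's law rearranged: throughput = occupancy / latency.
final class LittlesLaw {
    /** Requests per second for a fixed occupancy and a mean latency in milliseconds. */
    static double throughputPerSecond(double occupancy, double latencyMillis) {
        if (latencyMillis <= 0) {
            throw new IllegalArgumentException("latency must be positive");
        }
        return occupancy * 1000.0 / latencyMillis;
    }

    public static void main(String[] args) {
        double occupancy = 100; // hypothetical: 100 requests in flight at any moment
        for (double latencyMillis : new double[] {10, 5, 1}) {
            System.out.printf("latency %.0f ms -> %.0f req/s%n",
                    latencyMillis, throughputPerSecond(occupancy, latencyMillis));
        }
    }
}
```

With occupancy held constant, halving the latency doubles the throughput, which is why the rest of this article concentrates on latency.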

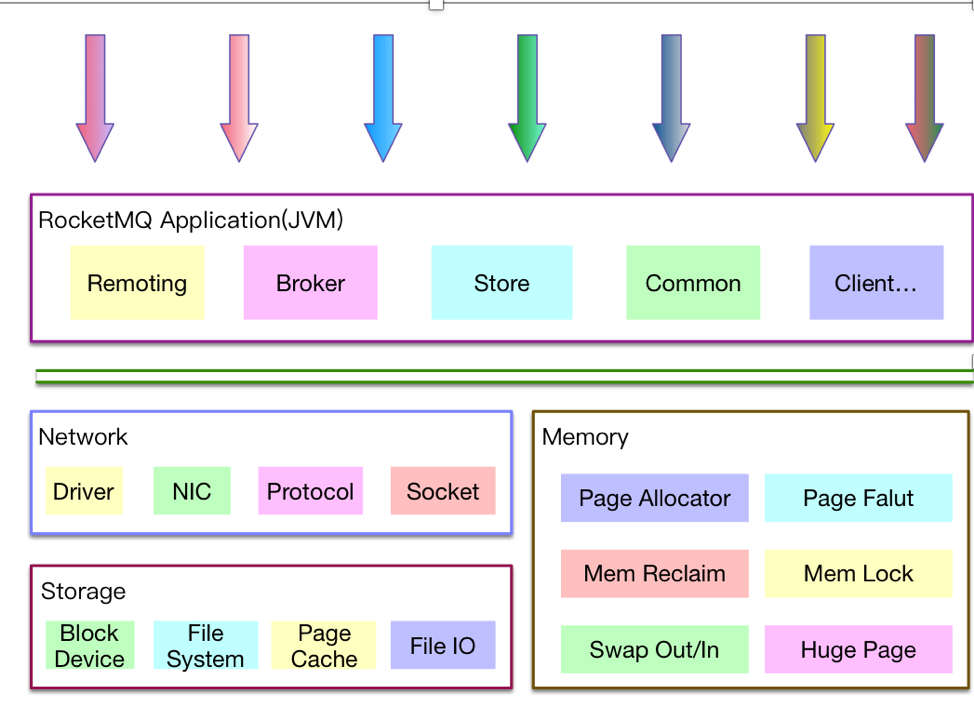

RocketMQ is a pure Java implementation. By “pure” I mean RocketMQ has developed its own memory, storage, and all the other modules, all of which have to be fine-tuned to avoid huge latency.

JVM Stall

JVM stalls happen a lot, for example during GC, JIT, RevokeBias, and RedefineClasses, to name a few. Many of us already know how to fine-tune GC: by adjusting heap size, changing GC timing, optimizing data structures, etc. But how do we tune the other JVM stalls?

One approach to the issue is to use -XX:+PrintGCApplicationStoppedTime with -XX:+PrintSafepointStatistics and -XX: flags to isolate the troublemaker. For example, if we can identify that RevokeBias is causing the stall, we can use -XX:-UseBiasedLocking to turn off biased locking.
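Once stopped-time logging is enabled, the log still has to be scanned for the pauses that matter. The following is a minimal sketch of such a scan, assuming the line shape that JDK 8 prints for -XX:+PrintGCApplicationStoppedTime. The class name, the threshold, and the sample lines are made up for illustration, and other JDK versions may format these lines differently.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class StoppedTimeScanner {
    // Assumed line shape from -XX:+PrintGCApplicationStoppedTime on JDK 8.
    private static final Pattern STOPPED = Pattern.compile(
            "Total time for which application threads were stopped: ([0-9.]+) seconds");

    /** Returns the pause durations, in seconds, that exceed the threshold. */
    static List<Double> longPauses(List<String> logLines, double thresholdSeconds) {
        List<Double> pauses = new ArrayList<>();
        for (String line : logLines) {
            Matcher m = STOPPED.matcher(line);
            if (m.find()) {
                double seconds = Double.parseDouble(m.group(1));
                if (seconds > thresholdSeconds) {
                    pauses.add(seconds);
                }
            }
        }
        return pauses;
    }

    public static void main(String[] args) {
        // Made-up sample lines, not taken from a real RocketMQ broker.
        List<String> sample = Arrays.asList(
                "Total time for which application threads were stopped: 0.0001814 seconds, Stopping threads took: 0.0000385 seconds",
                "Total time for which application threads were stopped: 0.2500000 seconds, Stopping threads took: 0.0000410 seconds");
        System.out.println("Pauses over 100 ms: " + longPauses(sample, 0.1));
    }
}
```

The timestamps of the long pauses can then be matched against the safepoint statistics output to see which VM operation was responsible.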

Memory Reclaim

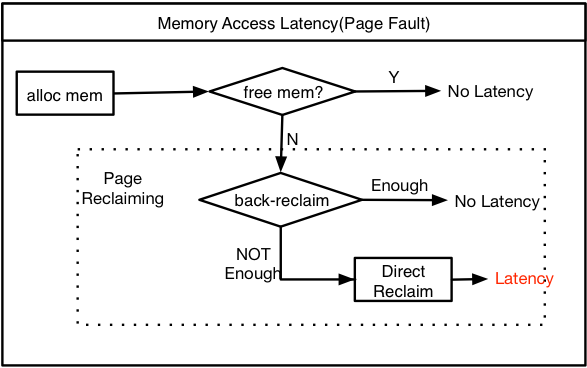

When RocketMQ requests new memory pages, there is a chance that the Linux kernel will enter a state called direct reclaim, which may cause huge latency.

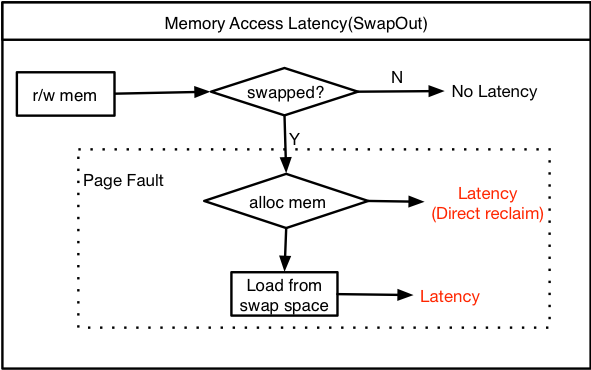

Another problem we ran into is that once the kernel has reclaimed memory, accessing a page that has to be loaded back from swap space may trigger file I/O, which leads to a huge delay.

To solve these two issues, we played with different combinations of vm.extra_free_kbytes and vm.swappiness to get the optimal result.
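Before experimenting with these settings, it helps to record what a host is currently using. Below is a minimal sketch that reads the two values from /proc/sys on Linux. The class and method names are hypothetical. vm.extra_free_kbytes is not available on every kernel, so a missing key is reported as absent instead of raising an error.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Optional;

final class SysctlReader {
    /** Maps a sysctl key such as "vm.swappiness" to its file under /proc/sys. */
    static Path pathFor(String key) {
        return Paths.get("/proc/sys", key.split("\\."));
    }

    /** Returns the current value, or empty if the key is missing or unreadable. */
    static Optional<String> read(String key) {
        try {
            byte[] bytes = Files.readAllBytes(pathFor(key));
            return Optional.of(new String(bytes, StandardCharsets.UTF_8).trim());
        } catch (IOException | SecurityException e) {
            return Optional.empty();
        }
    }

    public static void main(String[] args) {
        for (String key : new String[] {"vm.swappiness", "vm.extra_free_kbytes"}) {
            System.out.println(key + " = " + read(key).orElse("(not available on this kernel)"));
        }
    }
}
```

Reading the values this way is side-effect free. Changing them still requires root privileges and the usual sysctl tooling.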

In most Linux versions, the memory page size is 4 KB. If your application somehow creates a read/write race condition on the same page, the latency will be a huge problem. To avoid this issue, we tried different ways to coordinate memory access.
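Any coordination scheme first has to answer one question: do a given read and a given write land on the same page? The sketch below shows only that building block, assuming a 4 KB page size. It is not the coordination strategy used in RocketMQ, and all names in it are hypothetical.

```java
final class PageOverlap {
    static final long PAGE_SIZE = 4096; // assumption: 4 KB pages

    /** Index of the page that contains the given byte offset. */
    static long pageOf(long offset) {
        return offset / PAGE_SIZE;
    }

    /** True if the byte ranges [aStart, aStart + aLen) and [bStart, bStart + bLen) touch a common page. */
    static boolean sharePage(long aStart, long aLen, long bStart, long bLen) {
        if (aLen <= 0 || bLen <= 0) {
            return false; // an empty range touches no page
        }
        long aFirst = pageOf(aStart);
        long aLast = pageOf(aStart + aLen - 1);
        long bFirst = pageOf(bStart);
        long bLast = pageOf(bStart + bLen - 1);
        return aFirst <= bLast && bFirst <= aLast;
    }

    public static void main(String[] args) {
        // A write to bytes 0..99 and a read of bytes 4000..4049 both fall in page 0.
        System.out.println(sharePage(0, 100, 4000, 50));
        // A write filling page 0 and a read starting at byte 4096 fall in different pages.
        System.out.println(sharePage(0, 4096, 4096, 10));
    }
}
```

A caller could use such a check to delay or reroute a read while a write to the same page is still in flight.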

Conclusion

As they say: the devil is in the details. Many of the fine-tuning steps are trivial yet important. After the optimization, the project is able to handle very tough challenges.

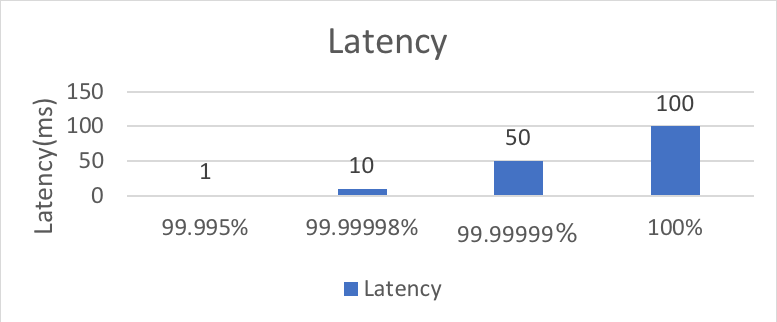

The following is the result of the latency benchmark:
