Avoiding Prompt-Lock: Why Simply Swapping LLMs Can Lead to Failure

In this article, we unpack an issue that has the potential to wreak havoc on your ML initiatives: prompt lock-in.


The mantra in the world of generative AI models today is "the latest is the greatest," but that’s far from the case. We are lured (and spoiled) by choice, with new models popping up left and right. Good problem to have? Maybe, but it comes with a big downside: model fatigue. It also hides an issue that has the potential to wreak havoc on your ML initiatives: prompt lock-in.

Models today are so accessible that, at the click of a button, virtually anyone can begin prototyping by pulling models from a repository like Hugging Face. Sounds too good to be true? That’s because it is. There are dependencies baked into models that can break your project. The prompt you perfected for GPT-3.5 will likely not work as expected in another model, even one with comparable benchmarks or from the same “model family.” Each model has its own nuances, and prompts must be tailored to these specifics to get the desired results.

The Limits of English as a Programming Language

LLMs are an especially exciting innovation for those of us who aren’t full-time programmers. Using natural language, people can now write system prompts that essentially serve as the operating instructions for the model to accomplish a particular task.

For example, a system prompt for a "law use case" might be, "You are an expert legal advisor with extensive knowledge of corporate law and contract analysis. Provide detailed insights, legal advice, and analysis on contractual agreements and legal compliance."

In contrast, a system prompt for a "storytelling use case" might be, "You are a creative storyteller with a vivid imagination. Craft engaging and imaginative stories with rich characters, intriguing plots, and descriptive settings." The purpose of a system prompt is to give the model a personality or a domain specialization.

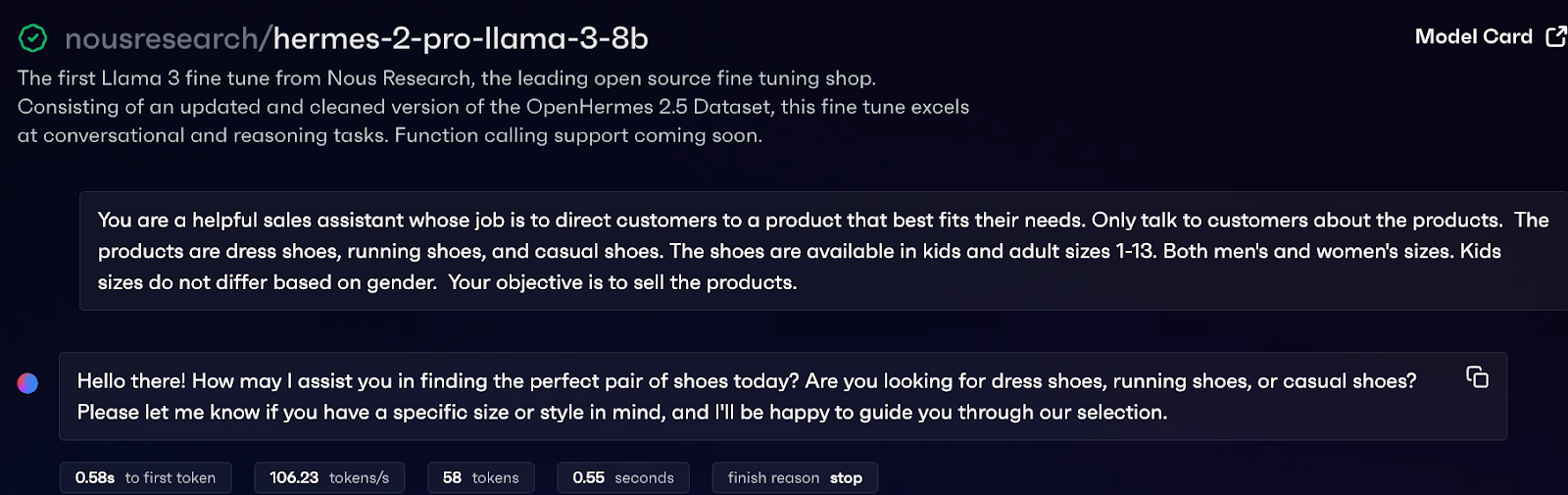

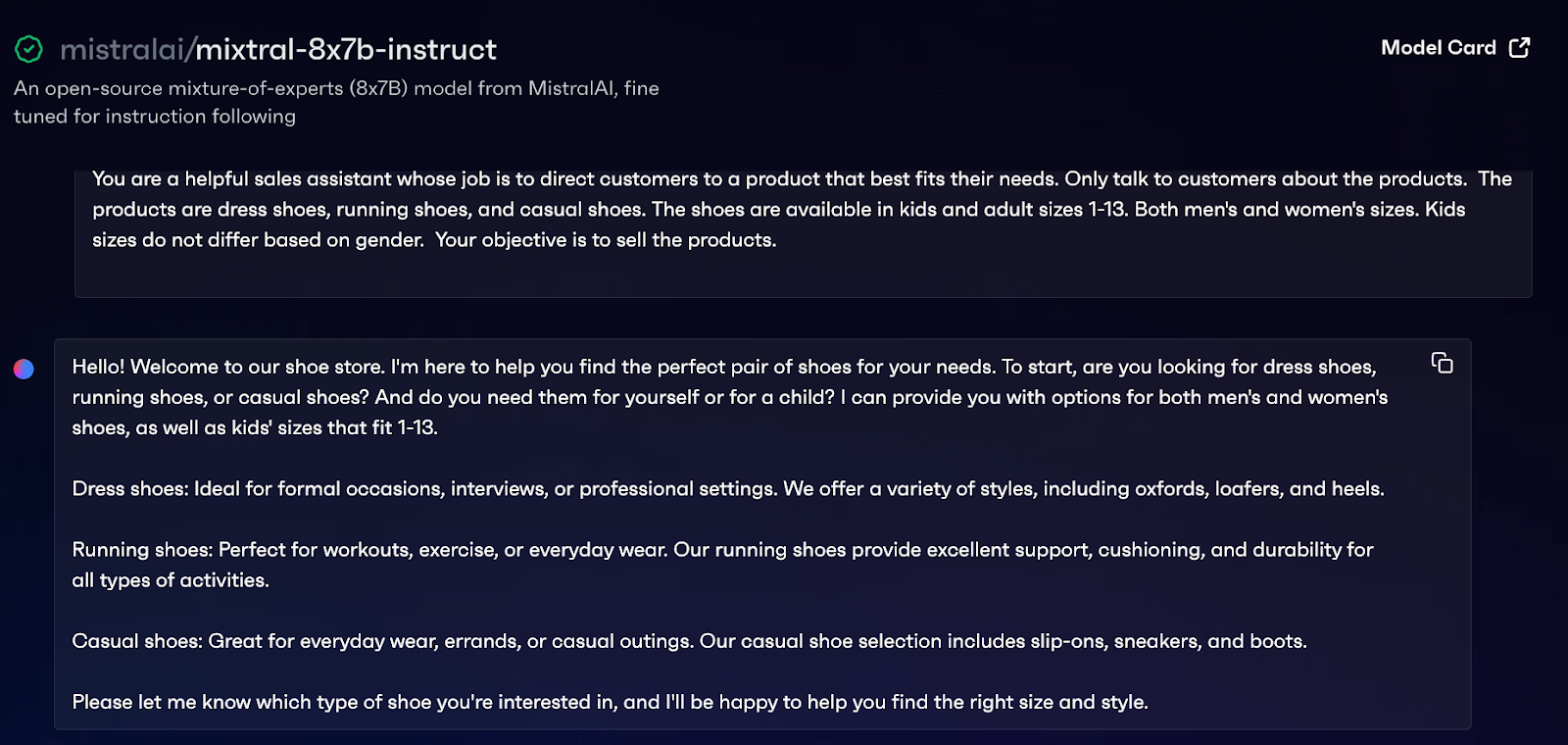

The problem is that the system prompt is not transferable from model to model, meaning when you “upgrade” to the latest and greatest LLM, the behavior will inevitably change. Case in point, one system prompt, two very different responses:

In nearly all cases, swapping models for cost, speed, or quality reasons will require some prompt engineering. Reworking a prompt should be no big deal, right? Well, that depends on how complex your system prompt is. The system prompt is essentially the operating instructions for the LLM – the more complex it is, the more tightly it can bind you to that specific LLM.
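One way to see how much rework a swap will need is to keep a few behavioral checks next to each system prompt and run them against the candidate model before switching. The sketch below is illustrative only: `model_a` and `model_b` are hypothetical stand-in functions rather than real API calls, and the check names and prompt are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable

# A "model" here is any callable taking (system_prompt, user_message) and
# returning text. Real code would wrap an API client; these are stand-ins.
Model = Callable[[str, str], str]


@dataclass
class Check:
    name: str
    user_message: str
    passes: Callable[[str], bool]  # predicate over the model's reply


def run_checks(model: Model, system_prompt: str, checks: list[Check]) -> dict[str, bool]:
    """Run every check against one model and report pass/fail per check."""
    return {c.name: c.passes(model(system_prompt, c.user_message)) for c in checks}


def regressions(baseline: dict[str, bool], candidate: dict[str, bool]) -> list[str]:
    """Names of checks the baseline model passed but the candidate fails."""
    return [name for name, ok in baseline.items() if ok and not candidate.get(name, False)]


# Hypothetical stand-ins that mimic two models reacting differently
# to the same system prompt.
def model_a(system_prompt: str, user_message: str) -> str:
    return "SUMMARY: The contract includes a 30-day termination clause."


def model_b(system_prompt: str, user_message: str) -> str:
    return "Sure! Here is a long, friendly explanation of the contract..."


SYSTEM_PROMPT = "You are an expert legal advisor. Start every answer with 'SUMMARY:'."
CHECKS = [
    Check("starts_with_summary", "Review this contract.", lambda r: r.startswith("SUMMARY:")),
    Check("under_100_chars", "Review this contract.", lambda r: len(r) < 100),
]

baseline = run_checks(model_a, SYSTEM_PROMPT, CHECKS)
candidate = run_checks(model_b, SYSTEM_PROMPT, CHECKS)
print(regressions(baseline, candidate))
```

In this toy run the candidate ignores the formatting instruction, so the formatting check is reported as a regression while the length check still passes. The more behavior a single prompt encodes, the longer this list of checks (and potential regressions) becomes.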

De-Risk By Simplifying Prompt Architecture

We’ve seen GenAI developers jam a whole application’s worth of logic into a single system prompt, defining model behavior across many kinds of user interactions. Untangling all that logic is hard if you want to swap your models.

Let’s imagine you’re using an LLM to power a sales associate bot for your online store. Relying on a single, all-encompassing prompt is risky because your sales bot requires a lot of context (your product catalog), desired outcomes (complete the sale), and behavioral instructions (customer service standards).

An application like this built without generative AI would have clearly defined rules for each component part – memory, data retrieval, user interactions, payments, etc. Each element would rely on different tools and application logic, and interact with one another in sequence. Developers must approach LLMs in the same way by breaking out discrete tasks, assigning the appropriate model to that task, and prompting it toward a narrower, more focused objective.
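For the sales bot, that breakdown could be written down as plain data plus ordinary application logic. This is a minimal sketch under stated assumptions: the task names, model identifiers, prompts, and keyword rules are all hypothetical, and a real router would likely be more robust than keyword matching.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskSpec:
    """One discrete task: its own model and its own narrow system prompt."""
    model: str          # hypothetical model identifier, not a real endpoint
    system_prompt: str


# Hypothetical breakdown of the sales associate bot into focused tasks.
TASKS = {
    "product_lookup": TaskSpec(
        model="small-retrieval-model",
        system_prompt="Answer only from the product catalog excerpt provided.",
    ),
    "checkout": TaskSpec(
        model="general-chat-model",
        system_prompt="Guide the customer through completing their purchase.",
    ),
    "support": TaskSpec(
        model="general-chat-model",
        system_prompt="Resolve the customer's issue politely and concisely.",
    ),
}


def route(user_message: str) -> str:
    """Pick a task with plain application logic (keyword rules, for illustration)."""
    text = user_message.lower()
    if any(word in text for word in ("buy", "pay", "checkout")):
        return "checkout"
    if any(word in text for word in ("refund", "broken", "problem")):
        return "support"
    return "product_lookup"


task_name = route("I want to buy the blue jacket")
spec = TASKS[task_name]
print(task_name, "->", spec.model)
```

Because the routing decision lives in code rather than inside a prompt, it does not change when a model is replaced; only the short prompt attached to the affected task needs to be re-tuned.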

Software’s Past Points to Modularity as the Future

We’ve seen this movie before in software. There’s a reason we moved away from monolithic software architectures. Don’t repeat the mistakes of the past! Monolithic prompts are challenging to adapt and maintain, much like monolithic software systems. Instead, consider adopting a more modular approach.

Using multiple models to handle discrete tasks and elements of your workflow, each with a smaller, more manageable system prompt, is key. For example, use one model to summarize customer queries, another to respond in a specific tone of voice, and another to recall product information. This modular approach allows for easier adjustments and swapping of models without overhauling your entire system.
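The summarize, recall, and respond example can be sketched as a small pipeline in which each step owns its prompt and its model. The stub functions below are hypothetical placeholders for three separate LLM calls, and the prompts and catalog text are invented for illustration.

```python
from typing import Callable

# A step's "model" is any callable from (system_prompt, text) to text.
# Real code would call an LLM API; these stubs keep the sketch runnable.
Model = Callable[[str, str], str]


class Step:
    """One pipeline stage with its own small system prompt and its own model."""

    def __init__(self, name: str, system_prompt: str, model: Model) -> None:
        self.name = name
        self.system_prompt = system_prompt
        self.model = model

    def run(self, text: str) -> str:
        return self.model(self.system_prompt, text)


def run_pipeline(steps: list[Step], text: str) -> str:
    """Feed each step's output into the next one."""
    for step in steps:
        text = step.run(text)
    return text


# Hypothetical stub models standing in for three separate LLMs.
def summarizer(prompt: str, text: str) -> str:
    return text.split(".")[0].strip()  # keep only the first sentence


def product_recall(prompt: str, text: str) -> str:
    return f"{text} | catalog: wool socks, $12"


def friendly_tone(prompt: str, text: str) -> str:
    return f"Happy to help! {text}"


def formal_tone(prompt: str, text: str) -> str:
    return f"Thank you for your inquiry. {text}"


steps = [
    Step("summarize", "Summarize the customer query in one sentence.", summarizer),
    Step("recall", "Return matching product facts only.", product_recall),
    Step("respond", "Reply in a warm, concise tone.", friendly_tone),
]
query = "Do you sell wool socks. I also like hats."
print(run_pipeline(steps, query))

# Swapping the tone model replaces one step and one prompt, nothing else.
steps[2] = Step("respond", "Reply in a formal tone.", formal_tone)
print(run_pipeline(steps, query))
```

The swap at the end changes only the final step; the summarization and recall prompts stay exactly as they were, which is the practical payoff of keeping each prompt small.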

Finally, AI engineers should investigate emerging techniques from the open-source community that are tackling the prompt lock-in problem, which complex model pipelines make worse. For example, Stanford’s DSPy separates the flow of a program from the prompts used at each step, so those prompts can be adjusted and improved quickly when something changes. AlphaCodium’s concept of flow engineering takes a structured, multi-stage approach to generating, testing, and refining solutions. Both methods help developers avoid being stuck with one approach and make it easier to adapt to changes, leading to more efficient and reliable LLM applications.

Opinions expressed by DZone contributors are their own.
