Avro's Built-In Sorting


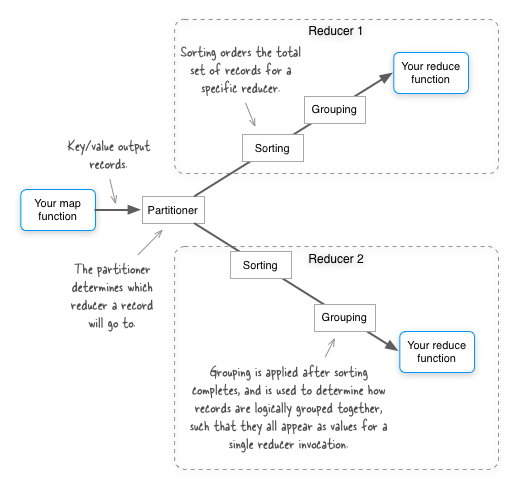

Avro has a little-known gem of a feature that lets you control which fields in an Avro record are used for partitioning, sorting, and grouping in MapReduce. The figure above gives a quick refresher on what these terms mean. Oh, and don't take the placement of “sorting” literally: sorting actually occurs on both the map and reduce sides, but it's always performed in the context of a specific partition (i.e., for a specific reducer).
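To make the three terms concrete, here is a toy, in-memory model of the shuffle in plain Java. It is a sketch under simplifying assumptions, not Hadoop code: `ShuffleModel` and its methods are hypothetical names, keys are plain strings, and one `TreeMap` per reducer stands in for both the sort and the grouping. The partition formula is the one Hadoop's default `HashPartitioner` uses.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.TreeMap;

// Toy in-memory model of the MapReduce shuffle (an illustration, not Hadoop code):
// partition -> sort within each partition -> group equal keys into one reduce() call.
class ShuffleModel {

    // Partitioning: pick a reducer for a key (same formula as Hadoop's HashPartitioner).
    static int partition(String key, int numReducers) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReducers;
    }

    // Returns one TreeMap per reducer. The TreeMap keeps that reducer's keys sorted
    // (sorting), and each entry's list holds the values one reduce() call would see
    // for that key (grouping).
    static List<TreeMap<String, List<Integer>>> shuffle(
            List<String> keys, List<Integer> values, int numReducers) {
        List<TreeMap<String, List<Integer>>> reducers = new ArrayList<>();
        for (int r = 0; r < numReducers; r++) {
            reducers.add(new TreeMap<String, List<Integer>>());
        }
        for (int i = 0; i < keys.size(); i++) {
            String key = keys.get(i);
            reducers.get(partition(key, numReducers))
                    .computeIfAbsent(key, k -> new ArrayList<Integer>())
                    .add(values.get(i));
        }
        return reducers;
    }

    public static void main(String[] args) {
        List<TreeMap<String, List<Integer>>> out =
                shuffle(Arrays.asList("sfo", "iad", "sfo"), Arrays.asList(1, 2, 3), 2);
        for (int r = 0; r < out.size(); r++) {
            System.out.println("reducer " + r + ": " + out.get(r));
        }
    }
}
```

Both `sfo` values end up in a single group on a single reducer, because equal keys always hash to the same partition.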

By default, all the fields in an Avro map output key are used for partitioning, sorting, and grouping in MapReduce. Let's walk through an example and see how this works. You'll begin with a simple schema (GitHub source):

```json
{"type": "record", "name": "com.alexholmes.avro.WeatherNoIgnore",
 "doc": "A weather reading.",
 "fields": [
   {"name": "station", "type": "string"},
   {"name": "time", "type": "long"},
   {"name": "temp", "type": "int"},
   {"name": "counter", "type": "int", "default": 0}
 ]
}
```

We're going to see what happens when we run a MapReduce job against a small sample data set, which we'll generate using Avro code (GitHub source):

```java
// tmpFolder: a temporary-folder helper in the test (e.g. a JUnit TemporaryFolder rule).
// AvroFiles.createFile: a helper from the example's source that writes the records to an Avro file.
File input = tmpFolder.newFile("input.txt");
AvroFiles.createFile(input, WeatherNoIgnore.SCHEMA$, Arrays.asList(
    WeatherNoIgnore.newBuilder().setStation("sfo").setTime(1).setTemp(3).build(),
    WeatherNoIgnore.newBuilder().setStation("iad").setTime(1).setTemp(1).build(),
    WeatherNoIgnore.newBuilder().setStation("sfo").setTime(2).setTemp(1).build(),
    WeatherNoIgnore.newBuilder().setStation("sfo").setTime(1).setTemp(2).build(),
    WeatherNoIgnore.newBuilder().setStation("sfo").setTime(1).setTemp(1).build()
).toArray());
```

To understand how Avro is partitioning, sorting, and grouping the data, we'll write an identity mapper and reducer, with a small enhancement to the reducer: it increments the `counter` field for each record it sees within a single reduce() call (GitHub source):

```java
package com.alexholmes.avro.sort.basic;

import com.alexholmes.avro.WeatherNoIgnore;
import org.apache.avro.mapred.AvroKey;
import org.apache.avro.mapred.AvroValue;
import org.apache.avro.mapreduce.AvroJob;
import org.apache.avro.mapreduce.AvroKeyInputFormat;
import org.apache.avro.mapreduce.AvroKeyOutputFormat;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class AvroSort {

  // Identity mapper: emits each record as both the map output key and value.
  private static class SortMapper
      extends Mapper<AvroKey<WeatherNoIgnore>, NullWritable,
                     AvroKey<WeatherNoIgnore>, AvroValue<WeatherNoIgnore>> {
    @Override
    protected void map(AvroKey<WeatherNoIgnore> key, NullWritable value, Context context)
        throws IOException, InterruptedException {
      context.write(key, new AvroValue<WeatherNoIgnore>(key.datum()));
    }
  }

  // Identity reducer that numbers the values within each reduce() call
  // by writing 1, 2, 3, ... into the counter field.
  private static class SortReducer
      extends Reducer<AvroKey<WeatherNoIgnore>, AvroValue<WeatherNoIgnore>,
                      AvroKey<WeatherNoIgnore>, NullWritable> {
    @Override
    protected void reduce(AvroKey<WeatherNoIgnore> key,
                          Iterable<AvroValue<WeatherNoIgnore>> values, Context context)
        throws IOException, InterruptedException {
      int counter = 1; // starts again at 1 for every group, i.e. every reduce() call
      for (AvroValue<WeatherNoIgnore> weatherNoIgnore : values) {
        weatherNoIgnore.datum().setCounter(counter++);
        context.write(new AvroKey<WeatherNoIgnore>(weatherNoIgnore.datum()),
            NullWritable.get());
      }
    }
  }

  public boolean runMapReduce(final Job job, Path inputPath, Path outputPath)
      throws Exception {
    FileInputFormat.setInputPaths(job, inputPath);
    job.setInputFormatClass(AvroKeyInputFormat.class);
    AvroJob.setInputKeySchema(job, WeatherNoIgnore.SCHEMA$);

    job.setMapperClass(SortMapper.class);
    // The map output key schema determines how keys are partitioned, sorted and grouped.
    AvroJob.setMapOutputKeySchema(job, WeatherNoIgnore.SCHEMA$);
    AvroJob.setMapOutputValueSchema(job, WeatherNoIgnore.SCHEMA$);

    job.setReducerClass(SortReducer.class);
    AvroJob.setOutputKeySchema(job, WeatherNoIgnore.SCHEMA$);
    job.setOutputFormatClass(AvroKeyOutputFormat.class);
    FileOutputFormat.setOutputPath(job, outputPath);

    return job.waitForCompletion(true);
  }
}
```

If you look at the output of the job below, you'll see that the records are sorted across all the fields, in field ordinal order. What this means is that when MapReduce sorts these records, it compares the `station` field first, then the `time` field, and so on, following the order of the fields in the Avro schema. This is pretty much what you'd expect if you wrote your own complex `Writable` type whose comparator compared all the fields in order.

```
{"station": "iad", "time": 1, "temp": 1, "counter": 1}
{"station": "sfo", "time": 1, "temp": 1, "counter": 1}
{"station": "sfo", "time": 1, "temp": 2, "counter": 1}
{"station": "sfo", "time": 1, "temp": 3, "counter": 1}
{"station": "sfo", "time": 2, "temp": 1, "counter": 1}
```
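The ordinal-order comparison described above can be mimicked with a hand-written comparator, much as you might write one for a custom `Writable`. This is a plain-Java sketch, not Avro's own comparator: `OrdinalOrderSort` and its `Reading` class are hypothetical stand-ins for the generated `WeatherNoIgnore` class, and `String.compareTo` agrees with Avro's code-point string ordering only for plain ASCII values such as these station codes.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Plain-Java sketch of "compare every field in schema (ordinal) order".
class OrdinalOrderSort {

    // Hypothetical stand-in for the generated WeatherNoIgnore record.
    static final class Reading {
        final String station;
        final long time;
        final int temp;
        final int counter;

        Reading(String station, long time, int temp, int counter) {
            this.station = station;
            this.time = time;
            this.temp = temp;
            this.counter = counter;
        }

        @Override
        public String toString() {
            return station + "/" + time + "/" + temp + "/" + counter;
        }
    }

    // station first, then time, then temp, then counter: the schema's field order.
    static final Comparator<Reading> ALL_FIELDS =
            Comparator.comparing((Reading r) -> r.station)
                    .thenComparingLong(r -> r.time)
                    .thenComparingInt(r -> r.temp)
                    .thenComparingInt(r -> r.counter);

    // The five sample readings in input order; counter holds its schema default of 0.
    static List<Reading> sample() {
        return Arrays.asList(
                new Reading("sfo", 1, 3, 0),
                new Reading("iad", 1, 1, 0),
                new Reading("sfo", 2, 1, 0),
                new Reading("sfo", 1, 2, 0),
                new Reading("sfo", 1, 1, 0));
    }

    static List<String> sortedSample() {
        List<Reading> readings = new ArrayList<>(sample());
        readings.sort(ALL_FIELDS);
        List<String> result = new ArrayList<>();
        for (Reading r : readings) {
            result.add(r.toString());
        }
        return result;
    }

    public static void main(String[] args) {
        sortedSample().forEach(System.out::println);
    }
}
```

Sorting the five sample readings this way yields the same station, time, and temp order as the job output above.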

Oh, and before we move on, notice that the value of the `counter` field is always `1`, meaning that each reduce() call was fed only a single key/value pair. That makes sense: our identity mapper emitted a single value for each key, the keys are unique, and the MapReduce partitioner, sorter, and grouper were using all the fields in the record.

Excluding Fields for Sorting

Avro lets us indicate that specific fields should be ignored when ordering records, by setting `"order": "ignore"` on those fields in the schema. In MapReduce, these fields are then ignored for sorting, partitioning, and grouping, which basically means that we have the ability to perform secondary sorting. Let's examine the following schema (GitHub source):

```json
{"type": "record", "name": "com.alexholmes.avro.Weather",
 "doc": "A weather reading.",
 "fields": [
   {"name": "station", "type": "string"},
   {"name": "time", "type": "long"},
   {"name": "temp", "type": "int", "order": "ignore"},
   {"name": "counter", "type": "int", "order": "ignore", "default": 0}
 ]
}
```

It's pretty much identical to the first schema; apart from the record name, the only difference is that the last two fields are flagged as “ignored” for sorting, partitioning, and grouping. Let's run the same MapReduce code (GitHub source) as above, modified only to work with the different schema, and examine the output.

```
{"station": "iad", "time": 1, "temp": 1, "counter": 1}
{"station": "sfo", "time": 1, "temp": 3, "counter": 1}
{"station": "sfo", "time": 1, "temp": 2, "counter": 2}
{"station": "sfo", "time": 1, "temp": 1, "counter": 3}
{"station": "sfo", "time": 2, "temp": 1, "counter": 1}
```

There are a couple of notable differences between this output and the output from the previous schema, which didn't have any ignored fields. First, it's clear that the `temp` field isn't being used in the sorting, which makes sense since we specified in the schema that it should be ignored. More interestingly, though, note the value of the `counter` field: all records that had identical `station` and `time` values went to the same reducer invocation, as evidenced by the increasing value of `counter`. This is essentially secondary sort!
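Here is a plain-Java model of what the ignored fields do, under simplifying assumptions: a single reducer (so partitioning drops out), records as `Object[]` rows of `{station, time, temp, counter}`, and a `boolean[]` standing in for the `"order": "ignore"` flags. `IgnoreOrderModel` and its methods are hypothetical names; this sketches the idea rather than Avro's implementation. The reduce step starts a new group whenever the comparator reports that the current key differs from the previous one, and numbers the values within each group the way `SortReducer` does.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Plain-Java model (not Avro's implementation) of an ignore-aware comparator,
// reduce-side grouping, and the counter-setting reducer.
// Rows are Object[] {station, time, temp, counter}.
class IgnoreOrderModel {

    // ignore[i] == true plays the role of "order": "ignore" on field i.
    // Fields are compared in schema order; the first non-zero result wins.
    static Comparator<Object[]> comparatorFor(boolean[] ignore) {
        return (a, b) -> {
            for (int i = 0; i < ignore.length; i++) {
                if (ignore[i]) {
                    continue; // ignored fields never influence ordering or grouping
                }
                @SuppressWarnings("unchecked")
                int c = ((Comparable<Object>) a[i]).compareTo(b[i]);
                if (c != 0) {
                    return c;
                }
            }
            return 0;
        };
    }

    static final boolean[] NO_IGNORE = {false, false, false, false};         // like WeatherNoIgnore
    static final boolean[] IGNORE_TEMP_COUNTER = {false, false, true, true}; // like Weather

    static List<Object[]> sample() {
        return Arrays.<Object[]>asList(
                new Object[] {"sfo", 1L, 3, 0},
                new Object[] {"iad", 1L, 1, 0},
                new Object[] {"sfo", 2L, 1, 0},
                new Object[] {"sfo", 1L, 2, 0},
                new Object[] {"sfo", 1L, 1, 0});
    }

    // Sort, then walk the sorted rows: a key that compares unequal to the previous
    // one starts a new reduce() call (counter back to 1); an equal key continues it.
    static List<String> reduceWithCounters(List<Object[]> input, Comparator<Object[]> cmp) {
        List<Object[]> sorted = new ArrayList<>(input);
        sorted.sort(cmp); // List.sort is stable: ties keep their input order
        List<String> out = new ArrayList<>();
        Object[] previous = null;
        int counter = 0;
        for (Object[] row : sorted) {
            counter = (previous != null && cmp.compare(previous, row) == 0) ? counter + 1 : 1;
            out.add(row[0] + "/" + row[1] + "/" + row[2] + " counter=" + counter);
            previous = row;
        }
        return out;
    }

    public static void main(String[] args) {
        reduceWithCounters(sample(), comparatorFor(IGNORE_TEMP_COUNTER))
                .forEach(System.out::println);
    }
}
```

With `NO_IGNORE` every record forms its own group, so every counter is 1, as in the first job; with `IGNORE_TEMP_COUNTER` the three `sfo`/`1` readings share one group and get counters 1, 2, and 3, as in the second job. Java's `List.sort` is stable, so tied records keep their input order here; a real MapReduce shuffle makes no such promise about the order of values within a group.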

now, all of this greatness isn’t without some limitations:

- You can't support two MapReduce jobs that use the same Avro key but have different sorting/partitioning/grouping requirements, although it's conceivable that you could create a new instance of the Avro schema and set the ignored flags for these fields yourself.

- The partitioner, sorter, and grouping functions in MapReduce all work off the same fields (i.e., they all skip the fields that are set as ignored in the schema). This means that your options for secondary sorting are limited. For example, you wouldn't be able to send all the records for a station to the same reducer and then group by station and time.

- Ordering uses a field's ordinal position to determine its order within the overall set of fields to be ordered. In other words, in a two-field record, the first field is always compared before the second. There's no way to change this behavior other than flipping the order of the fields in the record.
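In plain Hadoop, the three roles from the second limitation can be configured independently with `Job.setPartitionerClass`, `Job.setSortComparatorClass`, and `Job.setGroupingComparatorClass`. The in-memory simulation below (a hypothetical `DecoupledShuffle` class, not Hadoop or Avro code) shows the combination that a single set of schema ordering flags can't express: partitioning on `station` alone while sorting and grouping on `station` and `time`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Plain-Java simulation (not Hadoop or Avro code) of a shuffle whose partitioner
// uses fewer fields than its grouping comparator. Rows are {station, time, temp}.
class DecoupledShuffle {

    // Partition on station only, so every reading for a station meets on one reducer.
    static int partitionByStation(Object[] row, int numReducers) {
        return (row[0].hashCode() & Integer.MAX_VALUE) % numReducers;
    }

    // Sort and group on station, then time.
    static final Comparator<Object[]> STATION_TIME =
            Comparator.comparing((Object[] r) -> (String) r[0])
                    .thenComparingLong(r -> (Long) r[1]);

    static List<Object[]> sample() {
        return Arrays.<Object[]>asList(
                new Object[] {"sfo", 1L, 3},
                new Object[] {"iad", 1L, 1},
                new Object[] {"sfo", 2L, 1},
                new Object[] {"sfo", 1L, 2},
                new Object[] {"sfo", 1L, 1});
    }

    // For each reducer, the "station/time" label of every group (reduce() call) it receives.
    static List<List<String>> groupsPerReducer(List<Object[]> input, int numReducers) {
        List<List<Object[]>> partitions = new ArrayList<>();
        for (int i = 0; i < numReducers; i++) {
            partitions.add(new ArrayList<Object[]>());
        }
        for (Object[] row : input) {
            partitions.get(partitionByStation(row, numReducers)).add(row);
        }
        List<List<String>> result = new ArrayList<>();
        for (List<Object[]> partition : partitions) {
            partition.sort(STATION_TIME);
            List<String> groups = new ArrayList<>();
            Object[] previous = null;
            for (Object[] row : partition) {
                if (previous == null || STATION_TIME.compare(previous, row) != 0) {
                    groups.add(row[0] + "/" + row[1]); // a new reduce() call starts here
                }
                previous = row;
            }
            result.add(groups);
        }
        return result;
    }

    public static void main(String[] args) {
        List<List<String>> groups = groupsPerReducer(sample(), 3);
        for (int r = 0; r < groups.size(); r++) {
            System.out.println("reducer " + r + ": " + groups.get(r));
        }
    }
}
```

All `sfo` records land on the same reducer, yet they still arrive as two separate groups (`sfo/1` and `sfo/2`), that is, two reduce() calls.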

Having said all of that, the “ignoring fields” feature for sorting is pretty awesome, and something that will no doubt come in handy in my future MapReduce work.

Published at DZone with permission of Alex Holmes, DZone MVB. See the original article here.
