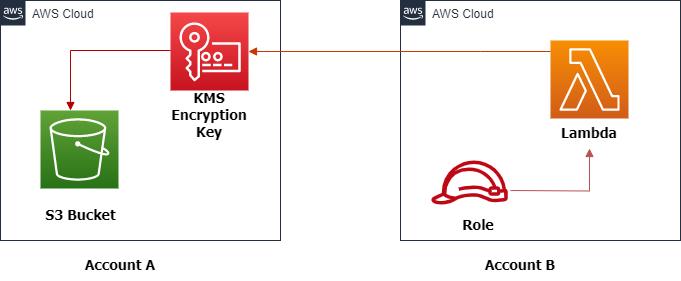

AWS Cross Account S3 Access Through Lambda Functions

In this article, you will learn how to set up cross-account S3 access from AWS Lambda functions, with each configuration step covered in detail.

This article describes the steps for setting up a Lambda function in one AWS account to access an S3 bucket in another account. It walks through the configuration on both sides: the S3 bucket, bucket policy, and KMS key policy in the bucket-owning account, and the IAM role and Lambda function in the calling account. It is aimed at AWS developers who want to understand what cross-account S3 access requires and how to configure Lambda for it.

Prerequisites

To create this solution, you must have the following prerequisites:

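- Two AWS accounts, referred to below as Account A (which owns the S3 bucket) and Account B (which runs the Lambda function).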

- Python 3, preferably the latest version.

- Basic knowledge of AWS SDK for Python (boto3).

- Basic knowledge of S3 and KMS Services.

- Basic knowledge of Lambda and IAM Roles.

Setup in Account A

In AWS Account A, create the following resources step by step.

- Create S3 Bucket

- Set up S3 bucket policy

- Set up KMS key

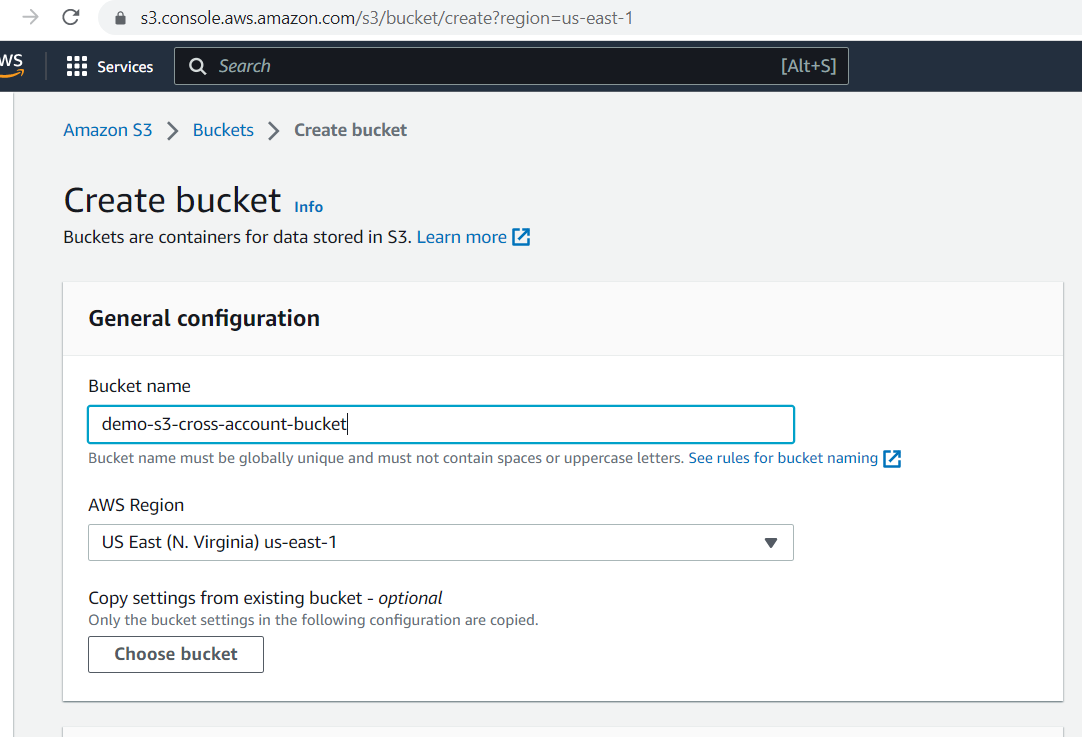

Create S3 Bucket

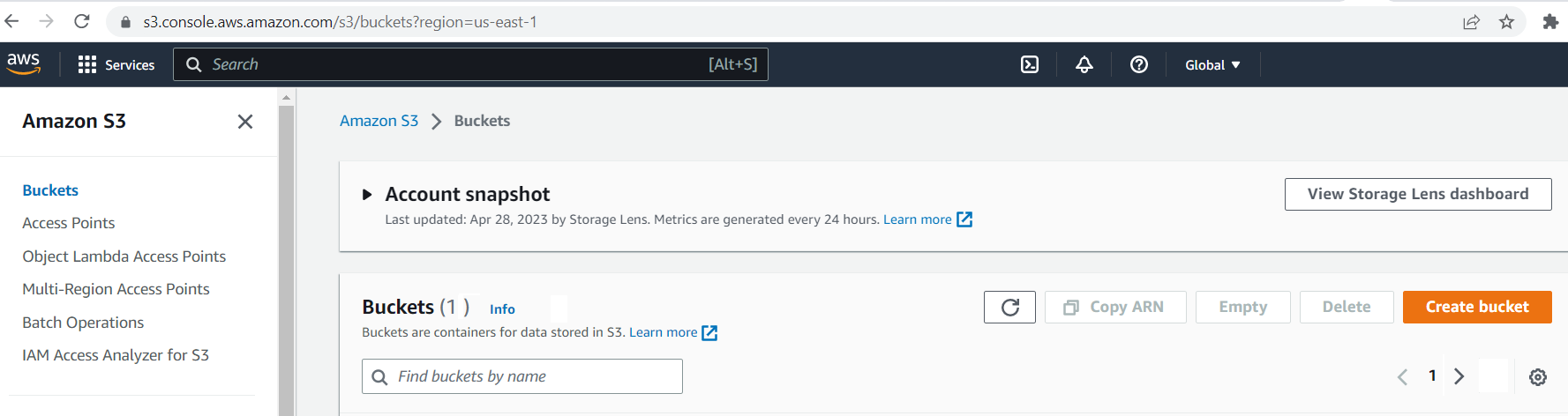

Let's begin by creating an S3 bucket in AWS Account A. First, log in to the AWS console and navigate to the S3 dashboard.

Enter the bucket name and region, and then click the "Create bucket" button.

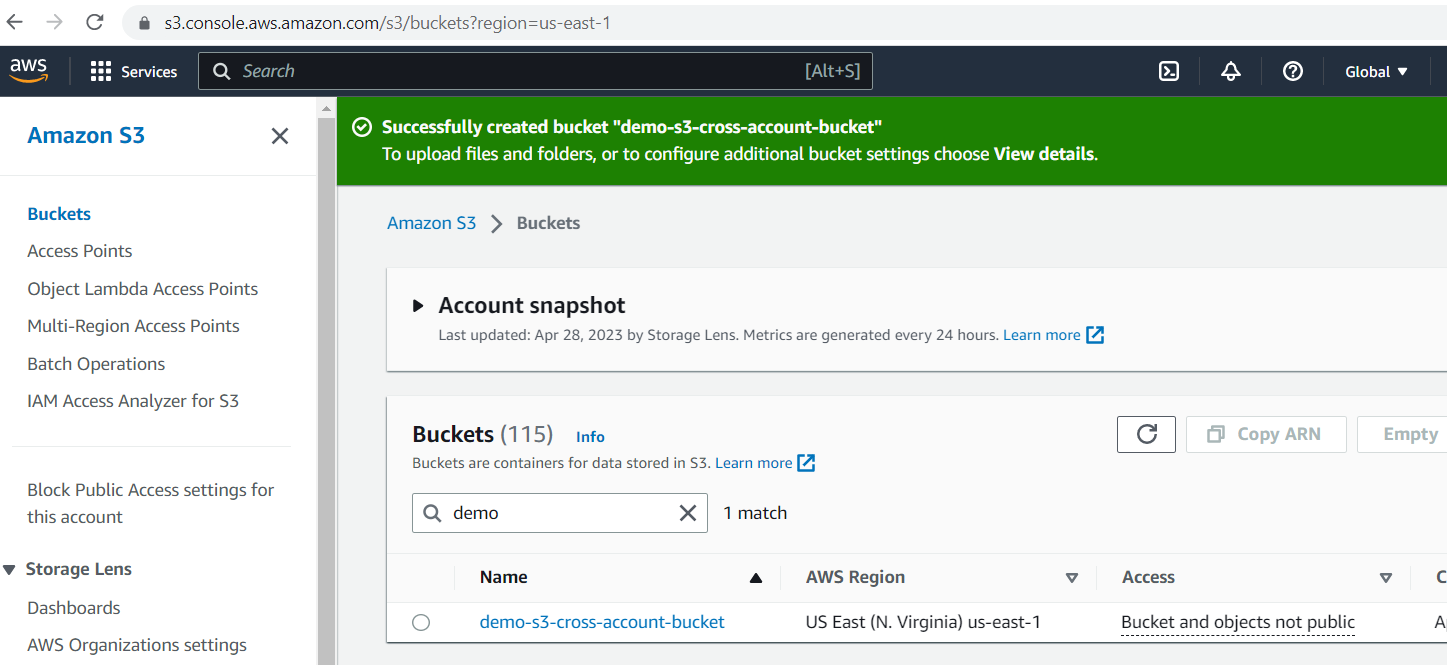

The bucket is created successfully.

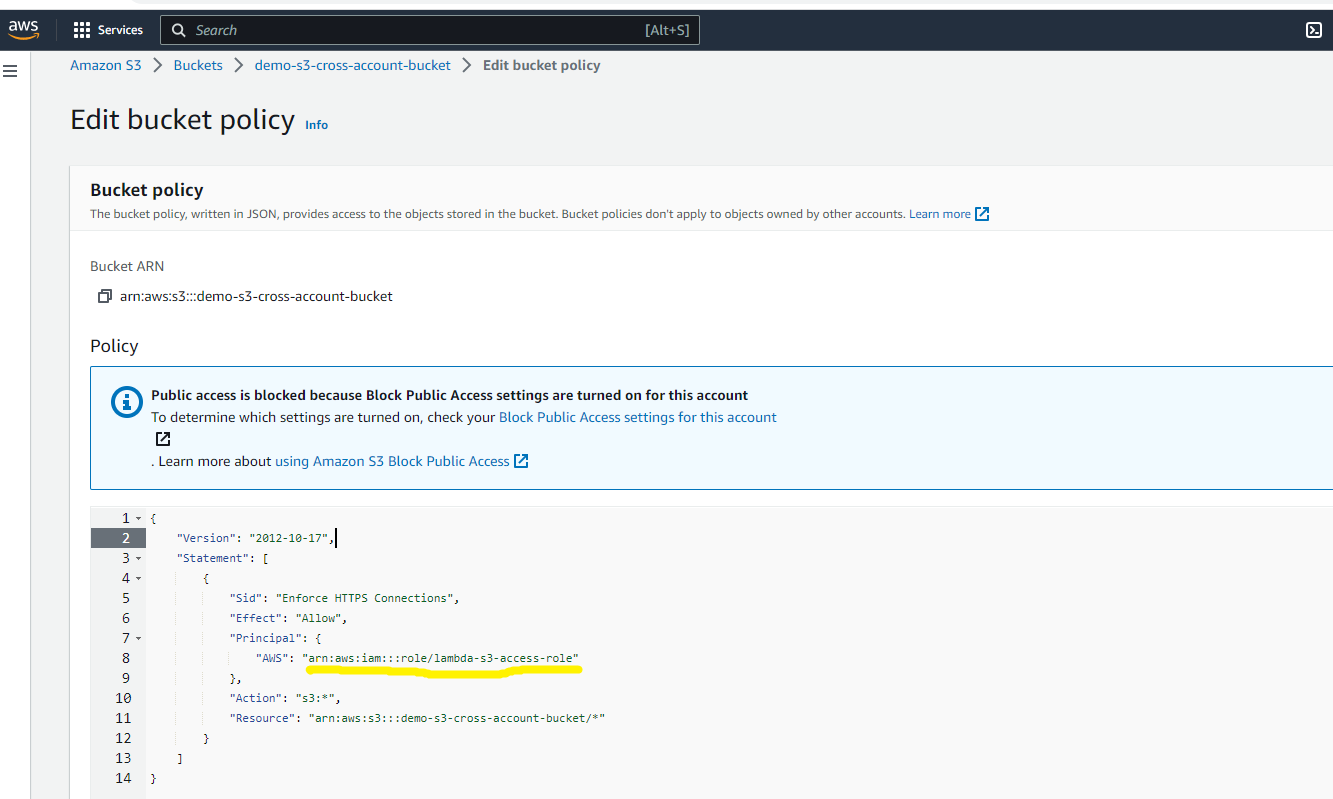
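The same bucket can also be created programmatically. The following is a minimal sketch using boto3, assuming the bucket name `demo-s3-cross-account-bucket` used in the policies later in this article and a hypothetical region `us-west-2`. The helper names `build_create_bucket_args` and `create_bucket` are illustrative and not part of any AWS API.

```python
def build_create_bucket_args(bucket_name, region):
    """Build keyword arguments for the S3 CreateBucket call."""
    args = {"Bucket": bucket_name}
    # us-east-1 is the default location and must not be sent as a
    # LocationConstraint; every other region has to be named explicitly.
    if region != "us-east-1":
        args["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return args


def create_bucket(s3_client, bucket_name, region):
    """Create the bucket using a client configured for the same region."""
    return s3_client.create_bucket(**build_create_bucket_args(bucket_name, region))


# Example usage with Account A credentials (the region is an assumption):
#   import boto3
#   s3 = boto3.client("s3", region_name="us-west-2")
#   create_bucket(s3, "demo-s3-cross-account-bucket", "us-west-2")
```

Passing the client in as a parameter keeps the helper easy to exercise without touching a real account.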

Setup S3 Bucket Policy

The bucket needs a bucket policy that allows the cross-account Lambda role (created later in Account B) to access the bucket and perform S3 operations on its objects.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "AllowCrossAccountLambdaRole",
          "Effect": "Allow",
          "Principal": {
            "AWS": "arn:aws:iam:::role/lambda-s3-access-role"
          },
          "Action": "s3:*",
          "Resource": "arn:aws:s3:::demo-s3-cross-account-bucket/*"
        }
      ]
    }

Navigate to the bucket's "Permissions" tab and edit the bucket policy. Replace the existing policy with the one above, filling in the account ID field of the role ARN (left blank above) with the 12-digit ID of Account B.

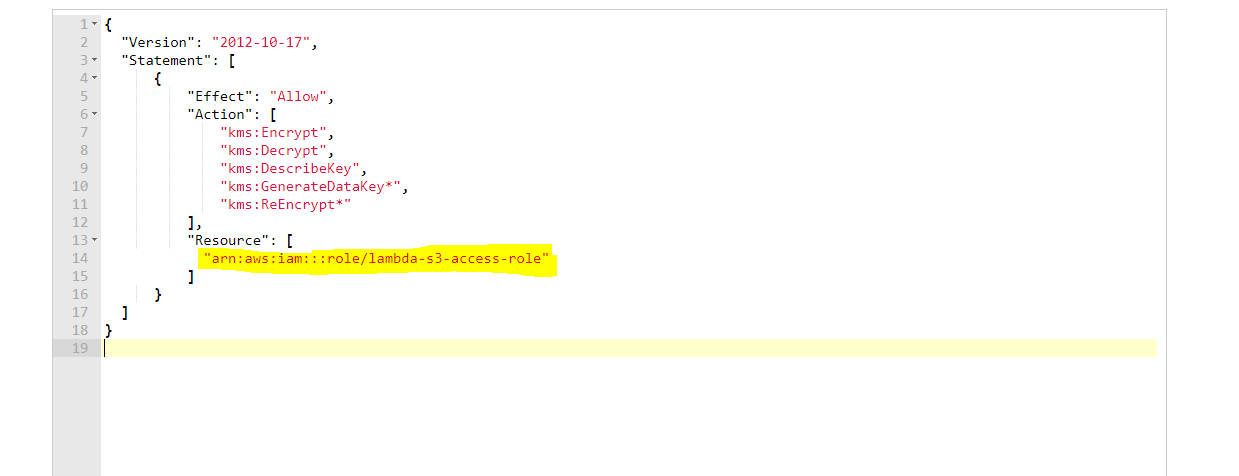
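For readers who prefer to script this step, the policy can be generated and applied with boto3. This is a sketch under stated assumptions: the function names are illustrative, the statement ID is a label chosen here, and the account ID `111122223333` in the usage comment is a hypothetical placeholder for the real ID of Account B.

```python
import json


def build_bucket_policy(bucket_name, role_arn):
    """Return a bucket policy granting the cross-account role object access."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowCrossAccountLambdaRole",
                "Effect": "Allow",
                "Principal": {"AWS": role_arn},
                "Action": "s3:*",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


def apply_bucket_policy(s3_client, bucket_name, role_arn):
    """Attach the generated policy to the bucket, replacing any existing one."""
    policy = build_bucket_policy(bucket_name, role_arn)
    # put_bucket_policy expects the policy document as a JSON string.
    s3_client.put_bucket_policy(Bucket=bucket_name, Policy=json.dumps(policy))
    return policy


# Example usage with Account A credentials (the account ID is hypothetical):
#   import boto3
#   apply_bucket_policy(
#       boto3.client("s3"),
#       "demo-s3-cross-account-bucket",
#       "arn:aws:iam::111122223333:role/lambda-s3-access-role",
#   )
```

Note that S3 typically rejects a bucket policy whose principal does not exist yet, so in practice the role in Account B may need to be created before this call is made.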

Setup KMS Key

This step enables encryption and decryption of the bucket's data with a KMS key. If the bucket is encrypted with a KMS key, the cross-account Lambda role must also be authorized on that key; otherwise, S3 requests fail with a 403 Access Denied error. To do this, modify the existing KMS key policy to permit the cross-account Lambda role to perform encryption and decryption operations.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "AWS": "arn:aws:iam:::role/lambda-s3-access-role"
          },
          "Action": [
            "kms:Encrypt",
            "kms:Decrypt",
            "kms:DescribeKey",
            "kms:GenerateDataKey*",
            "kms:ReEncrypt*"
          ],
          "Resource": "*"
        }
      ]
    }

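To authorize the cross-account Lambda role, go to the KMS dashboard and choose the key that's linked to the S3 bucket. Then select the "Key policy" tab and add the above statement to the existing key policy, filling in the account ID field of the role ARN with the ID of Account B. Keep the existing statements in place so that the key's administrators in Account A retain access.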
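The key policy update can also be scripted. The sketch below reads the current key policy, appends a statement for the Lambda role, and writes the result back. The function names and the statement ID are assumptions made for illustration; `get_key_policy` and `put_key_policy` are the boto3 KMS client calls, and `default` is the only policy name KMS accepts.

```python
import json

KMS_ACTIONS = [
    "kms:Encrypt",
    "kms:Decrypt",
    "kms:DescribeKey",
    "kms:GenerateDataKey*",
    "kms:ReEncrypt*",
]


def add_role_statement(policy_json, role_arn):
    """Return the key policy JSON with an extra statement for the role."""
    policy = json.loads(policy_json)
    statements = policy.get("Statement", [])
    # A policy may hold a single statement object instead of a list.
    if isinstance(statements, dict):
        statements = [statements]
    statements.append(
        {
            "Sid": "AllowCrossAccountLambdaRole",
            "Effect": "Allow",
            "Principal": {"AWS": role_arn},
            "Action": KMS_ACTIONS,
            # In a key policy, "*" refers to the key the policy is attached to.
            "Resource": "*",
        }
    )
    policy["Statement"] = statements
    return json.dumps(policy)


def grant_key_access(kms_client, key_id, role_arn):
    """Append the role statement to the key's existing policy."""
    current = kms_client.get_key_policy(KeyId=key_id, PolicyName="default")["Policy"]
    updated = add_role_statement(current, role_arn)
    kms_client.put_key_policy(KeyId=key_id, PolicyName="default", Policy=updated)
    return updated
```

Appending to the existing policy rather than replacing it keeps the statements that let Account A administer the key.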

Setup in Account B

In AWS Account B, create the following resources step by step.

- Create IAM Policy and Role

- Create Lambda function

- Test Lambda and Verify

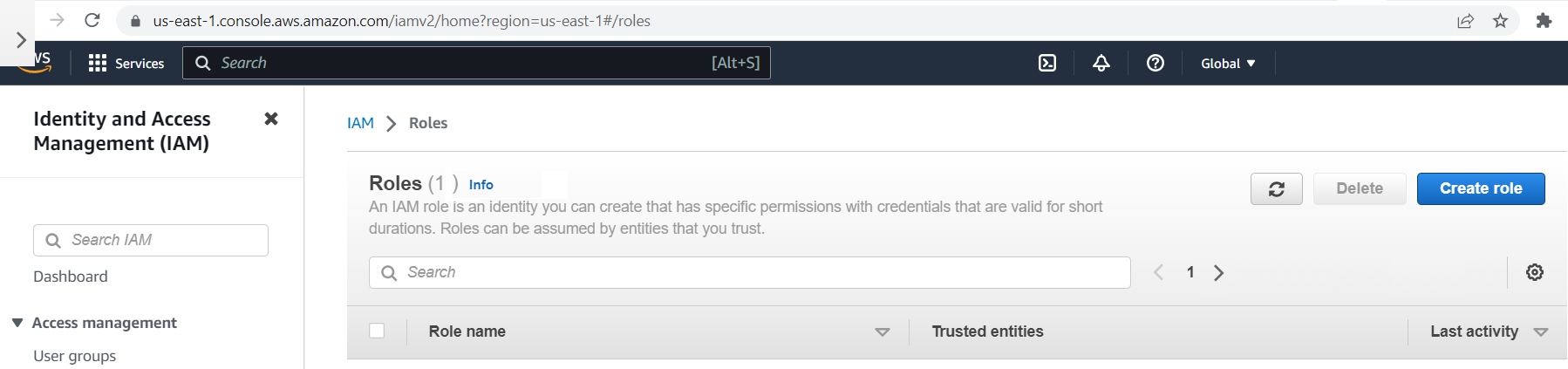

Create IAM Policy and Role

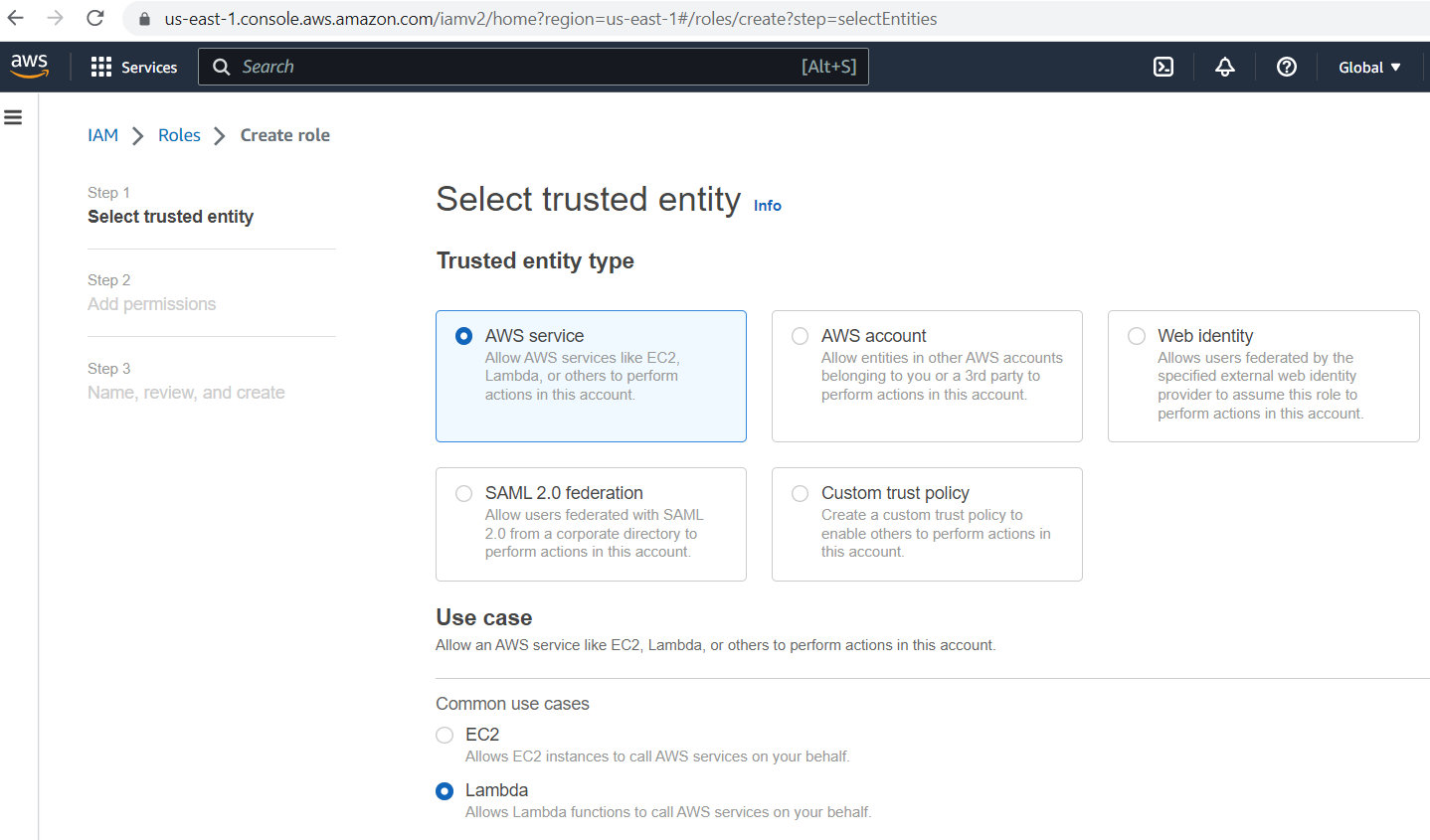

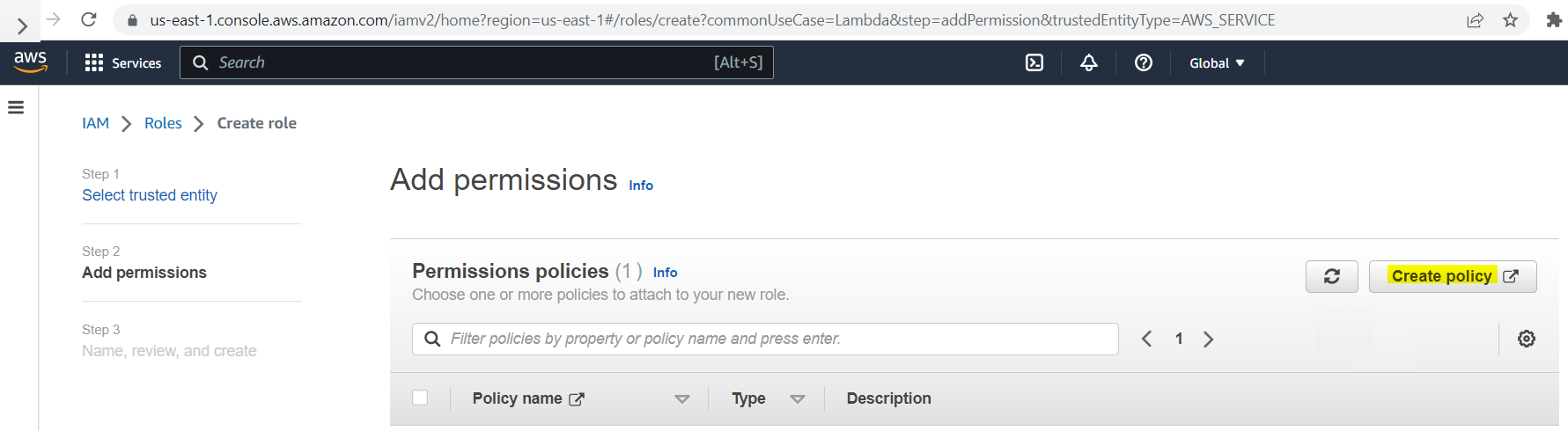

Let's move on and begin creating an IAM role in AWS Account B. Log in to the AWS Account B console and navigate to the IAM dashboard.

Choose "AWS service" as the trusted identity type and "Lambda" as the use case, then click on the "Next" button.

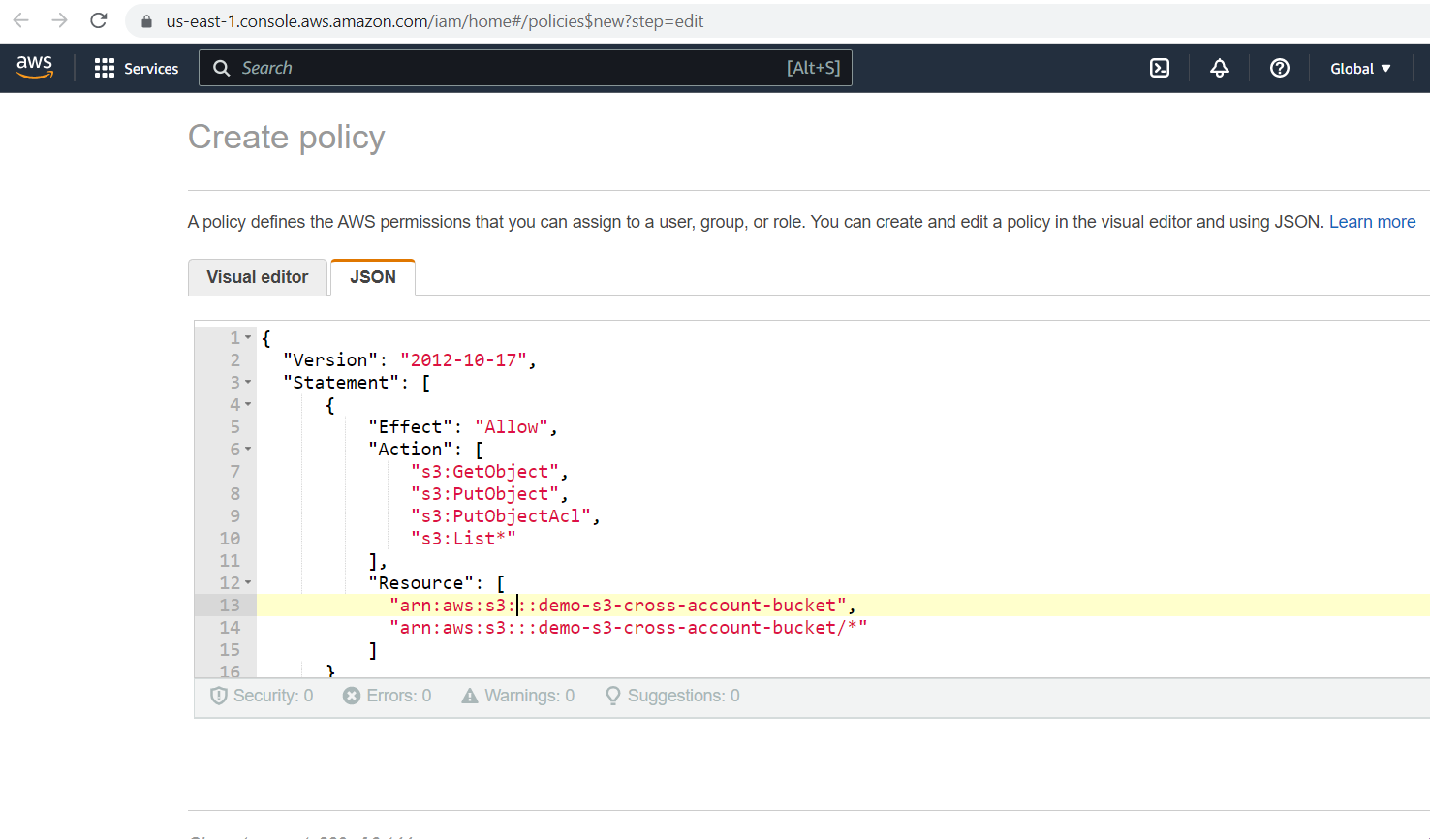

Click on the "Create policy" button to add the role policy.

This role policy enables Lambda to access a cross-account S3 bucket, which requires specifying the S3 bucket name and KMS key.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "s3:GetObject",
            "s3:PutObject",
            "s3:PutObjectAcl",
            "s3:List*"
          ],
          "Resource": [
            "arn:aws:s3:::demo-s3-cross-account-bucket",
            "arn:aws:s3:::demo-s3-cross-account-bucket/*"
          ]
        },
        {
          "Effect": "Allow",
          "Action": [
            "kms:Encrypt",
            "kms:Decrypt",
            "kms:DescribeKey",
            "kms:GenerateDataKey*"
          ],
          "Resource": [
            "arn:aws:kms:::key/demo-s3-cross-account-kms-key"
          ]
        }
      ]
    }

Add the above policy on the JSON tab and then click on the "Next" button.

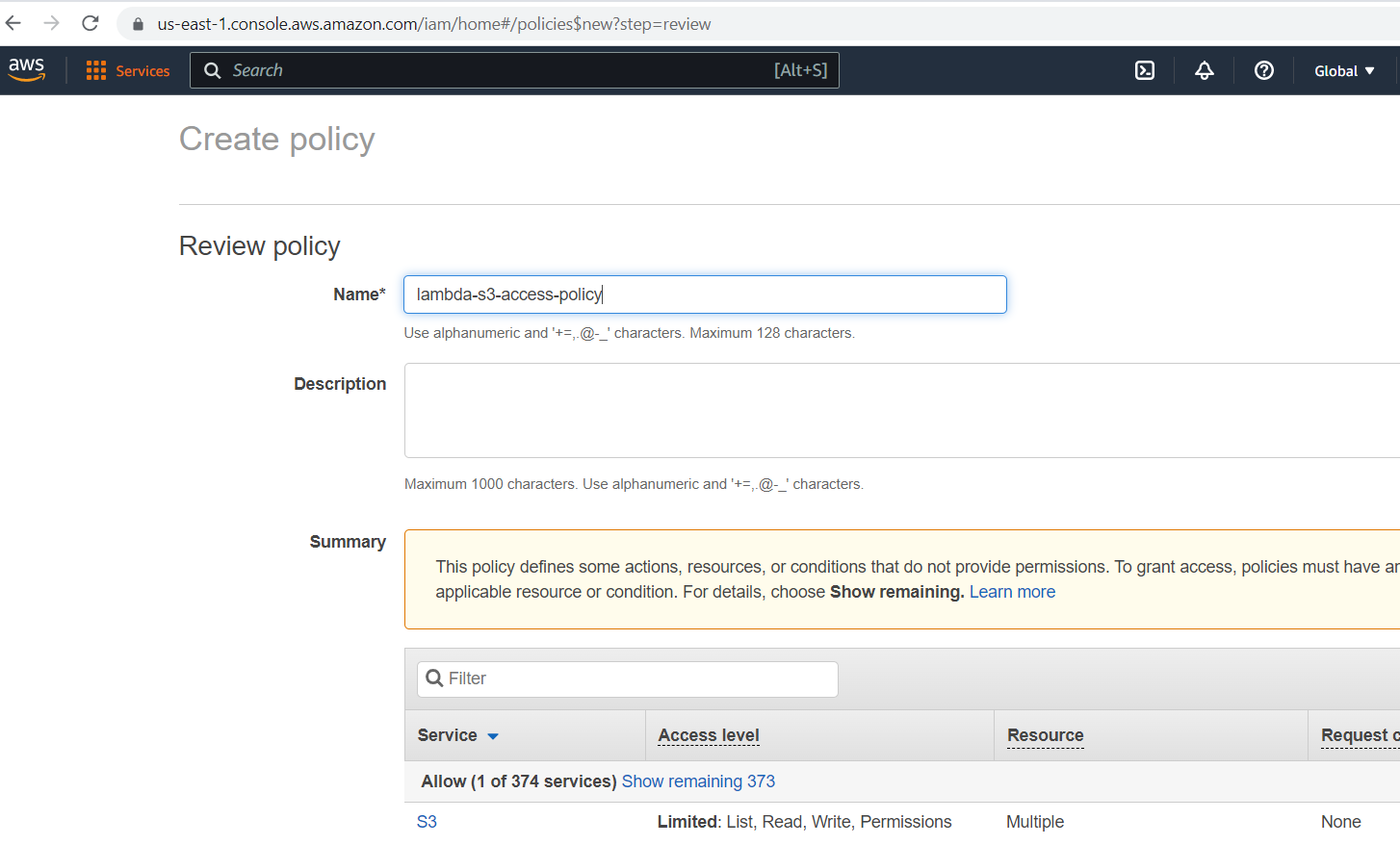

Please provide a policy name and click the "Create policy" button.

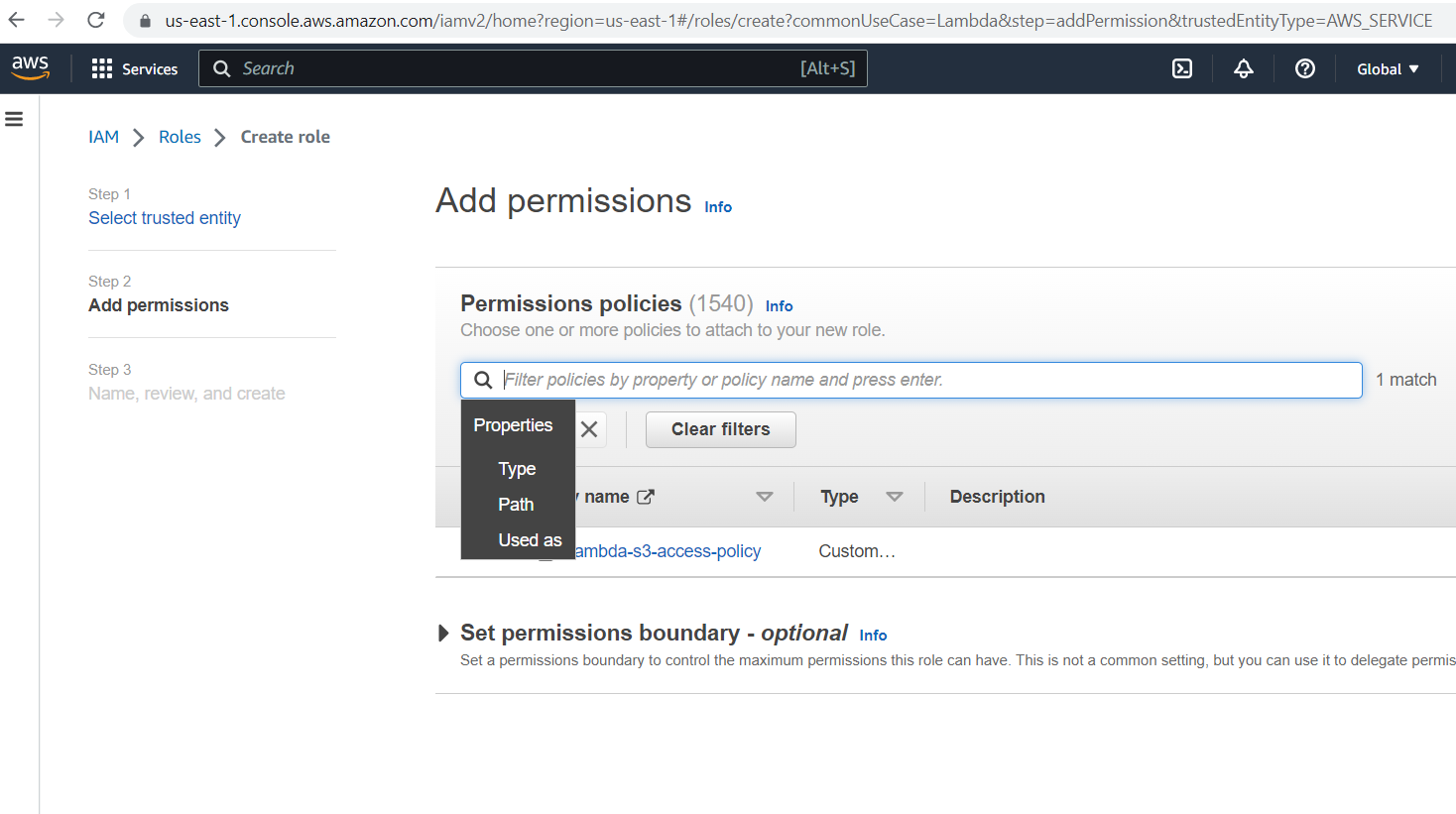

Once the policy is created successfully, attach it to the role. Select the policy and click the "Next" button.

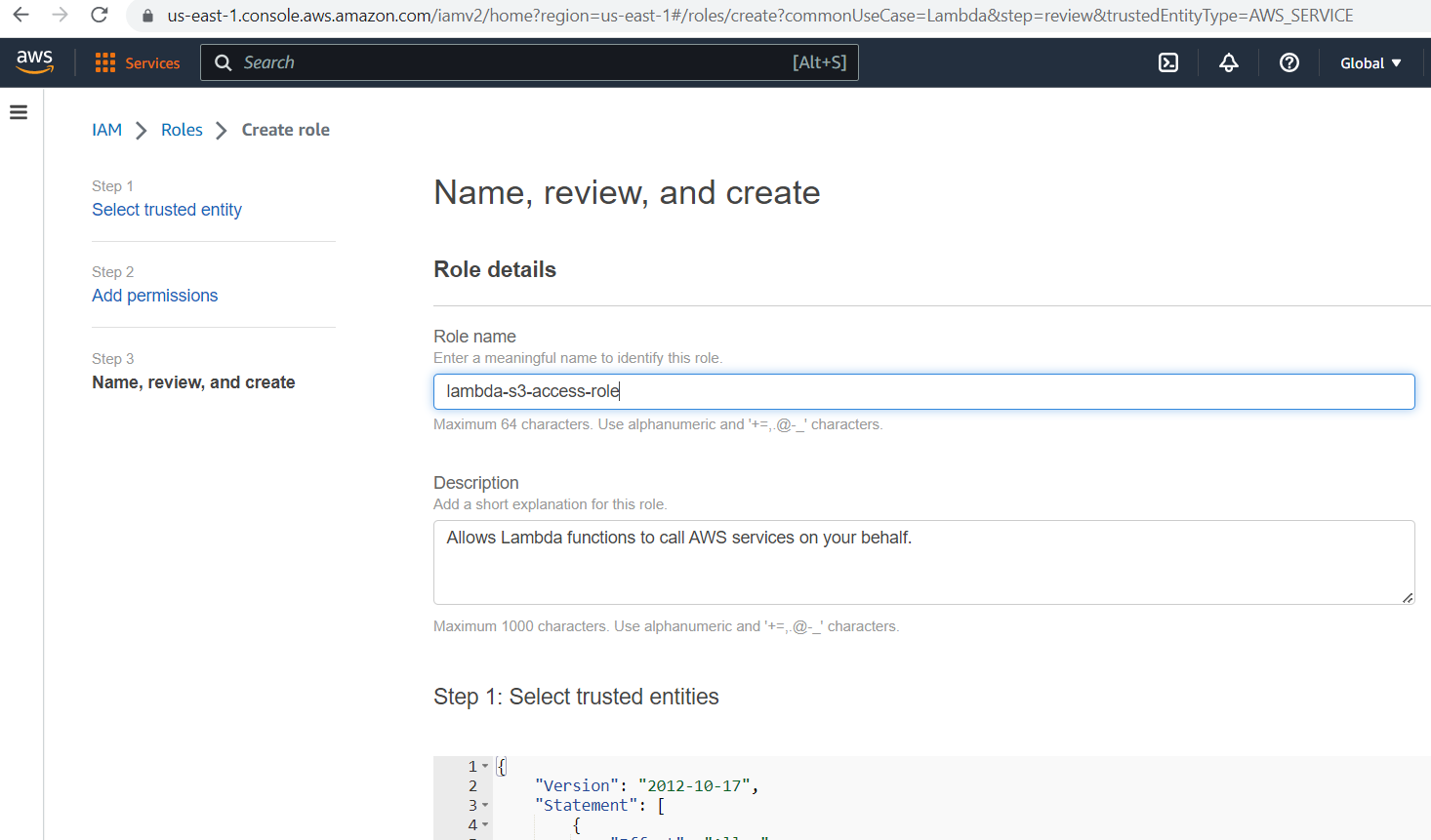

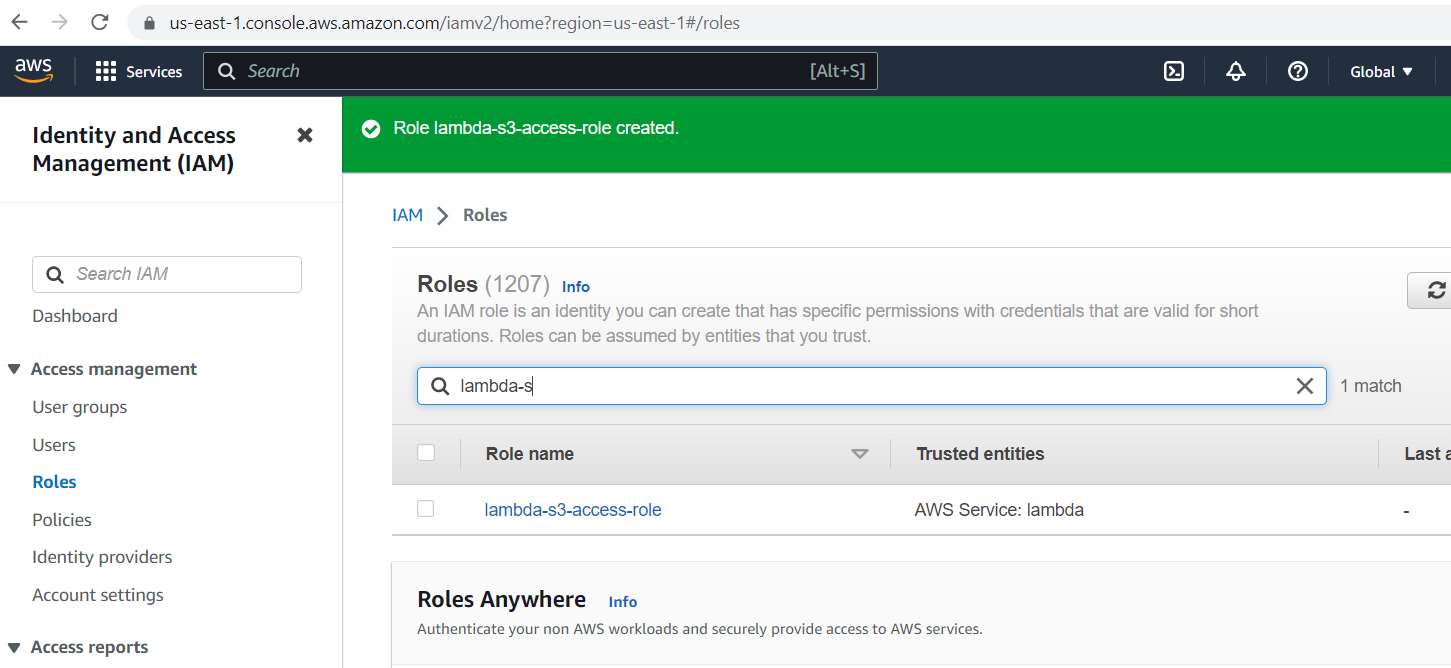

Provide the role name and click the "Create role" button.

The role is now created. We will use it as the Lambda function's execution role in the next step.
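The console steps above can be approximated with boto3. This is a minimal sketch, assuming the role name `lambda-s3-access-role` referenced in the Account A policies and a hypothetical policy name `lambda-s3-cross-account-policy`; the helper names are illustrative. The trust policy corresponds to choosing "AWS service" and "Lambda" in the console.

```python
import json


def build_lambda_trust_policy():
    """Trust policy that lets the Lambda service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "lambda.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def create_lambda_role(iam_client, role_name, policy_name, permissions_policy):
    """Create the role, create the managed policy, and attach one to the other."""
    role = iam_client.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(build_lambda_trust_policy()),
    )
    policy = iam_client.create_policy(
        PolicyName=policy_name,
        PolicyDocument=json.dumps(permissions_policy),
    )
    iam_client.attach_role_policy(
        RoleName=role_name,
        PolicyArn=policy["Policy"]["Arn"],
    )
    return role["Role"]["Arn"]


# Example usage with Account B credentials; permissions_policy is the role
# policy JSON shown earlier, loaded as a dict (the policy name is hypothetical):
#   import boto3
#   arn = create_lambda_role(
#       boto3.client("iam"),
#       "lambda-s3-access-role",
#       "lambda-s3-cross-account-policy",
#       permissions_policy,
#   )
```

The returned role ARN is the value that belongs in the bucket policy and key policy in Account A.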

Create Lambda Function

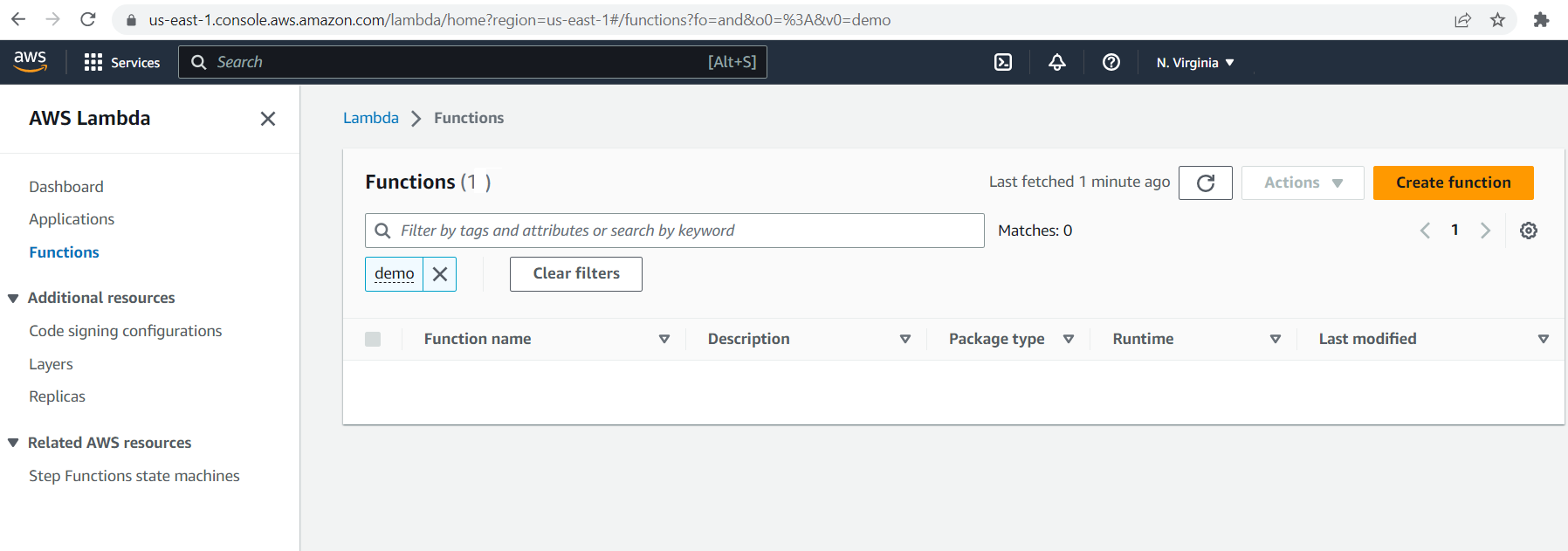

Now, we'll start creating a Lambda function in AWS Account B. Please log in to the AWS Account B console and go to the Lambda dashboard.

import logging

import boto3

from botocore.exceptions import ClientError

import os

def lambda_handler(event, context):

upload_file(event['file_name'], event['bucket'], event['object_name']):

def upload_file(file_name, bucket, object_name=None):

"""Upload a file to an S3 bucket

:param file_name: File to upload

:param bucket: Bucket to upload to

:param object_name: S3 object name. If not specified then file_name is used

:return: True if file was uploaded, else False

"""

# If S3 object_name was not specified, use file_name

if object_name is None:

object_name = os.path.basename(file_name)

# Upload the file

s3_client = boto3.client('s3')

try:

response = s3_client.upload_file(file_name, bucket, object_name)

except ClientError as e:

logging.error(e)

return False

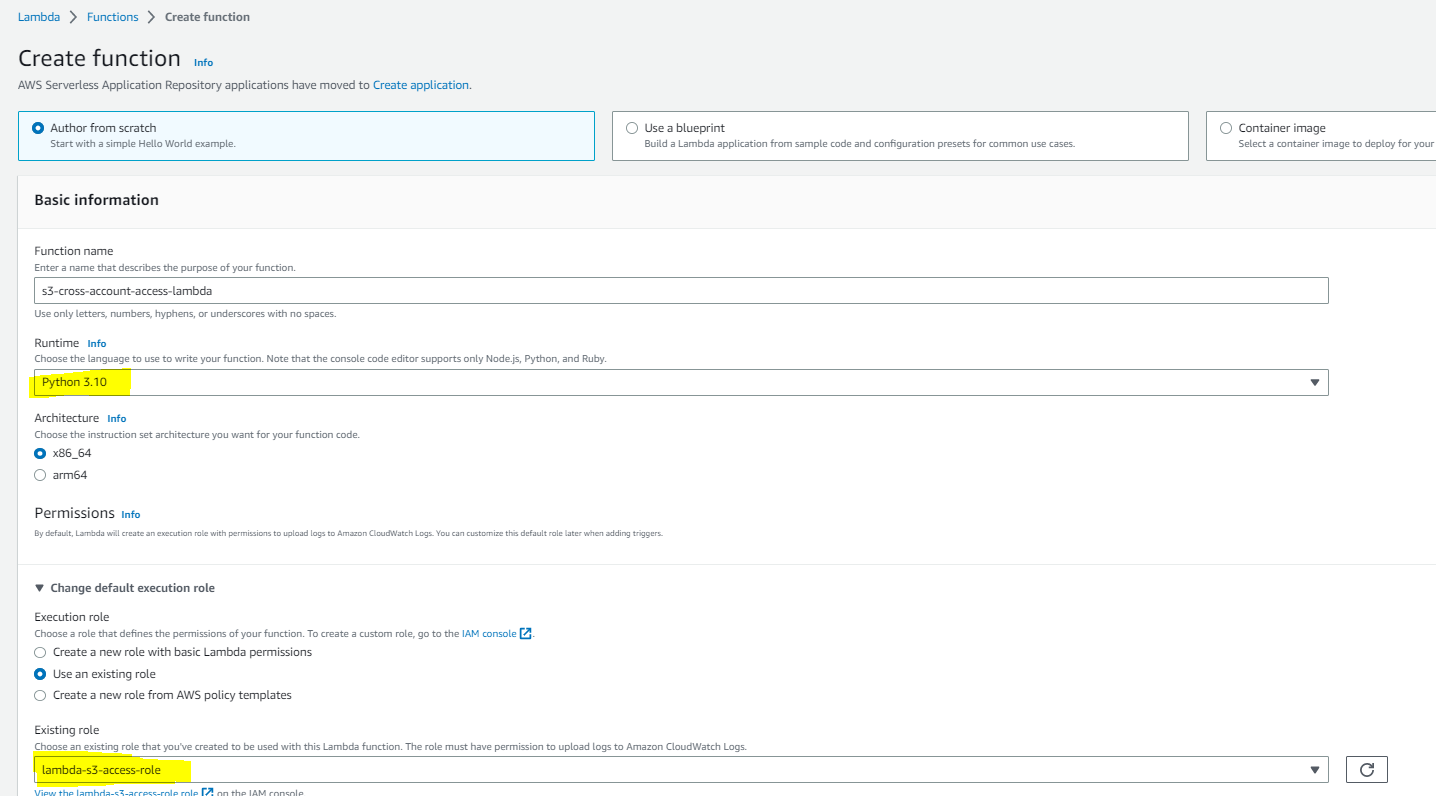

return TrueCreate a Lambda function using Python 3.10 runtime, and select the previously created role. Then, add the Python code mentioned above and create the Lambda function.

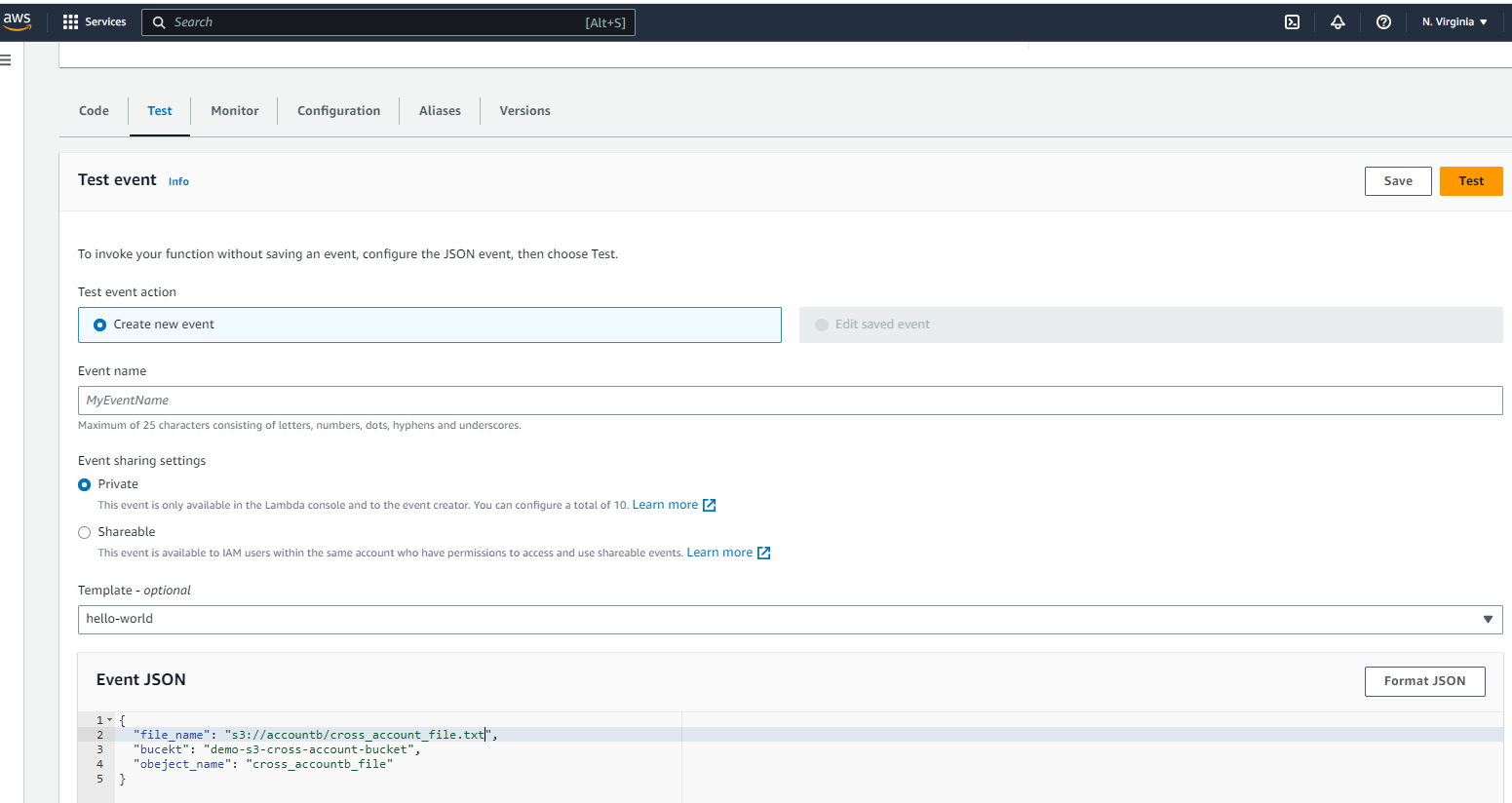
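Depending on how the bucket in Account A is configured, a cross-account upload may also need to name the KMS key explicitly and hand object ownership to the bucket owner. The following sketch shows how that could be done through the `ExtraArgs` parameter of boto3's `upload_file`. The helper names and the idea of passing the key ARN as a parameter are assumptions for illustration; if the bucket already applies default encryption with the key, the encryption arguments can usually be omitted.

```python
def build_extra_args(kms_key_arn=None, grant_owner_control=True):
    """Build the ExtraArgs dict for a cross-account S3 upload."""
    extra_args = {}
    if kms_key_arn:
        # Request SSE-KMS with the key owned by Account A.
        extra_args["ServerSideEncryption"] = "aws:kms"
        extra_args["SSEKMSKeyId"] = kms_key_arn
    if grant_owner_control:
        # Give the bucket owner (Account A) full control of the new object.
        extra_args["ACL"] = "bucket-owner-full-control"
    return extra_args


def upload_with_options(s3_client, file_name, bucket, object_name, kms_key_arn=None):
    """Upload a local file, passing encryption and ACL options to S3."""
    s3_client.upload_file(
        file_name,
        bucket,
        object_name,
        ExtraArgs=build_extra_args(kms_key_arn),
    )
```

The `s3:PutObjectAcl` permission in the role policy is what allows the `ACL` argument to be set on upload.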

Test Lambda Function and Validate

Finally, let's test the setup and confirm that it works end to end. To execute the Lambda function, provide the required input (`file_name`, `bucket`, and `object_name`) in the event JSON, as shown in the illustration below, and click the "Test" button.

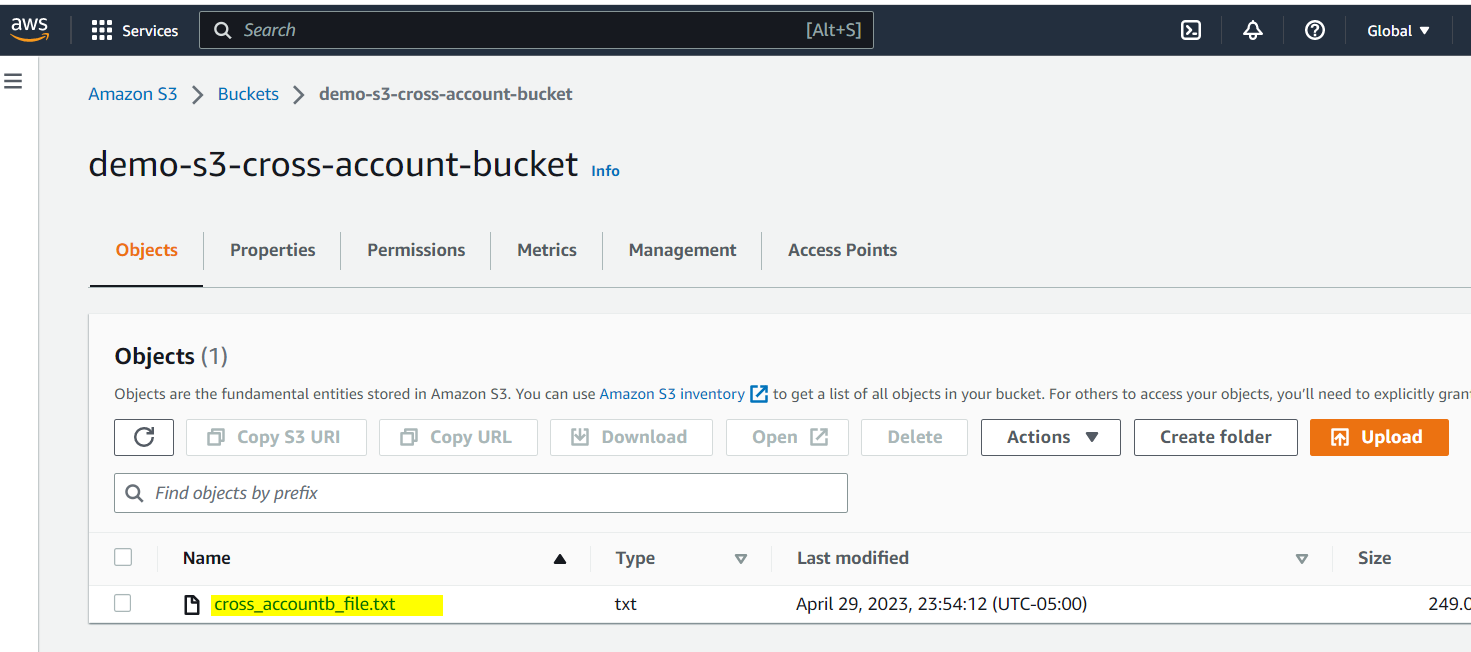
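The test event carries the keys that the handler reads: `file_name`, `bucket`, and `object_name`. As a sketch, the event can be built and the function invoked from a script as well. The function name `demo-s3-upload` and the file path `/tmp/sample.txt` in the usage comment are hypothetical, and the file must already exist inside the Lambda execution environment for the upload to succeed.

```python
import json
import os


def build_test_event(file_name, bucket, object_name=None):
    """Build the event payload expected by the upload handler."""
    return {
        "file_name": file_name,
        "bucket": bucket,
        # Default to the file's base name, mirroring the handler's behavior.
        "object_name": object_name or os.path.basename(file_name),
    }


def invoke_upload(lambda_client, function_name, event):
    """Invoke the function synchronously and return its decoded result."""
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",
        Payload=json.dumps(event).encode("utf-8"),
    )
    return json.loads(response["Payload"].read())


# Example usage with Account B credentials (names are hypothetical):
#   import boto3
#   event = build_test_event("/tmp/sample.txt", "demo-s3-cross-account-bucket")
#   print(invoke_upload(boto3.client("lambda"), "demo-s3-upload", event))
```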

After successful execution of the Lambda function in AWS account B, verify the S3 bucket in AWS account A. You will see the uploaded file as illustrated below.
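The check in Account A can also be scripted. The sketch below lists the bucket with the object key as a prefix and looks for an exact match; the helper name `object_exists` and the key in the usage comment are illustrative.

```python
def object_exists(s3_client, bucket, key):
    """Return True if an object with exactly this key is in the bucket."""
    response = s3_client.list_objects_v2(Bucket=bucket, Prefix=key)
    # Keys are returned in lexicographic order, so an exact match for the
    # prefix, if present, is on the first page of results.
    return any(obj["Key"] == key for obj in response.get("Contents", []))


# Example usage with Account A credentials (the key is hypothetical):
#   import boto3
#   print(object_exists(boto3.client("s3"), "demo-s3-cross-account-bucket", "sample.txt"))
```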

Conclusion

This article walked through setting up a Lambda function to access an S3 bucket in a different AWS account. In Account A, we created the bucket, granted the Lambda role access in the bucket policy, and authorized the same role in the KMS key policy. In Account B, we created an IAM policy and role, attached the role to a Lambda function, and verified that the function could upload a file to the bucket in Account A. With these pieces in place, AWS developers can configure Lambda functions for cross-account S3 access in their own environments.
