Azure SQL with PCF Spring Boot Applications (Part 2 - AlwaysEncrypted)

In this follow-up to a previous article, we look at how to secure your previously constructed Spring Boot application with Always Encrypted.

Join the DZone community and get the full member experience.

Join For FreeThis is part 2 of the series demonstrating advanced Azure SQL (PAAS) features and how to use them from Java Spring Boot applications running on PCF (Pivotal CloudFoundry) on Azure. The first article showed how to use a Spring Boot application with Azure SQL Database auto-failover groups to provide resilience to regional outages and walked thru Azure Service Broker providing seamless integration with Azure services to applications running Pivotal CloudFoundry.

This article will demonstrate how to protect sensitive data (such as password, credit cards, and social security numbers) in Azure SQL database. Security and protection of the data becomes even more important to enterprises looking to migrate databases to the cloud. The Always Encrypted feature of Azure SQL enables customers to be confident that even high privileged but unauthorized users cannot access their encrypted data in the cloud. It safeguards data not only “at-rest” and ”in transit”, but also "in use” in any data lifecycle event and does it transparently to applications. Moreover, encryption keys are not stored within the database, they stay with the client (in the demo below — stored in Key Vault) and that’s why it keeps data protected even from cloud operators.

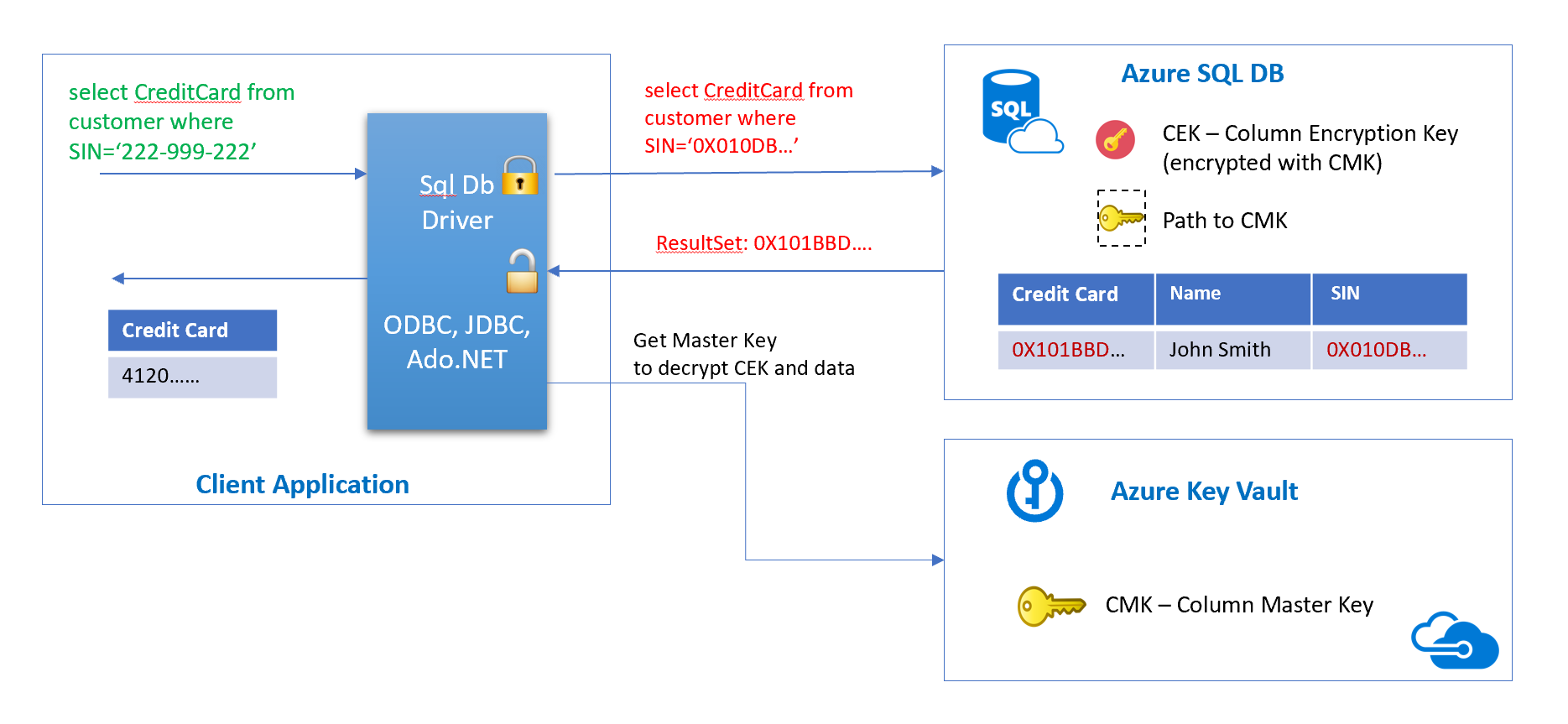

How Always Encrypted Works

The Always Encrypted feature is implemented on the Driver level and this way it is transparent to application, with almost no changes required in the code. The Driver encrypts/decrypts sensitive data inside client applications and never reveals the encryption keys to the Database Engine. Data in the SQL DB is encrypted using CEK (Column master key) which is stored in encrypted form inside db. CEK key is encrypted using Master Key which is stored outside the DB. We will demonstrate a case where master key is stored in Azure Key Vault.

Client application performs queries using the driver, the driver is responsible for getting the master key, the column encryption key, encrypting the data, and sending it to the database. A similar flow going back from the database as shown in the picture above. For more information on Always Encrypted and its setup refer to the following article: Always Encrypted (Database Engine).

Encrypting the Data

Let’s encrypt data in the database used in the Part 1 article Spring Boot application; we could do that using SSMS (SQL Server Management Studio) or using PowerShell. An example script creating a master key in the vault, a column encryption key, and encrypting the column could be found on GitHub.

To generate a column master key in the Azure Key Vault:

- Create Azure Key Vault using Portal or CLI.

- Grant the user that would encrypt the columns Get, List, Create, WrapKey, UnwrapKey, Encrypt, Decrypt, Verify, and Sign permissions to the key vault.

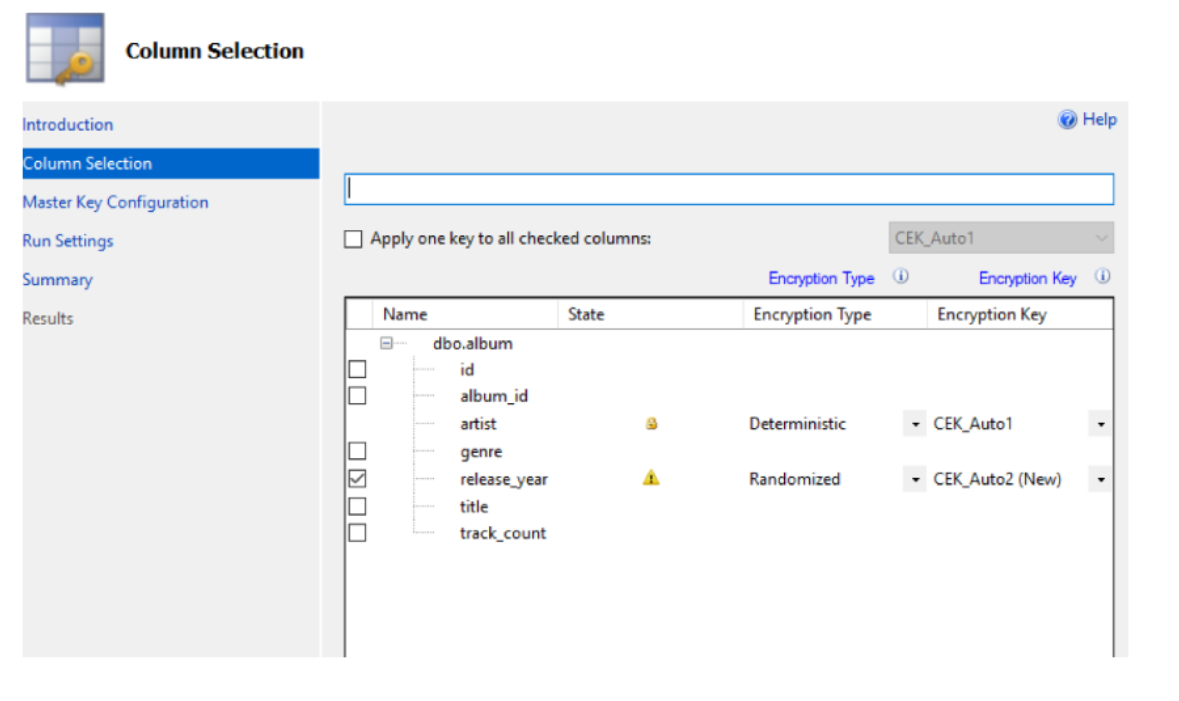

Open the database in the SSMS, right-click the table and invoke the “Encrypt Columns” wizard, go through steps configuring columns to be encrypted and keys:

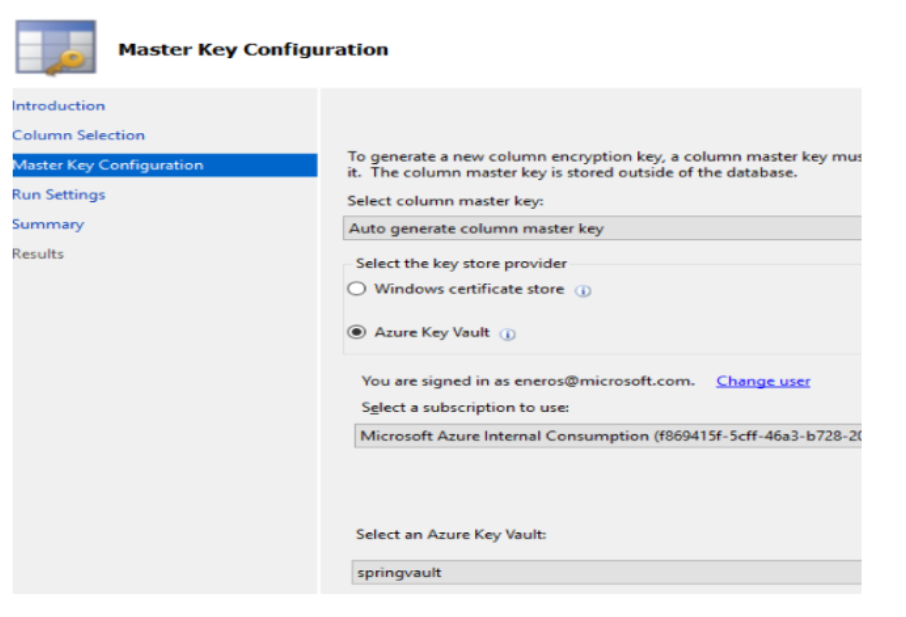

Use the Azure key vault as storage for the Master Key:

Use the Azure key vault as storage for the Master Key:

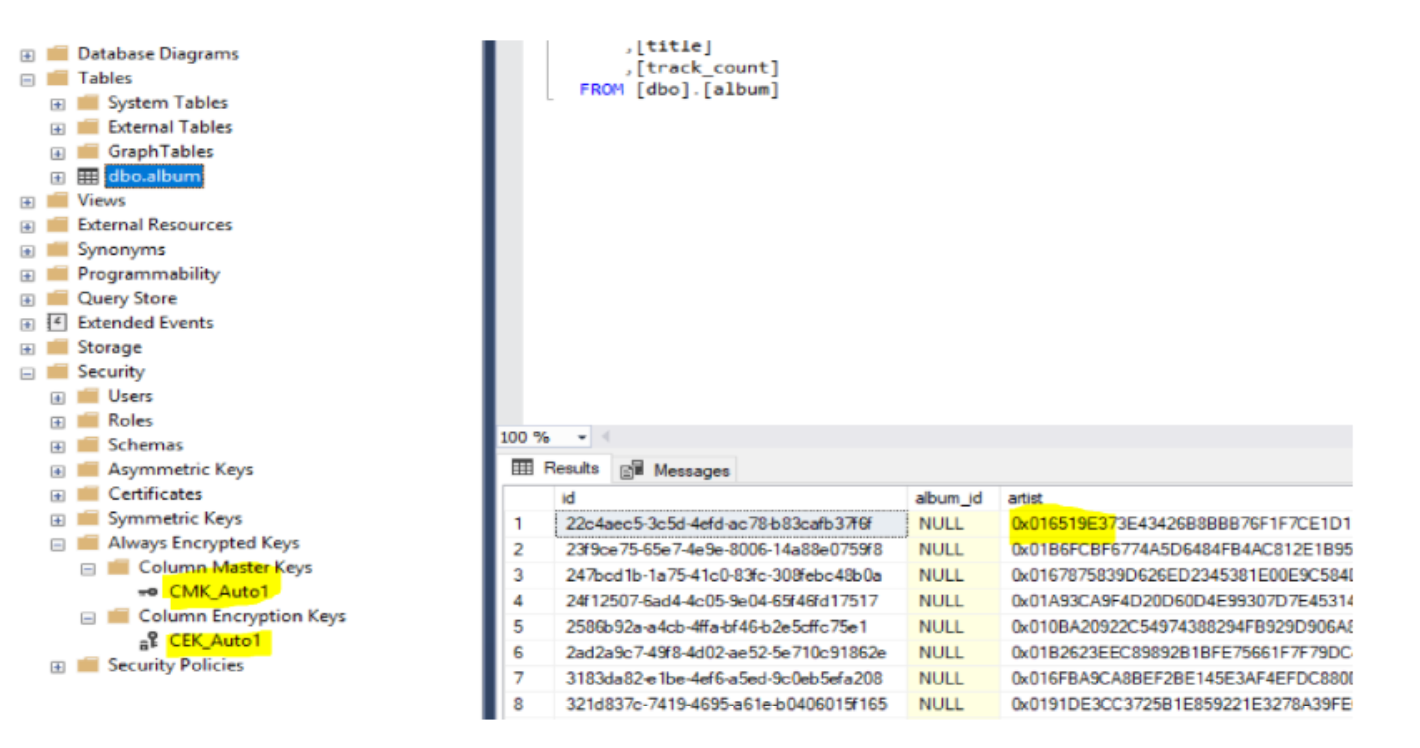

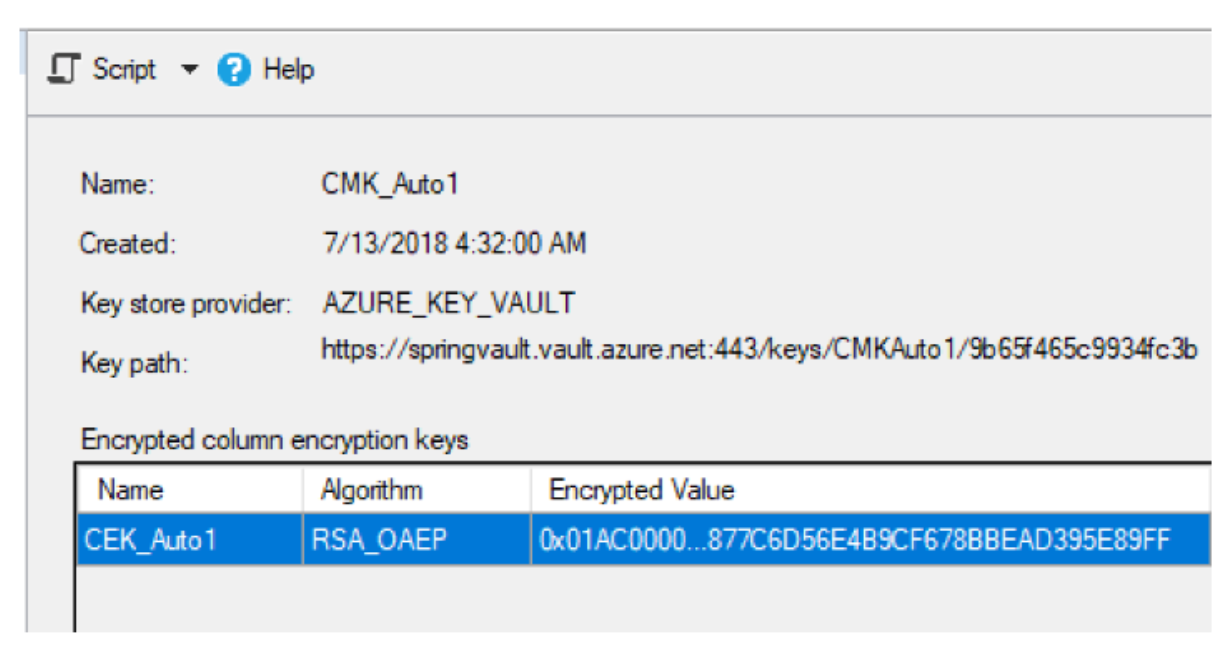

Once the changes are applied and encryption finishes, you could see the CEK key created in the database and only metadata pointing to the Master key:

The Master key is not stored inside the database, only the information on how to access it, used by driver:

The Master key is not stored inside the database, only the information on how to access it, used by driver:

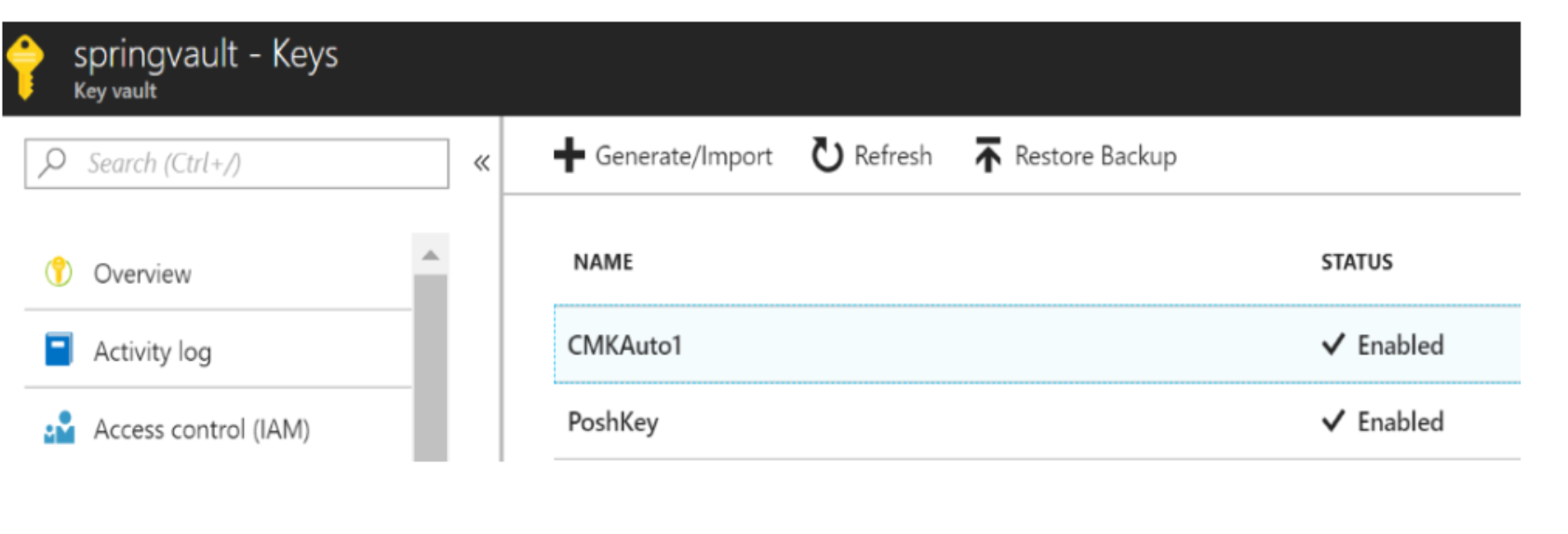

You could examine the Master key by navigating to the vault in the Azure portal:

Spring Boot Application Changes

At this stage, if we use our part 1 sample SpringBoot application as it is, will get the scrambled encrypted data displayed on the page. We need to enable JDBC driver settings to be able to encrypt/decrypt the data. Details on using Always Encrypted with JDBC driver are described in Microsoft docs:

The summary of the steps required by Java application are:

- Create the Service Principal that will be used by JDBC driver to access the vault and grant it following access permissions to keyvault:

get, list, wrapKey, unwrapKey, sign, verify

Powershell example: Making Azure KeyVault keys available to users

- use jdbcUrl with

columnEncryptionSetting=Enabledto connect to the DataSource e.gjdbc:sqlserver://;user=;password=;columnEncryptionSetting=Enabled; - provide the JDBC driver with information and service principal to connect to Azure KeyVault to retrieve master key:

SQLServerColumnEncryptionAzureKeyVaultProvider akvProvider =

new SQLServerColumnEncryptionAzureKeyVaultProvider(clientId, clientSecret);

Map<String, SQLServerColumnEncryptionKeyStoreProvider> keyStoreMap = new HashMap<String, SQLServerColumnEncryptionKeyStoreProvider>();

keyStoreMap.put(akvProvider.getName(), akvProvider);

SQLServerConnection.registerColumnEncryptionKeyStoreProviders(keyStoreMap); Where clientId, clientSecret are applicationID and password of Service Principal.

- The account used by the driver has to have permissions "View Any Column Master Key Definition, View Any Column Encryption Key Definition" to be able to get details about master and column key.

PCF Service Broker - User Permissions for DB User

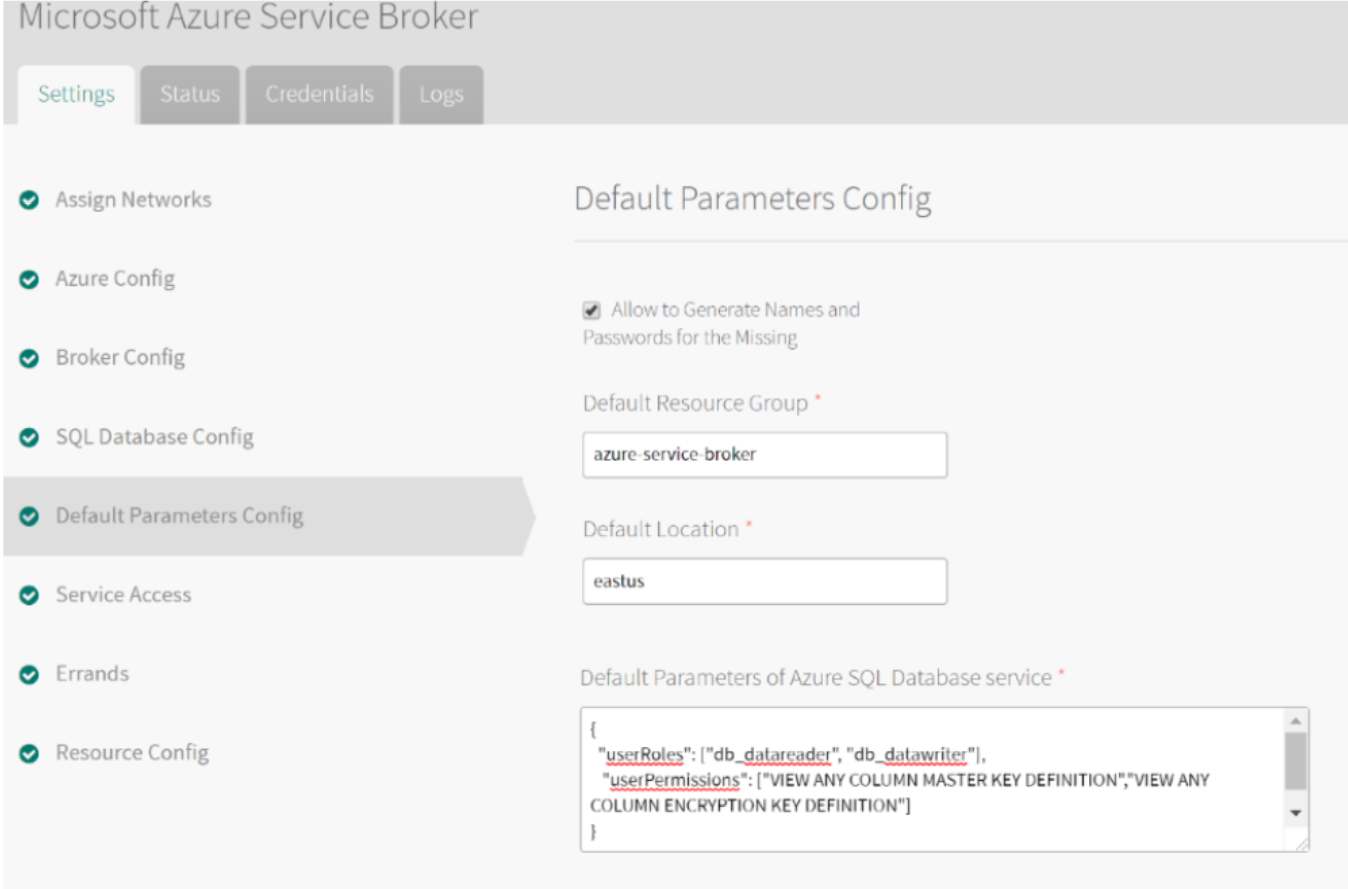

As shown in the article in Part 1 of the series, we use Meta Azure Service Broker to provision SQL DB, an auto-failover group for high-availability and the DB user. Service Broker provisions new users to access the database when performing the bind operation. It’s good practice to give the application users the least possible permissions.

MASB service broker starting from version 1.7 allows to specify sql user roles and permissions that would be assigned during provisioning. Specify default settings in MASB tile in Operations Manager as shown below.

Update Service Broker Tile “Default Parameters config” section for Azure SQL DB to include permissions required for accessing Encryption Keys:

{

"userRoles": ["db_datareader", "db_datawriter"],

"userPermissions": ["VIEW ANY COLUMN MASTER KEY DEFINITION","VIEW ANY COLUMN ENCRYPTION KEY DEFINITION"]

}

Apply the changes, and wait until the installation completes.

Updating JDBC URL for PCF Spring Boot Application

Updating jdbcURL in SpringBoot application to include a new flag could be done very easily in application.properties:

spring:

datasource:

url: "jdbc:sqlserver://<server>.database.windows.net:1433;database=<db>;ColumnEncryptionSetting=Enabled"

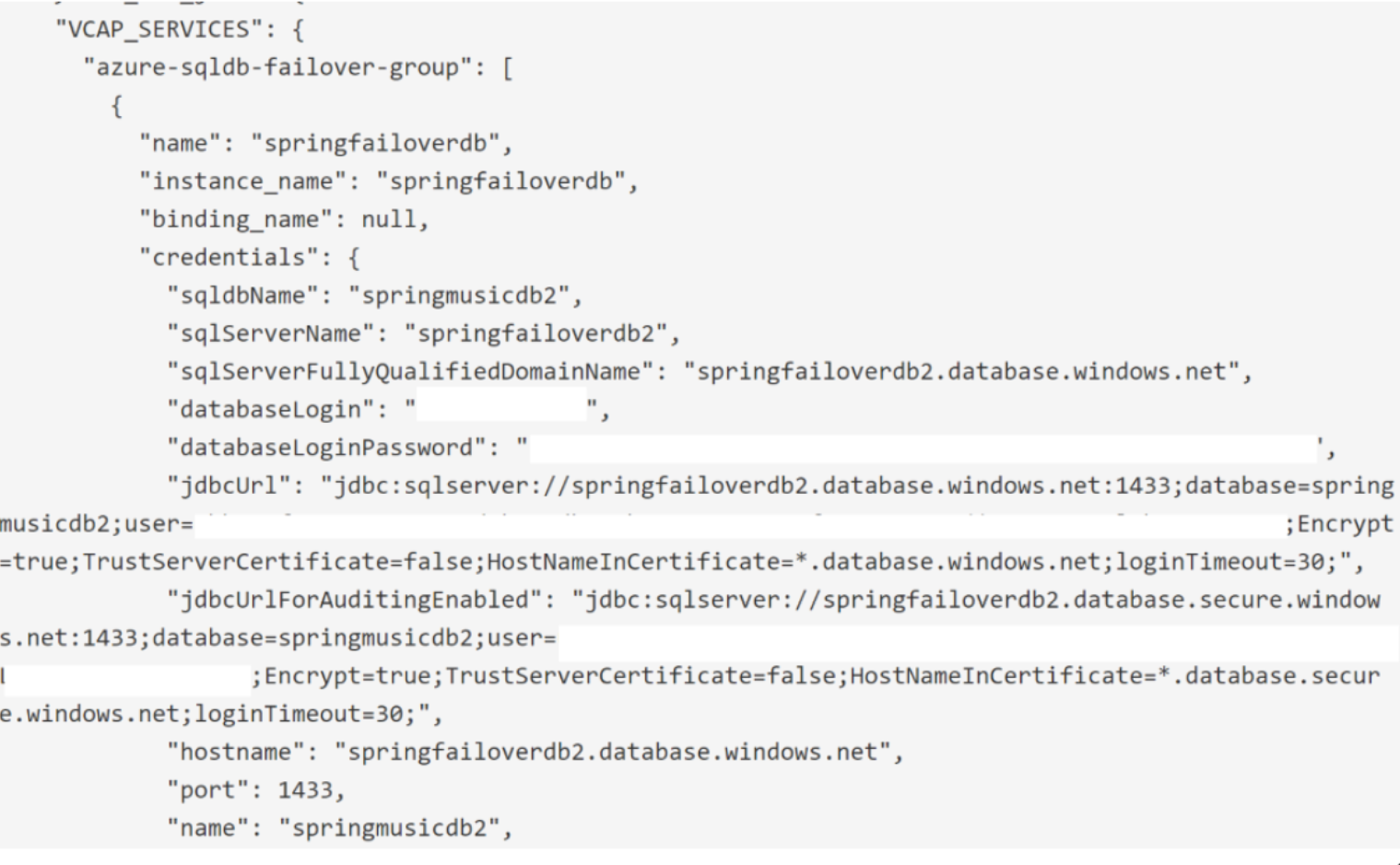

driver-class-name: com.microsoft.sqlserver.jdbc.SQLServerDriverBut the problem arises when the application has to be deployed on PCF, and since the sql-db service is bound to the application, information on how to connect to database including jdbcUrl is injected by service connectors into VCAP_SERVICES and passed to the application as shown below:

Starting with SpringBoot 2, it comes by default with HikariCP as a connection pool mechanism. Datasource automatically injected by spring autoconfiguration and spring cloud connectors will be of HikariDataSource type. Refer to the details here.

Luckily, there is extension mechanism in HikariCP that allows injecting custom settings into DataSource configuration. To perform customizations, we could use the DataSourceProperties class to set the new properties that are specific to drivers or pool. To set this property we need to update application.properties or PCF environment variables with the following setting:

spring.datasource.dataSourceProperties.ColumnEncryptionSetting: Enabled

Spring Cloud Connectors will pass this setting to the underlying Datasource.

Note: In SpringBoot 1.5.X, applications that use DBCP as a default connection pool, the following setting will inject custom property:

spring.datasource.connectionProperties: ColumnEncryptionSetting=Enabled

Updating Driver with Azure KeyVault Encryption Provider

Setting the Column Encryption provider is a static method on the SQLConnection and could be done only once in application startup. The easiest pluggability point is to implement BeanPostProcessor which will set the required settings after Datasource bean is initialized. The full code of post-processing is available at GitHub.

To make enabling encryption to be configurable we will add @ConditionalOnProperty and this class postprocessing login will be activated if the setting we described in previous section:

@Component

@ConditionalOnProperty(name = "spring.datasource.dataSourceProperties.ColumnEncryptionSetting", havingValue = "Enabled", matchIfMissing = false)

public class DataSourceBeanPostProcessor implements BeanPostProcessor {

private static final Logger logger = LoggerFactory.getLogger(DataSourceBeanPostProcessor.class);

@Value("${microsoft.vault.clientId}")

private String clientId;

@Value("${microsoft.vault.clientSecret}")

private String clientSecret;

@Override

public Object postProcessBeforeInitialization(final Object bean, final String beanName) throws BeansException {

return bean;

}

@Override

public Object postProcessAfterInitialization(final Object bean, final String beanName) throws BeansException {

if (bean instanceof DataSource) {

if ( clientId ==null || clientId.isEmpty() || clientSecret == null || clientSecret.isEmpty() )

throw new FatalBeanException("AlwaysEncrypted feature requires Service Principal that has access to Vaulr - setup clientId and ClientSecret");

try {

logger.info ("initializing DataSource AlwaysEncryption Vault provider");

SQLServerColumnEncryptionAzureKeyVaultProvider akvProvider =

new SQLServerColumnEncryptionAzureKeyVaultProvider(clientId, clientSecret);

Map<String, SQLServerColumnEncryptionKeyStoreProvider> keyStoreMap = new HashMap<String, SQLServerColumnEncryptionKeyStoreProvider>();

keyStoreMap.put(akvProvider.getName(), akvProvider);

SQLServerConnection.registerColumnEncryptionKeyStoreProviders(keyStoreMap);

} catch (SQLException ex) {

logger.error(ex.getMessage());

throw new FatalBeanException(ex.getMessage());

}

}

return bean;

}

} ClientID and ClientSecret for service principal are passed to the Bean from the environment.

Update application manifest.yml to set the setting described above:

---

applications:

- name: spring-music

memory: 1G

buildpack: java_buildpack_offline

path: build/libs/spring-music-1.0.jar

routes:

- route: spring-music.<pcf-domain>

services:

- springfailoverdb2

env:

microsoft.vault.clientId: <service principal app Id>

microsoft.vault.clientSecret: <service principal secret>

spring.datasource.dataSourceProperties.ColumnEncryptionSetting: Enabled Deploy Application to PCF

Please refer to Part 1 article on the details of creating Azure SQL DB with MASB. Application and sample configuration files are available here.

The summary of build and deployment commands:

./gradlew clean assemble

If a database was not created yet, run following to create the DB and Failover group:

cf create-service azure-sqldb PremiumP2 springdemodb -c ./springmusicdb.json

cf create-service azure-sqldb-failover-group SecondaryDatabaseWithFailoverGroup springfailoverdb2 -c ./failover.json` Deploy the application:

cf push -f manifest.yml

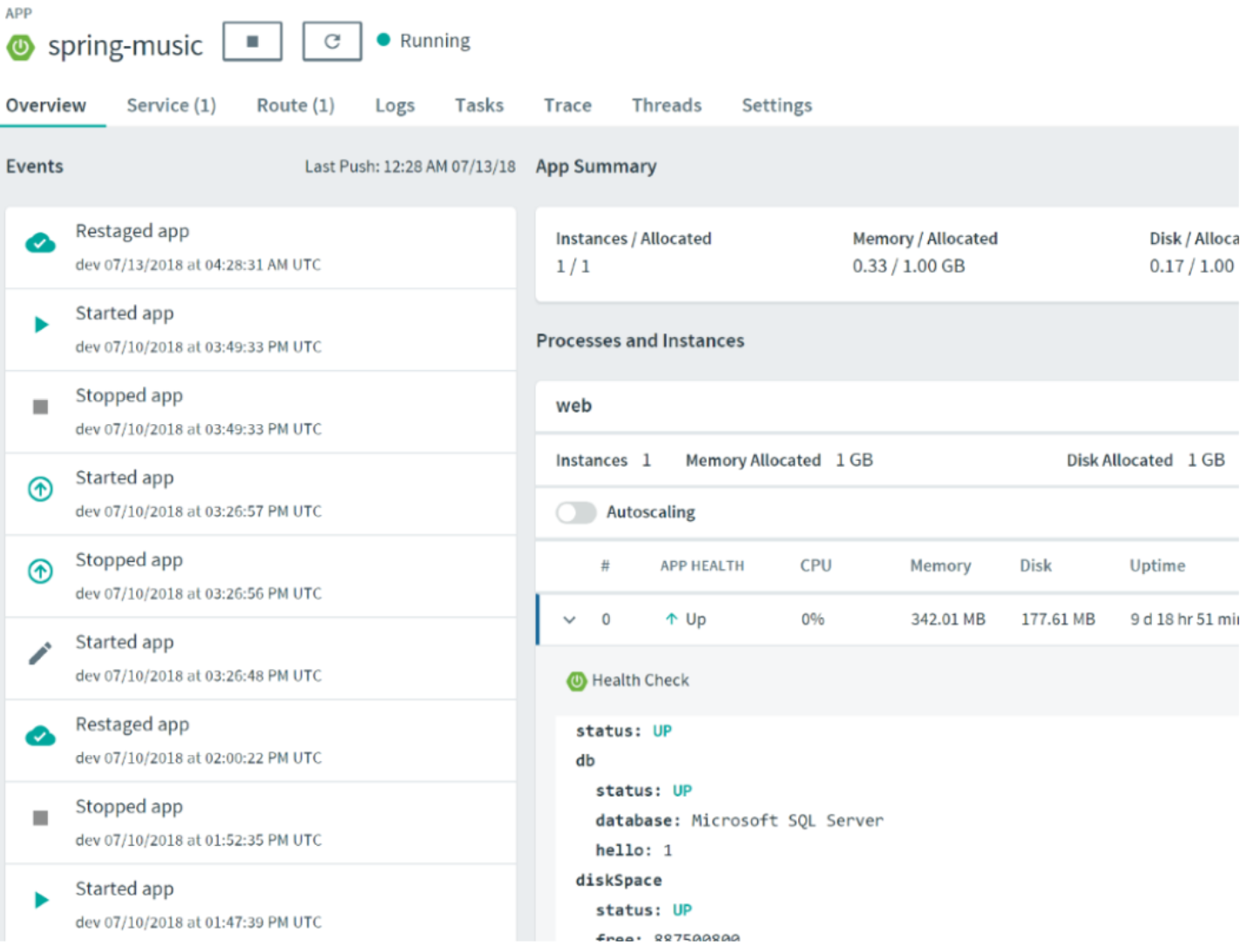

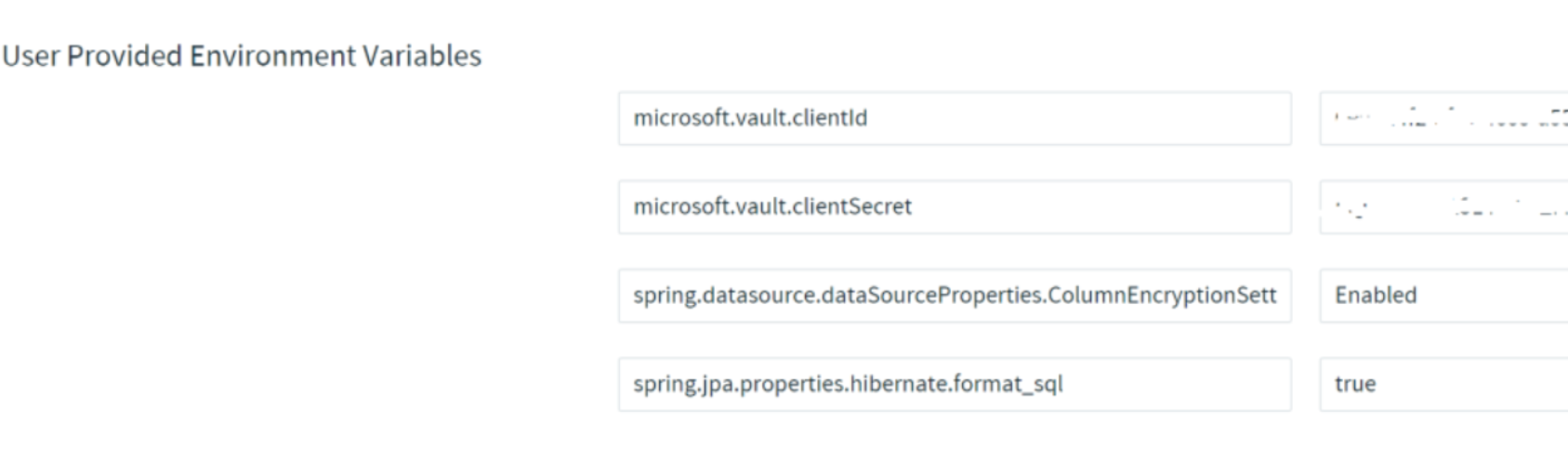

The deployed application should be running and showing a Health connection to SQL Server:

And the settings will have the values to enable Encryption and Service Principal to connect to Azure Key Vault.

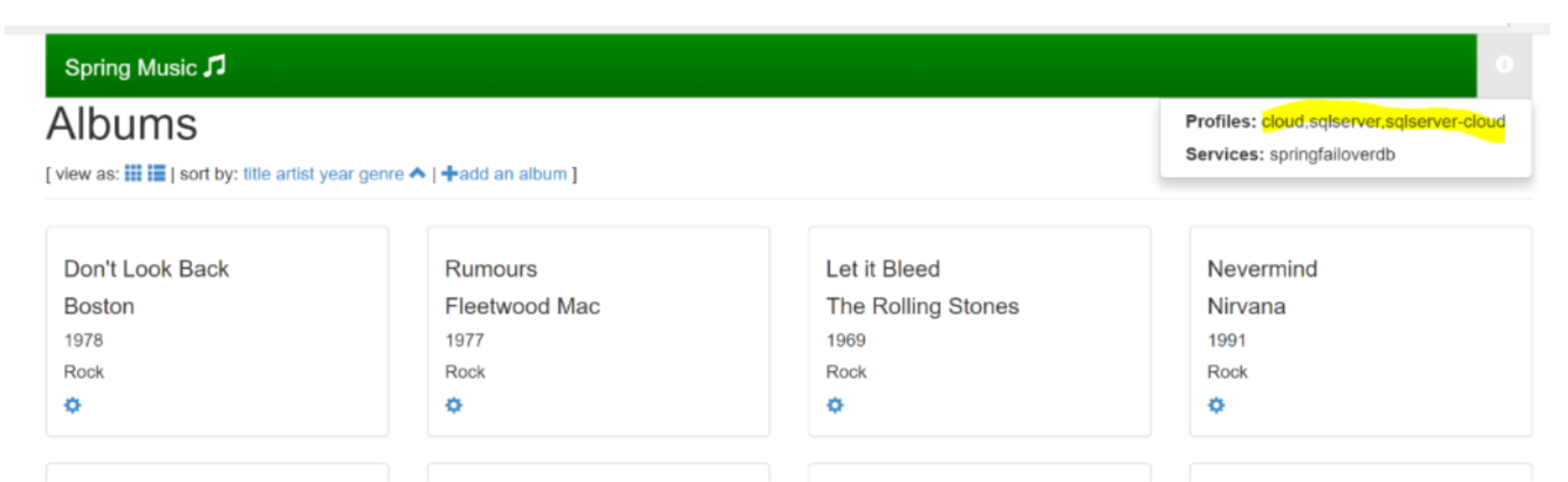

The application will show decrypted values for the fields, and once new items added, you could examine the database to see that the values are encrypted in the DB.

Rotate the Keys

Following the three Rs of enterprise security, we could Rotate the master key periodically. It could be done using SSMS or Powershell. The change is transparent to the application as metadata in the database will point to the new key in Azure KeyVault.

Troubleshooting

During extensive encryption testing, when we were modifying encryption scheme and deleting/re-creating keys, we encountered following errors when running the application

SQL Error: 206, SQLState: S0002

h.engine.jdbc.spi.SqlExceptionHelper : Operand type clash: varchar(6) encrypted with

Or

Some parameters or columns of the batch require to be encrypted, but the corresponding column encryption key cannot be found. Use sp_refresh_parameter_encryption to refresh the module parameters metadata.

Solution is to refresh the cache of the DB:

ALTER DATABASE SCOPED CONFIGURATION CLEAR PROCEDURE_CACHE;

Considerations

Always Encrypted provides a great way to secure your data, but there are number of limitations and considerations that need to be taken into account. Refer to the list of limitations at Microsoft: Always Encrypted features.

Verify which datatypes and Column options are supported.

Although deterministic encryption is supported, not all types of queries are supported on the encrypted fields.

The application must use parameterized queries, as the driver collaborates with the DB engine to determine column encryption.

Conclusion

In this article we have demonstrated “Always Encrypted” — a powerful feature of Azure SQL providing end-to-end security for the data in the cloud. We have shown that only a few minor changes are required in the application, and it is transparent to the queries performed by the application logic.

Opinions expressed by DZone contributors are their own.

Comments