Chaining API Requests With API Gateway

Learn how to handle client requests that should be called in sequence with an open-source API gateway which breaks down the API workflows into more manageable steps.

As the number of APIs that need to be integrated increases, managing the complexity of API interactions becomes increasingly challenging. By using an API gateway, we can create a sequence of API calls that breaks API workflows down into smaller, more manageable steps. For example, when a customer searches for a product on an online shopping website, the platform can send a request to the product search API and then send a request to the product details API to retrieve more information about the products. In this article, we will create a custom plugin for the Apache APISIX API Gateway to handle client requests that should be called in sequence.

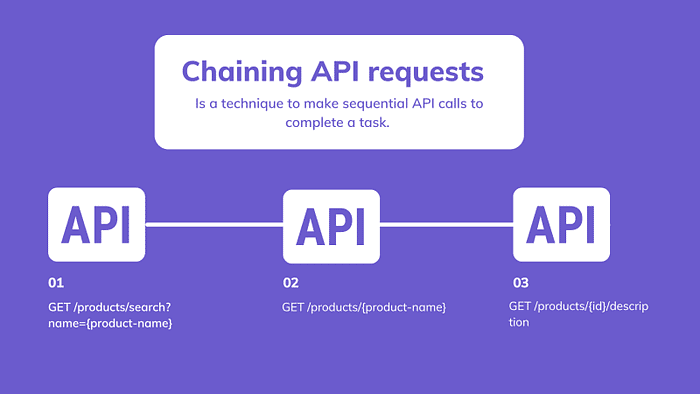

What Is a Chaining API Request, and Why Do We Need It?

Chaining API requests (also called pipeline requests or sequential API calls) is a technique used in software development to manage the complexity of API interactions when software needs multiple API calls to complete a task. It resembles batch request processing, where you group multiple API requests into a single request and send them to the server as a batch. The difference is that a pipeline request is a single request to the server that triggers a sequence of API requests executed in a defined order. Each API request in the sequence can modify the request and response data, and the response from one API request is passed as input to the next. Pipeline requests are useful when a client needs to run a sequence of dependent API requests in a specific order.

In each step of the pipeline, we can transform or manipulate the response data before passing it on to the next step. This is useful when data needs to be normalized or transformed, or when sensitive data must be filtered out before it is returned to the client. It can also help reduce the latency the client sees: the client sends one request to the gateway instead of making a separate round trip for every step of the workflow.

Custom Pipeline-Request Plugin for Apache APISIX

An API gateway can be the right place to implement this functionality because it can intercept all client app requests and forward them to their intended destinations. We are going to use Apache APISIX, a popular open-source API gateway with many built-in plugins. However, at the time of writing, APISIX did not officially support a pipeline-request plugin. Because APISIX supports custom plugin development, we decided to introduce a new plugin that offers this feature. There is a repo on GitHub with the source code, written in the Lua programming language, and a description of the pipeline-request plugin.

With this plugin, you can specify a list of upstream APIs that should be called in sequence to handle a single client request. Each upstream API can modify the request and response data, and the response from one upstream API is passed as the input to the next upstream API in the pipeline. The pipeline is defined in a Route configuration, and the order of the API URLs in that configuration is the order in which the pipeline executes them.

Let’s understand the usage of this plugin with an example. Suppose you have two APIs: one that handles GET /credit_cards requests to retrieve credit card information, and another that receives the previous response data in the body of a POST /filter request and filters out sensitive data (such as the credit card number and expiration date) before returning the response to the client. The filtering step is needed because the credit card API endpoint returns sensitive information that should not be exposed to unauthorized parties. The below diagram illustrates the overall data flow:

- When a client makes a request to the credit card API endpoint of API Gateway to retrieve all credit card info, API Gateway forwards a request to retrieve the credit card data from the credit card backend service.

- If the request is successful and returns credit card data, the data is passed to the next step in the pipeline, which is the security service.

- When the filtered response is received from the security service, API Gateway returns the combined response to the client.
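
The data flow above can be sketched in plain shell, with no gateway or network involved. This is only an illustration of the chaining idea, not the plugin's implementation: `run_pipeline`, `fetch_card`, and `mask_card` are hypothetical names, and the two stand-in steps simply print and rewrite a hard-coded JSON string.

```shell
# Run each step in order. The output of one step becomes the stdin of the next.
# The pipeline stops at the first step that fails.
run_pipeline() {
  pipeline_body=""
  for pipeline_step in "$@"; do
    pipeline_body=$(printf '%s' "$pipeline_body" | "$pipeline_step") || return 1
  done
  printf '%s\n' "$pipeline_body"
}

# Hypothetical stand-in for the credit card backend (ignores its input).
fetch_card() {
  printf '%s' '{"id":1,"credit_card_number":"1234-5678-9012-3456"}'
}

# Hypothetical stand-in for the security service: masks the card number.
mask_card() {
  sed -E 's/("credit_card_number":")[^"]*/\1****-****-****-****/'
}

run_pipeline fetch_card mask_card
```

Each step only sees the body produced by the step before it, which is the same contract the pipeline-request plugin applies to its upstream APIs.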

Pipeline-Request Plugin Demo

For this demo, we are going to use another prepared demo project on GitHub. It contains all the curl command examples used in this tutorial, and its docker-compose.yml file lets you run APISIX and enable the custom plugin without additional configuration.

Prerequisites

- Docker is used to install the containerized etcd and APISIX.

- curl is used to send requests to the APISIX Admin API. You can also use tools such as Postman to interact with the API.

Step 1: Install and Run APISIX and etcd

You can install and start APISIX and etcd by running docker compose up from the project root folder after you fork or clone the project. You may notice that the docker-compose.yml file specifies the volume ./custom-plugins:/opt/apisix/plugins:ro. This mounts the local directory ./custom-plugins, which contains the pipeline-request.lua file with the custom plugin implementation, as a read-only volume in the Docker container at the path /opt/apisix/plugins. This allows custom plugins to be added to APISIX at runtime (this setup applies only if you run APISIX with Docker).
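
One way to check that the mounted plugin was picked up is to ask the Admin API for its list of loaded plugins. The sketch below assumes the Admin API address and key that the curl commands in this tutorial use, and it assumes that your APISIX version exposes the /apisix/admin/plugins/list endpoint. `plugin_loaded` is a hypothetical helper name.

```shell
# Assumed defaults: the Admin API address and key used in this tutorial.
ADMIN_URL="${ADMIN_URL:-http://127.0.0.1:9180/apisix/admin}"
ADMIN_KEY="${ADMIN_KEY:-edd1c9f034335f136f87ad84b625c8f1}"

# Succeeds if the named plugin appears in the Admin API's plugin list.
plugin_loaded() {
  curl -s "$ADMIN_URL/plugins/list" --header "X-API-KEY: $ADMIN_KEY" |
    grep -q "\"$1\""
}

# Usage (needs a running APISIX):
#   plugin_loaded pipeline-request && echo "pipeline-request is loaded"
```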

Step 2: Create the First Route With the Pipeline-Request Plugin

Once APISIX is running, we use a curl command to send an HTTP PUT request to the APISIX Admin API /routes endpoint and create our first route, which listens on the URI path /my-credit-cards.

    curl -X PUT 'http://127.0.0.1:9180/apisix/admin/routes/1' \
    --header 'X-API-KEY: edd1c9f034335f136f87ad84b625c8f1' \
    --header 'Content-Type: application/json' \
    --data-raw '{
      "uri": "/my-credit-cards",
      "plugins": {
        "pipeline-request": {
          "nodes": [
            { "url": "https://random-data-api.com/api/v2/credit_cards" },
            { "url": "http://127.0.0.1:9080/filter" }
          ]
        }
      }
    }'

The important part of the configuration is the “plugins” section, which specifies that the “pipeline-request” plugin should be used for this API route. The plugin configuration contains a “nodes” array, which defines the sequence of API requests that should be executed in the pipeline. You can define one or multiple APIs there. In this case, the pipeline consists of two nodes: the first node sends a request to the https://random-data-api.com/api/v2/credit_cards API to retrieve credit card data, and the second node sends a request to a local API at http://127.0.0.1:9080/filter to filter out sensitive data from the credit card information. The second API is just a serverless function built with the serverless-pre-function APISIX plugin. It acts as a backend service that modifies the response from the first API.
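
To confirm that the route was stored as intended, you can read it back from the Admin API with a GET request to the same routes/1 path. This is a minimal sketch that assumes the same address and key as the PUT command above. `get_route` is a hypothetical helper name.

```shell
# Assumed defaults: the Admin API address and key used in this tutorial.
ADMIN_URL="${ADMIN_URL:-http://127.0.0.1:9180/apisix/admin}"
ADMIN_KEY="${ADMIN_KEY:-edd1c9f034335f136f87ad84b625c8f1}"

# Print the stored definition of the route with the given ID.
get_route() {
  curl -s "$ADMIN_URL/routes/$1" --header "X-API-KEY: $ADMIN_KEY"
}

# Usage (needs a running APISIX):
#   get_route 1
```

The response should include the pipeline-request plugin with both node URLs in the order you configured them.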

Step 3: Create the Second Route With the Serverless Plugin

Next, we configure a new route with ID 2 that handles requests to the /filter endpoint in the pipeline. It also enables APISIX’s existing serverless-pre-function plugin, in which we specify a Lua function for the plugin to execute. This function retrieves the request body (which is the previous response), replaces the credit card number field, and leaves the rest of the response unchanged. Finally, it sets the current response body to the modified request body and sends an HTTP 200 response back to the client. You can modify this script to suit your needs, for example by using the decoded body to perform further processing or validation.

    curl -X PUT 'http://127.0.0.1:9180/apisix/admin/routes/2' \
    --header 'X-API-KEY: edd1c9f034335f136f87ad84b625c8f1' \
    --header 'Content-Type: application/json' \
    --data-raw '
    {
      "uri": "/filter",
      "plugins": {
        "serverless-pre-function": {
          "phase": "access",
          "functions": [
            "return function(conf, ctx)
              local core = require(\"apisix.core\")
              local cjson = require(\"cjson.safe\")
              -- Get the request body
              local body = core.request.get_body()
              -- Decode the JSON body
              local decoded_body = cjson.decode(body)
              -- Hide the credit card number
              decoded_body.credit_card_number = \"****-****-****-****\"
              core.response.exit(200, decoded_body);
            end"
          ]
        }
      }
    }'
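
Before wiring everything together, it can help to call the /filter route directly with a hand-written body and check that the card number comes back masked. This sketch assumes APISIX listens on 127.0.0.1:9080 as in this tutorial. `call_filter` is a hypothetical helper, and the sample payload is made up.

```shell
# Assumed default: the gateway address used in this tutorial.
GATEWAY_URL="${GATEWAY_URL:-http://127.0.0.1:9080}"

# POST a JSON body to the /filter route and print the response.
call_filter() {
  curl -s -X POST "$GATEWAY_URL/filter" \
    --header 'Content-Type: application/json' \
    --data "$1"
}

# Usage (needs a running APISIX with route 2 configured):
#   call_filter '{"credit_card_number":"1234-5678-9012-3456","credit_card_type":"visa"}'
```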

Step 4: Test Setup

Now it is time to test the overall config. With the below curl command, we send an HTTP GET request to the endpoint http://127.0.0.1:9080/my-credit-cards.

    curl http://127.0.0.1:9080/my-credit-cards

Because the corresponding route, configured in the second step, uses the pipeline-request plugin with two nodes, this request triggers the pipeline: it retrieves credit card information from the https://random-data-api.com/api/v2/credit_cards endpoint, filters out sensitive data using the http://127.0.0.1:9080/filter endpoint, and returns the modified response to the client. See the output:

    {
      "uid":"a66239cd-960b-4e14-8d3c-a8940cedd907",
      "credit_card_expiry_date":"2025-05-10",
      "credit_card_type":"visa",
      "credit_card_number":"****-****-****-****",
      "id":2248
    }

As you can see, it replaces the credit card number in the request body (which is actually the response from the first API call in the chain) with asterisks.
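
If you want to script this check, a small helper can confirm that the response carries the masked value instead of a real number. This is a sketch only: `is_masked` is a hypothetical name, and the fixed string assumes the exact mask and compact JSON formatting shown in the output above.

```shell
# Succeeds if the JSON on stdin contains the masked card number.
is_masked() {
  grep -qF '"credit_card_number":"****-****-****-****"'
}

# Usage (needs the two routes from this tutorial):
#   curl -s http://127.0.0.1:9080/my-credit-cards | is_masked && echo "masked"
```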

Summary

So far, we have learned that our custom pipeline-request plugin for the Apache APISIX API Gateway lets us define a sequence of API calls as a pipeline that runs in a specific order. We can also combine this new plugin with existing ones to enable authentication, security, and other API Gateway features for our API endpoints.

Published at DZone with permission of Bobur Umurzokov. See the original article here.
