Change Streams With MongoDB

Let's look at how to integrate applications with the help of Change Streams, and at how MongoDB fits into this.


MongoDB is always one step ahead of other database solutions in providing user-friendly support, with advanced features rolled out to ease operations. MongoDB connectors have long relied on the oplog (operations log) to pull out data updates and generate a stream. The oplog is part of MongoDB's internal replication mechanism. While this approach was highly useful, it was complex and necessarily meant tailing the log.

To simplify things, Change Streams were introduced as a subscription to all insert, update, and delete operations on a MongoDB collection, which fits well with the event-based architecture of Node.js.

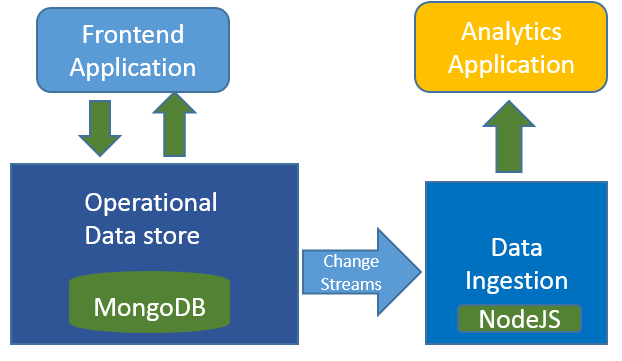

Change Streams can be leveraged to integrate data producer and data consumer applications.
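As a rough sketch of what the consumer side of such an integration might look like, the snippet below routes each change event to a callback chosen by its `operationType` field. The `routeChange` helper and the handler names are hypothetical and only for illustration; the event fields used (`operationType`, `fullDocument`, `updateDescription`, `documentKey`) are those MongoDB includes in change events.

```javascript
// Hypothetical helper: routes a change event to a consumer callback
// chosen by the event's operationType field.
function routeChange(change, handlers) {
  const type = change.operationType;
  if (!Object.prototype.hasOwnProperty.call(handlers, type)) {
    return false; // no consumer registered for this operation type
  }
  handlers[type](change);
  return true;
}

// Example consumers; a real application might forward to a queue or a cache.
const exampleHandlers = {
  insert: (c) => console.log("inserted:", JSON.stringify(c.fullDocument)),
  update: (c) => console.log("updated fields:", JSON.stringify(c.updateDescription.updatedFields)),
  // documentKey is present unless the pipeline projects it out
  delete: (c) => console.log("deleted:", JSON.stringify(c.documentKey)),
};

routeChange({ operationType: "insert", fullDocument: { _id: 1, name: "a" } }, exampleHandlers);
```

Keeping the routing in a plain function like this means it can be exercised with hand-built events before a live change stream is attached.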

Below are the steps to achieve integration with the help of Change Streams.

Configure a MongoDB cluster with a replica set and start the data servers:

mongod --shardsvr --replSet "rs0" --port 27018 --dbpath=D:\mongo\shard0\s0 --logpath=D:\mongo\shard0\log\s0.log --logappend

mongod --shardsvr --replSet "rs0" --port 27019 --dbpath=D:\mongo\shard0\s4 --logpath=D:\mongo\shard0\log\s4.log --logappend

mongod --shardsvr --replSet "rs0" --port 27020 --dbpath=D:\mongo\shard0\s5 --logpath=D:\mongo\shard0\log\s5.log --logappend

Ensure that the dbpath and logpath directories exist before you execute the shell commands.
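Since the dbpath directories and the directories holding the log files must exist before mongod starts, one way to prepare them is a small Node.js script. This is a minimal sketch; `ensureMongoPaths` is a hypothetical helper name, and the paths in the usage comment are the ones from the commands above.

```javascript
const fs = require("fs");
const path = require("path");

// Creates each data directory and the parent directory of each log file.
// Returns the list of directories that were ensured.
function ensureMongoPaths(dbPaths, logPaths) {
  const dirs = [...dbPaths, ...logPaths.map((p) => path.dirname(p))];
  for (const dir of dirs) {
    // recursive: true creates missing parents and does not fail
    // if the directory already exists
    fs.mkdirSync(dir, { recursive: true });
  }
  return dirs;
}

// Usage on Windows with the paths from the commands above:
// ensureMongoPaths(
//   ["D:\\mongo\\shard0\\s0", "D:\\mongo\\shard0\\s4", "D:\\mongo\\shard0\\s5"],
//   ["D:\\mongo\\shard0\\log\\s0.log"]
// );
```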

Connect to one of the servers launched above and initialize the replica set:

mongo --host localhost --port 27020

rs0:SECONDARY> rs.initiate( {

... _id : "rs0",

... members: [

... { _id: 0, host: "localhost:27018" },

... { _id: 1, host: "localhost:27019" },

... { _id: 2, host: "localhost:27020" }

... ]

... })

Start the MongoDB config server replica set (3 servers):

mongod --configsvr --replSet configset --port 27101 --dbpath=D:\mongo\shard\config1 --logpath=D:\mongo\shard\log1\config.log --logappend

mongod --configsvr --replSet configset --port 27102 --dbpath=D:\mongo\shard\config2 --logpath=D:\mongo\shard\log2\config.log --logappend

mongod --configsvr --replSet configset --port 27103 --dbpath=D:\mongo\shard\config3 --logpath=D:\mongo\shard\log3\config.log --logappend

Initialize the config server replica set by connecting to one of the config servers:

mongo --host localhost --port 27101

configset:PRIMARY> rs.initiate( {

... _id: "configset",

... configsvr: true,

... members: [

... { _id: 0, host: "localhost:27101" },

... { _id: 1, host: "localhost:27102" },

... { _id: 2, host: "localhost:27103" }

... ]

... } )

Start the query router (mongos), pointing it at all of the config servers, then connect to the query router and add the replica set as a shard:

mongos --configdb configset/localhost:27103,localhost:27102,localhost:27101 --port 27030 --logpath=D:\mongo\shard\log\route.log

mongo --host localhost --port 27030

mongos> sh.addShard( "rs0/localhost:27018,localhost:27019,localhost:27020" )

Now, with MongoDB replication in place, use the Node.js module and code below to subscribe to updates on a MongoDB collection.

Install the mongodb driver with npm:

npm install mongodb --save

Place the code block below in a Node.js application to subscribe to changes in the form of a stream.

const MongoClient = require('mongodb').MongoClient

const pipeline = [

{

$project: { documentKey: false }

}

];

MongoClient.connect("mongodb://localhost:27018,localhost:27019,localhost:27020/?replicaSet=rs0")

.then(client => {

console.log("Connected correctly to server");

// specify db and collections

const db = client.db("test_db");

const collection = db.collection("test_collection");

console.log(MongoClient);

const changeStream = collection.watch(pipeline);

// start listen to changes

changeStream.on("change", function (change) {

console.log( JSON.stringify(change));

});

})

.catch(err => console.error(err));

Conclusion

MongoDB Change Streams are a wonderful addition to the MEAN development stack, and I thank the MongoDB team for bringing this feature. Integration between producer front-end applications and consumer analytics applications can be done seamlessly by leveraging this feature.
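For a consumer that should not miss events across restarts, the subscription code shown earlier can be extended to remember the resume token of the last processed event. The sketch below assumes the `_id` field of each change event is left intact by the pipeline, as it is with the pipeline used in this article; `watchWithResume` and `memoryTokenStore` are hypothetical helper names, while `resumeAfter` is an option accepted by the driver's `watch()` method.

```javascript
// Hypothetical helper: opens a change stream and records the latest
// resume token so a restarted consumer can continue where it stopped.
function watchWithResume(collection, pipeline, tokenStore, onChange) {
  const token = tokenStore.load(); // undefined on the very first run
  const options = token ? { resumeAfter: token } : {};
  const changeStream = collection.watch(pipeline, options);

  changeStream.on("change", (change) => {
    onChange(change);
    tokenStore.save(change._id); // change._id is the resume token
  });
  return changeStream;
}

// In-memory token store for illustration only; a real consumer
// would persist the token to a file or a database.
function memoryTokenStore(initial) {
  let token = initial;
  return {
    load: () => token,
    save: (t) => { token = t; },
  };
}
```

Saving the token only after `onChange` returns means an event whose handler throws is delivered again on restart, which gives at-least-once rather than exactly-once processing.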
