Continuous Code Reviews & Quality Releases

Let's look at how to build a solid end-to-end code review process with continuous monitoring in place, and deliver high-quality products, using tools such as Git, Stash, Jenkins, SonarQube, JaCoCo, and Ant/Maven, with a Java-based product as the example.

It is very important to write quality code from day one in order to deliver high-quality products. We need to educate developers about quality and encourage it by monitoring and promoting it continuously throughout the development life cycle.

Processes

All we need to know is how to use the available tools and methods, and how best to integrate and orchestrate them into an end-to-end system that we can call the "Review System".

A list of tools and methods that can help in building a Review System is available at http://www.methodsandtools.com/archive/archive.php.

We will need both Manual & Automated review processes to have a solid Review System.

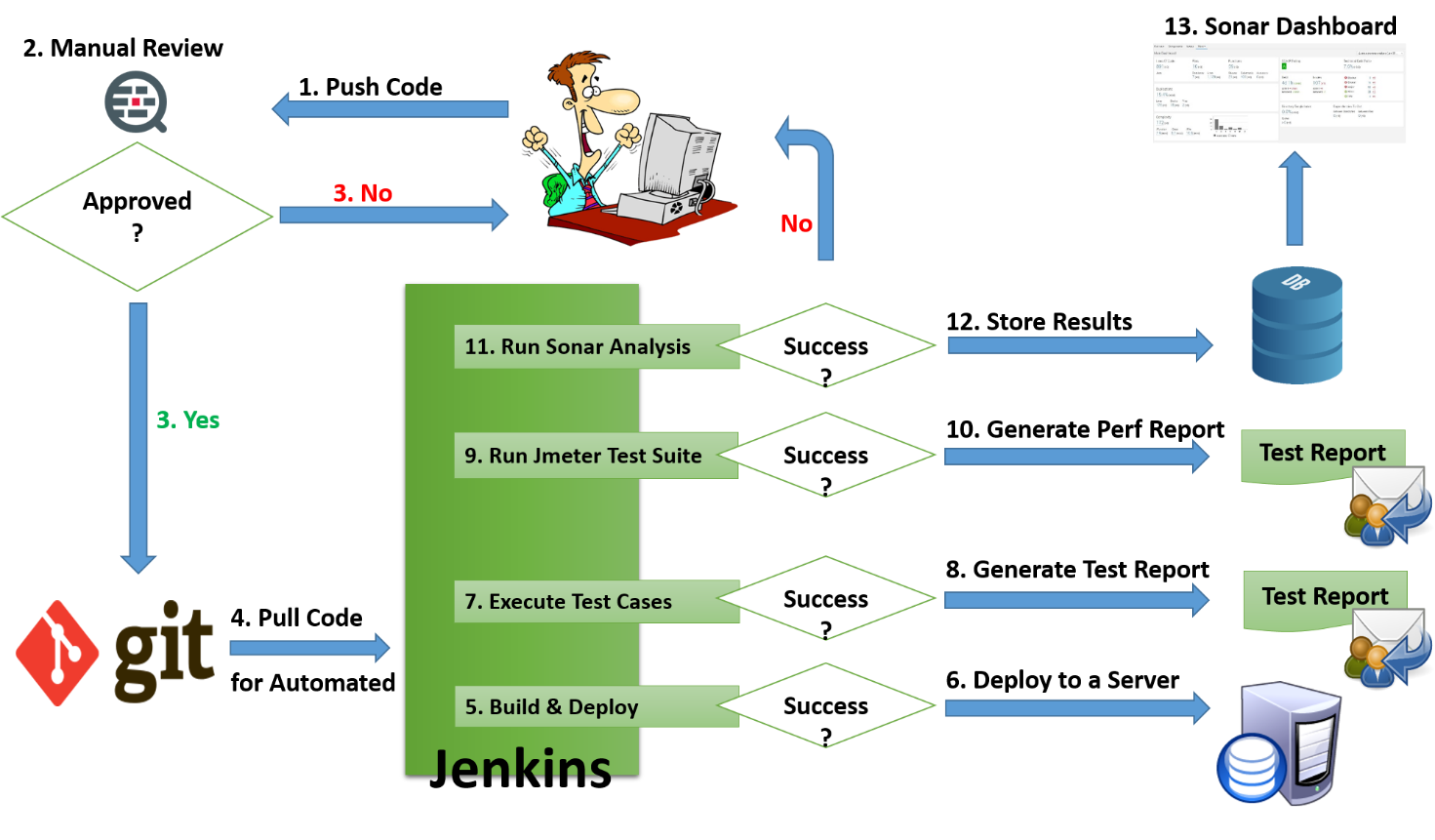

I've tried my best to illustrate a simple example of a Review System. We can add more automated processes to it, such as JUnit, JMeter, SonarQube, and so on, as shown below.

The developer checks in the code using Git commands and requests a review from the appropriate reviewer through Stash.

The reviewer receives an email requesting a code review, goes through the code, and reviews it manually. Making this manual review mandatory with tools like Stash/JIRA helps ensure that reviews happen continuously and that feedback and issues are logged consistently.

Once the manual review is done and accepted, the code is merged into the Git repo automatically. A CI tool like Jenkins then pulls the source code and runs the relevant scripts for automated reviews, which generate reports.

These automated scripts can be written using Ant/Maven/Bash. I have used Ant scripts here for the examples.

Manual Review Process

We should try to automate everything if possible and leave only those areas which cannot be automated for manual reviews.

Manual code reviews can cover the following important areas, among others. The areas to review may vary depending on the phase, code churn, size, and release type (dot, hotfix, or major).

Design & Functional

The reviewer should do an end-to-end review against the specifications. It should cover everything from the overall product design down to each component, with its pros and cons, to make sure the proposed system addresses the business problem.

This could cover architecture, technologies, framework, design patterns, database schemas, end-to-end data flow, and critical components. Important reviewers at this stage can be chief architects and subject matter experts.

Improve the design as the application evolves; there is no perfect or everlasting design.

Compatibility

Backward and forward compatibility reviews play a vital role in ensuring that the application does not break against previous or future versions as it undergoes enhancements and improvements. This could cover operating systems (OS), devices, database schemas, networks, browsers, libraries, and development kits.

But there needs to be a limit to this support as we improve the product, add more features, and take advantage of new technologies. We may not be able to support n-4, n-5, n-6...n-x versions. For example, not every OS version runs on every device version.

Architects and subject matter experts can do a better job with their overall knowledge of a product, its life span, and roadmaps.

Backward compatibility may become a hurdle for the product evolution, but it shouldn't be ignored.
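The idea of limiting support to a fixed number of older versions can be written down as an explicit rule that reviewers check against. The following is only a minimal sketch of one such policy: the class name `SupportWindow`, the use of major version numbers, and the window size are all hypothetical and would have to come from the product's own roadmap.

```java
public class SupportWindow {
    /**
     * Hypothetical policy: the current major version n is supported together
     * with the previous `window` major versions (n-1 ... n-window).
     * Versions newer than n are treated as unsupported in this sketch.
     */
    static boolean isSupported(int currentMajor, int clientMajor, int window) {
        if (window < 0) {
            throw new IllegalArgumentException("window must be >= 0");
        }
        int age = currentMajor - clientMajor;
        return age >= 0 && age <= window;
    }

    public static void main(String[] args) {
        int current = 10; // assumed current major version
        int window = 3;   // assumed policy: support n-1 to n-3
        for (int client = 5; client <= 10; client++) {
            System.out.println("version " + client + " supported: "
                    + isSupported(current, client, window));
        }
    }
}
```

Keeping the rule in one place makes it easy for architects to adjust the window as the roadmap changes, instead of leaving the limit implicit.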

Performance

This is another very important area that should be reviewed in detail from the inception of the code until the product goes live. The reviewer needs in-depth knowledge of each technology involved and of how it is used.

Always look for alternatives, such as utilities, designs, and best practices/patterns, that can improve performance, even by a millisecond.

That said, there may be situations where we need to compromise on performance to achieve functionality, for instance because of technology limitations at that point in time.

For example, a smartphone's battery will drain sooner than that of a non-smart phone from the same manufacturer.

Performance reviews, improvements, and tests should be taken care of from day one.

Security

This is one of the important review checks that needs to be performed. Though we could delegate security reviews to tools like Fortify/FindBugs, the product still needs a manual review, as every system is unique in its design, development, integration, and installation/deployment.

This review could cover threat modelling, penetration testing, integrations with third-party APIs, and so on, to eliminate design, development, configuration, and deployment errors that leave weak areas open to exploitation.

Try to hack your own system with known tools and methodologies, for example SQL injection and cross-site scripting, to identify weak areas and close them before the product launches.
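One way to make such self-attacks repeatable is to keep a known probe string and check how the application renders it. The sketch below illustrates the cross-site scripting case only. `HtmlEscaper` is a hypothetical hand-written helper used for illustration; in a real product a maintained encoding library would normally be a better choice than code like this.

```java
public class HtmlEscaper {
    /** Replaces the five characters that are significant in HTML with entities. */
    static String escape(String input) {
        StringBuilder out = new StringBuilder(input.length());
        for (int i = 0; i < input.length(); i++) {
            char c = input.charAt(i);
            switch (c) {
                case '&':  out.append("&amp;");  break;
                case '<':  out.append("&lt;");   break;
                case '>':  out.append("&gt;");   break;
                case '"':  out.append("&quot;"); break;
                case '\'': out.append("&#x27;"); break;
                default:   out.append(c);
            }
        }
        return out.toString();
    }

    public static void main(String[] args) {
        // A classic probe string used when testing for cross-site scripting.
        String probe = "<script>alert(1)</script>";
        // Prints: &lt;script&gt;alert(1)&lt;/script&gt;
        System.out.println(escape(probe));
    }
}
```

If the probe comes back from a page unescaped, that page is a weak area to close before launch.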

Readability

Writing code is an art. Reviewers should go through the code, review its complexity and comments, and verify it against standards, guidelines, and consistency checks. Any developer other than the author should be able to make out its purpose, identify and fix defects, and enhance it further.

Maintainability

Maintenance is a very important aspect that, if not factored in appropriately, could prove more expensive than the development itself, with much of that cost hidden. Low-maintenance systems avoid long downtimes (high availability) and can be brought back up with little effort.

The author of the code may not always be the person who maintains it. The important reviewers here can be a system administrator and an architect.

Reading and maintaining code should be as simple as a relay race, in which a developer passes the code to others like a baton.

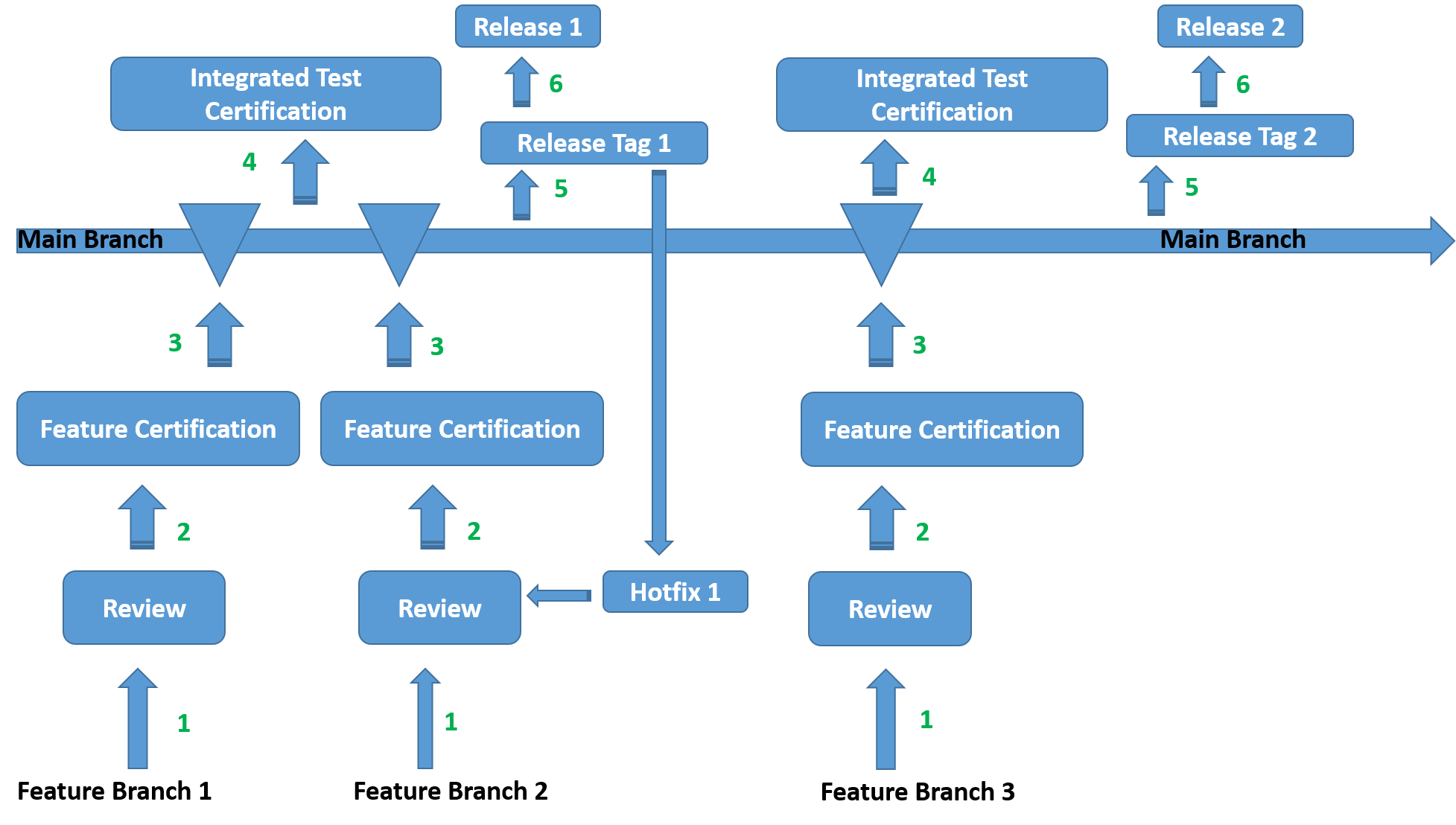

Code Check-ins, Branches & Releases

We can make the review, test, and release processes easier and better with the following practices:

- Maintain a main branch for continuous development and integration.

- Maintain a separate branch for each feature (feature branch) and merge it into the main branch once reviews and tests are done.

- Try to push code in small pieces that are reviewable and testable.

- Try to push code as frequently as possible to avoid bulk merges and conflicts.

- Check in code with proper comments that include feature/defect descriptions and IDs; this helps with quicker traceability, easier reviews, and feedback.

- Cut the release candidate from the main branch and proceed with the release process.

- Always keep the main branch in a stable state.
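The traceability point in the list above can also be checked mechanically before a check-in is accepted. The sketch below is only an illustration: it assumes issue IDs follow a JIRA-style key such as `PROJ-123`, and the class and method names (`CommitMessageCheck`, `firstIssueKey`) are hypothetical, not part of Git, Stash, or JIRA.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class CommitMessageCheck {
    // Assumption: issue IDs look like a JIRA key, e.g. "PROJ-123".
    private static final Pattern ISSUE_KEY =
            Pattern.compile("\\b[A-Z][A-Z0-9]+-\\d+\\b");

    /** Returns the first issue key found in the commit message, if any. */
    static Optional<String> firstIssueKey(String message) {
        if (message == null) {
            return Optional.empty();
        }
        Matcher matcher = ISSUE_KEY.matcher(message);
        return matcher.find() ? Optional.of(matcher.group()) : Optional.empty();
    }

    public static void main(String[] args) {
        String[] samples = {"PROJ-42: fix null check in login", "fixed stuff"};
        for (String sample : samples) {
            String verdict = firstIssueKey(sample)
                    .map(key -> "traceable to " + key)
                    .orElse("rejected, no issue ID");
            System.out.println(sample + " -> " + verdict);
        }
    }
}
```

A check of this kind could be wired into a Git `commit-msg` or server-side hook, so that reviewers never have to ask which feature or defect a change belongs to.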

Review Feedback

Feedback can be integrated with a bug tracking tool like JIRA. You can find more information on integrating Stash with JIRA at https://confluence.atlassian.com/bitbucketserver/jira-integration-776639874.html.

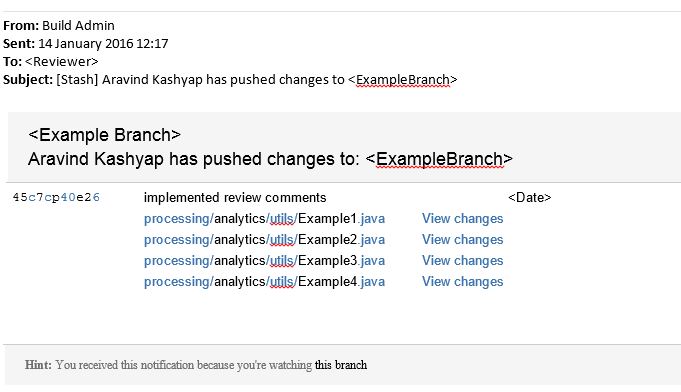

The author sends a request for review through Stash. Below is an example of Review Request mail sent by Stash.

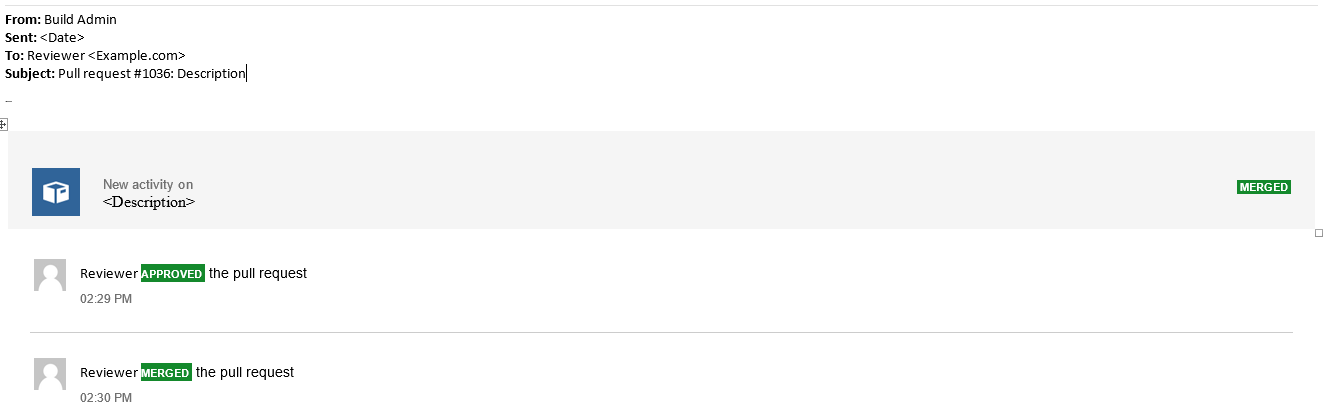

Reviewers go through the comments on the request, review the code, and then provide feedback. Stash also sends a code acceptance mail when the reviewer approves.

Automated Review Process

Reviews of formatting, styling, naming, coverage, standards, and possible security vulnerabilities can be handed to tools like JUnit, Selenium, JMeter, Fortify, and SonarQube.

Here are the steps that could help you build an automated system for code reviews:

- Download & configure Jenkins and SonarQube.

- Choose a database for your system, for example MS SQL Server/MySQL.

- Create a database named "SONAR" on that database server.

- Configure a Jenkins job and set the "Build Trigger" as per the project requirements. For example, unit tests can run daily, and performance/security tests can run weekly.

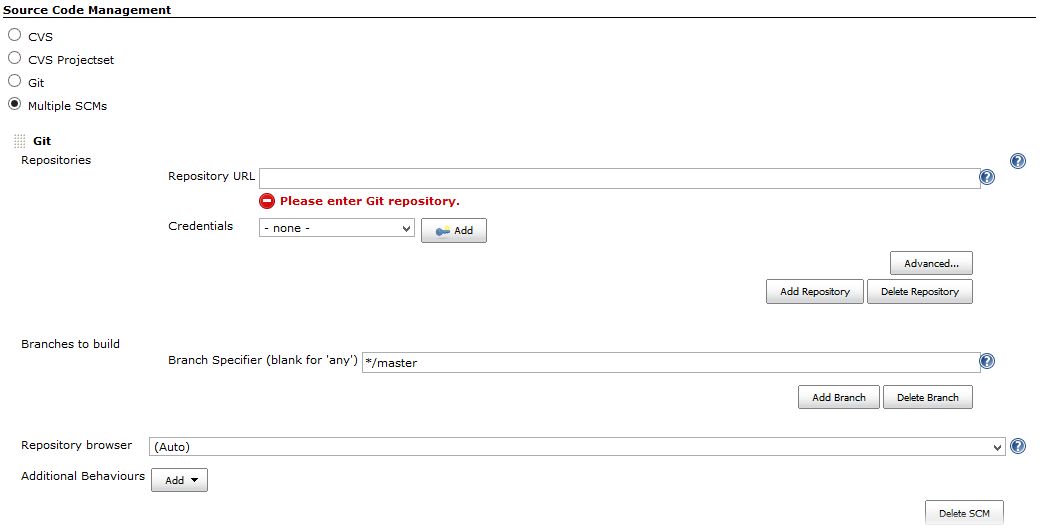

- Configure Git in Jenkins under "Source Code Management" to pull the code.

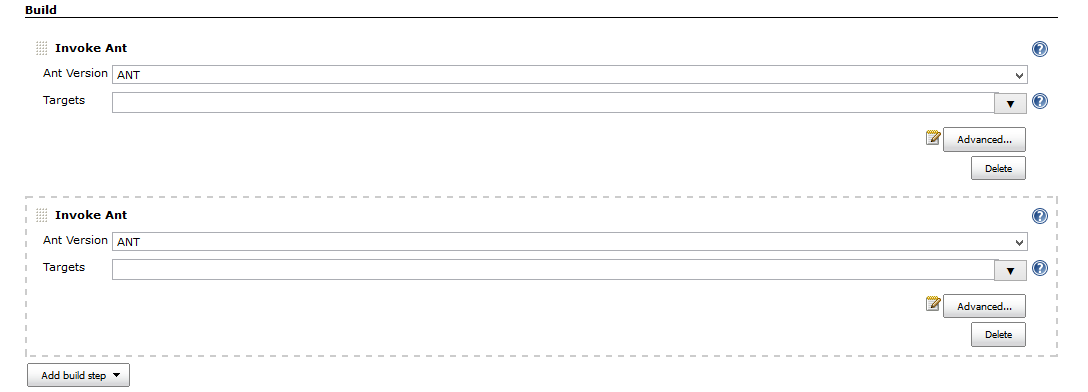

Write an Ant script to build .war or .ear files and deploy to a application server. Invoke this script from Jenkins.

<target name="makewar-AppServer" >

<mkdir dir="${release.dir}/AppServer" />

<war destfile="${release.dir}/AppServer/${ant.project.name}.war" webxml="${web.xml}">

<classes dir="${classes.dir}"/>

<fileset dir="WebContent/">

<exclude name="**/example.jar"/>

</fileset>

<manifest>

<attribute name="Example-Version" value="${LABEL}" />

</manifest>

</war>

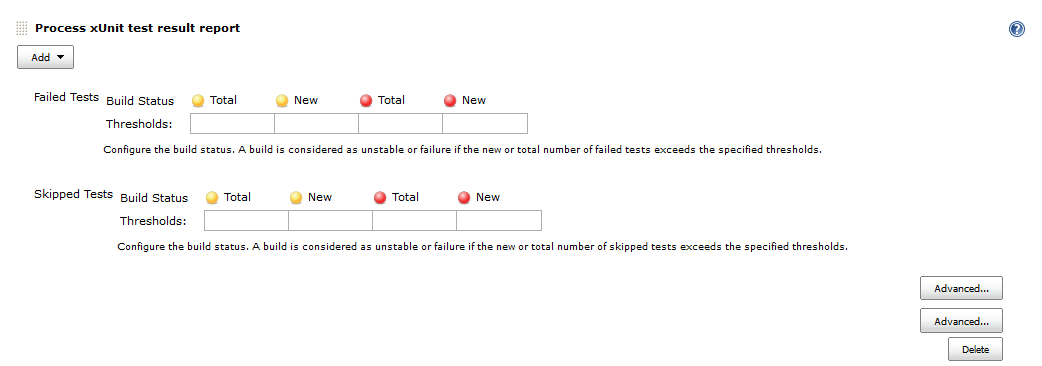

</target>- Invoke unit test script from Jenkins that runs all P0, P1, P2...Pn automated test cases as part of the build to get the JaCoCo, Selenium test results. Execution of automated unit test cases can be a daily job.

More details on Ant task for unit test executions & reports: http://eclemma.org/jacoco/trunk/doc/ant.html

<!-- Requires xmlns:jacoco="antlib:org.jacoco.ant" on the enclosing <project> element -->
<target name="unittest" description="Execute JUnit tests">
    <taskdef uri="antlib:org.jacoco.ant" resource="org/jacoco/ant/antlib.xml">
        <classpath path="${lib.dir}/jacocoant.jar" />
    </taskdef>
    <!-- Execution data file; keep this in sync with sonar.jacoco.reportPath -->
    <property name="jacoco.resultfile" value="jacoco-example.exec" />
    <!-- Run the suites in a forked JVM with the JaCoCo agent attached -->
    <jacoco:coverage destfile="${jacoco.resultfile}">
        <junit fork="true" forkmode="once" printsummary="true" failureproperty="junit.failure">
            <sysproperty key="user.dir" value="${basedir}"/>
            <classpath refid="test.base.path"/>
            <batchtest todir="${build.dir}/test-reports">
                <fileset dir="${build.dir}/test-classes">
                    <!-- batchtest matches compiled class files, hence the .class suffix -->
                    <include name="com/kony/examples/tests/suits/Suite1.class"/>
                    <include name="com/kony/examples/tests/suits/Suite2.class"/>
                    <include name="com/kony/examples/tests/suits/Suite3.class"/>
                    <include name="com/kony/examples/tests/suits/Suite4.class"/>
                    <include name="com/kony/examples/tests/suits/Suite5.class"/>
                    <include name="com/kony/examples/tests/suits/Suite6.class"/>
                </fileset>
                <formatter type="xml"/>
            </batchtest>
        </junit>
    </jacoco:coverage>
    <!-- Turn the execution data into an HTML coverage report -->
    <jacoco:report>
        <executiondata>
            <file file="${jacoco.resultfile}" />
        </executiondata>
        <structure name="${ant.project.name}">
            <classfiles>
                <fileset dir="${classes.dir}" />
            </classfiles>
            <sourcefiles encoding="UTF-8">
                <fileset dir="${src.dir}" />
            </sourcefiles>
        </structure>
        <html destdir="${build.dir}/coverage-report" />
    </jacoco:report>
    <!-- The test-report target is assumed to be defined elsewhere in the build file -->
    <antcall target="test-report"></antcall>
    <!-- Fail the build only after the reports have been generated -->
    <fail if="junit.failure" message="JUnit test(s) failed. See reports!"/>
</target>

- Invoke the SonarQube analysis script from Jenkins to publish the results to SonarQube.

<!-- Replace the UPPER_CASE placeholders with your own values -->
<project name="PROJECT_NAME" default="sonar" basedir="." xmlns:sonar="antlib:org.sonar.ant">
    <property name="base.dir" value="." />
    <property name="reports.junit.xml.dir" value="${base.dir}/build/test-reports" />

    <!-- Define the SonarQube global properties (the most usual way is to pass these properties via the command line) -->
    <!-- Used SQL Server in this example -->
    <property name="sonar.jdbc.url" value="jdbc:jtds:sqlserver://DB_HOST/sonar;SelectMethod=Cursor" />
    <property name="sonar.jdbc.username" value="DB_USER" />
    <property name="sonar.jdbc.password" value="DB_PASSWORD" />

    <!-- Define the SonarQube project properties -->
    <!-- Used Java as source code in this example -->
    <property name="sonar.projectKey" value="org.codehaus.sonar:PROJECT_KEY" />
    <property name="sonar.projectName" value="PROJECT_NAME" />
    <property name="sonar.projectVersion" value="PROJECT_VERSION" />
    <property name="sonar.host.url" value="http://SONAR_HOST:SONAR_PORT" />
    <property name="sonar.language" value="java" />
    <property name="sonar.sources" value="${base.dir}/src" />
    <property name="sonar.java.binaries" value="${base.dir}/build/classes" />

    <!-- Reuse the JUnit and JaCoCo results produced by the unit test job -->
    <property name="sonar.junit.reportsPath" value="${reports.junit.xml.dir}" />
    <property name="sonar.dynamicAnalysis" value="reuseReports" />
    <property name="sonar.java.coveragePlugin" value="jacoco" />
    <property name="sonar.jacoco.reportPath" value="${base.dir}/jacoco-example.exec" />
    <!-- Points at the directory of JUnit XML reports, not at the .exec file -->
    <property name="sonar.surefire.reportsPath" value="${reports.junit.xml.dir}" />
    <property name="sonar.jacoco.antTargets" value="build/TEST_REPORTS_DIR/"/>
    <property name="sonar.libraries" value="${base.dir}/lib" />

    <!-- Define the SonarQube target -->
    <target name="sonar">
        <taskdef uri="antlib:org.sonar.ant" resource="org/sonar/ant/antlib.xml">
            <!-- Update the following line, or put the "sonar-ant-task-*.jar" file in your "$HOME/.ant/lib" folder -->
            <classpath path="path/to/sonar/ant/task/lib/sonar-ant-task-*.jar" />
        </taskdef>
        <!-- Execute the SonarQube analysis -->
        <sonar:sonar />
    </target>
</project>

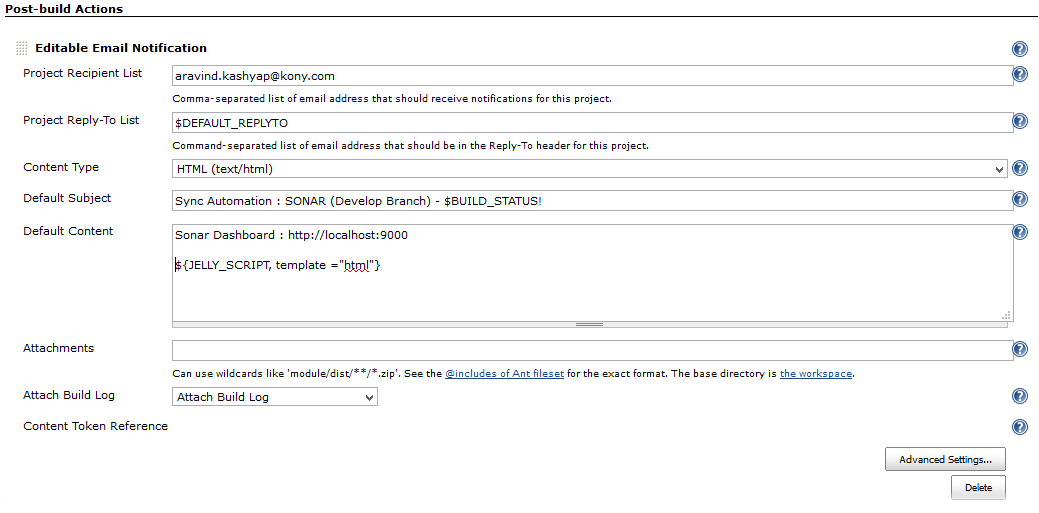

- On completion of the job, Jenkins sends the mail to the "Recipient List".

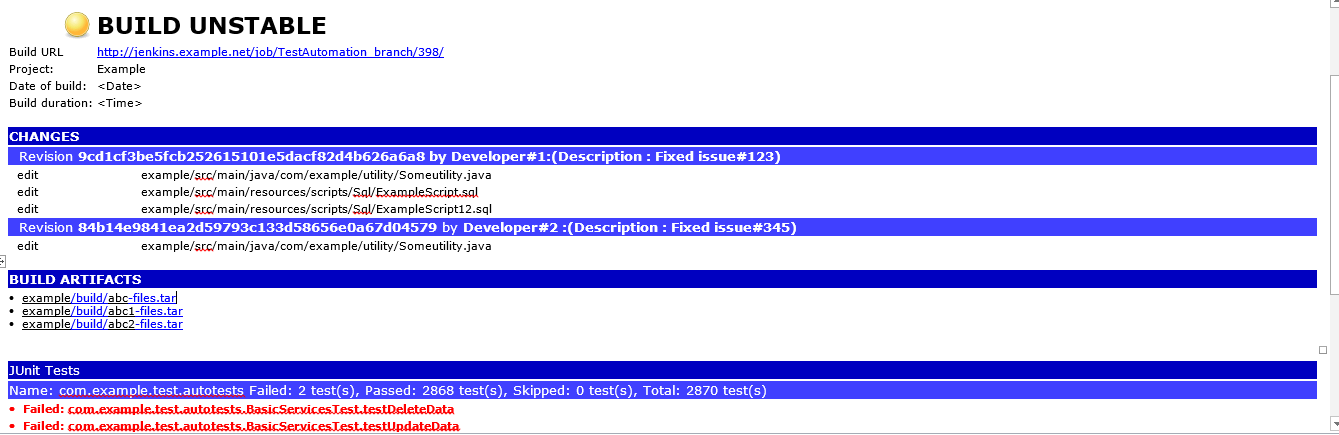

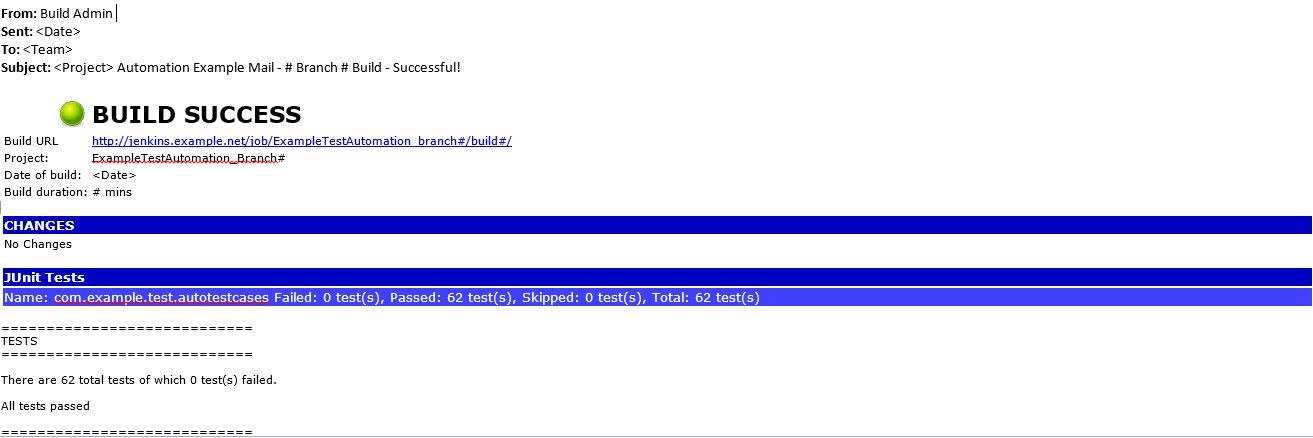

Examples of build success/failure status mails. Look at the details of each mail: it includes the build status, build duration, build branch with version details, changes, artefacts checked in, and unit test statistics.

- You can have your own Jenkins dashboard (like the one the Jenkins project itself runs at https://ci.jenkins-ci.org/) to see the overall status across projects.

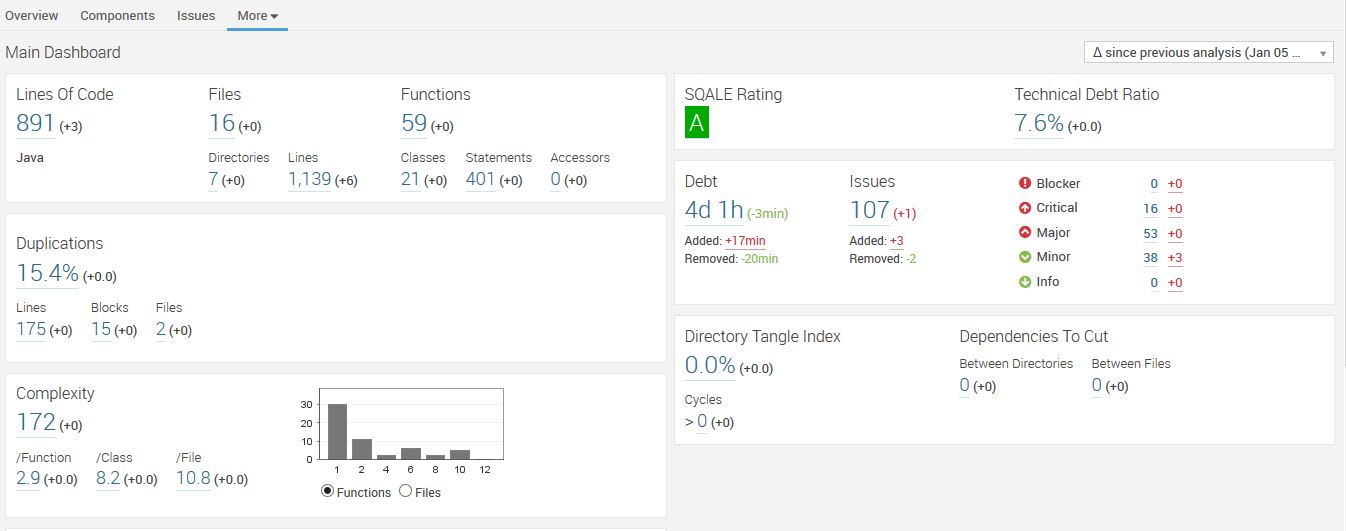

SonarQube

SonarQube comes with various dashboards that help monitor code metrics in detail at the project and portfolio levels. Developers can register, have defects assigned to them, navigate from a defect down to the code, and see the exact cause and a possible fix.

The main dashboard covers:

- Programming Defects

- Code Duplications

- Code Complexity

- Code Coverage
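Of the metrics listed above, code coverage is the simplest to turn into a pass/fail rule for a build. JaCoCo reports coverage as covered and missed counts, and the ratio follows directly from those. The sketch below shows only that arithmetic: the class `CoverageGate`, the 80% threshold, and the sample counts are hypothetical, and SonarQube's own quality gate feature would normally be the place to enforce such a rule.

```java
public class CoverageGate {
    /** Line coverage as a fraction in [0, 1]; an empty code base counts as fully covered. */
    static double coverage(long coveredLines, long missedLines) {
        if (coveredLines < 0 || missedLines < 0) {
            throw new IllegalArgumentException("line counts must be >= 0");
        }
        long total = coveredLines + missedLines;
        return total == 0 ? 1.0 : (double) coveredLines / total;
    }

    /** True when the measured coverage meets the required minimum fraction. */
    static boolean passes(long coveredLines, long missedLines, double minimum) {
        return coverage(coveredLines, missedLines) >= minimum;
    }

    public static void main(String[] args) {
        long covered = 850;   // sample value, not taken from a real report
        long missed = 150;    // sample value, not taken from a real report
        double minimum = 0.80; // assumed team threshold
        System.out.printf("coverage = %.1f%%, gate passed = %b%n",
                coverage(covered, missed) * 100, passes(covered, missed, minimum));
    }
}
```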

Example project dashboard view

Conclusion

A Review System with solid manual and automated processes will help in:

- Compiling, executing test suites, and deploying continuously.

- Reviewing continuously, controlling check-ins, and sharing knowledge.

- Avoiding time lags between development and test phases.

- Shortening QA cycles and avoiding repeated manual tests.

- Producing high-quality releases with continuous integration, parallel reviews, tests, and fixes.

- Releasing frequently, predictably, and continuously.

- Minimizing rework, refactoring, clean-ups, and tech debt as the code grows over time.

- Minimizing manual intervention/corrections and maximizing quality.

- Bringing transparency to everyone (from developer to leadership).

Every single line of code should get tested and produce expected results every single day.

I tried my best to share my thoughts throughout this article. Please feel free to leave any comments or questions you might have.

Opinions expressed by DZone contributors are their own.
