Continuous Development: Building the Thing Right, to Build the Right Thing

DevOps transformation guru Rich Jordan discusses why and how collaborative modeling can provide the foundations for successful testing and automation.


Test automation is vital to any organization wanting to adopt Agile or DevOps, or simply wanting to deliver IT change faster.

If you ask a stakeholder “What do you hope to achieve from your testing?” the most common answer is “to assure quality.” But the more you delve into the organizational dynamics of test automation, the more a different answer emerges.

It seems the overriding motivation for automating testing is not quality, but to go faster and bring the cost of testing down. That sounds a little cynical, but it is surprisingly common when you take a step back and look at organizational dynamics, especially where delivery dates drive an organization’s culture.

You might be thinking, “Isn’t quality implicit in doing the activity of testing anyway?” Or, that by accelerating testing through automation, quality will inevitably pop out. This could be true, but not when there’s a fundamental problem in the way organizations understand quality and the role that testing plays in it. In this instance, faster testing does not equate to better quality.

Ask yourself: how do your test teams report on testing activity and its outcomes? Is it based on tests completed and test coverage, possibly including the dreaded “we’ve got 100% test coverage”? Then consider why stakeholders might still question quality when you tell them you’ve achieved 100% test coverage. What do you really mean by it?

Quality Lessons Learned From 16+ Years of Test Transformation

Having spent many years trying to create technical test capability from within a traditional Center of Excellence, I had many successes. They ranged from creating a centralized automation team and standardizing frameworks to building an environment virtualization capability. Yet all of it seemed somehow too short-term and too bespoke.

It dawned on me, while facing the challenges of creating a centralized test data capability, that what we were doing, and always had been doing, was building on shaky foundations. We didn’t know upfront what the product was supposed to do. How could we then prove it did it, let alone try to automate unknown requirements, create test data for them, and create representative interfaces to test them?

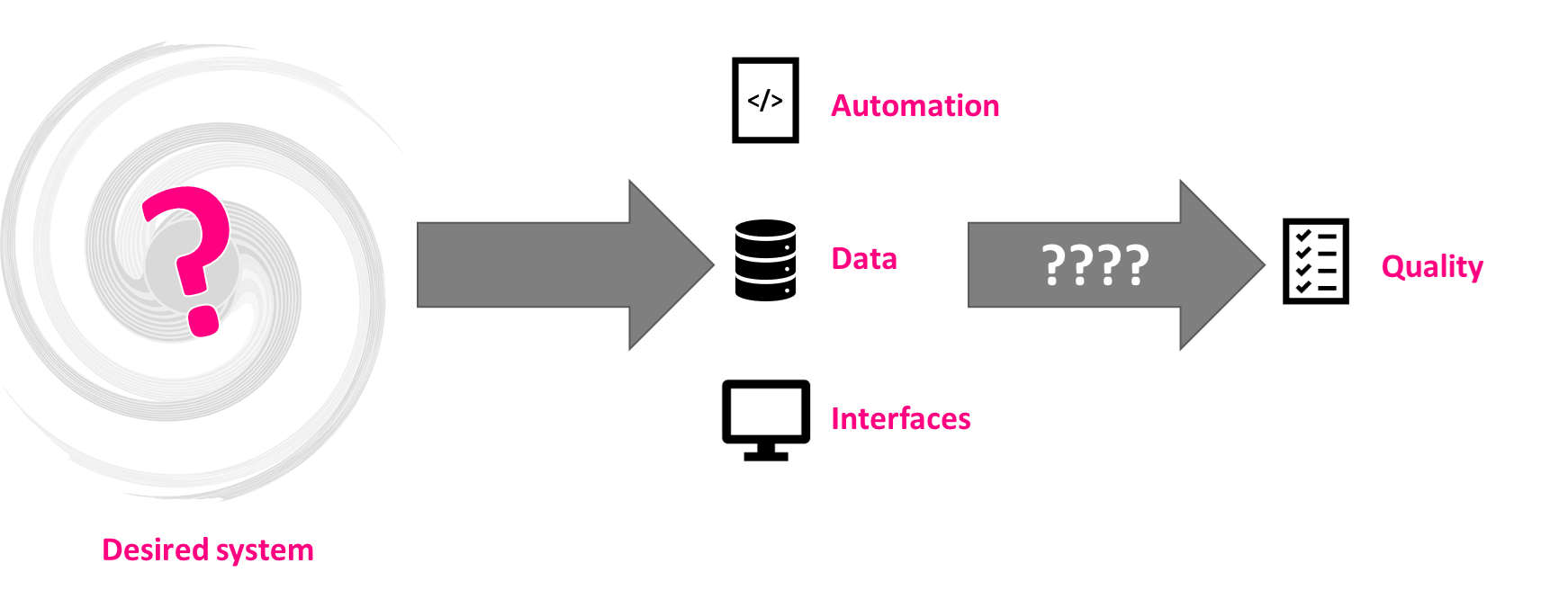

Figure 1: Does limited upfront understanding risk a “garbage in, garbage out” scenario in your testing?

In truth, we were being reactive, redesigning the systems through the medium of testing. The rate of change wasn’t helping, nor were the traditional ways of creating mounds of test cases in the usual test management tools. In fact, both were exacerbating the problem, creating ever more tests of questionable value while giving stakeholders the false confidence that running hundreds of tests equates to “quality.” We were shifting right when we should have been shifting left.

Shift Left to Build Better Things

But shift left shouldn’t apply to testing alone, a point that is often overlooked. As a collective, we’d let ourselves push IT change to the right, knowing the foundations weren’t right. As testers, we’d lost the craft of testing, growing more focused on making the automation work than on ensuring that the automation tests the right things.

The same can be said of the other amigos we work with in design and development: there doesn’t seem to be a common understanding of “Build the thing right, build the right thing!”

An obvious leading indicator of this problem for your test team is to ask: how does your test approach complement the architecture or design patterns of the team, if there even are any? Or how does it complement the delivery methodology? If your teams are working in two-week sprints and you have a big end-to-end testing phase at the end, chances are you’ve got Agile theater and need to look at “building the thing right” again as a team.

Testing's Critical Role in Understanding and Collaboration

Concentrating on building the thing right will very quickly lead to collaboration and conversation around “unknowns”: solutions that start life as black boxes or have grown too complex over time. Using techniques like modeling to prototype design thinking and capture conversations can quickly help to visualize previously undiscovered rules or assumptions that have muddied the team’s and stakeholders’ understanding of how the system actually works.

I’m keen to stress here that, although tooling is an enabler, the change certainly doesn’t end with buying a tool and doing the same things we always have. That would just be committing the same mistakes under a new buzzword, a mistake we are all currently living with in one way or another.

What we are really talking about, at its core, is critical questioning and collaboration, as well as understanding where to target appropriate effort to achieve a quality outcome.

We should build models, but as an aid to the conversations, queries, rules, and more that we need to elicit from various stakeholders. Models provide a structured representation of “Are we building the right thing?” Through this questioning, we realize that we actually have many customers whose needs we must meet (compliance, security, and operations, for example), as well as the end consumer of the software being created.

Models allow us as testers to have a richer conversation, but they also make up the “just enough documentation” for the wider team. In this way, our models become the living specifications:

Figure 2: Modeling allows for a richer conversation and provides the living documentation needed for the wider team.

Models Should Break Down Complexity, Not Add It

Modeling is immensely valuable, but the approach taken matters. “Static” modeling, in design and test, offers short-term benefits but can become a source of technical debt as the modeled systems inevitably grow more complex and more features are added.

Static modeling might include using products like Office (Word, Excel, Visio), or some of the newer tools that seem to come hand-in-hand with the Agile sales pitch. It differs from “living” specifications (models), which can guide you to the impact of change. Living models are the key factor in keeping the continuous delivery train rolling and in preventing “layering,” in which understanding is spread across multiple locations and becomes hard to maintain.

Modeling to Become Test Automation-Ready

So, in our teams we now have models, and those models are based on rules of the system and methodical questioning. We now have a tick in the box for building the right thing! At the same time, we’ve also created the perfect environment of predictable and repeatable criteria with absolute expected results. And now we are ready to give the stakeholders what they want... automation!

The difference is, we are now creating automation for all the right reasons. We know that each test derived from the model is a valuable one, meaning we can confidently articulate that testing activities achieve the verification element of the solution:

Figure 3: Test automation is rooted in a conversation and consensus regarding the desired system, articulating that we are testing the right things.
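To make the idea of deriving tests from a model concrete, here is a minimal Python sketch. It is a hypothetical illustration, not the author’s tooling: it assumes a business flow captured as a directed graph (the `ORDER_FLOW` steps and the `derive_test_paths` function are invented for this example), enumerates every path from the start step to a terminal step, and treats each path as one test case whose final step is the expected outcome. Loops, such as retrying after a declined card, are bounded by an assumed `max_visits` limit so the enumeration terminates.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical flow model agreed in conversation with stakeholders.
# Keys are steps; values are the steps that may follow. A step with no
# successors is a terminal outcome (the expected result of a test).
ORDER_FLOW: Dict[str, List[str]] = {
    "start": ["enter_details"],
    "enter_details": ["validate_card"],
    "validate_card": ["order_confirmed", "card_declined"],
    "card_declined": ["enter_details", "abandon"],
    "order_confirmed": [],
    "abandon": [],
}


def derive_test_paths(
    model: Dict[str, List[str]], start: str = "start", max_visits: int = 2
) -> List[List[str]]:
    """Enumerate start-to-terminal paths; each path is one derived test case.

    No step may appear more than `max_visits` times in a path, which bounds
    loops (e.g. retry after a declined card) so enumeration terminates.
    """
    paths: List[List[str]] = []

    def walk(step: str, path: List[str], visits: Counter) -> None:
        successors = model.get(step, [])
        if not successors:
            # Terminal step reached: a complete end-to-end scenario.
            paths.append(path)
            return
        for nxt in successors:
            if visits[nxt] >= max_visits:
                continue  # skip transitions that would exceed the loop bound
            walk(nxt, path + [nxt], visits + Counter({nxt: 1}))

    walk(start, [start], Counter({start: 1}))
    return paths


if __name__ == "__main__":
    for number, path in enumerate(derive_test_paths(ORDER_FLOW), start=1):
        steps = " -> ".join(path[:-1])
        print(f"TC{number}: {steps} => expect {path[-1]}")
```

Run as a script, this prints four derived test cases, including the retry path through `card_declined`, and together they exercise every transition in the model. Because the tests come from the model rather than being written by hand, changing a transition changes the derived tests, which is one way a model can stay a living specification rather than a static one.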

While we derive tests from the model, we should always augment our testing with exploration. What’s key is that we feed what we learn back into our models, so that we are always stretching the bounds of understanding, accepting that we can never know everything:

Figure 4: Testing volcanoes and the importance of continual exploration/learning.

Modeling to Make Sure We Build Right and Test Right

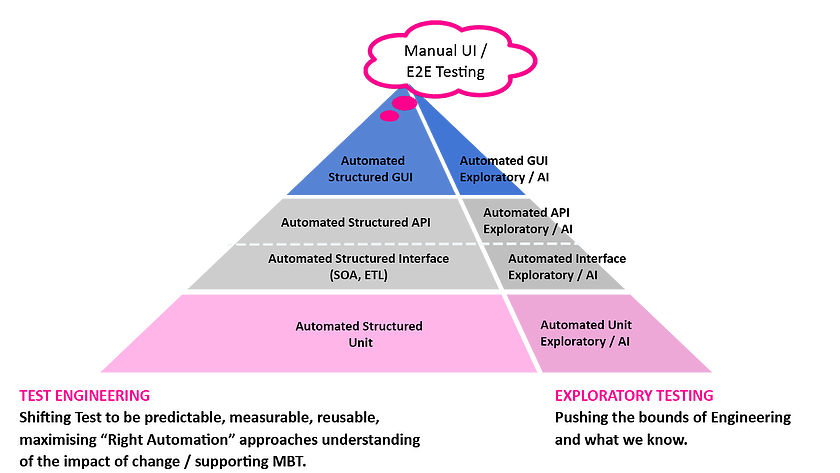

So far, so good: we’ve started modeling (structured flows), asked questions, and all of our problems have gone away. Except they won’t, if we don’t change where we target our validation. If we concentrate too heavily on business flows alone, we create business flow models that in turn force a test approach that is too UI-based. Yet we know testing on the UI is slow, and UI automation is the hardest to achieve.

Instead, we need the right-shaped approach to ensure that we validate how things are intended to work beneath the surface of the “iceberg” of the solution we are being asked to test. We must validate (model) at the service/API level, and those models have to connect to the business flows:

Figure 5: Testing across the whole tech stack and testing pyramid.

Otherwise, how will verification ever have a chance of proving something that’s never been defined anywhere other than in a developer’s head or in an out-of-date, happy-path, static specification? Infrastructure is often ignored, but can that still be the case given the proliferation of the cloud?
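Validating at the service/API level can also be sketched in code. The Python below is a hypothetical illustration, not a prescribed method: it assumes a single service operation (the invented `validate_card` function stands in for a real API) and a decision table of agreed rules, `CARD_RULES`, whose outcomes are named after the business-flow steps they lead to. The hypothetical `check_rules` function exercises every input combination directly against the service, with no UI involved, and reports both mismatches and combinations the model never defined. The latter are exactly the “unknowns” worth taking back to the conversation.

```python
from itertools import product
from typing import Callable, Dict, List, Optional, Tuple

Inputs = Tuple[bool, bool]  # (card_expired, sufficient_funds)

# Hypothetical service-level rules agreed with stakeholders. Outcomes are
# named after the business-flow steps they lead to, linking the two models.
CARD_RULES: Dict[Inputs, str] = {
    (False, True): "order_confirmed",
    (False, False): "card_declined",
    (True, True): "card_declined",
    # (True, False) deliberately left undefined to show a gap being surfaced.
}


def validate_card(card_expired: bool, sufficient_funds: bool) -> str:
    """Hypothetical stand-in for the real service/API under test."""
    if card_expired or not sufficient_funds:
        return "card_declined"
    return "order_confirmed"


def check_rules(
    service: Callable[[bool, bool], str], rules: Dict[Inputs, str]
) -> List[Tuple[Inputs, Optional[str], str]]:
    """Call the service for every input combination and compare with the rules.

    Returns (inputs, expected, actual) for each mismatch; expected is None
    where the rule table has no entry, i.e. behavior nobody has defined.
    """
    findings = []
    for inputs in product([False, True], repeat=2):
        expected = rules.get(inputs)
        actual = service(*inputs)
        if expected != actual:
            findings.append((inputs, expected, actual))
    return findings


if __name__ == "__main__":
    for inputs, expected, actual in check_rules(validate_card, CARD_RULES):
        label = "UNDEFINED RULE" if expected is None else f"expected {expected}"
        print(f"{inputs}: {label}, service returned {actual}")
```

Here the only finding is the undefined `(True, False)` combination: the service declines the card, but nobody has agreed that it should. Adding that rule to the table makes the check pass, and because outcome names match business-flow steps, the table also hints at which flow paths a service-level change could affect.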

A Quality-Centric Approach

Breaking down traditional silos and understanding quality requires consensus on what quality means before it can be proven. This seems to be the fundamental “elephant in the room,” often missed in the pursuit of continuous testing. Where it is understood, automation thrives.

Yet the interesting cultural shift is that those stakeholders who sponsor these high-performing teams also understand that quality isn’t born from test automation. Instead, automation is an outcome of taking the time to define upfront what quality means.

Inspection does not improve the quality, nor guarantee quality. Inspection is too late. The quality, good or bad, is already in the product. As Harold F. Dodge said, “You cannot inspect quality into a product.”

- W. Edwards Deming, Out of the Crisis (The MIT Press, 2000), p. 29

Published at DZone with permission of Rich Jordan. See the original article here.

