Deploying a Scala API on OpenShift With OpenShift Pipelines

In this article, learn how to deploy a simple API on OpenShift using the Pipelines feature and how to build it with sbt, the Scala build tool.


I recently took a course on Scala basics. To further my knowledge, I decided to deploy a simple API on OpenShift using the Pipelines feature and build it with sbt, the Scala build tool (not Maven, as there are plenty of tasks for that).

Phase 1

First, we need an app. As part of the course, I developed a simple expense-tracking API that has all the CRUD features and an endpoint to sum all your expenses. I'm going to walk you through the app, which is built with the Play Framework.

Here are the common CRUD operations, starting with the two read operations, getAll and getById:

def getAll() = Action {
  if (expenseList.isEmpty) {
    NoContent
  } else {
    Ok(Json.toJson(expenseList))
  }
}

def getById(expenseId: Long) = Action {
  val found = expenseList.find(_.id == expenseId)
  found match {
    case Some(value) => Ok(Json.toJson(value))
    case None => NotFound
  }
}

Delete:

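def deleteExpense(expenseId: Long) = Action {
  // .get throws on a missing id, so match on the Option instead
  expenseList.find(_.id == expenseId) match {
    case Some(value) => expenseList -= value; Ok(Json.toJson(expenseList))
    case None => NotFound
  }
}

Create and Update: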

def updateExpense() = Action { implicit request =>
  val updatedExpense: Option[Expense] = request.body.asJson.flatMap(Json.fromJson[Expense](_).asOpt)
  updatedExpense match {
    case Some(updated) =>
      expenseList.find(_.id == updated.id) match {
        case Some(existing) =>
          // dropWhileInPlace only strips a matching prefix, so remove the found element directly
          expenseList -= existing
          expenseList += updated
          Accepted(Json.toJson(expenseList))
        case None => NotFound
      }
    // A missing or invalid JSON body is a client error rather than an exception
    case None => BadRequest
  }
}

def addNewExpense() = Action { implicit request =>
  val content = request.body
  val jsonObject = content.asJson
  val newExpense: Option[Expense] = jsonObject.flatMap(Json.fromJson[Expense](_).asOpt)
  newExpense match {
    case Some(newItem) =>
      expenseList += Expense(newItem.id, newItem.desc, newItem.paymentMethod, newItem.amount)
      Created(Json.toJson(newExpense))
    case None =>
      BadRequest
  }
}

This is a simple prototype for an expense tracker. I intend to extend it with more functionality while writing more tutorials and getting into more advanced Scala and OpenShift topics.
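The endpoint that sums all expenses is mentioned above but not shown. As a minimal sketch of how that logic might look, assuming an in-memory ListBuffer like expenseList and an Expense model with the four fields used in addNewExpense (the field types, the ExpenseStore object, and its method names are assumptions for illustration, not the app's actual code):

```scala
import scala.collection.mutable.ListBuffer

// Field names follow the article; the field types are assumptions.
final case class Expense(id: Long, desc: String, paymentMethod: String, amount: BigDecimal)

// Hypothetical in-memory store, standing in for the controller's expenseList.
object ExpenseStore {
  private val expenses = ListBuffer.empty[Expense]

  def add(expense: Expense): Unit = expenses += expense

  // Returns false when the id is unknown, which a controller could map to NotFound.
  def delete(id: Long): Boolean =
    expenses.find(_.id == id) match {
      case Some(existing) => expenses -= existing; true
      case None           => false
    }

  // Sum of all amounts; BigDecimal avoids floating-point rounding on money.
  def total: BigDecimal = expenses.map(_.amount).sum
}
```

A controller action could then wrap ExpenseStore.total in an Ok response, in the same way getAll wraps the list.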

Phase 2

Now, all we need is an OpenShift cluster with OpenShift Pipelines installed. For this, you can always request a Developer Sandbox cluster to put this to the test.

Phase 3

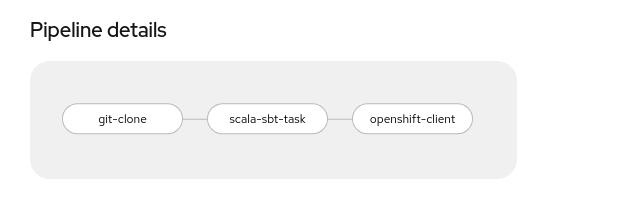

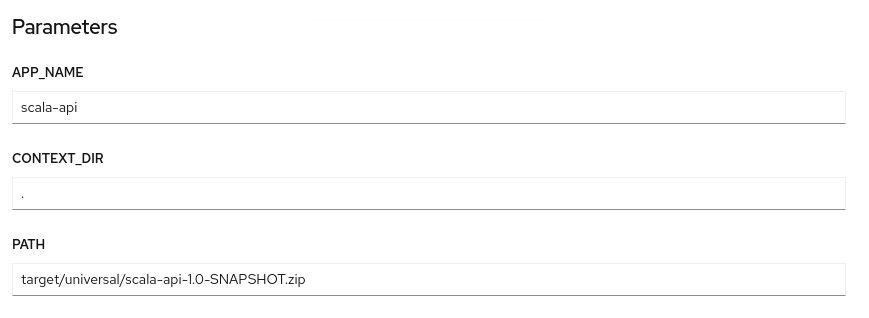

Let's start building the pipeline for this app. The pipeline will consist of three stages:

Git clone -> build using the Scala build tool (sbt) with a custom task -> deploy the app leveraging the BuildConfig and DeploymentConfig resources, as shown in the pipeline image.

The git clone task is as simple as it gets: we just configure the URL of the Git repo with the code and declare a workspace to clone into:

- name: git-clone
  params:
    - name: url
      value: 'https://github.com/raffamendes/scala-api.git'
    - name: submodules
      value: 'true'
    - name: depth
      value: '1'
    - name: sslVerify
      value: 'true'
    - name: deleteExisting
      value: 'true'
    - name: verbose
      value: 'true'
    - name: gitInitImage
      value: >-
        registry.redhat.io/openshift-pipelines/pipelines-git-init-rhel8@sha256:da1aedf0b17f2b9dd2a46edc93ff1c0582989414b902a28cd79bad8a035c9ea4
    - name: userHome
      value: /tekton/home
  taskRef:
    kind: ClusterTask
    name: git-clone
  workspaces:
    - name: output
      workspace: workspace

After that, we need to configure the custom Scala build tool task built for this. Let's first look at the task code:

apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: scala-sbt-task
spec:
  params:
    - description: The workspace source directory
      name: CONTEXT_DIR
      type: string
    - default: build
      description: SBT Arguments
      name: ARG
      type: string
  steps:
    - env:
        - name: SBT_ARG
          value: $(params.ARG)
      image: 'quay.io/rcmendes/scala-sbt:0.1'
      name: build-app
      resources: {}
      script: |
        #!/bin/bash
        sbt ${SBT_ARG}
      workingDir: $(workspaces.source.path)/$(params.CONTEXT_DIR)
  workspaces:
    - description: The workspace containing the scala App
      name: source

It's actually really simple. It takes only two parameters: one is the directory to execute from, and the other is the argument for the build tool.

Knowing that, we now configure the task in the pipeline:

- name: scala-sbt-task
  params:
    - name: CONTEXT_DIR
      value: $(params.CONTEXT_DIR)
    - name: ARG
      value: dist
  runAfter:
    - git-clone
  taskRef:
    kind: Task
    name: scala-sbt-task
  workspaces:
    - name: source
      workspace: workspace

Last but not least, we configure an OpenShift CLI task to start the build and roll out the DeploymentConfig resource:

- name: openshift-client
  params:
    - name: SCRIPT
      value: >-
        oc start-build $(params.APP_NAME)
        --from-archive=$(params.CONTEXT_DIR)/$(params.PATH) --wait=true &&
        oc rollout latest scala-api
    - name: VERSION
      value: latest
  runAfter:
    - scala-sbt-task
  taskRef:
    kind: ClusterTask
    name: openshift-client
  workspaces:
    - name: manifest-dir
      workspace: workspace

Just a little explanation here: we use a binary build because of the way a Scala Play Framework app is built. The dist argument passed to sbt in the previous task packages the app as an archive, which oc start-build uploads with --from-archive. When the build is done, we start a rollout.
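Tekton resolves the $(params.NAME) placeholders itself before the script runs. To make the resulting command easier to picture, here is a small illustrative sketch that mimics that substitution in plain Scala. The ParamSubstitution object is hypothetical, and the parameter values used with it are assumed examples, not values taken from the pipeline:

```scala
import scala.util.matching.Regex

// Hypothetical helper that imitates Tekton's $(params.NAME) substitution for illustration only.
object ParamSubstitution {
  private val Placeholder: Regex = """\$\(params\.([A-Za-z0-9_-]+)\)""".r

  // Replaces each known placeholder with its value and leaves unknown ones untouched.
  def substitute(script: String, params: Map[String, String]): String =
    Placeholder.replaceAllIn(
      script,
      m => Regex.quoteReplacement(params.getOrElse(m.group(1), m.matched))
    )
}
```

With assumed values such as APP_NAME = scala-api, CONTEXT_DIR = app, and PATH pointing at the zip produced by sbt dist, the first part of the script resolves to a single oc start-build command with a concrete --from-archive path.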

A few more preparations remain before we can run the pipeline and deploy the app.

Phase 3.5

Before we start our pipeline, we need to create the BuildConfig and the DeploymentConfig, expose the DeploymentConfig as a Service, and create a Route so we can access the app from outside OpenShift.

First we create the build:

oc new-build quay.io/rcmendes/scala-sbt:0.2 --name=scala-api --binary=true

For this build, we use the scala-sbt image from the task (tagged 0.2 here), as it contains all the necessary packages for this to work.

Now we create the DeploymentConfig. On OpenShift 4.5 and below, we use this command:

oc new-app scala-api --allow-missing-imagestream-tags

On OpenShift 4.6 and onwards, we use this:

oc new-app scala-api --allow-missing-imagestream-tags --as-deployment-config

We set the triggers to manual so that a rollout is started only by our pipeline:

oc set triggers dc scala-api --containers='scala-api' --from-image='scala-api:latest' --manual=true

Finally, we add the startup command to our container:

oc patch dc scala-api -p '{"spec": {"template": {"spec": {"containers": [{"name": "scala-api", "command": ["/bin/bash"], "args": ["/deployments/scala-api-1.0-SNAPSHOT/bin/scala-api", "-Dplay.http.secret.key=abcdefghijklmno"]}]}}}}'
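The secret key in the patch above is a placeholder. As a hedged sketch, a random value could be generated once and substituted into the patch instead. The SecretKey object and the 32-byte default are assumptions for illustration, not something the app or Play prescribes here:

```scala
import java.security.SecureRandom

// Hypothetical helper for producing a random value for -Dplay.http.secret.key.
object SecretKey {
  private val random = new SecureRandom()

  // Returns numBytes random bytes encoded as lowercase hex (two characters per byte).
  def generate(numBytes: Int = 32): String = {
    val bytes = new Array[Byte](numBytes)
    random.nextBytes(bytes)
    // Mask to an unsigned value so negative bytes format as two hex digits.
    bytes.map(b => "%02x".format(b & 0xff)).mkString
  }
}
```

Calling SecretKey.generate() returns a 64-character hex string that can replace the placeholder value in the oc patch command.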

Now, we expose the DeploymentConfig as a Service on port 9000, and then expose the Service as a Route:

oc expose dc scala-api --port=9000

oc expose svc scala-api

Now let's deploy our Scala API!

Endgame

Now we execute our pipeline:

We wait for our pipeline to complete, and test the API.

To get the route, we can use this command:

SCALA_API_ROUTE=$(oc get route scala-api -o jsonpath='{.spec.host}')

Then, we test with cURL.

curl -d '{"id": 2, "desc":"market", "paymentMethod":"Cash", "amount":10.5}' -H 'Content-Type: application/json' -X POST $SCALA_API_ROUTE/expenses/create -i

HTTP/1.1 201 Created

referrer-policy: origin-when-cross-origin, strict-origin-when-cross-origin

x-frame-options: DENY

x-xss-protection: 1; mode=block

x-content-type-options: nosniff

x-permitted-cross-domain-policies: master-only

date: Tue, 21 Dec 2021 19:54:51 GMT

content-type: application/json

content-length: 61

set-cookie: bde27596a92b9d07338188022e97e190=ade724ba226d21b44b79de398e75c3e5; path=/; HttpOnly

{"id":2,"desc":"market","paymentMethod":"Cash","amount":10.5}

That's it: another app, this time written in Scala, successfully deployed with OpenShift Pipelines. Next time, we'll talk about a pipeline generic enough for use with the feature pattern that is common to some workflows. With that, we'll start diving into some advanced concepts of OpenShift Pipelines, like interceptors, triggers, and more.

See you in the next article.
