The Downside of Version-less Optimistic Locking

Introduction

In my previous post, I demonstrated how you can scale optimistic locking through write-concerns splitting.

Version-less optimistic locking is a lesser-known Hibernate feature. In this post, I'll explain both the good and the bad parts of this approach.

Version-less optimistic locking

Optimistic locking is commonly associated with a logical or physical clocking sequence, for both performance and consistency reasons. The clocking sequence points to an absolute entity state version for all entity state transitions.
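For contrast with the version-less approach, here is a minimal in-memory sketch of a clocking sequence. It assumes a single row guarded by a numeric version. The class VersionedRow and its tryUpdate method are hypothetical stand-ins for a table row and for an `UPDATE ... SET version = version + 1 WHERE version = ?` statement; they are not Hibernate APIs.

```java
// Hypothetical in-memory row guarded by a version counter (a logical clock).
// VersionedRow and tryUpdate are illustrative names, not Hibernate APIs.
final class VersionedRow {
    private String value;
    private long version;

    VersionedRow(String value) {
        this.value = value;
    }

    synchronized long version() {
        return version;
    }

    synchronized String value() {
        return value;
    }

    // Mimics: UPDATE row SET value = ?, version = version + 1 WHERE version = ?
    // Returns the update count: 1 on success, 0 when the version has moved on.
    synchronized int tryUpdate(String newValue, long expectedVersion) {
        if (version != expectedVersion) {
            return 0;
        }
        value = newValue;
        version++;
        return 1;
    }
}
```

Suppose two readers load version 0. The first tryUpdate succeeds and advances the clock to 1. The second returns 0, which is the kind of update count Hibernate reports as an optimistic locking failure for a versioned entity.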

To support optimistic locking on legacy database schemas that lack a version column, Hibernate added a version-less concurrency control mechanism. To enable this feature, you have to configure your entities with the @OptimisticLocking annotation, whose type attribute takes one of the following values:

| Optimistic Locking Type | Description |
|---|---|
| ALL | All entity properties are going to be used to verify the entity version |
| DIRTY | Only current dirty properties are going to be used to verify the entity version |
| NONE | Disables optimistic locking |
| VERSION | Surrogate version column optimistic locking |

For version-less optimistic locking, you need to choose ALL or DIRTY.
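The difference between ALL and DIRTY lies in which columns end up in the UPDATE WHERE clause. The sketch below builds that statement from a load-time snapshot and the current entity state, under these assumptions:

- @DynamicUpdate is used, so the SET clause lists only the dirty columns.
- NULL handling (`is null` predicates) and bind values are left out.
- VersionlessLock and VersionlessUpdateSql are hypothetical names, not Hibernate classes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Objects;

// Hypothetical mirror of the two version-less OptimisticLockType values.
enum VersionlessLock { ALL, DIRTY }

final class VersionlessUpdateSql {
    // SET lists only the dirty columns; WHERE checks either the dirty columns
    // (DIRTY) or every column (ALL) against the values captured at load time.
    static String build(String table, String idColumn,
                        Map<String, Object> loaded, Map<String, Object> current,
                        VersionlessLock lock) {
        List<String> set = new ArrayList<>();
        List<String> where = new ArrayList<>();
        where.add(idColumn + "=?");
        for (Map.Entry<String, Object> e : loaded.entrySet()) {
            String column = e.getKey();
            boolean dirty = !Objects.equals(e.getValue(), current.get(column));
            if (dirty) {
                set.add(column + "=?");
            }
            if (dirty || lock == VersionlessLock.ALL) {
                where.add(column + "=?");
            }
        }
        return "update " + table + " set " + String.join(", ", set)
                + " where " + String.join(" and ", where);
    }
}
```

Consider a change to quantity only. With DIRTY, the sketch yields `update product set quantity=? where id=? and quantity=?`, the same shape as the statements logged in the use case below. With ALL, every loaded column is checked. This makes ALL stricter, since it also rejects concurrent changes to columns you did not touch.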

Use case

We are going to rerun the Product update use case I covered in my previous optimistic locking scaling article.

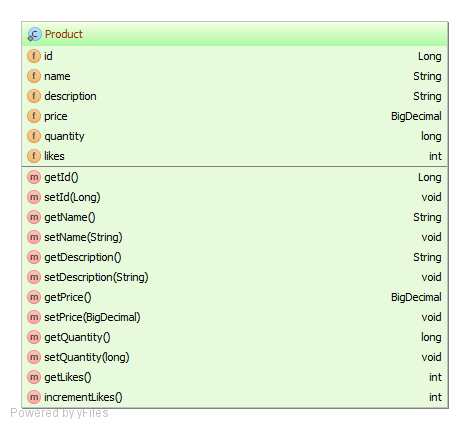

The Product entity looks like this. The first thing to notice is the absence of a surrogate version column. For concurrency control, we'll use DIRTY properties optimistic locking:

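// javax.persistence for Hibernate 4.x/5.x (Hibernate 6 uses jakarta.persistence)
import javax.persistence.Entity;
import javax.persistence.Table;
import org.hibernate.annotations.DynamicUpdate;
import org.hibernate.annotations.OptimisticLockType;
import org.hibernate.annotations.OptimisticLocking;

@Entity(name = "product")
@Table(name = "product")
@OptimisticLocking(type = OptimisticLockType.DIRTY)
@DynamicUpdate
public class Product {
    //code omitted for brevity
}

By default, Hibernate includes all table columns in every entity UPDATE, which allows it to reuse cached prepared statements. For dirty properties optimistic locking, the changed columns must be included in the UPDATE WHERE clause, and that's the reason for using the @DynamicUpdate annotation.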
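The trade-off behind @DynamicUpdate can be sketched by comparing the SQL text each mode produces. This is a simplified illustration and not Hibernate's statement generator; UpdateStatementShapes is a hypothetical helper. It shows that the static statement is identical whatever changed, while the dynamic one varies with the dirty columns.

```java
import java.util.List;
import java.util.Set;
import java.util.stream.Collectors;

// Hypothetical helper contrasting static and dynamic UPDATE statements.
final class UpdateStatementShapes {
    // Default (static) update: every column is listed, so the SQL text never changes
    // and a single cached prepared statement serves all updates of the entity.
    static String staticUpdate(String table, List<String> columns) {
        String set = columns.stream()
                .map(c -> c + "=?")
                .collect(Collectors.joining(", "));
        return "update " + table + " set " + set + " where id=?";
    }

    // @DynamicUpdate with DIRTY locking: only changed columns are set, and the same
    // columns are checked against their load-time values in the WHERE clause.
    static String dynamicDirtyUpdate(String table, List<String> columns, Set<String> dirty) {
        List<String> changed = columns.stream()
                .filter(dirty::contains)
                .collect(Collectors.toList());
        String set = changed.stream()
                .map(c -> c + "=?")
                .collect(Collectors.joining(", "));
        String check = changed.stream()
                .map(c -> " and " + c + "=?")
                .collect(Collectors.joining());
        return "update " + table + " set " + set + " where id=?" + check;
    }
}
```

Every distinct set of dirty columns yields a different statement text. That is the price paid for being able to put the changed columns in the WHERE clause.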

This entity is going to be changed by three concurrent users (Alice, Bob and Vlad), each one updating a distinct subset of the entity properties, as you can see in the following sequence diagram:

The SQL DML statement sequence goes like this:

#create tables

Query:{[create table product (id bigint not null, description varchar(255) not null, likes integer not null, name varchar(255) not null, price numeric(19,2) not null, quantity bigint not null, primary key (id))][]}

Query:{[alter table product add constraint UK_jmivyxk9rmgysrmsqw15lqr5b unique (name)][]}

#insert product

Query:{[insert into product (description, likes, name, price, quantity, id) values (?, ?, ?, ?, ?, ?)][Plasma TV,0,TV,199.99,7,1]}

#Alice selects the product

Query:{[select optimistic0_.id as id1_0_0_, optimistic0_.description as descript2_0_0_, optimistic0_.likes as likes3_0_0_, optimistic0_.name as name4_0_0_, optimistic0_.price as price5_0_0_, optimistic0_.quantity as quantity6_0_0_ from product optimistic0_ where optimistic0_.id=?][1]}

#Bob selects the product

Query:{[select optimistic0_.id as id1_0_0_, optimistic0_.description as descript2_0_0_, optimistic0_.likes as likes3_0_0_, optimistic0_.name as name4_0_0_, optimistic0_.price as price5_0_0_, optimistic0_.quantity as quantity6_0_0_ from product optimistic0_ where optimistic0_.id=?][1]}

#Vlad selects the product

Query:{[select optimistic0_.id as id1_0_0_, optimistic0_.description as descript2_0_0_, optimistic0_.likes as likes3_0_0_, optimistic0_.name as name4_0_0_, optimistic0_.price as price5_0_0_, optimistic0_.quantity as quantity6_0_0_ from product optimistic0_ where optimistic0_.id=?][1]}

#Alice updates the product

Query:{[update product set quantity=? where id=? and quantity=?][6,1,7]}

#Bob updates the product

Query:{[update product set likes=? where id=? and likes=?][1,1,0]}

#Vlad updates the product

Query:{[update product set description=? where id=? and description=?][Plasma HDTV,1,Plasma TV]}

Each UPDATE sets the latest changes and expects the changed columns to still hold the values they had at entity load time. Since every user modifies a different column, none of these UPDATE statements conflicts with the others. As simple and straightforward as it may look, the version-less optimistic locking strategy suffers from a very inconvenient shortcoming.
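The sequence above can be replayed against an in-memory row to see why all three updates go through. This is a minimal sketch with two assumptions: the row is a plain map, and updateDirty applies the DIRTY rule (each changed column must still hold its load-time value). InMemoryProductRow is a hypothetical class, not part of Hibernate.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

// Hypothetical single-row table; select() and updateDirty() stand in for the
// logged SELECT and "update product set <col>=? where id=? and <col>=?" statements.
final class InMemoryProductRow {
    private final Map<String, Object> columns = new HashMap<>();

    InMemoryProductRow(Map<String, Object> initial) {
        columns.putAll(initial);
    }

    // Returns a copy of the row, i.e. the snapshot a user loads.
    synchronized Map<String, Object> select() {
        return new HashMap<>(columns);
    }

    // DIRTY-style check: every changed column must still hold its load-time value.
    // Returns the update count, 1 on success and 0 on a conflict.
    synchronized int updateDirty(Map<String, Object> loaded, Map<String, Object> changes) {
        for (String column : changes.keySet()) {
            if (!Objects.equals(columns.get(column), loaded.get(column))) {
                return 0;
            }
        }
        columns.putAll(changes);
        return 1;
    }
}
```

Alice, Bob and Vlad each load the row and then change quantity, likes and description respectively, and all three updates return 1. If Alice later tried to change quantity again from her original snapshot, the check would fail, because quantity no longer holds the value she loaded.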

The detached entities anomaly

Version-less optimistic locking is feasible only as long as you don't close the Persistence Context. All entity changes must happen inside an open Persistence Context, with Hibernate translating entity state transitions into database DML statements.

Changes to detached entities can only be persisted if the entities become managed again in a new Hibernate Session, and for this we have two options:

- entity merging (using Session#merge(entity))

- entity reattaching (using Session#update(entity))

Both operations require a database SELECT to retrieve the latest database snapshot, so changes will be applied against the latest entity version. Unfortunately, this can also lead to lost updates, as we can see in the following sequence diagram:

Once the original Session is gone, we have no way of including the original entity state in the UPDATE WHERE clause. So newer changes might be overwritten by older ones, which is exactly what we wanted to avoid in the first place.
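The root cause is which snapshot the WHERE clause is compared against. The minimal sketch below models two paths on a plain map:

- flushManaged represents an open Persistence Context, which still holds the load-time snapshot.
- mergeDetached stands for a detached entity, which can only compare against a freshly selected row.

DetachedMergeDemo and its methods are hypothetical names, not Hibernate APIs.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

// Hypothetical single-row table used to contrast an open Persistence Context
// with merging a detached entity. None of these names are Hibernate APIs.
final class DetachedMergeDemo {
    private final Map<String, Object> row = new HashMap<>();

    DetachedMergeDemo(Map<String, Object> initial) {
        row.putAll(initial);
    }

    Map<String, Object> select() {
        return new HashMap<>(row);
    }

    // Dirty checking plus "update ... set <dirty> where id=? and <dirty>=<expected>".
    private int flush(Map<String, Object> expected, Map<String, Object> state) {
        Map<String, Object> dirty = new HashMap<>();
        for (Map.Entry<String, Object> e : state.entrySet()) {
            if (!Objects.equals(e.getValue(), expected.get(e.getKey()))) {
                dirty.put(e.getKey(), e.getValue());
            }
        }
        for (String column : dirty.keySet()) {
            if (!Objects.equals(row.get(column), expected.get(column))) {
                return 0; // optimistic locking failure
            }
        }
        row.putAll(dirty);
        return 1;
    }

    // Open Persistence Context: the snapshot taken at load time is still available.
    int flushManaged(Map<String, Object> loadedSnapshot, Map<String, Object> state) {
        return flush(loadedSnapshot, state);
    }

    // Detached entity: the original snapshot is gone, so the freshly selected row
    // becomes the expected state and the WHERE clause matches what is stored now.
    int mergeDetached(Map<String, Object> detachedState) {
        return flush(select(), detachedState);
    }
}
```

In both runs, Bob first changes the price from 199.99 to 21.22:

- If Alice merges a detached copy carrying price 1, the update succeeds and Bob's change is silently lost.
- If Alice flushes from the snapshot she originally loaded, the update count is 0 and the conflict is detected.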

Let’s replicate this issue for both merging and reattaching.

Merging

The merge operation consists of loading a new entity object from the database, attaching it to the current Persistence Context, and updating it with the given detached entity snapshot. Merging is also supported by JPA, and it tolerates entities that are already managed by the current Persistence Context. If the entity is already managed, the SELECT is not issued, as Hibernate guarantees session-level repeatable reads.
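The merge behaviour just described can be sketched with a map acting as the Persistence Context. This is an illustration only; MergeContextSketch is a hypothetical class, and selectCount simply counts the simulated SELECT statements. The sketch shows that a second merge of the same identifier reuses the managed copy instead of hitting the database again.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical Persistence Context sketch; the names are illustrative, not Hibernate's.
final class MergeContextSketch {
    private final Map<Long, Map<String, Object>> database;
    private final Map<Long, Map<String, Object>> managed = new HashMap<>();
    private int selectCount;

    MergeContextSketch(Map<Long, Map<String, Object>> database) {
        this.database = database;
    }

    // Reuse the managed copy if there is one (session-level repeatable read),
    // otherwise SELECT it first; then copy the detached state onto the managed copy.
    Map<String, Object> merge(long id, Map<String, Object> detachedState) {
        Map<String, Object> entity = managed.get(id);
        if (entity == null) {
            selectCount++;
            entity = new HashMap<>(database.get(id));
            managed.put(id, entity);
        }
        entity.putAll(detachedState);
        return entity; // the managed instance, never the detached argument
    }

    int selectCount() {
        return selectCount;
    }
}
```

Returning the managed copy rather than the argument mirrors the JPA contract, where EntityManager#merge returns the managed instance.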

#Alice inserts a Product and her Session is closed

Query:{[insert into Product (description, likes, name, price, quantity, id) values (?, ?, ?, ?, ?, ?)][Plasma TV,0,TV,199.99,7,1]}

#Bob selects the Product and changes the price to 21.22

Query:{[select optimistic0_.id as id1_0_0_, optimistic0_.description as descript2_0_0_, optimistic0_.likes as likes3_0_0_, optimistic0_.name as name4_0_0_, optimistic0_.price as price5_0_0_, optimistic0_.quantity as quantity6_0_0_ from Product optimistic0_ where optimistic0_.id=?][1]}

OptimisticLockingVersionlessTest - Updating product price to 21.22

Query:{[update Product set price=? where id=? and price=?][21.22,1,199.99]}

#Alice changes the Product price to 1 and tries to merge the detached Product entity

c.v.h.m.l.c.OptimisticLockingVersionlessTest - Merging product, price to be saved is 1

#A fresh copy is going to be fetched from the database

Query:{[select optimistic0_.id as id1_0_0_, optimistic0_.description as descript2_0_0_, optimistic0_.likes as likes3_0_0_, optimistic0_.name as name4_0_0_, optimistic0_.price as price5_0_0_, optimistic0_.quantity as quantity6_0_0_ from Product optimistic0_ where optimistic0_.id=?][1]}

#Alice overwrites Bob, therefore losing an update

Query:{[update Product set price=? where id=? and price=?][1,1,21.22]}

Reattaching

Reattaching is a Hibernate-specific operation. As opposed to merging, the given detached entity itself must become managed in another Session. If the Session has already loaded an entity with the same identifier, Hibernate will throw an exception. This operation also requires an SQL SELECT for loading the current database entity snapshot. The detached entity state will be copied onto the freshly loaded entity snapshot, and the dirty checking mechanism will trigger the actual DML update:

#Alice inserts a Product and her Session is closed

Query:{[insert into Product (description, likes, name, price, quantity, id) values (?, ?, ?, ?, ?, ?)][Plasma TV,0,TV,199.99,7,1]}

#Bob selects the Product and changes the price to 21.22

Query:{[select optimistic0_.id as id1_0_0_, optimistic0_.description as descript2_0_0_, optimistic0_.likes as likes3_0_0_, optimistic0_.name as name4_0_0_, optimistic0_.price as price5_0_0_, optimistic0_.quantity as quantity6_0_0_ from Product optimistic0_ where optimistic0_.id=?][1]}

OptimisticLockingVersionlessTest - Updating product price to 21.22

Query:{[update Product set price=? where id=? and price=?][21.22,1,199.99]}

#Alice changes the Product price to 10 and tries to reattach the detached Product entity

c.v.h.m.l.c.OptimisticLockingVersionlessTest - Reattaching product, price to be saved is 10

#A fresh copy is going to be fetched from the database

Query:{[select optimistic_.id, optimistic_.description as descript2_0_, optimistic_.likes as likes3_0_, optimistic_.name as name4_0_, optimistic_.price as price5_0_, optimistic_.quantity as quantity6_0_ from Product optimistic_ where optimistic_.id=?][1]}

#Alice overwrites Bob, therefore losing an update

Query:{[update Product set price=? where id=?][10,1]}
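The reattach sequence can be sketched the same way under a few stated assumptions:

- ReattachSketch is a hypothetical class.
- It throws IllegalStateException where Hibernate throws its own NonUniqueObjectException.
- The write it performs carries no price predicate, matching the UPDATE logged above.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

// Hypothetical sketch of reattaching (Session#update) on a single-row table.
final class ReattachSketch {
    private final Map<String, Object> row;
    private final Map<Long, Map<String, Object>> managed = new HashMap<>();

    ReattachSketch(Map<String, Object> initialRow) {
        this.row = new HashMap<>(initialRow);
    }

    // Fails if a different instance with the same id is already managed; otherwise
    // SELECTs the current row and writes the changed columns with "where id=?" only.
    void reattach(long id, Map<String, Object> detached) {
        Map<String, Object> existing = managed.get(id);
        if (existing != null && existing != detached) {
            throw new IllegalStateException("Another instance with id " + id + " is already managed");
        }
        managed.put(id, detached);
        Map<String, Object> snapshot = new HashMap<>(row); // the SELECT
        for (Map.Entry<String, Object> e : detached.entrySet()) {
            if (!Objects.equals(e.getValue(), snapshot.get(e.getKey()))) {
                row.put(e.getKey(), e.getValue()); // no optimistic predicate guards this write
            }
        }
    }

    Object column(String name) {
        return row.get(name);
    }
}
```

The row starts at Bob's price of 21.22, and Alice's detached copy (loaded at 199.99, then set to 10) still overwrites it. Reattaching a second instance with the same identifier in the same session is rejected.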

Conclusion

Version-less optimistic locking is a viable alternative as long as you can stick to a no-detached-entities policy. Combined with extended persistence contexts, this strategy can boost write performance even for a legacy database schema.

Code available on GitHub.

Published at DZone with permission of Vlad Mihalcea.
