Enterprise Integration: Part III

Learn about important enterprise integration concepts and enterprise schema in order to provide a foundation for the rest of the series.


Continuing on from Part 1 and Part 2, I’m going to introduce a number of integration terms, concepts, and patterns and provide a basic definition of each so that these references make sense in later articles. This won’t be an exhaustive list, merely a brief sample.

Common Integration Concepts and Patterns

Enterprise Service Bus

The Enterprise Service Bus (ESB) is a Service Oriented Architecture (SOA) design concept that provides a robust integration capability for systems within an enterprise ecosystem.

The principle is to provide a mechanism that allows some applications to publish information and others to receive and process it using well-defined interfaces. This type of system integration, a form of distributed computing, promotes a flexible approach to communication between applications.

The ESB also provides endpoints that are used to integrate two or more systems (service orchestration), particularly for services or APIs that publish reusable, well-defined interfaces. Throughout this document and in the various system diagrams, the term ESB refers both to a messaging bus and to a collection of integrated web services and Web APIs.
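
To make the publish/receive principle concrete, here is a minimal in-process sketch in Python. It is not how any particular ESB product works: `MessageBus`, the `order.created` topic, and the message fields are hypothetical, and a real bus would typically add durable queues, routing, and transformation. The point it illustrates is that publishers and subscribers share only a topic name and a message shape, never a direct reference to each other.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

# A handler receives one message (a dict) and returns nothing.
Handler = Callable[[Dict[str, Any]], None]


class MessageBus:
    """Hypothetical in-process stand-in for an ESB's publish/subscribe channel."""

    def __init__(self) -> None:
        # Map each topic name to the handlers subscribed to it.
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: Dict[str, Any]) -> int:
        # Deliver the message to every subscriber; return how many received it.
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


if __name__ == "__main__":
    bus = MessageBus()
    billing_inbox: List[Dict[str, Any]] = []
    shipping_inbox: List[Dict[str, Any]] = []
    # Two independent consumers of the same event; the publisher knows neither.
    bus.subscribe("order.created", billing_inbox.append)
    bus.subscribe("order.created", shipping_inbox.append)
    delivered = bus.publish("order.created", {"orderId": "A-100", "total": 42.5})
    print(delivered, billing_inbox, shipping_inbox)
```

Adding a third consumer means one more `subscribe` call; the publishing application does not change, which is the flexibility the ESB pattern aims for.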

Application Programming Interfaces (APIs)

An API is a defined software interface that selectively exposes logic, data, and other functionality, and is commonly implemented as a service.

APIs to and from commercial off-the-shelf (COTS) products are increasingly becoming the norm across many proprietary software packages (including those in the cloud), and they ease the burden on developers as well as on the apps and UI/UX layers that consume them. The primary objective of an API is to open information to a wide variety of endpoint consumers and to allow information from multiple sources to be combined, while abstracting those consumers from the source systems.
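
As a rough illustration of an API that selectively exposes data while abstracting the source system, the following sketch wraps a source system's internal records. `CustomerApi`, `CustomerSummary`, and the `FNAME`/`LNAME`/`CREDIT_LIMIT` columns are all hypothetical; the idea is that consumers see a stable contract even if the source columns change.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class CustomerSummary:
    # The public contract the API exposes to consumers.
    customer_id: str
    display_name: str


class CustomerApi:
    """Hypothetical API facade over a source system's internal records."""

    def __init__(self, source_rows: Dict[str, Dict[str, str]]) -> None:
        # Internal rows keep the source system's own column names.
        self._rows = source_rows

    def get_customer(self, customer_id: str) -> Optional[CustomerSummary]:
        row = self._rows.get(customer_id)
        if row is None:
            return None
        # Expose only selected data; internal fields such as CREDIT_LIMIT stay hidden.
        return CustomerSummary(
            customer_id=customer_id,
            display_name=f"{row['FNAME']} {row['LNAME']}",
        )


if __name__ == "__main__":
    api = CustomerApi({"C1": {"FNAME": "Ada", "LNAME": "Lovelace", "CREDIT_LIMIT": "5000"}})
    print(api.get_customer("C1"))
```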

Extract, Transform and Load (ETL)

ETL is a common integration pattern used to move data from a source to one or more destinations, transforming the data from one format (source) to another (destination) as part of the extraction and transfer between systems or applications. This process is usually managed by some kind of middleware and is often transactional in nature.

Integration activities incorporating ETL patterns are commonly found when transferring data to and from structured data stores, such as databases. Advantages of ETL include repeatability, consistency, error handling, and transactional support. ETL is often favored for handling large volumes of data, and ETL processes can usually be tuned to handle those volumes efficiently.
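
A minimal ETL sketch, assuming a hypothetical CSV extract and an in-memory SQLite destination, shows the three stages and a transactional load. The column names and the `payments` table are illustrative only; in practice this work is usually handled by dedicated middleware rather than hand-written code.

```python
import csv
import io
import sqlite3
from typing import Dict, Iterable, Iterator, Tuple

# Hypothetical source extract using the source system's own column names.
SOURCE_CSV = """cust_no,amount_cents,currency
1001,12550,aud
1002,990,usd
"""


def extract(text: str) -> Iterator[Dict[str, str]]:
    # Extract: read rows from the source format.
    yield from csv.DictReader(io.StringIO(text))


def transform(rows: Iterable[Dict[str, str]]) -> Iterator[Tuple[int, float, str]]:
    # Transform: convert cents to a decimal amount and upper-case currency codes.
    for row in rows:
        yield int(row["cust_no"]), int(row["amount_cents"]) / 100, row["currency"].upper()


def load(conn: sqlite3.Connection, records: Iterable[Tuple[int, float, str]]) -> None:
    # Load: insert the whole batch in one transaction; any error rolls it back.
    with conn:
        conn.executemany(
            "INSERT INTO payments (customer_id, amount, currency) VALUES (?, ?, ?)",
            records,
        )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE payments (customer_id INTEGER, amount REAL, currency TEXT)")
    load(conn, transform(extract(SOURCE_CSV)))
    print(conn.execute("SELECT * FROM payments").fetchall())
```

Because `load` runs inside `with conn:`, a failure partway through a batch (for example, a non-numeric amount) rolls back every row from that batch, which is one way to get the repeatability and transactional behavior described above.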

Enterprise Schema

Guiding Principle

In order to promote and provide a robust set of reusable services and interfaces as part of the Enterprise Service Bus’ Service Oriented Architecture, interchange between integrated services will, where possible, use a common definition. This promotes reusability and standardizes business data structures.

Logical Data Models

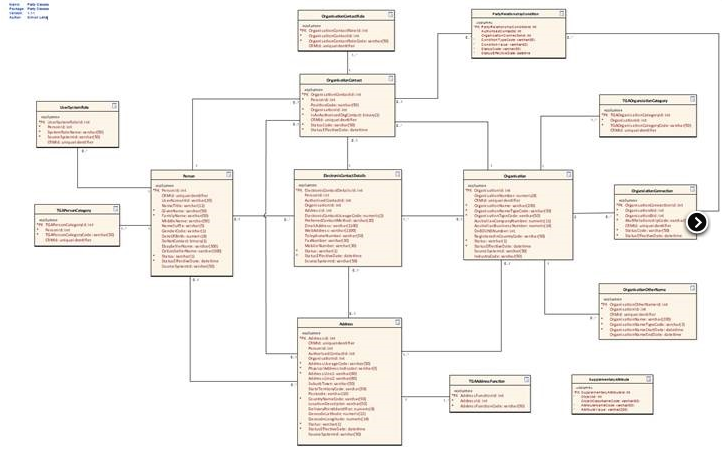

A logical data model is often incorporated into the enterprise in the form of guidance for data schemas that are to be implemented uniformly across common web service contracts, interfaces, and physical models.

Logical models typically focus only on defining the data concepts and rules related to a business domain, and ignore technology- or implementation-specific concerns.

An example of a logical data model is included below.

Figure 2 – An example of a logical data model.

Orchestration or integration service endpoints (such as specific process handlers) should attempt to transform incoming data into a common interchange format derived from the logical model.
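
The following Python sketch shows an endpoint transforming an incoming payload into a common interchange entity. It assumes a hypothetical `Customer` entity derived from the logical model and a hypothetical CRM payload shape; neither is taken from the diagrams in this article.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass(frozen=True)
class Customer:
    # Hypothetical common interchange entity derived from a logical "Customer" concept.
    customer_id: str
    full_name: str
    email: str


def from_crm_payload(payload: Dict[str, Any]) -> Customer:
    # Hypothetical source format: a CRM that nests names and uses its own keys.
    return Customer(
        customer_id=str(payload["id"]),
        full_name=f"{payload['name']['first']} {payload['name']['last']}",
        email=payload["contact"]["emailAddress"].strip().lower(),
    )


if __name__ == "__main__":
    incoming = {
        "id": 7,
        "name": {"first": "Grace", "last": "Hopper"},
        "contact": {"emailAddress": " Grace@Example.com "},
    }
    print(from_crm_payload(incoming))
```

Each additional source system would get its own small mapping function, while downstream services only ever see `Customer`.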

Additional Schema

In some cases, it may not be possible to map data uniformly into a pre-existing common format, for example when leveraging the capabilities of COTS products. In these cases, supplemental schemas may need to be developed on a system-by-system basis.

Applications that are commercial off-the-shelf (COTS) products may need to apply their own schemas or data definitions, particularly where heavy customization would significantly weaken out-of-the-box functionality, as is often the case with abstracted COTS products. Integration with such systems should attempt to map from the product schema to a common schema definition where possible.

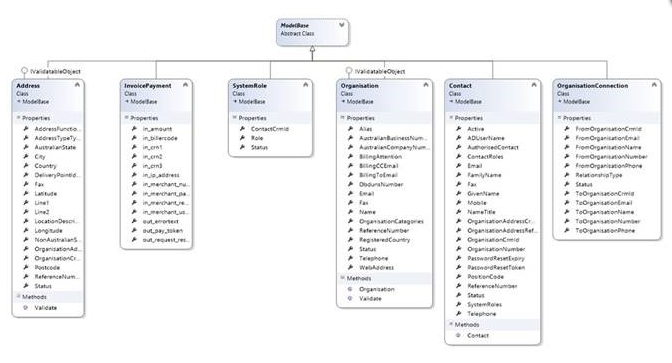
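
One way to map a COTS product's schema onto a common schema, while keeping product-specific data in a supplemental structure, is sketched below. The `ACCT_*` field names, `CommonAccount`, and the `extensions` dictionary are hypothetical choices for illustration, not features of any particular product.

```python
from dataclasses import dataclass, field
from typing import Any, Dict

# Hypothetical mapping from a COTS product's field names to common-schema names.
COTS_TO_COMMON = {"ACCT_NUM": "account_id", "ACCT_NAME": "account_name"}


@dataclass
class CommonAccount:
    account_id: str
    account_name: str
    # Supplemental, product-specific data with no home in the common schema.
    extensions: Dict[str, Any] = field(default_factory=dict)


def map_cots_record(record: Dict[str, Any]) -> CommonAccount:
    mapped: Dict[str, Any] = {}
    extras: Dict[str, Any] = {}
    for key, value in record.items():
        if key in COTS_TO_COMMON:
            mapped[COTS_TO_COMMON[key]] = value
        else:
            # Keep unmapped fields instead of silently dropping them.
            extras[key] = value
    # Raises TypeError if a required common field could not be mapped.
    return CommonAccount(**mapped, extensions=extras)


if __name__ == "__main__":
    print(map_cots_record({"ACCT_NUM": "A9", "ACCT_NAME": "Acme", "Z_CUSTOM_TIER": "gold"}))
```

Keeping unmapped fields in `extensions` rather than discarding them leaves room for a per-system supplemental schema without forcing changes to the common one.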

Canonical Data Models

A canonical model is a design pattern that eases communication between enterprise applications. It is based on the enterprise and business data model(s) within an enterprise.

A canonical model is usually based on a logical model, and models entity relationships and rules while taking into account technology-specific restrictions or optimizations. It is usually implemented so that it can be consumed directly by system interfaces and reflected in interchange.

Data is structured, formatted and validated against rules set by the canonical model. Canonical models should be used, where appropriate, to ensure consistency of business data and services.

Figure 3 – An example of a form of canonical model.

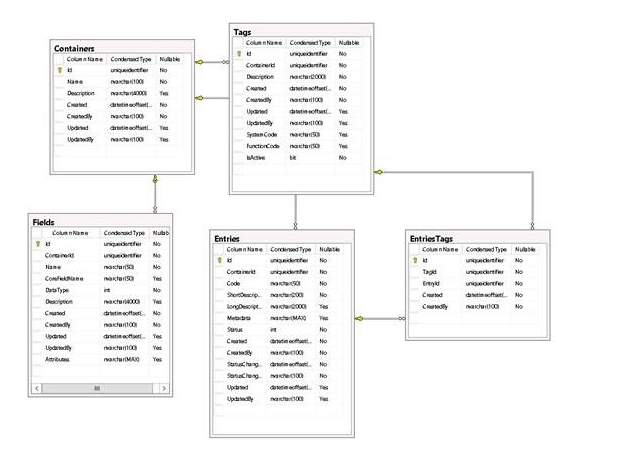
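
A canonical model can be reflected directly in code and used to validate interchange data. The sketch below assumes a hypothetical `CanonicalInvoice` entity with three example rules; a real canonical model would derive its entities and rules from the enterprise's own logical model.

```python
import re
from dataclasses import dataclass
from datetime import date
from decimal import Decimal
from typing import List

# Shape check only: three upper-case letters (not validated against ISO 4217).
CURRENCY_CODE = re.compile(r"[A-Z]{3}")


@dataclass(frozen=True)
class CanonicalInvoice:
    # Hypothetical canonical entity; typed fields fix the structure and format.
    invoice_id: str
    issue_date: date
    amount: Decimal
    currency: str

    def validate(self) -> List[str]:
        """Return a list of rule violations; an empty list means the record is valid."""
        errors: List[str] = []
        if not self.invoice_id:
            errors.append("invoice_id is required")
        if self.amount <= 0:
            errors.append("amount must be positive")
        if not CURRENCY_CODE.fullmatch(self.currency):
            errors.append("currency must be a three-letter upper-case code")
        return errors


if __name__ == "__main__":
    print(CanonicalInvoice("INV-1", date(2024, 1, 31), Decimal("10.00"), "AUD").validate())
    print(CanonicalInvoice("", date(2024, 1, 31), Decimal("0"), "aud").validate())
```

Returning a list of violations rather than raising on the first one lets an integration endpoint report every problem with a message in a single response.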

Physical Data Models

A physical data model represents the real-world implementation of a data model, typically where the data is stored at rest, for example within a database or a software application. It incorporates the logic and definitions provided by a logical or canonical model, but is typically defined and tuned for real-world performance and security concerns.

For example, a physical model is likely to incorporate fields that are not directly relevant to the business value implied by a class or table, such as audit or concurrency fields.

Below is an example of a physical data model implemented within a database.

Figure 4 – An example of a physical data model.
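
To illustrate the audit and concurrency fields mentioned above, here is a sketch of a physical table in SQLite with `created_at`/`updated_at` audit columns and a `row_version` column used for optimistic concurrency. The table, column, and function names are hypothetical.

```python
import sqlite3

# Hypothetical physical table: business columns plus audit and concurrency columns.
SCHEMA = """
CREATE TABLE customer (
    customer_id  TEXT PRIMARY KEY,
    full_name    TEXT NOT NULL,
    email        TEXT NOT NULL,
    created_at   TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP,  -- audit
    updated_at   TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP,  -- audit
    row_version  INTEGER NOT NULL DEFAULT 1                -- optimistic concurrency
)
"""


def update_email(conn: sqlite3.Connection, customer_id: str,
                 new_email: str, expected_version: int) -> bool:
    # Succeeds only if no one else has changed the row since it was read.
    with conn:
        cur = conn.execute(
            """UPDATE customer
               SET email = ?, updated_at = CURRENT_TIMESTAMP, row_version = row_version + 1
               WHERE customer_id = ? AND row_version = ?""",
            (new_email, customer_id, expected_version),
        )
    return cur.rowcount == 1


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    with conn:
        conn.execute(
            "INSERT INTO customer (customer_id, full_name, email) VALUES (?, ?, ?)",
            ("C1", "Ada Lovelace", "ada@example.com"),
        )
    print(update_email(conn, "C1", "ada@new.example", expected_version=1))  # first writer wins
    print(update_email(conn, "C1", "stale@example", expected_version=1))    # stale version rejected
```

The `AND row_version = ?` condition is what makes the concurrency column useful: a writer holding a stale version updates zero rows and can detect the conflict instead of overwriting someone else's change.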

Published at DZone with permission of Rob Sanders, DZone MVB. See the original article here.