Facebook Announces Apollo at QCon NY 2014

Join the DZone community and get the full member experience.

Join For Freetoday i attended a talk 'how facebook scales big data systems' at qcon ny 2014. it turns out that jeff johnson took this opportunity to announce a new project by facebook: apollo. the following is a rough transcript of the talk.

apollo is a system facebook has been working on for about a year in their new york office. the tagline of apollo is 'consistency at scale'. its design is heavily influenced by their experience with hbase.

why?

facebook generally favors cp systems. they have four datacenters using master/slave style replication. missing the 'a'vailability usually isn't a big deal within a datacenter, but across dcs it becomes troublesome.

the wishlist that lead to apollo is roughly as follows:

- we need some kind of transactions

- acked writes should be eventually visible and not lost

- availability

so the question really boils down to: can we layer ap on top of cp?

apollo

apollo itself is written in 100% c++11, also using thrift2. the design is based on having a hierarchy of shards. these form the basic building block of apollo. essentially like hdfs (regions) are the building block for hbase. it supports thousands of shards, scaling from 3 servers to ~10k servers.

the shards use paxos style quorum protocols (cp). raft is used for consensus. fun sidenote: this turned out to be not much simpler than multi-paxos, even though that was the expectation.

rocksdb (a key-val store, log-structured storage) or mysql can be used as underlying storage. the storage primitives offered by apollo are:

- binary value

- map

- pqueue

- crdts

use cases

the apparent sweetspot for apollo is online, low-latency storage of aforementioned data structures. especially where atomic transformations of these data structures are required. the size of 'records' should range from a byte to about a megabyte.

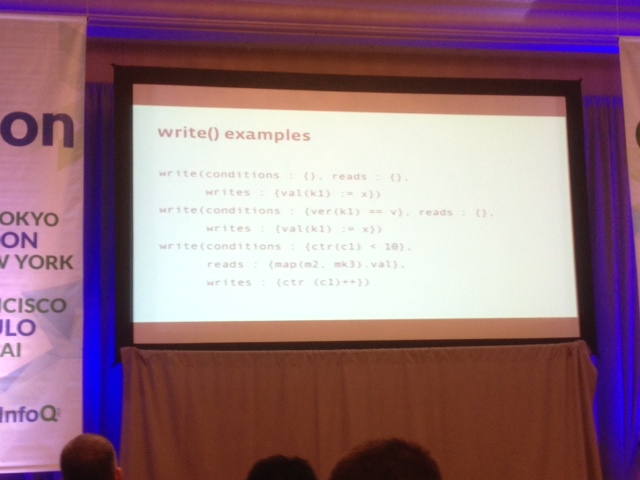

every operation on the apollo api is atomic. for exampe, you can pass in conditions into a read/write call. as an example, a condition can assert that a map contains a certain key. the operation will only go through if the condition (atomically) holds.

first write call mimics a traditional last-write wins kv store.

second one does optimistic concurrency, last one allows for complex

transactional behavior. note that a write can also return (consistent)

reads!

first write call mimics a traditional last-write wins kv store.

second one does optimistic concurrency, last one allows for complex

transactional behavior. note that a write can also return (consistent)

reads!

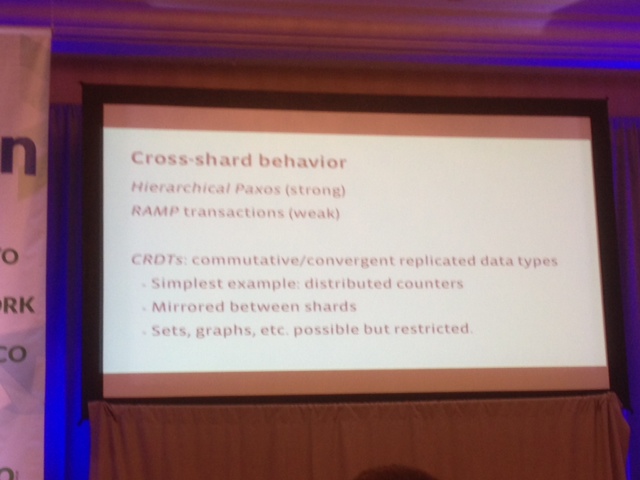

atomicity works across shards, at varying levels:

the idea is that apollo provides cp locally and ap between remote sites.

fault tolerant state machines

in addition to just storing and querying data, apollo has another unique feature: it allows execution of user submitted code in the form of state machines. these fault tolerant state machines are primarily used for apollo's internal implementation. e.g. to do shard creation/destruction, load balancing, data migration, coordinating cross-shard transactions.

but users can submit ftsms too. these are persistently stored, so it tolerates node failures. a shard owns a ftsm. a state machine may have side effects (e.g. call an external api), but all state changes are submitted through the shard replication mechanism. the exact way to create these ftsms were not discussed.

applications of apollo at facebook

one of the current applications of apollo at facebook is as a reliable in-memory database. for this use case it is setup with a raft write-ahead-log on a linux tmpfs. they replace memcache(d) with this setup, gaining transactional support in the caching layer.

furthermore it powers taco, an in-memory version of their tao graph. it supports billions of reads per second and millions of writes this way.

a more persistent application of apollo is to provide reliable queues, for example to manage the push notifications.

open source?

currently, apollo is developed internally at facebook. no firm claims were made during the talk that it will be opensourced. it was mentioned as a possibility after internal development settles down.

this was a quick write-up, twenty minutes after the talk ended. any factual errors are solely caused by me misinterpreting or mishearing stuff. i expect more information to trickle out of facebook engineering soon. that said, i hope this post paints an adequate initial picture of facebook's apollo!

Published at DZone with permission of Sander Mak, DZone MVB. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments