Go Microservices, Part 10: Centralized Logging

Let's continue with our microservices project by learning about a logging strategy based on Logrus, the Docker GELF logging driver, and Loggly.

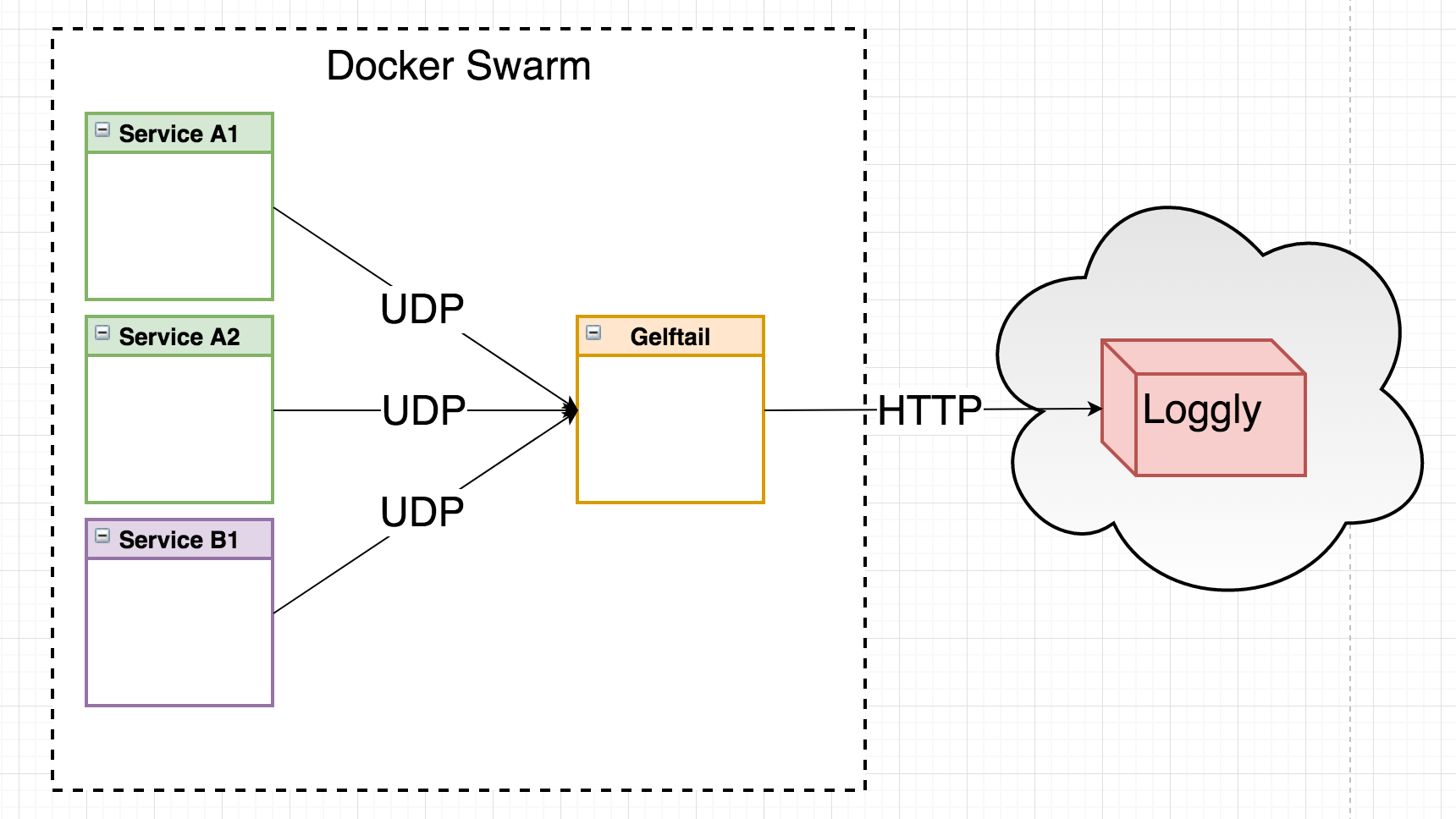

In this part of the Go microservices blog series, we’ll introduce a logging strategy for our Go microservices based on Logrus, the Docker GELF logging driver, and the “logging as a service” provider Loggly.

Introduction

Logs. You never know how much you miss them until you do. Having guidelines for your team about what to log, when to log, and how to log may be one of the key factors in producing a maintainable application. Then, microservices happen.

While dealing with one or a few log files for a monolithic application is usually manageable (though exceptions exist…), consider doing the same for a microservice-based application with potentially hundreds or even thousands of service containers, each producing logs. Don’t even consider going big if you don’t have a solution for collecting and aggregating your logs in a well-structured manner.

Thankfully, a lot of smart people have already thought about this. The stack formerly known as ELK is perhaps one of the most well-known within the open source community. Elasticsearch, Logstash, and Kibana form the Elastic Stack, which I recommend for both on-premise and cloud deployments. However, there are probably dozens of blog posts about ELK already, so in this particular post we’ll explore a LaaS (Logging as a Service) solution for our centralized logging needs, based on four parts:

Contents

- Logrus - a logging framework for Go

- Docker GELF driver - logging driver for the Graylog Extended Log Format

- “gelftail” - a lightweight log aggregator we’re going to build in this blog post. Of course, we’ll write it in Go.

- Loggly - a LaaS provider. It offers capabilities for managing and acting on log data comparable to those of similar services.

Solution overview

Source code

The finished source can be cloned from GitHub:

> git clone https://github.com/callistaenterprise/goblog.git

> git checkout p10

1. Logrus - a logging API for Go

Until now, our Go microservices have typically logged using either the “fmt” or the “log” package, writing to stdout or stderr. We want something that gives us more fine-grained control of log levels and formatting. In the Java world, many (most?) of us have dealt with frameworks such as log4j, logback, and slf4j. Logrus is our logging API of choice for this blog series. It provides roughly the same type of functionality as the APIs I just mentioned regarding levels, formatting, hooks, etc.

Using Logrus

One of the neat things about Logrus is that it offers the same printing functions (Println, Printf, and so on) that we’ve used for logging up until now through fmt and log. This means we can more or less use Logrus as a drop-in replacement. Start by making sure your GOPATH is correct before fetching the Logrus source so it’s installed into your GOPATH:

> go get github.com/sirupsen/logrus

Update source

We’ll do this the old-school way. For /common, /accountservice, and /vipservice respectively, use your IDE or text editor to do a global search-and-replace where fmt.* and log.* are replaced by logrus.*. Now you should have a lot of logrus.Println and logrus.Printf calls. Even though this works just fine, I suggest using Logrus’s more fine-grained support for severities such as INFO, WARN, DEBUG, etc. For example:

| fmt | log | logrus |
| --- | --- | --- |
| Println | Println | Infoln |
| Printf | Printf | Infof |
| Error | | Errorln |

There is one exception: fmt.Errorf, which is used to produce error values rather than log output. Do not replace fmt.Errorf.

Update imports using goimports

Given that we’ve replaced a lot of log.Println and fmt.Println with logrus.Println (and other logging functions), we now have a lot of unused imports that will give us compile errors. Instead of fixing the files one at a time, we can use a nifty little tool that can be downloaded and executed on the command line (or integrated into your IDE of choice): goimports.

Again, make sure your GOPATH is correct. Then use go get to download goimports:

    go get golang.org/x/tools/cmd/goimports

This will install goimports into your $GOPATH/bin folder. Next, go to the root of the accountservice or vipservice service, e.g.:

    cd $GOPATH/src/github.com/callistaenterprise/goblog/accountservice

Then run goimports with the “-w” flag, which writes the changes directly to the source files. The **/*.go pattern picks up the .go files in subfolders; a fully recursive match requires a shell with globstar support, such as zsh or bash with shopt -s globstar.

    $GOPATH/bin/goimports -w **/*.go

Repeat for all our microservice code, including the /common folder.

Run go build to make sure the service compiles:

    go build

Configuring Logrus

If we don’t configure Logrus at all, it’s going to output log statements in plain text. Given:

    logrus.Infof("starting our service...")

it will output:

    INFO[0000] starting our service...

where 0000 is the number of seconds since service startup. That’s not what I want; I want a datetime there. So we’ll have to supply a formatter.

The init() function is a good place for that kind of setup:

    func init() {
        logrus.SetFormatter(&logrus.TextFormatter{
            TimestampFormat: "2006-01-02T15:04:05.000",
            FullTimestamp:   true,
        })
    }

New output:

    INFO[2017-07-17T13:22:49.164] starting our service...

Much better. However, in our microservice use case, we want the log statements to be easily parsable so we can eventually send them to our LaaS of choice and have them indexed, sorted, grouped, aggregated, etc. Therefore, we’ll want to use a JSON formatter instead whenever we’re not running the microservice in standalone (i.e. -profile=dev) mode.
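The TimestampFormat value is an ordinary Go time layout, written in terms of Go's reference time (Mon Jan 2 15:04:05 MST 2006). The following standard-library-only sketch shows how that layout renders a timestamp; formatTimestamp and exampleTime are hypothetical helper names used only for this illustration, not part of Logrus or the repo.

```go
package main

import (
	"fmt"
	"time"
)

// logTimestampLayout is the same layout string used for TimestampFormat.
// Go layouts describe the format by showing how the reference time
// (Jan 2 2006, 15:04:05) should look.
const logTimestampLayout = "2006-01-02T15:04:05.000"

// formatTimestamp is a hypothetical helper that renders t with the layout.
func formatTimestamp(t time.Time) string {
	return t.Format(logTimestampLayout)
}

// exampleTime returns a fixed point in time so the output is reproducible.
func exampleTime() time.Time {
	return time.Date(2017, time.July, 17, 13, 22, 49, 164000000, time.UTC)
}

func main() {
	fmt.Println(formatTimestamp(exampleTime())) // prints 2017-07-17T13:22:49.164
}
```

The ".000" suffix in the layout is what produces the three-digit millisecond part.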

Let’s change that init() code somewhat so it’ll use a JSON formatter instead unless the “-profile=dev” flag is passed.

    func init() {
        profile := flag.String("profile", "test", "environment profile")
        // Parse before reading *profile; otherwise it always holds the default "test".
        // This assumes every other flag has already been registered when init() runs.
        flag.Parse()
        if *profile == "dev" {
            logrus.SetFormatter(&logrus.TextFormatter{
                TimestampFormat: "2006-01-02T15:04:05.000",
                FullTimestamp:   true,
            })
        } else {
            logrus.SetFormatter(&logrus.JSONFormatter{})
        }
    }

Output:

    {"level":"info","msg":"starting our service...","time":"2017-07-17T16:03:35+02:00"}

That’s about it. Feel free to read the Logrus docs for more comprehensive examples.
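To illustrate why JSON output is easier to process downstream than the plain-text format, here is a small sketch that decodes one such log line using only the standard library. The logLine type and parseLogLine function are made up for this example; they mirror the three keys visible in the sample output and are not part of Logrus.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// logLine mirrors the three keys seen in the JSON-formatted log output.
type logLine struct {
	Level string `json:"level"`
	Msg   string `json:"msg"`
	Time  string `json:"time"`
}

// parseLogLine decodes a single JSON-formatted log statement.
// It returns an error for anything that is not valid JSON,
// such as a plain-text log line.
func parseLogLine(raw string) (logLine, error) {
	var l logLine
	err := json.Unmarshal([]byte(raw), &l)
	return l, err
}

func main() {
	raw := `{"level":"info","msg":"starting our service...","time":"2017-07-17T16:03:35+02:00"}`
	l, err := parseLogLine(raw)
	if err != nil {
		fmt.Println("not valid JSON:", err)
		return
	}
	fmt.Printf("%s [%s] %s\n", l.Time, l.Level, l.Msg)
}
```

A log backend can index on fields such as level without any custom parsing rules, which is the property we rely on later in this post.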

It should be made clear that the standard Logrus logger doesn’t provide the kind of fine-grained control you’re perhaps used to from other platforms, for example changing the output from a given package to DEBUG through configuration. It is, however, possible to create scoped logger instances, which makes more fine-grained configuration possible, e.g.:

    var logger = logrus.New() // <-- create logger instance

    func init() {
        // some other init code...

        // example 1 - using global logrus api
        logrus.Infof("successfully initialized")

        // example 2 - using logger instance
        logger.Infof("successfully initialized")
    }

(Example code, not in repo.)

By using a logger instance, it’s possible to configure the application-level logging in a more fine-grained way. However, I’ve chosen to do “global” logging for now using logrus.* for this part of the blog series.

2. Docker GELF driver

What’s GELF? It’s an acronym for Graylog Extended Log Format, the native log format of Graylog, which Logstash can also ingest. Basically, it’s logging data structured as JSON. In the context of Docker, we can configure a Docker Swarm mode service to do its logging using various drivers, which means that everything written within a container to stdout or stderr is “picked up” by Docker Engine and processed by the configured logging driver. This processing includes adding a lot of Docker-specific metadata about the container, Swarm node, service, etc. A sample message may look like this:

    {
        "version":"1.1",
        "host":"swarm-manager-0",
        "short_message":"starting http service at 6868",
        "timestamp":1.487625824614e+09,
        "level":6,
        "_command":"./vipservice-linux-amd64 -profile=test",
        "_container_id":"894edfe2faed131d417eebf77306a0386b43027e0bdf75269e7f9dcca0ac5608",
        "_container_name":"vipservice.1.jgaludcy21iriskcu1fx9nx2p",
        "_created":"2017-02-20T21:23:38.877748337Z",
        "_image_id":"sha256:1df84e91e0931ec14c6fb4e559b5aca5afff7abd63f0dc8445a4e1dc9e31cfe1",
        "_image_name":"someprefix/vipservice:latest",
        "_tag":"894edfe2faed"
    }
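The numeric "level" field in a GELF message uses the standard syslog severity scale, so the 6 in the sample corresponds to "info". As far as I know, the Docker GELF driver derives this value from the output stream (stdout versus stderr) rather than from the content of the message, which is one reason we later extract the real level from the Logrus output. A minimal lookup sketch follows; severityName is a hypothetical helper, not something provided by Docker or Logrus.

```go
package main

import "fmt"

// syslogSeverities lists the standard syslog severity names,
// indexed by their numeric value (0 = most severe).
var syslogSeverities = []string{
	"emergency", // 0
	"alert",     // 1
	"critical",  // 2
	"error",     // 3
	"warning",   // 4
	"notice",    // 5
	"info",      // 6
	"debug",     // 7
}

// severityName translates a numeric GELF/syslog level to its name.
// Values outside 0..7 yield "unknown".
func severityName(level int) string {
	if level < 0 || level >= len(syslogSeverities) {
		return "unknown"
	}
	return syslogSeverities[level]
}

func main() {
	fmt.Println(severityName(6)) // prints info
}
```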

Let’s take a look at how to change our “docker service create” command in copyall.sh to use the GELF driver:

    docker service create \
        --log-driver=gelf \
        --log-opt gelf-address=udp://192.168.99.100:12202 \
        --log-opt gelf-compression-type=none \
        --name=accountservice --replicas=1 --network=my_network -p=6767:6767 someprefix/accountservice

- --log-driver=gelf tells Docker to use the GELF driver.

- --log-opt gelf-address tells Docker where to send all log statements. In the case of GELF, we’ll use the UDP protocol and tell Docker to send log statements to a service on the defined IP:port. This service is typically something such as Logstash, but in our case we’ll build our own little log aggregation service in the next section.

- --log-opt gelf-compression-type tells Docker whether to use compression before sending the log statements. To keep things simple, we use no compression in this blog part.

That’s more or less it! Any microservice instance created of the accountservice type will now send everything written to stdout/stderr to the configured endpoint. Do note that this means we can’t use the docker logs [containerid] command anymore to check the log of a given service, since the (default) logging driver isn’t being used anymore.

We should add these GELF log driver configuration statements to all docker service create commands in our shell scripts, e.g. copyall.sh.

There’s one kludgy issue with this setup, though: the use of a hard-coded IP address to the Swarm manager. Regrettably, even if we deploy our “gelftail” service as a Docker Swarm mode service, we can’t address it using its logical name when declaring a service. We can probably work around this drawback somehow using DNS or similar; feel free to enlighten us in the comments if you know how.

Using GELF with Logrus hooks

If you really need to make your logging more container-orchestrator agnostic, an option is to use the GELF plugin for Logrus to do GELF logging using hooks. In that setup, Logrus will format log statements to the GELF format by itself and can also be configured to transmit them to a UDP address, just like when using the Docker GELF driver. However, by default Logrus has no notion of running in a containerized context, so we’d basically have to figure out how to populate all that juicy metadata ourselves, perhaps using calls to the Docker Remote API or operating system functions.

I strongly recommend using the Docker GELF driver. Even though it ties your logging to Docker Swarm mode, other container orchestrators probably have similar support for collecting stdout/stderr logs from containers and forwarding them to a central logging service.

3. Log collection and aggregation using “gelftail”

The UDP server where all log statements are sent is often Logstash or similar, which provides powerful control over transformation, aggregation, filtering, etc. of log statements before storing them in a backend such as Elasticsearch or pushing them to a LaaS.

However, Logstash isn’t exactly lightweight, and in order to keep things simple (and fun!) we’re going to code our very own little “log aggregator.” I’m calling it “gelftail.” The name comes from the fact that once I had configured the Docker GELF driver for all my services, I had no way of seeing what was being logged anymore! I decided to write a simple UDP server that would pick up all data sent to it and dump it to stdout, which I could then look at using docker logs, i.e. a stream of all log statements from all services. Not very practical, but at least better than not seeing any logs at all.
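A "pick up everything and dump it to stdout" UDP server of that kind can be sketched with the standard library alone. This is an illustration under my own assumptions, not the code from the repo: startUDPServer here returns an error as well as the connection, and roundTrip is a hypothetical helper that sends one datagram over loopback so the example can run without Docker.

```go
package main

import (
	"fmt"
	"net"
	"time"
)

// startUDPServer opens a UDP socket on the given port (all interfaces).
// Passing "0" lets the operating system pick a free port.
func startUDPServer(port string) (*net.UDPConn, error) {
	addr, err := net.ResolveUDPAddr("udp", ":"+port)
	if err != nil {
		return nil, err
	}
	return net.ListenUDP("udp", addr)
}

// roundTrip sends payload to the server's own port over loopback and
// returns whatever the server socket reads back.
func roundTrip(conn *net.UDPConn, payload string) (string, error) {
	port := conn.LocalAddr().(*net.UDPAddr).Port
	client, err := net.Dial("udp", fmt.Sprintf("127.0.0.1:%d", port))
	if err != nil {
		return "", err
	}
	defer client.Close()
	if _, err := client.Write([]byte(payload)); err != nil {
		return "", err
	}

	// Give up after two seconds instead of blocking forever.
	conn.SetReadDeadline(time.Now().Add(2 * time.Second))
	buf := make([]byte, 8192)
	n, _, err := conn.ReadFromUDP(buf)
	if err != nil {
		return "", err
	}
	return string(buf[:n]), nil
}

func main() {
	conn, err := startUDPServer("0")
	if err != nil {
		fmt.Println("could not start UDP server:", err)
		return
	}
	defer conn.Close()

	msg, err := roundTrip(conn, `{"short_message":"hello"}`)
	if err != nil {
		fmt.Println("read failed:", err)
		return
	}
	fmt.Println(msg) // dump the received datagram to stdout
}
```

In a real deployment the read would sit in an endless loop without a deadline, and the sender would be the Docker GELF driver rather than a local client.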

The natural next step was then to attach this “gelftail” program to a LaaS backend and apply a bit of transformation, statement batching, etc., which is exactly what we’re going to develop right away!

gelftail

in the root /goblog folder, create a new directory called gelftail . follow the instructions below to create the requisite files and folders.

mdkir $gopath/src/github.com/callistaenterprise/goblog/gelftail

mdkir $gopath/src/github.com/callistaenterprise/goblog/gelftail/transformer

mdkir $gopath/src/github.com/callistaenterprise/goblog/gelftail/aggregator

cd $gopath/src/github.com/callistaenterprise/goblog/gelftail

touch gelftail.go

touch transformer/transform.go

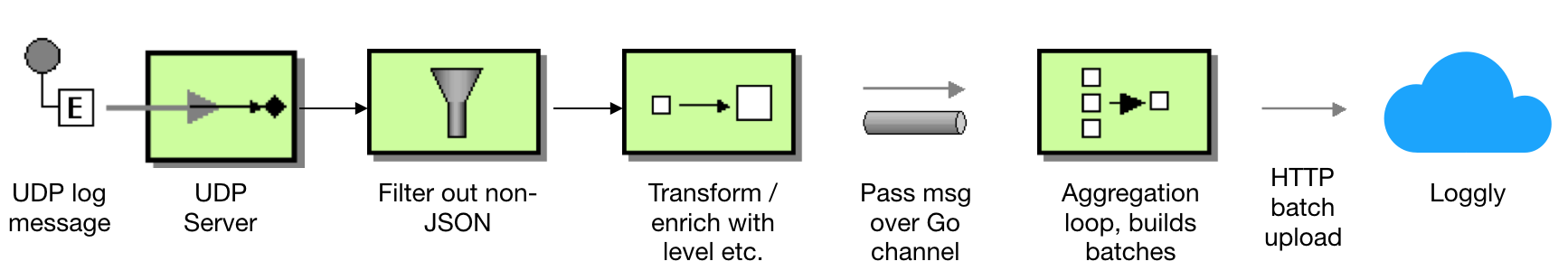

touch aggregator/aggregator.gogelftail works along these lines:

- Start a UDP server (the one that the Docker GELF driver is sending log output to).

- For each UDP packet, we’ll assume it’s JSON-formatted output from Logrus. We’ll do a bit of parsing to extract the actual level and short_message properties and transform the original log message slightly so it contains those properties as root-level elements.

- Next, we’ll use a buffered Go channel as a logical “send queue” that our aggregator goroutine reads from. For each received log message, it’ll check whether its current buffer is > 1 KB.

- If the buffer is large enough, it will do an HTTP POST to the Loggly HTTP upload endpoint with the aggregated statements, clear the buffer, and start building a new batch.
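The queue-and-batch part of these steps can be sketched in isolation as follows. This is a simplified illustration, not the repo code: batch and countFlushes are hypothetical names, the upload is replaced by a callback, and unlike the real aggregator this version stops (and flushes the remainder) when the channel is closed so that the example terminates.

```go
package main

import (
	"bytes"
	"fmt"
)

// batch reads messages from queue, appends each one plus a newline to a
// buffer, and calls flush whenever the buffer exceeds threshold bytes.
// When queue is closed, any remaining data is flushed before returning.
func batch(queue <-chan []byte, threshold int, flush func([]byte)) {
	buf := new(bytes.Buffer)
	for msg := range queue {
		buf.Write(msg)
		buf.WriteByte('\n')
		if buf.Len() > threshold {
			// Copy the bytes, since Reset lets the buffer reuse its storage.
			flush(append([]byte(nil), buf.Bytes()...))
			buf.Reset()
		}
	}
	if buf.Len() > 0 {
		flush(append([]byte(nil), buf.Bytes()...))
	}
}

// countFlushes feeds messages through batch and reports how many
// batches were produced for the given threshold.
func countFlushes(messages []string, threshold int) int {
	queue := make(chan []byte, 1) // buffered "send queue"
	go func() {
		for _, m := range messages {
			queue <- []byte(m)
		}
		close(queue)
	}()

	flushes := 0
	batch(queue, threshold, func(b []byte) { flushes++ })
	return flushes
}

func main() {
	// Each message occupies 5 bytes including its newline. With a threshold
	// of 8, the second message triggers a flush and the third is flushed
	// when the queue closes.
	fmt.Println(countFlushes([]string{"aaaa", "bbbb", "cccc"}, 8)) // prints 2
}
```

Batching on size alone means a quiet service can leave statements sitting in the buffer for a while; a time-based flush would be a natural extension.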

Expressed using classic Enterprise Integration Patterns (in a somewhat non-idiomatic way…), it looks like this:

Source code

The program will be split into three files. Start with gelftail.go with a main package and some imports:

    package main

    import (
        "encoding/json"
        "flag"
        "fmt" // fmt and os: presumably for startUDPServer, which is not shown; remove if unused
        "io/ioutil"
        "net"
        "os"
        "sync" // needed for the WaitGroup in main()

        "github.com/callistaenterprise/goblog/gelftail/aggregator"
        "github.com/callistaenterprise/goblog/gelftail/transformer"
        "github.com/sirupsen/logrus"
    )

The bytes and net/http packages are only needed by the aggregator code shown further down, so they belong in aggregator.go.

When registering with Loggly (our LaaS of choice for this blog series), we get an authentication token that must be treated as a secret. Anyone with access to your token can at least send log statements into your account. So make sure you .gitignore token.txt or whatever name you pick for the file. Of course, one could use the configuration server from Part 7 and store the auth token as an encrypted property. For now, I’m keeping this as simple as possible, so text file it is.

So let’s add a placeholder for our LaaS token and an init() function that tries to load this token from disk. If unsuccessful, we might as well log and panic.

    var authToken = ""
    var port *string

    func init() {
        data, err := ioutil.ReadFile("token.txt")
        if err != nil {
            msg := "cannot find token.txt that should contain our loggly token"
            logrus.Errorln(msg)
            panic(msg)
        }
        authToken = string(data)

        port = flag.String("port", "12202", "udp port for the gelftail")
        flag.Parse()
    }

Note that the file content is used verbatim when the upload URL is built later on, so token.txt should contain only the token, without a trailing newline. We also use a flag to take an optional port number for the UDP server. Next, it’s time to declare our main() function to get things started.

    func main() {
        logrus.Println("starting gelf-tail server...")

        serverConn := startUDPServer(*port) // remember to dereference the pointer for our "port" flag
        defer serverConn.Close()

        var bulkQueue = make(chan []byte, 1) // buffered channel to put log statements ready for laas upload into

        go aggregator.Start(bulkQueue, authToken)        // start goroutine that'll collect and then upload batches of log statements
        go listenForLogStatements(serverConn, bulkQueue) // start listening for udp traffic

        logrus.Infoln("started gelf-tail server")

        wg := sync.WaitGroup{}
        wg.Add(1)
        wg.Wait() // block indefinitely
    }

Quite straightforward: start the UDP server, declare the channel we’re using to pass processed messages, and start the “aggregator.” The startUDPServer(*port) function is not very interesting, so we’ll skip forward to listenForLogStatements(..):

    func listenForLogStatements(serverConn *net.UDPConn, bulkQueue chan []byte) {
        buf := make([]byte, 8192)       // buffer to store udp payload into. 8kb should be enough for everyone, right bill? :d
        var item map[string]interface{} // map to put unmarshalled gelf json log message into
        for {
            n, _, err := serverConn.ReadFromUDP(buf) // blocks until data becomes available, which is put into the buffer.
            if err != nil {
                logrus.Errorf("problem reading udp message into buffer: %v\n", err.Error())
                continue // log and continue if there are problems
            }

            err = json.Unmarshal(buf[0:n], &item) // try to unmarshal the gelf json log statement into the map
            if err != nil {                       // if unmarshalling fails, log and continue. (e.g. filter)
                logrus.Errorln("problem unmarshalling log message into json: " + err.Error())
                item = nil
                continue
            }

            // send the map into the transform function
            processedLogMessage, err := transformer.ProcessLogStatement(item)
            if err != nil {
                logrus.Printf("problem parsing message: %v", string(buf[0:n]))
            } else {
                bulkQueue <- processedLogMessage // if processing went well, send on channel to aggregator
            }
            item = nil
        }
    }

Follow the comments in the code. The transformer code (transformer/transform.go) isn’t that exciting either; it just reads some values from one JSON property and transfers them onto the “root” GELF message. So let’s skip that.
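For readers who would still like an idea of what such a transformation could look like, here is a hedged sketch. It is not the code from the repo: processLogStatement and transformJSON are stand-in names, and I am assuming that the GELF short_message holds the JSON produced by the Logrus JSON formatter, with "level" and "msg" keys.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// processLogStatement lifts "level" and "msg" out of the JSON string stored
// in short_message and writes them onto the root GELF message.
// It returns the re-serialized message.
func processLogStatement(item map[string]interface{}) ([]byte, error) {
	raw, ok := item["short_message"].(string)
	if !ok {
		return nil, errors.New("short_message is missing or not a string")
	}

	var inner map[string]interface{}
	if err := json.Unmarshal([]byte(raw), &inner); err != nil {
		return nil, fmt.Errorf("short_message is not JSON: %v", err)
	}

	if level, ok := inner["level"]; ok {
		item["level"] = level // replace the numeric level set by the driver
	}
	if msg, ok := inner["msg"]; ok {
		item["short_message"] = msg // replace the nested JSON with the plain message
	}
	return json.Marshal(item)
}

// transformJSON is a convenience wrapper for the demo: raw GELF JSON in,
// transformed JSON out.
func transformJSON(gelf string) (string, error) {
	var item map[string]interface{}
	if err := json.Unmarshal([]byte(gelf), &item); err != nil {
		return "", err
	}
	out, err := processLogStatement(item)
	return string(out), err
}

func main() {
	gelf := `{"host":"swarm-manager-0","level":6,"short_message":"{\"level\":\"warning\",\"msg\":\"disk almost full\"}"}`
	out, err := transformJSON(gelf)
	if err != nil {
		fmt.Println("could not transform:", err)
		return
	}
	fmt.Println(out)
}
```

Messages whose short_message is not JSON (for example output from a third-party binary in the container) end up in the error branch, which matches the "log and skip" handling in listenForLogStatements.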

Finally, a quick peek at the “aggregator” code in /goblog/gelftail/aggregator/aggregator.go that processes the final log messages from the bulkQueue channel, aggregates them, and uploads them to Loggly:

    var client = &http.Client{}
    var logglyBaseUrl = "https://logs-01.loggly.com/inputs/%s/tag/http/"
    var url string

    func Start(bulkQueue chan []byte, authToken string) {
        url = fmt.Sprintf(logglyBaseUrl, authToken) // assemble the final loggly bulk upload url using the authToken
        buf := new(bytes.Buffer)
        for {
            msg := <-bulkQueue // blocks here until a message arrives on the channel.
            buf.Write(msg)
            buf.WriteString("\n") // loggly needs newline to separate log statements properly.

            size := buf.Len()
            if size > 1024 { // if buffer has more than 1024 bytes of data...
                sendBulk(*buf) // upload!
                buf.Reset()
            }
        }
    }

I just love the simplicity of Go code! Using a bytes.Buffer, we enter an eternal loop where we block at msg := <-bulkQueue until a message is received over the channel (which has a buffer capacity of one). We write the content plus a newline to the buffer and then check whether the buffer is larger than our predetermined 1 KB threshold. If so, we invoke the sendBulk func and clear the buffer. sendBulk just does a standard HTTP POST to Loggly.
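Since sendBulk itself isn't shown, here is one way such a function might look. Treat it as a sketch under assumptions: the signature differs from the call above (it takes the client, URL, and payload explicitly), the text/plain content type is my assumption, and a local httptest server stands in for Loggly so that no token or network access is needed.

```go
package main

import (
	"bytes"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
)

// sendBulk posts a batch of newline-separated log statements to url and
// returns the HTTP status code of the response.
func sendBulk(client *http.Client, url string, payload []byte) (int, error) {
	resp, err := client.Post(url, "text/plain", bytes.NewReader(payload))
	if err != nil {
		return 0, err
	}
	defer resp.Body.Close()
	io.Copy(io.Discard, resp.Body) // drain the body so the connection can be reused
	return resp.StatusCode, nil
}

// postToFakeEndpoint starts a throwaway local HTTP server, sends the payload
// to it with sendBulk, and returns the status code and the received body.
func postToFakeEndpoint(payload string) (int, string, error) {
	received := make(chan string, 1)
	srv := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		body, _ := io.ReadAll(r.Body)
		received <- string(body) // hand the body back to the caller
	}))
	defer srv.Close()

	status, err := sendBulk(srv.Client(), srv.URL, []byte(payload))
	if err != nil {
		return 0, "", err
	}
	return status, <-received, nil
}

func main() {
	status, body, err := postToFakeEndpoint("{\"msg\":\"one\"}\n{\"msg\":\"two\"}\n")
	if err != nil {
		fmt.Println("upload failed:", err)
		return
	}
	fmt.Println("status:", status)
	fmt.Print(body)
}
```

Returning the status code leaves it to the caller to decide what to do with a failed upload, for example keeping the batch and retrying later.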

Build, Dockerfile, deploy

Of course, we’ll deploy “gelftail” as a Docker Swarm mode service just like everything else. For that, we need a Dockerfile:

    FROM iron/base

    EXPOSE 12202/udp
    ADD gelftail-linux-amd64 /
    ADD token.txt /

    ENTRYPOINT ["./gelftail-linux-amd64", "-port=12202"]

token.txt is a simple text file with the Loggly authorization token; more on that in section 4 of this blog post.

Building and deploying should be straightforward. We’ll add a new .sh script to the root /goblog directory:

    #!/bin/bash
    export GOOS=linux
    export CGO_ENABLED=0

    cd gelftail;go get;go build -o gelftail-linux-amd64;echo built `pwd`;cd ..

    export GOOS=darwin

    docker build -t someprefix/gelftail gelftail/
    docker service rm gelftail
    docker service create --name=gelftail -p=12202:12202/udp --replicas=1 --network=my_network someprefix/gelftail

This should run in a few seconds. Verify that gelftail was successfully started by tailing its very own stdout log. Find its container ID using docker ps and then check the log using docker logs:

    > docker logs -f e69dff960cec

    time="2017-08-01T20:33:00Z" level=info msg="starting gelf-tail server..."
    time="2017-08-01T20:33:00Z" level=info msg="started gelf-tail server"

If you do something with another service that logs stuff, the log output from that service should now appear in the tail above. Let’s scale the accountservice to two instances:

    > docker service scale accountservice=2

The tailed docker logs above should now output something like:

    time="2017-08-01T20:36:08Z" level=info msg="starting accountservice"
    time="2017-08-01T20:36:08Z" level=info msg="loading config from http://configserver:8888/accountservice/test/p10\n"
    time="2017-08-01T20:36:08Z" level=info msg="getting config from http://configserver:8888/accountservice/test/p10\n"

That’s all for “gelftail.” Let’s finish this blog post by taking a quick peek at Loggly.

4. Loggly

There are numerous “logging as a service” providers out there, and I basically picked one (i.e. Loggly) that seemed to have a free tier suitable for demo purposes, a nice GUI, and a rich set of options for getting your log statements uploaded.

There is a plethora of alternatives for getting your logs into Loggly (see the list on the left in the linked page). I decided to use the HTTP/S event API, which allows us to send multiple log statements in small batches, separated by newlines.

Getting started

I suggest following their getting started guide, which can be boiled down to:

- Create an account (the free tier works well for demo/trying out purposes).

- Obtain an authorization token. Save this somewhere safe and copy-paste it into /goblog/gelftail/token.txt.

- Decide how to “upload” your logs. As stated above, I chose to go with the HTTP/S POST API.

- Configure your services/logging driver/Logstash/gelftail, etc. to use your upload mode of choice.

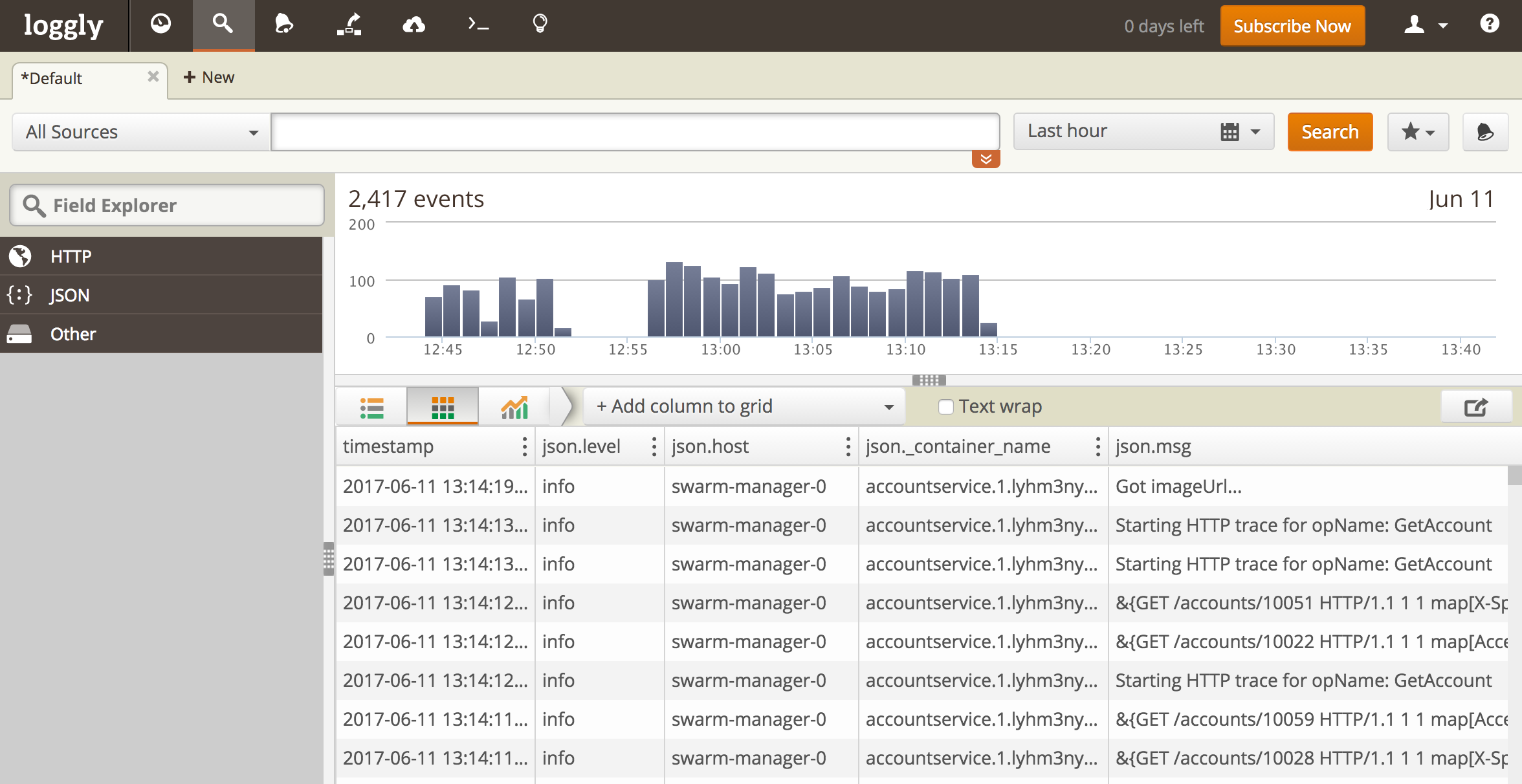

Leveraging all the bells and whistles of Loggly is out of scope for this blog post. I’ve only tinkered around with their dashboard and filtering functions, which I guess are pretty standard as LaaS providers go.

A few examples

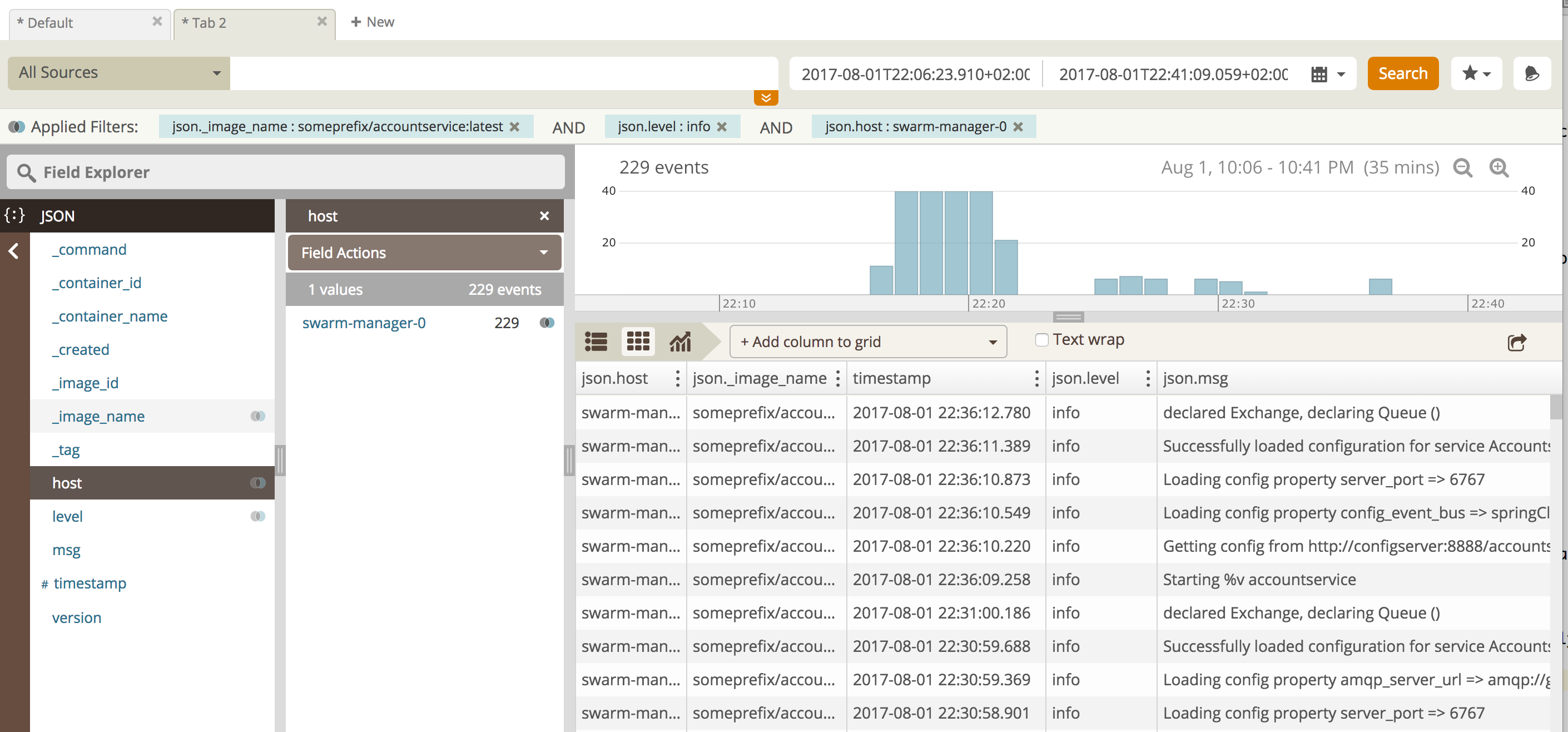

In the first screenshot, I’ve zoomed in on a 35-minute time span where I’m explicitly filtering on the “accountservice” and “info” messages:

As seen, one can customize columns, filter values, time periods, etc. very easily.

In the next sample, I’m looking at the same time period, but only at “error” log statements:

While these sample use cases are very simple, the real usefulness materializes when you’ve got dozens of microservices, each running 1-n instances. That’s when the powerful indexing, filtering, and other functions of your LaaS really become a fundamental part of your microservices operations model.

Summary

In part 10 of this blog series, we’ve looked at centralized logging: why it’s important, how to do structured logging in your Go services, how to use a logging driver from your container orchestrator, and finally how to pre-process log statements before uploading them to a Logging as a Service provider.

In the next part, it’s time to add circuit breakers and resilience to our microservices using Netflix Hystrix.

Published at DZone with permission of Erik Lupander, DZone MVB. See the original article here.
