How to Use Kubernetes for Autoscaling

As microservices come into greater demand for developers, it becomes all the more relevant to learn to increase their capacity.

We live in an era where an application's success depends not only on the features it has, but also on how scalable it is. Imagine if Amazon went down because of a mere increase in traffic: would it have been so successful?

In this blog, we will see how we can use Kubernetes for the auto-scaling of the applications based on resource usage — CPU usage in particular. I assume that you have some basic idea about Kubernetes concepts.

Suppose we have a service which is CPU-intensive and fails to handle the load when the requests increase. What we need here is horizontal scaling of the architecture, such that new instances are spawned as the load increases on the existing ones, say when the CPU usage goes above 90% on the current instance.

Kubernetes and Autoscaling to The Rescue

Autoscaling is a Kubernetes feature that auto-scales the pods. It allows auto-scaling the infrastructure horizontally. This is achieved via a Kubernetes resource called Horizontal Pod Autoscaler (HPA). Since Kubernetes uses pods as the basic unit of deployment, the Horizontal Pod Autoscaler (HPA) automatically scales the number of pods in a replication-controller/deployment/replica-set based on observed metrics.

The metrics can either be resource utilization metrics such as CPU/Memory or these can be based on some custom metrics depending on the application needs. In this article, we will look into scaling based on resource utilization, particularly CPU utilization.

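The HPA is implemented as a control loop that periodically compares the observed metrics with the configured target. The algorithm details can be found in the Kubernetes documentation for the Horizontal Pod Autoscaler.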
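As a rough illustration of what the control loop computes on each pass, the Kubernetes documentation gives the core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below is a simplification and not the controller's actual code: the function name and parameters are hypothetical, the 10% tolerance mirrors the documented default, and details such as pod readiness and stabilization windows are ignored.

```python
import math

def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas, max_replicas, tolerance=0.1):
    """Simplified HPA scaling rule (illustrative sketch only)."""
    ratio = current_utilization / target_utilization
    # If the ratio is close enough to 1.0, leave the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured minReplicas / maxReplicas bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For instance, with a target of 85% and bounds of 3 to 5 replicas, three pods averaging 120% of their requested CPU would give ceil(3 × 120 / 85) = 5 replicas, while a reading of 0% would keep the count at the minimum of 3.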

Setting Up the HPA

    apiVersion: autoscaling/v1
    kind: HorizontalPodAutoscaler
    metadata:
      # Object names must be lowercase DNS-style names (no capitals or underscores)
      name: testing-hpa
    spec:
      scaleTargetRef:
        # apps/v1 replaces the removed apps/v1beta1 API version
        apiVersion: apps/v1
        kind: Deployment
        name: awesome-app
      minReplicas: 3
      maxReplicas: 5
      targetCPUUtilizationPercentage: 85

Here is the description of the above configuration:

- Like other resources, HPA is also an API resource in Kubernetes, with apiVersion, kind, metadata and spec fields.

- Then we need to define what we want to scale, which is done via spec.scaleTargetRef.

- spec.scaleTargetRef tells HPA which scalable controller to scale (Deployment, ReplicaSet or ReplicationController).

- In our example, we are telling HPA to scale a Deployment named "awesome-app."

- We then provide the scaling parameters: minReplicas, maxReplicas and when to scale.

- This simple HPA starts scaling if the CPU utilization goes above 85%, maintaining the pod count between 3 and 5, both inclusive.

- Note: With the newer autoscaling/v2 API, you can also specify resource metrics in terms of direct values, instead of as percentages of the requested value.

Now if we do:

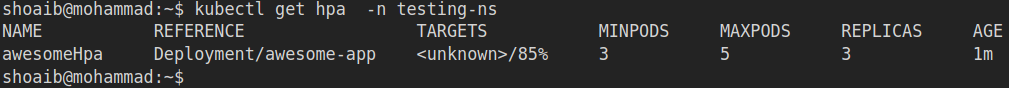

kubectl get hpa -n testing-nsWe see below output:

If you are also seeing <unknown> here, then this means that the resource limits are not set.

In such a scenario, the HPA won't work. Even if the CPU Utilization goes to 85% or more, new pods will not be created. To fix this we need to understand the concept of resource limits and requests.

Detour: Resources — Limits and Requests

CPU and memory are each a resource type (collectively, these two resources are called compute resources). Requests and Limits are mechanisms that Kubernetes uses to control these resources.

Requests are what the container is guaranteed to get. When a container requests a resource, Kubernetes will only schedule the container on a node that can give the required resource. Limits, on the other hand, make sure that a container never goes above a value. The container is allowed to go up to that limit and is then restricted.

Each container can set its own requests and limits and these are all additive. A resource type has a base unit.

CPU resources are defined in milli-cores. If your container needs two full cores to run, you will put the value 2000m.

Remember, if you specify a CPU request that's larger than the core count of your biggest node, then your pod will never get scheduled.

Memory resources are defined in bytes. You can give values from bytes to petabytes.

If you put a memory request that's larger than the amount of memory on your nodes, the pod will never be scheduled.
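To make the units concrete, here is a small Python sketch that converts CPU and memory quantity strings into milli-cores and bytes. The helper names are hypothetical and it handles only a subset of the suffixes Kubernetes accepts (up to peta), so treat it as an illustration of the notation rather than a full parser.

```python
from decimal import Decimal

# Binary (power-of-two) and decimal (power-of-ten) suffixes used for memory.
_MEMORY_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12, "P": 10**15,
}

def cpu_to_millicores(quantity):
    """Convert a CPU quantity such as '2', '0.5' or '250m' to milli-cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(Decimal(quantity) * 1000)

def memory_to_bytes(quantity):
    """Convert a memory quantity such as '64Mi' or '1G' to bytes."""
    # Check two-letter suffixes first so 'Mi' is not mistaken for 'M'.
    for suffix in sorted(_MEMORY_SUFFIXES, key=len, reverse=True):
        if quantity.endswith(suffix):
            number = Decimal(quantity[:-len(suffix)])
            return int(number * _MEMORY_SUFFIXES[suffix])
    # No suffix: the value is already in bytes.
    return int(quantity)
```

Under these assumptions, `cpu_to_millicores("2")` and `cpu_to_millicores("2000m")` both describe two full cores, and `memory_to_bytes("64Mi")` is 64 × 1024 × 1024 bytes.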

A sample for setting the resource requests and limits is shown below:

    - name: container1
      image: busybox
      resources:
        requests:
          memory: "32Mi"
          cpu: "200m"
        limits:
          memory: "64Mi"
          cpu: "250m"

The above sample means that a container created with this specification requests 200 milli-cores of CPU (one-fifth of a core) and 32 mebibytes of memory, which Kubernetes reserves for it when scheduling. The limits cap what the container may use: up to 250 milli-cores of CPU (one-fourth of a core) and 64 mebibytes of memory. A container that tries to exceed its CPU limit is throttled, while one that exceeds its memory limit may be terminated.

Back to Our Problem

To fix our earlier problem, we need to provide resource limits and requests for the deployment. These are set in the Deployment.yaml file.

Since we needed auto-scaling based upon the CPU utilization, we mentioned requests and limits only for CPU resources as below.

Add the following lines in the Deployment.yaml file:

    resources:
      limits:
        cpu: 2000m
      requests:
        cpu: 1000m

These lines should be added at the spec.template.spec.containers level in the Deployment.yaml file, under the container they apply to.

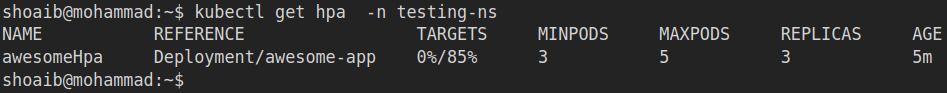

Once we set the limits and requests and apply the changes, let's run again:

kubectl get hpa -n testing-ns

We get the targets column as 0%/85%. This shows that currently, our CPU usage is 0%. This will start auto-scaling when the CPU usage reaches 85%.
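To make the 0%/85% reading concrete: the first number is the pods' measured CPU usage expressed as a percentage of their CPU requests (1000m per pod here), and the second is the target. A minimal Python sketch of that calculation follows. The function name and the sample usage figures are made up for illustration, the result is rounded down for simplicity, and the real controller obtains usage from the metrics API.

```python
def cpu_utilization_percent(usage_millicores, request_millicores):
    """CPU usage across pods as a percentage of their total CPU requests."""
    total_usage = sum(usage_millicores)
    total_request = sum(request_millicores)
    if total_request == 0:
        # No requests set: utilization cannot be computed (shown as <unknown>).
        return None
    return (100 * total_usage) // total_request

# Three idle pods, each requesting 1000m of CPU (hypothetical usage figures).
idle = cpu_utilization_percent([3, 2, 4], [1000, 1000, 1000])
# The same pods under load, each using more than its request.
busy = cpu_utilization_percent([1200, 1300, 1100], [1000, 1000, 1000])
```

Because the limit (2000m) is higher than the request (1000m), utilization measured this way can exceed 100%.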

Time to Test

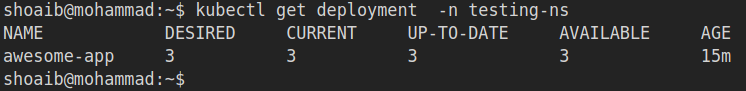

Now, to see if this actually scales, we will send some traffic and let the application run until the CPU utilization goes up to 85%. For the record, let's first see how many pods we have before we start sending any traffic to the service.

Let's run:

kubectl get deployment -n testing-ns

We see in the output that the Desired state is 3 and Current is also 3, as the CPU Utilization is still effectively 0%.

Now, to produce some load on the service, we will use a tool called wrk. For simplicity, we will run wrk in a Docker container, using the command below:

docker run --rm loadimpact/loadgentest-wrk -c 600 -t 600 -d 15m https://fun-service.test.com/fun_index=7

Here is what happens when we run the above command:

- We are opening 600 connections to the service at a time using 600 threads.

- We will keep hitting the service for 15 minutes so that we have enough load generated.

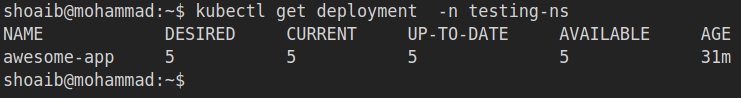

After 10 minutes, when we again run the command

kubectl get deployment -n testing-ns

we get the output below.

Here we see that, now that the CPU utilization has crossed the 85% target, the Desired state has changed to 5 and the Current state is also 5.

Conclusion

With the ever-growing demand for microservices, we need a tool that makes deploying and scaling them an easy task. Kubernetes gives us this power, and the Kubernetes HPA is a natural fit when it comes to scaling a microservice based on its load.
