Leverage Docker to Produce Classic Deployment Artifacts

Learn how to integrate Docker into a traditional delivery pipeline without drastically changing the deployment output artifact from classic archives to Docker images.

Traditionally, deployment artifacts were archives that were transferred to the target environment and installed there. They could be simple .zip archives with binaries, Java's .jar or .war files, or plain .exe executables, among others. This approach required preliminary preparation, such as installing and configuring all software dependencies on the target machine.

The situation changed when Docker appeared. The principle changed too: we now treat Docker images as self-sufficient sealed units containing everything the app needs to run. But we have to admit that both the principle and the technology are relatively new to the industry. Many companies still rely on a VM-based approach and classic deployment artifacts, and it's quite clear why: the infrastructure is already bought, Ops teams are trained to deploy, manage, and monitor applications, and so forth. In short, the journey to containers looks long and tough. To make it easier, we can use the well-known step-by-step method and adjust the existing delivery pipeline gradually.

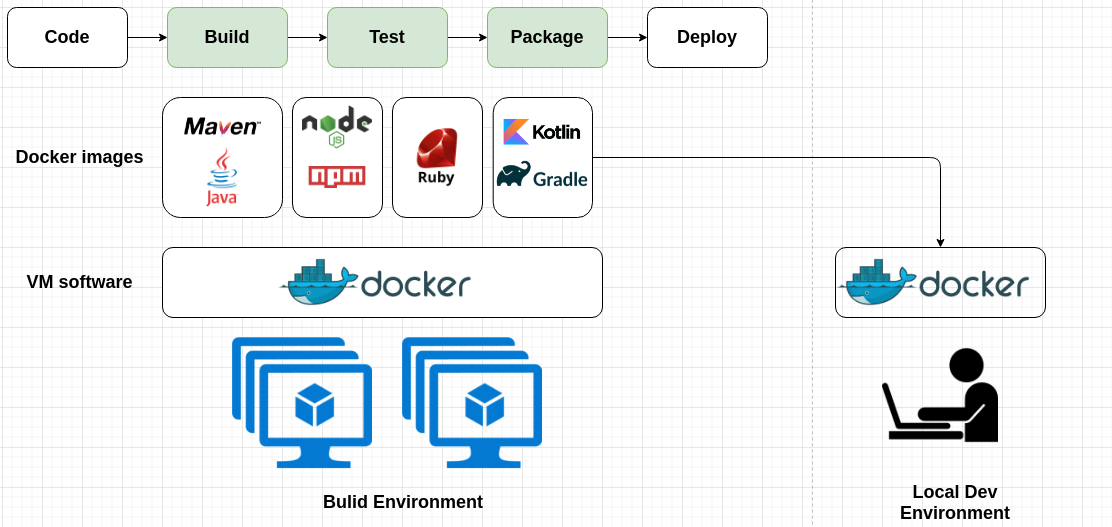

So, let's see what a traditional delivery pipeline looks like:

I highlighted in green the stages we will focus on: build, test, and package. The main issue is the environment where they run. It is usually a set of long-lived static VMs carrying many different kinds of software that were somehow installed by someone. Even if you try to separate machines according to their purpose, machines within the same group will still differ (so-called configuration drift). How can we handle this? I guess you know that the answer will be Docker :), which provides an additional layer of isolation between the VM and the stages executed on it. Check it out on the schema below:

As a bonus on top of more granular build environments, we can pull the same Docker image locally and execute the necessary stages under the same conditions, which is extremely important when troubleshooting the build stage.
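To make the local troubleshooting idea concrete, here is a minimal sketch of running one stage inside a build image. The helper name `run_stage` and the `/app` mount point are my assumptions for illustration, and the image and command are whatever your own pipeline uses.

```shell
#!/bin/sh
# Sketch: run a pipeline stage inside a build image, the same way on CI and locally.
# run_stage is a hypothetical helper name; /app is an arbitrary mount point.
run_stage() {
    image=$1    # build image to run the stage in
    shift       # the remaining arguments are the stage command

    # Mount the current directory into the container and run the command there.
    # `docker run` pulls the image first if it is not available locally.
    docker run --rm -v "$PWD":/app -w /app "$image" "$@"
}

# Example call (image and command are placeholders for your own):
# run_stage maven:3.6.3-jdk-8 mvn clean install
```

Because the stage always runs in a fresh container created from the same image, it does not depend on whatever happens to be installed on the VM or the developer's laptop.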

As a result, we end up with Docker images that can either be ready to run on the Docker engine or simply contain the deployment artifact we need to extract. This extraction can be done with the `docker cp` command or in a more elegant way that I found on Reddit:

docker run --rm --entrypoint /usr/bin/cat $IMAGE /container/path/to/artifact > desired/host/path/for/artifact

Or for many files, use this command:

docker run --rm --entrypoint /usr/bin/tar $IMAGE -cC /container/path/to/artifacts . | tar -xC desired/host/path/for/artifacts

An Example That Is Close to Reality

Let's imagine that our application is Java-based and hosted in Apache Tomcat. Then, the Dockerfile will be like this:

FROM maven:3.6.3-jdk-8 AS build

WORKDIR /app

COPY . .

ARG BUILD_PROFILE=Prod

RUN mvn clean install -P $BUILD_PROFILE

FROM tomcat:8.5.77-jre8-openjdk-slim-bullseye

COPY --from=build /app/target/App*.war /usr/local/tomcat/webapps/App.war

EXPOSE 8080

CMD ["catalina.sh", "run"]

Then, we need to build this image:

docker build -t tomcat-example .

Afterward, extract the .war to deploy it on a VM:

docker run --rm --entrypoint /usr/bin/cat tomcat-example /usr/local/tomcat/webapps/App.war > App.war

We are done!
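For comparison, the `docker cp` route mentioned earlier could look roughly like the sketch below. The helper name `extract_artifact` is hypothetical. The sketch assumes a temporary container is acceptable, since `docker cp` copies from containers rather than directly from images.

```shell
#!/bin/sh
# Sketch: copy one artifact out of an image via `docker cp`.
# extract_artifact is a hypothetical helper name, not part of Docker.
extract_artifact() {
    image=$1    # image to extract from
    src=$2      # path of the artifact inside the image
    dest=$3     # destination path on the host

    # Create a stopped container; `docker cp` works on containers, not images.
    container_id=$(docker create "$image") || return 1

    # Copy the artifact out, remembering whether the copy succeeded.
    status=0
    docker cp "$container_id:$src" "$dest" || status=$?

    # Always remove the temporary container, even if the copy failed.
    docker rm "$container_id" >/dev/null

    return "$status"
}

# Example call for the image built above:
# extract_artifact tomcat-example /usr/local/tomcat/webapps/App.war App.war
```

Unlike the `cat` approach, this variant does not need any binary inside the image, so it should also work for minimal images that ship without a shell or coreutils.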

Conclusion

In this article, we looked at how you can integrate Docker into a traditional delivery pipeline without drastically changing the deployment output artifact from classic archives to Docker images. I hope it helps you move toward best practices and modern tools.
