Building AI-Driven Intelligent Applications: A Hands-On Development Guide for Integrating GenAI Into Your Applications

This article explains how to build a GenAI-powered chatbot and provides a step-by-step guide to build an intelligent application.


Editor's Note: The following is an article written for and published in DZone's 2025 Trend Report, Generative AI: The Democratization of Intelligent Systems.

In today's world of software development, we are seeing a rapid shift in how we design and build applications, mainly driven by the adoption of generative AI (GenAI) across industries. These intelligent applications can understand and respond to users' questions in a more dynamic way, which helps enhance customer experiences, automate workflows, and drive innovation. Generative AI, mainly powered by large language models (LLMs) like OpenAI GPT, Meta Llama, and Anthropic Claude, has changed the game by making it easy to understand natural language text and respond with new content such as text, images, audio, and even code.

There are different LLMs available from various organizations that can be easily plugged into existing applications based on project needs. This article explains how to build a GenAI-powered chatbot and provides a step-by-step guide to build an intelligent application.

Why There Is a Need for GenAI Integration

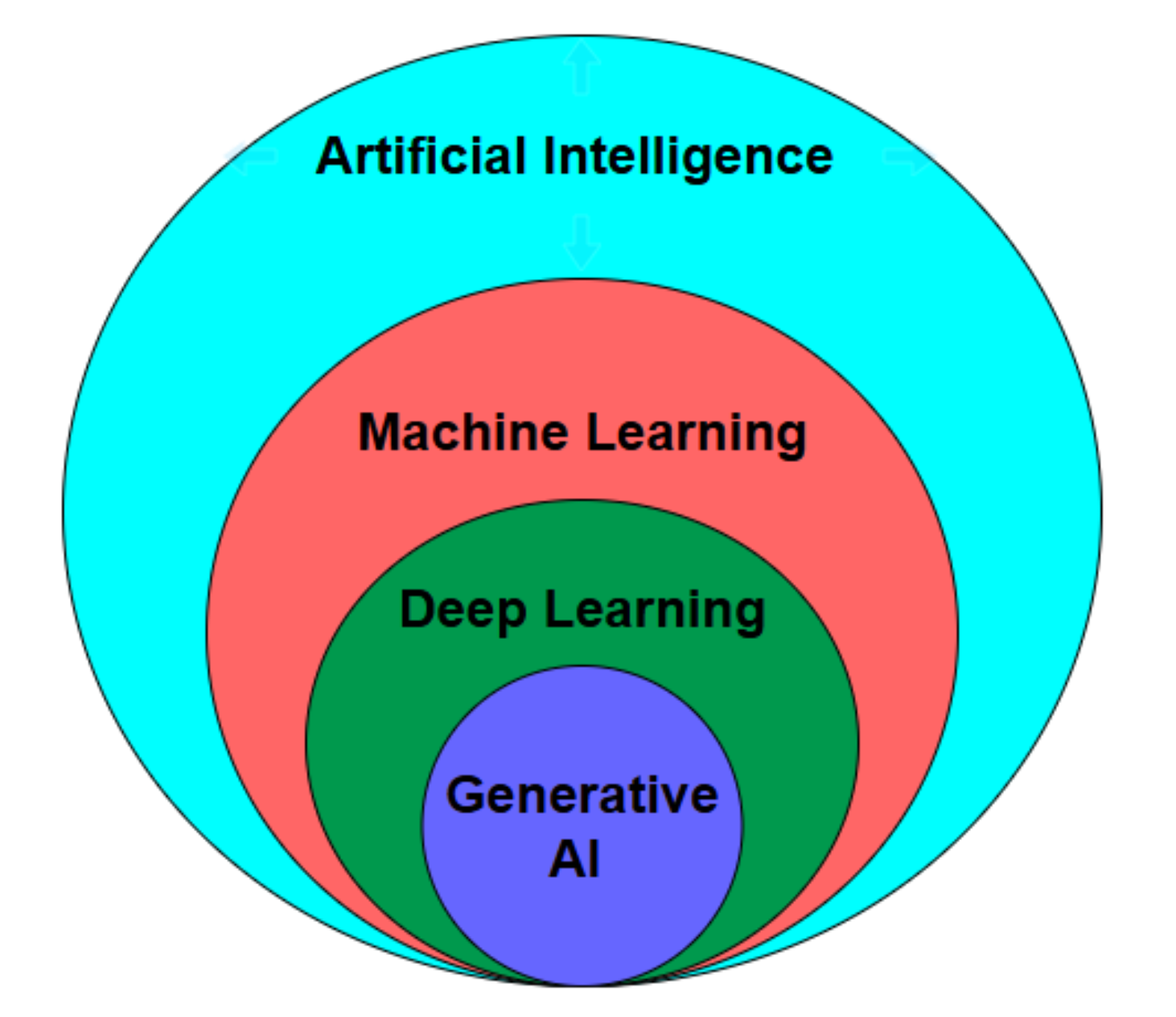

Within the field of artificial intelligence (AI), GenAI is a subset of deep learning methods that can work with both structured and unstructured data. Figure 1 presents the different layers of artificial intelligence.

Figure 1. Artificial intelligence landscape

Generative AI in particular is rapidly transforming the business world by reshaping how businesses automate tasks, enhance customer interactions, optimize operations, and drive innovation. Companies are embracing AI solutions to gain a competitive edge, streamline workflows, and unlock new business opportunities.

As AI becomes more woven into society, its economic impact will be significant, and organizations are already starting to understand its full potential.

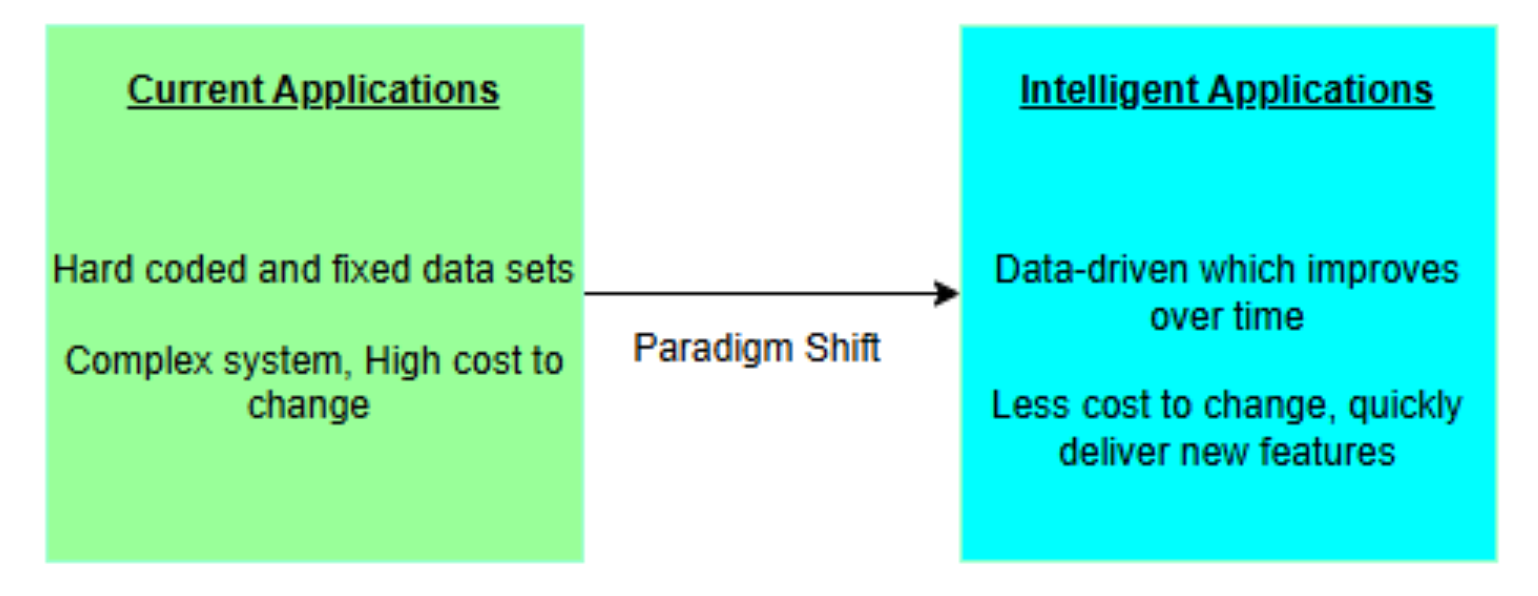

According to the MIT Sloan Management Review, 87% of organizations believe AI will give them a competitive edge. McKinsey's 2024 survey also revealed that 65% of respondents reported their organizations are regularly using GenAI — nearly double the percentage from the previous survey 10 months prior. Figure 2 lays out a comparison of the shift in organizations that are building intelligent applications.

Figure 2. Comparison between traditional and intelligent apps

Advantages of GenAI Integration

Integrating GenAI into applications brings several advantages and revolutionizes how businesses operate:

- AI models can analyze vast amounts of structured and unstructured data to extract valuable insights, enabling data-driven decision making.

- By automating intensive, repetitive tasks and therefore improving efficiency, businesses can reduce operational costs.

- GenAI enables real-time personalization and makes interactions more engaging. For example, GenAI-powered chatbots can recommend products based on the user's browsing history.

- GenAI can be a powerful tool for brainstorming and content creation, which empowers businesses to develop innovative products, services, and solutions.

- GenAI-powered chatbots can provide instant customer support by answering questions and resolving issues. This can significantly improve customer satisfaction, reduce wait times, and free up human agents' time to handle more complex inquiries.

Precautions for Integrating GenAI

While integrating GenAI to build intelligent applications, solutions, or services, organizations need to be aware of challenges and take necessary precautions:

- AI models can generate misleading or factually incorrect responses, often called hallucinations. These can lead to poor decision making and legal or ethical implications, which can damage an organization's reputation and trustworthiness.

- Because AI models are trained on historical data, they can inherit biases, leading to unfair or discriminatory outcomes. It's crucial to embrace responsible AI practices to evaluate and mitigate any potential biases.

- Integrating GenAI into existing systems and workflows can become complicated and requires significant technical expertise.

- As governments around the world are introducing new AI regulations, companies must stay up to date and implement AI governance frameworks to meet legal and ethical standards.

- Growing threats of deep fakes, misinformation, and AI-powered cyberattacks can undermine public trust and brand reputation.

To counter these risks, businesses should invest in AI moderation tools and adopt proactive strategies to detect and mitigate harmful or misleading content before it reaches users. Through strong governance, ethical frameworks, and continuous monitoring, organizations can unlock the potential of AI while protecting their operations, trust, and customer data.
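As a rough illustration of checking content before it reaches users, the sketch below screens a model response against a small blocklist and a length cap before it is displayed. The function name `screen_response`, the patterns, and the fallback message are all hypothetical and chosen only for illustration; a production system would more likely rely on a dedicated moderation model or service than on keyword matching.

```python
import re

# Hypothetical blocklist for illustration only; keyword matching is a crude
# stand-in for a real moderation model or service.
BLOCKED_PATTERNS = [r"\bpassword\b", r"\bssn\b"]
FALLBACK_MESSAGE = "Sorry, I can't share that. Please contact support."


def screen_response(answer, blocked_patterns=BLOCKED_PATTERNS, max_chars=2000):
    """Return (text_to_show, flagged) for a model response."""
    for pattern in blocked_patterns:
        if re.search(pattern, answer, flags=re.IGNORECASE):
            # Replace the whole answer rather than trying to redact it.
            return FALLBACK_MESSAGE, True
    # Truncate overly long output instead of rejecting it outright.
    return answer[:max_chars], False
```

A check like this would sit between the model call and the code that prints or renders the answer, so flagged responses never reach the user.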

Tech Stack Options

There are different options available if you are considering integrating GenAI to build intelligent applications. The following are some, but not all, popular tool options:

- Open-source tools:

  - Microsoft Semantic Kernel is an AI orchestration framework for integrating LLMs with applications using programming languages like C#, Python, and Java.

  - LangChain is a framework for chaining AI prompts and managing conversational memory.

  - Hugging Face Transformers is a library for using and fine-tuning open-source AI models.

  - LlamaIndex is a framework that provides a central interface to connect your LLM with external data sources.

- Enterprise tools:

  - OpenAI API provides access to OpenAI's powerful models, including GPT-3, GPT-4o, and others.

  - Azure AI Foundry is Microsoft's cloud-based AI platform that provides a suite of services for building, training, and deploying AI models.

  - Amazon SageMaker is a fully managed service for building, training, and deploying machine learning models.

  - Google Cloud Vertex AI is a platform for building and deploying machine learning models on Google Cloud.

Deciding whether to choose an open-source or enterprise platform to build intelligent AI applications depends on your project requirements, team capabilities, budget, and technical expertise. In some situations, a combination of tools from both open-source and enterprise ecosystems can be the most effective approach.

How to Build a GenAI-Powered Customer Chatbot

Regardless of the tech stack you select, you will follow the same steps to integrate AI. In this section, I will focus on building a chatbot that takes any PDF file, extracts its text, and chats with the user by answering questions about it. It can be hosted on a web application as an e-commerce chatbot to answer user inquiries, but due to space limitations, I will create it as a console application.

Step 1. Setup and Prerequisites

Before we begin, we'll need to:

- Create a personal access token (PAT), which authenticates your requests to the 1,200+ models that are available in GitHub Marketplace. Instead of creating developer API keys for every model, you can create one PAT and use it to call any model.

- Install the OpenAI SDK using `pip install openai` (requires Python ≥ 3.8).

- Have an IDE for writing Python code. This is not mandatory, but I will use Visual Studio Code (VSCode) to write code in this example.

- Download and install Python.

- Download the Python extension for VSCode, which offers rich support for the Python language, including debugging, refactoring, code navigation, and more.

- Install PDFPlumber using `pip install pdfplumber`. PDFPlumber is a Python library that allows you to extract text, tables, and metadata from PDF files.
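Before moving on, it can be useful to confirm that the interpreter and both packages are in place. The following is a minimal sketch using only the standard library; the helper name `check_prerequisites` is hypothetical and not part of the original project.

```python
import importlib.util
import sys

REQUIRED_PACKAGES = ("openai", "pdfplumber")


def check_prerequisites(packages=REQUIRED_PACKAGES, min_python=(3, 8)):
    """Return a list of human-readable problems; an empty list means ready."""
    problems = []
    if sys.version_info < min_python:
        problems.append(f"Python {min_python[0]}.{min_python[1]}+ is required")
    for name in packages:
        # find_spec returns None when a top-level module cannot be located.
        if importlib.util.find_spec(name) is None:
            problems.append(f"Missing package: run 'pip install {name}'")
    return problems
```

Calling `check_prerequisites()` and printing each returned item gives a quick checklist of anything still missing.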

Step 2. Create Python Project and Set Up a Virtual Environment

Using VSCode or your favorite IDE, create a folder named PythonChatApp. Ensure that Python is installed on your system. Navigate to the project root directory and run the below commands to create a virtual environment. Creating a virtual environment is optional but recommended to isolate project environments.

    pip install virtualenv
    cd c:\Users\nagavo\source\repos\PythonChatApp
    virtualenv myenv

Step 3. Create Github_utils.py File to Interact With OpenAI Models

The Github_utils.py file imports the OpenAI library and sets up the required global variables. An OpenAI client is instantiated with a base URL and the PAT as its API key. This client becomes the interface to send requests to the API.
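Pasting the token directly into source code makes it easy to leak through version control. One common alternative, sketched here under stated assumptions, is to read it from an environment variable. The variable name `GITHUB_TOKEN` and the helper `load_github_token` are assumptions for illustration and do not appear in the original code.

```python
import os


def load_github_token(env_var="GITHUB_TOKEN"):
    """Read the PAT from an environment variable instead of source code."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        # Fail early with a clear message rather than sending an empty key.
        raise RuntimeError(
            f"Set the {env_var} environment variable to your GitHub PAT."
        )
    return token
```

The returned value could then be passed as `api_key` when the client is created.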

One advantage of GitHub Models is that we can switch to another model, such as o3-mini, by updating the model name variable, although some models accept a different set of request parameters, so the call itself may need small adjustments. This file contains two functions: summarize_text, which summarizes the text from the selected PDF, and ask_question_about_text, which answers questions about that content. The file contents are shown below:

    import os

    from openai import OpenAI

    # Load GitHub API PAT Token and endpoint
    github_PAT_key = "<paste your github PatToken>"
    github_api_url = "https://models.inference.ai.azure.com"
    model_name = "gpt-4o"

    # Initialize OpenAI client
    client = OpenAI(
        base_url=github_api_url,
        api_key=github_PAT_key,
    )


    def summarize_text(text):
        """Summarizes the text using GitHub's models API."""
        try:
            response = client.chat.completions.create(
                messages=[
                    {
                        "role": "system",
                        "content": "You are a helpful assistant.",
                    },
                    {
                        "role": "user",
                        "content": f"Summarize the following text: {text}",
                    },
                ],
                temperature=0.7,
                top_p=1.0,
                max_tokens=300,  # caps the length of the generated summary
                model=model_name,
            )
            return response.choices[0].message.content
        except Exception as e:
            # Errors are returned as text so the caller can print them.
            return f"Error with GitHub API: {e}"


    def ask_question_about_text(text, question):
        """Asks a question about the text using GitHub's models API."""
        try:
            response = client.chat.completions.create(
                messages=[
                    {
                        "role": "system",
                        "content": "You are a helpful assistant.",
                    },
                    {
                        "role": "user",
                        "content": f"Based on the following text: {text}\n\n Answer this question: {question}",
                    },
                ],
                temperature=0.7,
                top_p=1.0,
                max_tokens=300,  # caps the length of the generated answer
                model=model_name,
            )
            return response.choices[0].message.content
        except Exception as e:
            # Errors are returned as text so the caller can print them.
            return f"Error with GitHub API: {e}"

Step 4. Create Pdf_utils.py File to Extract Data From PDF

The Pdf_utils.py file contains utility functions for working with PDF files, specifically for extracting text from them. The main function, extract_text_from_pdf, takes the path to a PDF file as an argument and returns the extracted text as a string by utilizing the PDFPlumber library. The file contents are shown below:

    try:
        import pdfplumber
    except ImportError:
        print("Error: pdfplumber module not found. Please install it using 'pip install pdfplumber'.")
        raise  # nothing below can work without pdfplumber


    def extract_text_from_pdf(pdf_path):
        """Extracts text from a PDF file."""
        text = ""
        try:
            with pdfplumber.open(pdf_path) as pdf:
                for page in pdf.pages:
                    page_text = page.extract_text()
                    # Skip pages with no extractable text (e.g., scanned images)
                    if page_text:
                        text += page_text + "\n"
        except Exception as e:
            print(f"Error reading PDF: {e}")
        return text

Step 5. Create main.py, an Entry Point for the Application

The main.py file acts as the main entry point for the application. It is responsible for receiving user input, processing the PDF file, and interacting with the AI service. It imports Pdf_utils and Github_utils to call the functions defined in both files. The file contents of main.py are shown below:

    import os

    from Pdf_utils import extract_text_from_pdf
    from Github_utils import summarize_text, ask_question_about_text


    def main():
        print("=== PDF Chatbot ===")

        # Ask user to specify PDF file path
        pdf_path = input("Please enter the path to the PDF file: ").strip()
        if not os.path.isfile(pdf_path):
            print(f"Error: The file '{pdf_path}' does not exist.")
            return

        # Extract text from PDF
        print("\nExtracting text from PDF...")
        text = extract_text_from_pdf(pdf_path)
        if not text.strip():
            print("No text found in the PDF.")
            return

        # Summarize the text
        print("\nSummary of the PDF:")
        try:
            summary = summarize_text(text)
            print(summary)
        except Exception as e:
            print(f"Error with GitHub API: {e}")
            return

        # Ask questions about the text
        while True:
            print("\nYou can now ask questions about the document!")
            question = input("Enter your question (or type 'exit' to quit): ").strip()
            if question.lower() == "exit":
                print("Exiting the chatbot. Goodbye!")
                break
            try:
                answer = ask_question_about_text(text, question)
                print("\nAnswer:")
                print(answer)
            except Exception as e:
                print(f"Error with GitHub API: {e}")
                break


    if __name__ == "__main__":
        main()

Step 6. Run the Application

Open a terminal and navigate to the project root directory. To run the application, enter the following command:

    C:\Users\nagavo\source\repos\PythonChatApp\PDF_Chatbot> python main.py

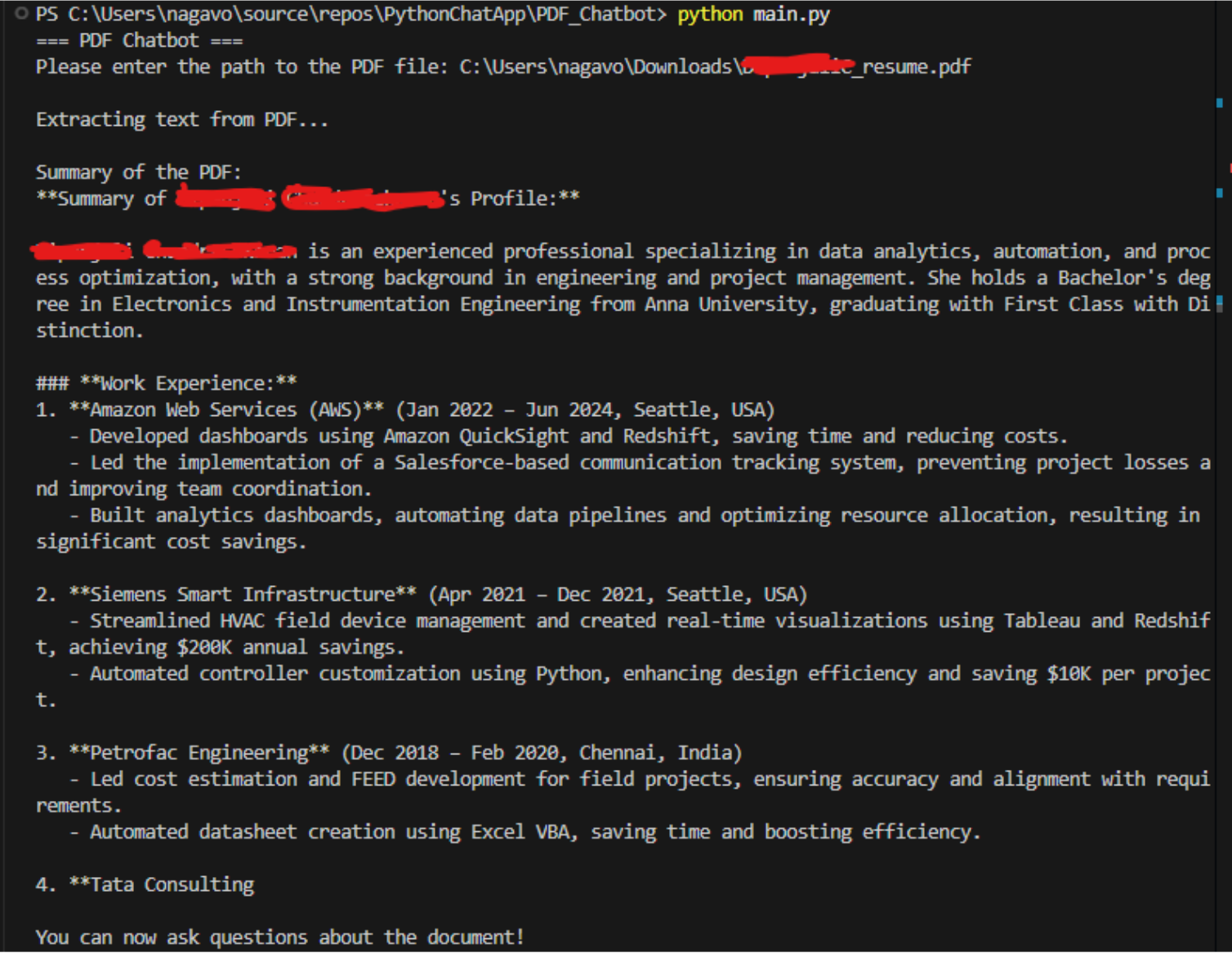

Enter the path to the PDF file at the prompt. In this example, I used a resume so that the chatbot could summarize the candidate's profile and experience. The text extracted from the file is sent to the GPT-4o model, which summarizes the contents as shown in Figure 3.

Figure 3. Summary of the PDF document in the GPT response
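Because the whole extracted text is placed into a single prompt, a long PDF may exceed the model's input limit. One possible workaround, sketched below, is to split the text into chunks and summarize each one separately. The helper `split_into_chunks` and its 8,000-character default are illustrative assumptions, not values taken from the original application or from any model's documented limits.

```python
def split_into_chunks(text, max_chars=8000):
    """Split text into chunks of at most max_chars, breaking at line ends."""
    chunks, current = [], ""
    for line in text.splitlines(keepends=True):
        # A single oversized line is cut into fixed-size pieces.
        while len(line) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(line[:max_chars])
            line = line[max_chars:]
        # Start a new chunk when adding this line would exceed the limit.
        if len(current) + len(line) > max_chars:
            chunks.append(current)
            current = ""
        current += line
    if current:
        chunks.append(current)
    return chunks
```

Each chunk could then be passed to summarize_text, with the partial summaries combined in one final summarization call.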

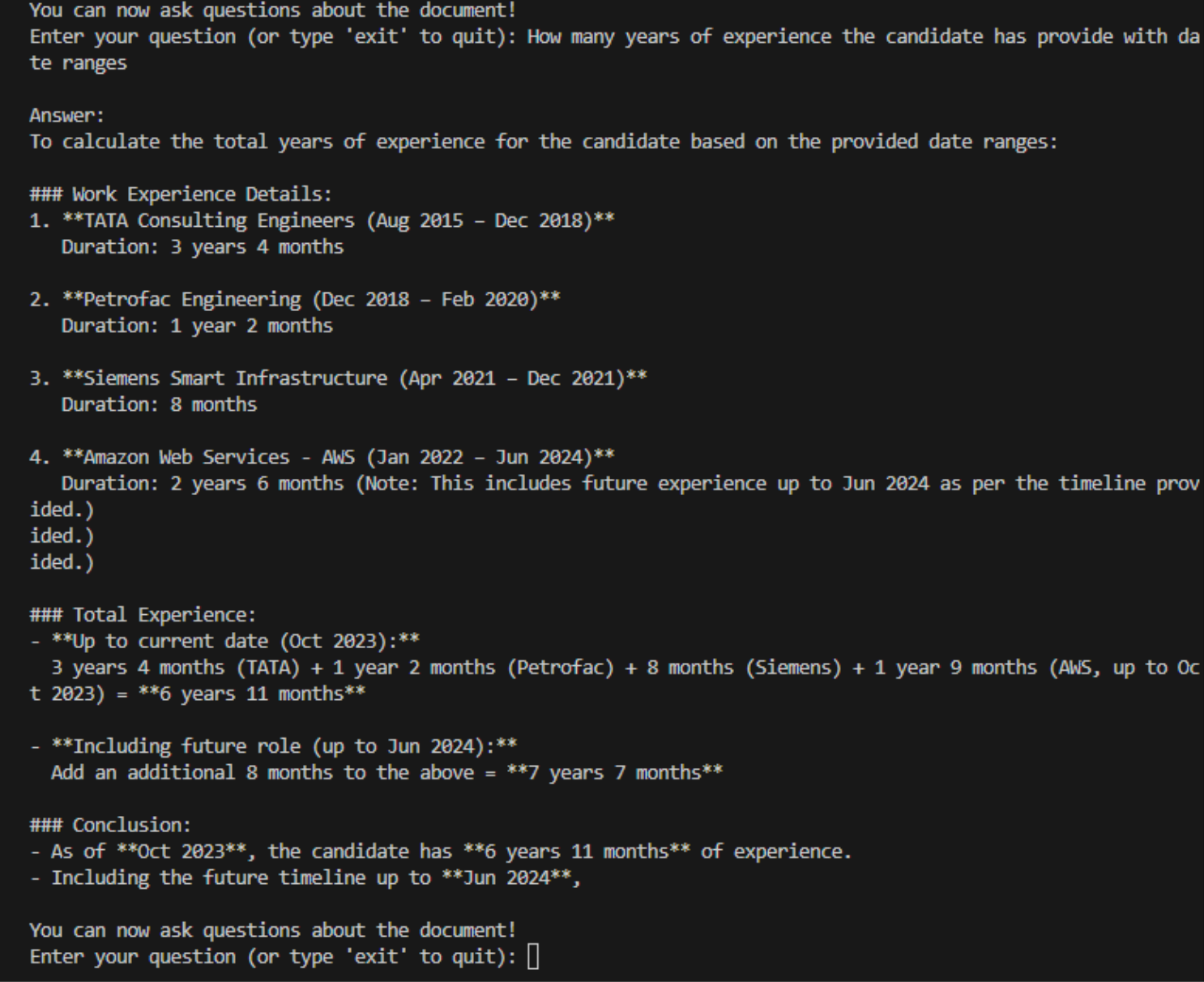

Let's ask some questions about the document, for example, "How many years of experience does the candidate have with date ranges?" Figure 4 shows the detailed response from the chatbot application.

Figure 4. Response from asking a specific question about the document

We can host this app on an e-commerce website and upload product and order information to it. This allows customers to ask specific questions about products or their orders, so that customer agents don't have to answer these questions manually. There are multiple ways we can leverage GenAI across industries; this is just one example.
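For a customer-facing deployment like this, it may help to instruct the model to answer only from the supplied document, which is one common way to reduce hallucinated answers. The sketch below builds a messages list in the same role/content shape used by ask_question_about_text; the function name `build_grounded_messages` and the exact prompt wording are assumptions made for illustration.

```python
def build_grounded_messages(document_text, question):
    """Build chat messages that restrict the answer to the document."""
    system_prompt = (
        "You are a helpful assistant. Answer only from the provided "
        "document. If the answer is not in the document, say you don't know."
    )
    user_prompt = (
        f"Document:\n{document_text}\n\n"
        f"Question: {question}"
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]
```

The returned list could be passed as the `messages` argument of the existing chat completion call in place of the inline list.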

Conclusion

Integrating GenAI into applications is no longer a luxury but a necessity for businesses to stay competitive. The adoption of GenAI offers numerous advantages, including increased productivity, improved decision making, and cost savings from automating repetitive work. It is also crucial to be aware of the challenges and risks associated with GenAI, such as hallucination, bias, and regulatory compliance, as it is easy to misuse AI-generated content. It is essential to adopt responsible AI practices and implement robust governance frameworks to ensure ethical and fair use of AI technologies. By doing so, organizations can unlock the full potential of GenAI while protecting their reputation and their customers' trust.

References:

- "AI regulations around the world: Trends, takeaways & what to watch heading into 2025" by Diligent Blog

- "Superagency in the workplace: Empowering people to unlock AI's full potential" by Hannah Mayer, et al.

- "Expanding AI's Impact With Organizational Learning" by Sam Ransbotham, et al., MIT Sloan Management Review

- "Embracing Responsible AI: Principles and Practices" by Naga Santhosh Reddy Vootukuri
