Large Language Models (LLMs) for Better Developer Learning of Your Product

Explore how to leverage LLMs (Large Language Models) and LLM apps for effective and efficient developer education, boosting the utilization of your product.


Developing a tech product is not just about coding and deployment. It is also about the learning journey that goes into building and using it. Especially if you have a developer-oriented product, it is about ensuring that developers understand your product at a deep level through the docs, tutorials, and how-to guides, improving both their own skills and the quality of the work they produce. Nowadays, AI can not only generate docs from code but also make it easy to find specific information or answer questions about your product through a chatbot, which improves the developer experience. This can be life-changing for project documentation maintainers.

This article explores how LLMs (Large Language Models) and LLM apps can be leveraged for effective and efficient developer education, which can boost the utilization of your product.

Understanding Large Language Models

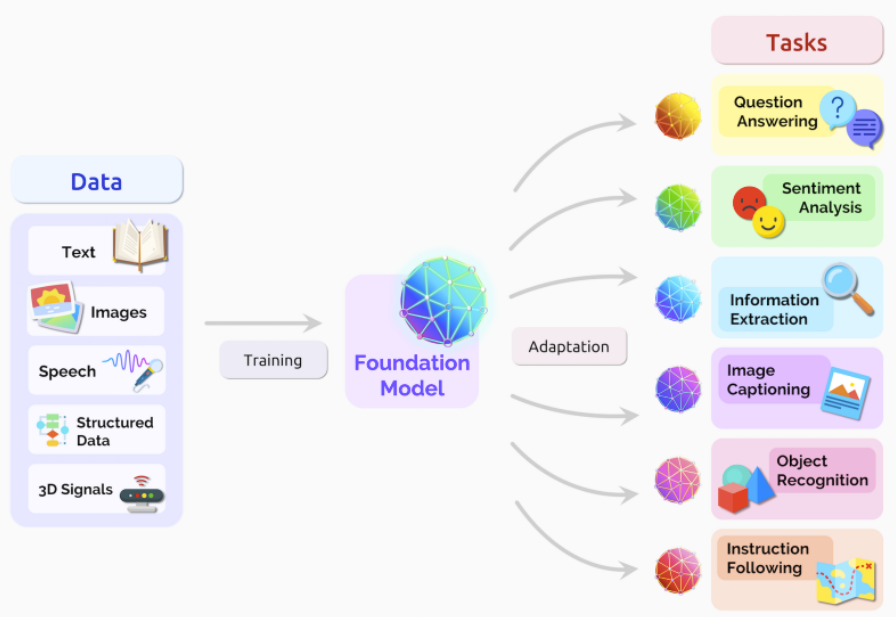

Let’s quickly recap what Large Language Models are. LLMs are like smart computer programs that are really good at understanding language. They are AI models trained on vast amounts of text data. An LLM uses this understanding to perform different tasks, such as creating content, looking up information, chatting, or helping organize your data.

To get an LLM to do a specific task, a user gives it one or more prompts through an application, which we will call an LLM app. A prompt could be a question, a command, a description, or some other piece of text. After receiving the prompt, the LLM generates output based on it, and the app uses that output to build a response for the user, such as an answer or newly created content.

On the technical side, LLMs are a specific kind of deep neural network trained mainly on text, although some are also trained on images, video, or audio. They are versatile and adaptable, which is why they are used in so many different areas. By now, many people have heard of the best-known LLM apps, such as ChatGPT, as well as related generative models such as the image generator DALL-E.

Key Challenges LLM Apps Can Solve in Developer Learning

When developers need to understand a new API, library, framework, or other developer tool, they usually consult the documentation first. Documentation serves as a map of the product, with instructions on how to use the tool successfully. Producing clear, detailed, and accessible documentation can be difficult, but doing so prevents misunderstanding, misuse, and, ultimately, the loss of potential users. Overly technical documentation may be inaccessible to less experienced developers, while overly simplified documentation may omit important details.

Inconsistent terminology, structure, or format can confuse readers and make documentation harder to follow. And even the best documentation is useless if developers can’t find what they need: poorly organized or hard-to-navigate docs turn finding the necessary information into a daunting task. In this context, the key challenges an LLM app can help solve are inconsistency and discoverability. The following sections look at what you can achieve by using an LLM app for developer learning.

LLMs in Developer Learning

Contextual Learning

LLMs can revolutionize the way developers learn about your product. Because LLMs are context-sensitive, they can provide relevant and personalized responses based on the information given to them. When integrated into a learning platform such as your docs, these models can deliver contextual explanations of the various features and functionalities of your product on demand. In effect, they act as a virtual tutor, giving developers the information they need exactly when they need it.
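To make the idea of contextual answers concrete, here is a minimal sketch of how an LLM app might ground a model’s response in your docs: it packs relevant doc snippets into the prompt ahead of the developer’s question. The `DocSnippet` type, the `build_contextual_prompt` function, the prompt wording, and the character budget are all illustrative assumptions, not part of any particular product; the resulting string would be sent to whichever LLM API you use.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class DocSnippet:
    """Illustrative type: one piece of documentation passed to the model as context."""
    title: str
    text: str


def build_contextual_prompt(question: str, snippets: list[DocSnippet], max_chars: int = 2000) -> str:
    """Assemble a prompt that grounds the model's answer in doc snippets.

    Snippets are added in order and the loop stops at the first one that
    would exceed the character budget, so pass the most relevant first.
    """
    context_parts = []
    used = 0
    for snippet in snippets:
        block = f"### {snippet.title}\n{snippet.text.strip()}\n"
        if used + len(block) > max_chars:
            break
        context_parts.append(block)
        used += len(block)
    context = "\n".join(context_parts)
    # Hypothetical instructions; tune the wording for your own product.
    return (
        "You are a documentation assistant for our product.\n"
        "Answer using only the context below. If the answer is not there, say so.\n\n"
        f"Context:\n{context}\n"
        f"Question: {question.strip()}\n"
        "Answer:"
    )


if __name__ == "__main__":
    docs = [
        DocSnippet("Installation", "Run pip install example-sdk."),
        DocSnippet("Auth", "Set the EXAMPLE_API_KEY variable."),
    ]
    print(build_contextual_prompt("How do I install the SDK?", docs))
```

Telling the model to answer only from the supplied context is what makes the reply specific to your product rather than a generic answer from its training data.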

Interactive Documentation

Traditional documentation can be static, hard to navigate, and not very user-friendly. LLMs can be used to create interactive, dynamic documentation that responds to developers’ queries in real time. Developers can ask specific questions about your product, and the language model can generate responses on the fly, simplifying the learning process.

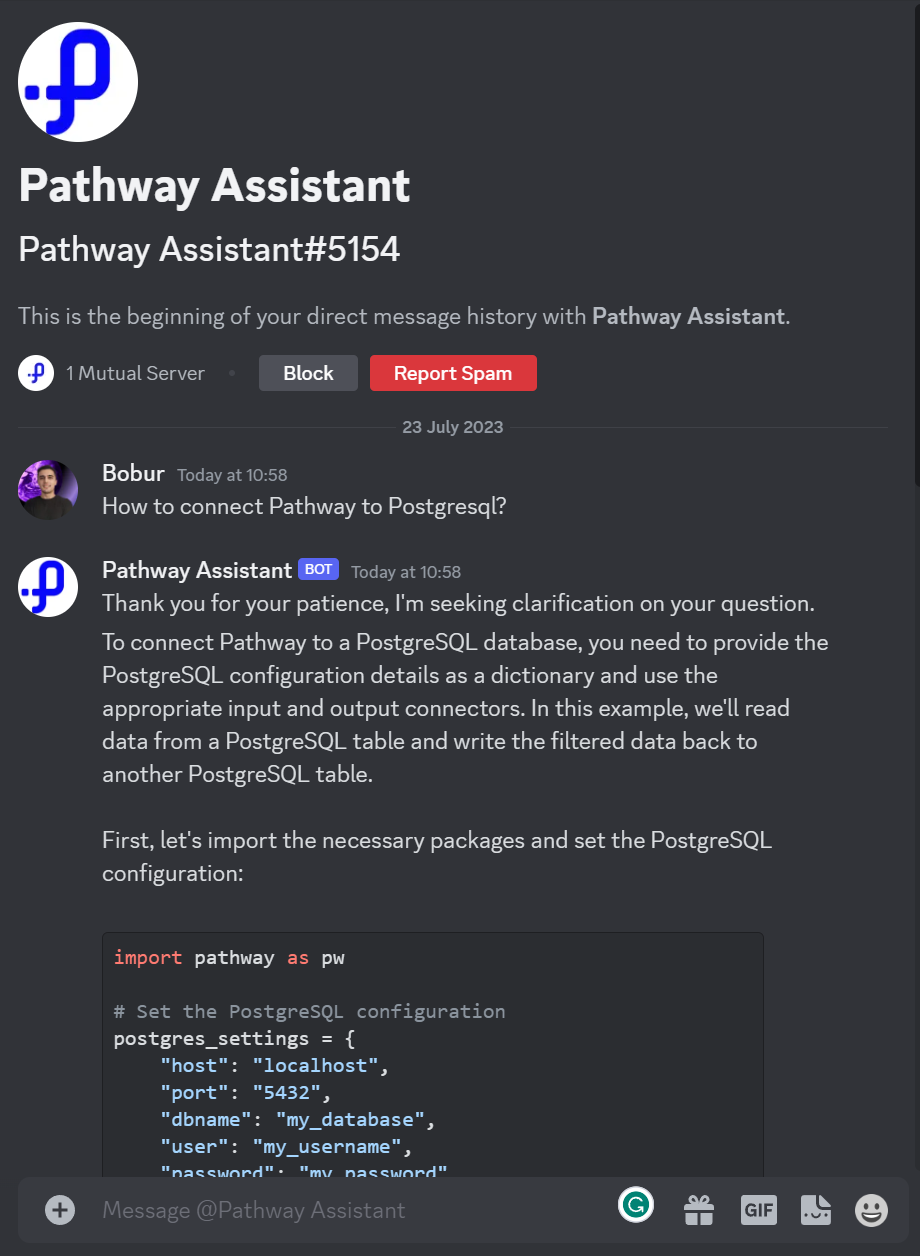

For example, Pathway engineers built a chatbot that answers questions about the Pathway documentation in real time; you can ask the bot assistant on Discord to see how it works.
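The lookup step behind such a bot can be sketched as a small in-memory search over doc sections. Production systems typically embed text with a neural embedding model and query a vector index; this sketch swaps in simple term-frequency vectors and cosine similarity so it runs with the Python standard library alone. `DocIndex` and its methods are hypothetical names for illustration.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-frequency vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class DocIndex:
    """Hypothetical in-memory index mapping doc section names to term vectors.

    Term frequencies stand in for the neural embeddings a real system would use.
    """

    def __init__(self) -> None:
        self.vectors: dict[str, Counter] = {}

    def add(self, section: str, text: str) -> None:
        """Index (or re-index) one documentation section."""
        self.vectors[section] = Counter(tokenize(text))

    def search(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        """Return up to k sections similar to the query, best first, skipping zero scores."""
        q = Counter(tokenize(query))
        scored = [(name, cosine(q, vec)) for name, vec in self.vectors.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return [pair for pair in scored[:k] if pair[1] > 0]
```

The top-ranked sections would then be passed to the model as context, so the answer reflects the docs rather than only what the model learned during training.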

Continued Learning

The technology industry is marked by continual change, which means docs change regularly. Developers might miss important documentation updates, which can lead to misconfigured code (for example, after an API change) or even cause them to stop using the project. An LLM-based bot, on the other hand, can automatically detect any changes in the documentation directory and update its vector index accordingly. This real-time reactivity keeps the app’s responses based on the most recent and relevant information available on the website.
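One simple way to keep an index in sync with a docs directory is to snapshot file hashes and compare them on each pass. This is a polling-based sketch, assuming Markdown docs (`*.md`) and an index that is just a dict from path to text; it is not how Pathway’s LLM app is implemented, since that app reacts to changes as a stream. `snapshot`, `diff_snapshots`, and `sync_index` are hypothetical helpers.

```python
from __future__ import annotations

import hashlib
from pathlib import Path


def snapshot(doc_dir: str) -> dict[str, str]:
    """Map each Markdown file under doc_dir (assumed *.md) to a SHA-256 hash of its contents."""
    root = Path(doc_dir)
    return {
        str(path.relative_to(root)): hashlib.sha256(path.read_bytes()).hexdigest()
        for path in sorted(root.rglob("*.md"))
        if path.is_file()
    }


def diff_snapshots(old: dict[str, str], new: dict[str, str]) -> dict[str, list[str]]:
    """Classify files as added, modified, or deleted between two snapshots."""
    return {
        "added": sorted(set(new) - set(old)),
        "modified": sorted(p for p in set(old) & set(new) if old[p] != new[p]),
        "deleted": sorted(set(old) - set(new)),
    }


def sync_index(index: dict[str, str], old: dict[str, str], doc_dir: str) -> dict[str, str]:
    """Update a hypothetical in-memory index (path -> text) and return the new snapshot.

    A real system would re-chunk and re-embed changed files here; this sketch stores raw text.
    """
    new = snapshot(doc_dir)
    changes = diff_snapshots(old, new)
    for rel in changes["added"] + changes["modified"]:
        index[rel] = (Path(doc_dir) / rel).read_text(encoding="utf-8")
    for rel in changes["deleted"]:
        index.pop(rel, None)
    return new
```

Calling `sync_index` on a schedule, passing in the snapshot from the previous call, keeps the index current. Hashing contents rather than checking timestamps avoids re-indexing files that were touched but not actually changed.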

Code Generation and Review

LLMs can generate and review code, which makes them an excellent learning tool for developers. Developers can input their code, and the language model can suggest improvements, catch bugs, or generate new code snippets. This gives developers a practical, hands-on way to learn about your product’s technical aspects. It is especially useful for open-source project maintainers, since many contributions come from the community and new contributors often need help writing certain kinds of code. By flagging potential bugs or errors, an LLM makes quality control more efficient and gives contributors immediate feedback, reducing the time between code submission and acceptance.

Implementing an LLM App

Using an open-source LLM app such as the one Pathway offers, you can integrate the capabilities mentioned above into your docs platform. According to its authors, the solution addresses the key challenges of bringing LLM applications to production, as well as the ambiguity of natural language. The LLM App architecture relies on existing enterprise document storage: no disk copies are created, and no vector database is needed. For an in-depth look at the app’s underlying code, visit the llm-app GitHub repository.

Conclusion

Effective documentation (quickstarts, how-to guides, or tutorials) is essential to the success of developer-oriented products. Although creating such documentation comes with its share of challenges, addressing them with a modern LLM app can lead to a better user experience, improved product adoption, and, ultimately, more satisfied developers.

Published at DZone with permission of Bobur Umurzokov.

