Log4j Thread Deadlock - A Case Study

Join the DZone community and get the full member experience.

Join For Free- Java EE server: Oracle Weblogic Portal 10.0

- OS: Solaris 10

- JDK: Oracle/Sun HotSpot JVM 1.5

- Logging API: Apache Log4j 1.2.15

- RDBMS: Oracle 10g

- Platform type: Web Portal

- Quest Foglight for Java (monitoring and alerting)

- Java VM Thread Dump (thread race analysis)

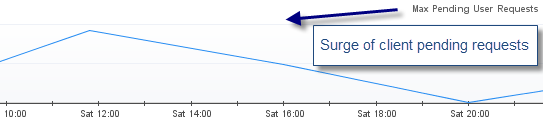

- What is the client impact? HIGH

- Recent change of the affected platform? Yes, a recent deployment was performed involving minor content changes and some Java libraries changes & refactoring

- Any recent traffic increase to the affected platform? No

- Since how long this problem has been observed? New problem observed following the deployment

- Did a restart of the Weblogic server resolve the problem? No, any restart attempt did result in an immediate surge of threads

- Did a rollback of the deployment changes resolve the problem? Yes

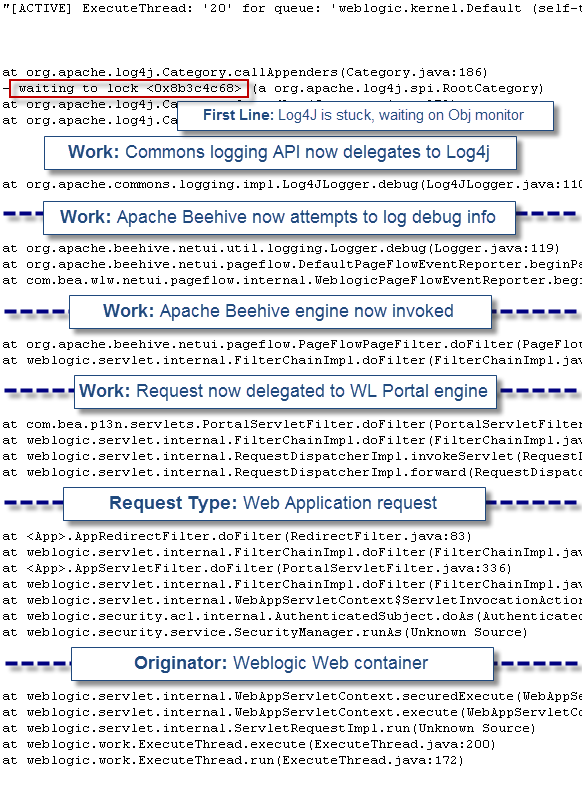

As usual, thread problems require proper thread dump analysis in order to pinpoint the source of threads contention. Lack of this critical analysis skill will prevent you to go any further in the root cause analysis.

The first step of the analysis was to perform a fast scan of all stuck threads and pinpoint a problem “pattern”. We found 250 threads stuck in the following execution path:

"[ACTIVE] ExecuteThread: '20' for queue: 'weblogic.kernel.Default (self-tuning)'" daemon prio=10 tid=0x03c4fc38 nid=0xe6 waiting for monitor entry [0x3f99e000..0x3f99f970]

at org.apache.log4j.Category.callAppenders(Category.java:186)

- waiting to lock <0x8b3c4c68> (a org.apache.log4j.spi.RootCategory)

at org.apache.log4j.Category.forcedLog(Category.java:372)

at org.apache.log4j.Category.log(Category.java:864)

at org.apache.commons.logging.impl.Log4JLogger.debug(Log4JLogger.java:110)

at org.apache.beehive.netui.util.logging.Logger.debug(Logger.java:119)

at org.apache.beehive.netui.pageflow.DefaultPageFlowEventReporter.beginPageRequest(DefaultPageFlowEventReporter.java:164)

at com.bea.wlw.netui.pageflow.internal.WeblogicPageFlowEventReporter.beginPageRequest(WeblogicPageFlowEventReporter.java:248)

at org.apache.beehive.netui.pageflow.PageFlowPageFilter.doFilter(PageFlowPageFilter.java:154)

at weblogic.servlet.internal.FilterChainImpl.doFilter(FilterChainImpl.java:42)

at com.bea.p13n.servlets.PortalServletFilter.doFilter(PortalServletFilter.java:336)

at weblogic.servlet.internal.FilterChainImpl.doFilter(FilterChainImpl.java:42)

at weblogic.servlet.internal.RequestDispatcherImpl.invokeServlet(RequestDispatcherImpl.java:526)

at weblogic.servlet.internal.RequestDispatcherImpl.forward(RequestDispatcherImpl.java:261)

at <App>.AppRedirectFilter.doFilter(RedirectFilter.java:83)

at weblogic.servlet.internal.FilterChainImpl.doFilter(FilterChainImpl.java:42)

at <App>.AppServletFilter.doFilter(PortalServletFilter.java:336)

at weblogic.servlet.internal.FilterChainImpl.doFilter(FilterChainImpl.java:42)

at weblogic.servlet.internal.WebAppServletContext$ServletInvocationAction.run(WebAppServletContext.java:3393)

at weblogic.security.acl.internal.AuthenticatedSubject.doAs(AuthenticatedSubject.java:321)

at weblogic.security.service.SecurityManager.runAs(Unknown Source)

at weblogic.servlet.internal.WebAppServletContext.securedExecute(WebAppServletContext.java:2140)

at weblogic.servlet.internal.WebAppServletContext.execute(WebAppServletContext.java:2046)

at weblogic.servlet.internal.ServletRequestImpl.run(Unknown Source)

at weblogic.work.ExecuteThread.execute(ExecuteThread.java:200)

at weblogic.work.ExecuteThread.run(ExecuteThread.java:172)## org.apache.log4j.Category

/**

* Call the appenders in the hierrachy starting at <code>this</code>. If no

* appenders could be found, emit a warning.

*

* <p>

* This method calls all the appenders inherited from the hierarchy

* circumventing any evaluation of whether to log or not to log the

* particular log request.

*

* @param event

* the event to log.

*/

public void callAppenders(LoggingEvent event) {

int writes = 0;

for (Category c = this; c != null; c = c.parent) {

// Protected against simultaneous call to addAppender,

// removeAppender,...

synchronized (c) {

if (c.aai != null) {

writes += c.aai.appendLoopOnAppenders(event);

}

if (!c.additive) {

break;

}

}

}

if (writes == 0) {

repository.emitNoAppenderWarning(this);

}- Rollback the refactoring and split Log4j calls back between parent and child classloader

- Reduce logging level for some appenders from DEBUG to WARNING

Published at DZone with permission of Pierre - Hugues Charbonneau, DZone MVB. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments