Mainframe Development for the "No Mainframe" Generation

It is quite possible to develop for a mainframe platform without being aware of it. The conditions necessary to achieve this are rather simple.


Powerful but Unknown

Few people think of the mainframe as a modern digital environment, but it is in fact a powerful and widely used platform. When you shop or do your taxes, there is probably a mainframe involved at some point. As a developer, you are likely to "encounter" one during your career, even if you never actually deal with "green screens." However, without mainframe knowledge, how do you proceed? Someone once said: "All the people with relevant knowledge are dead or retired." Even though that is not entirely true, learning how to "handle" the mainframe can indeed be difficult.

Mainframe in a Laboratory

One of the software development departments at the Dutch IT company Ordina is involved in mainframe technology. Apart from mainframe development, it focuses on three points:

- Automation

- External data access

- Discovery and impact analysis

My Ordina colleague Patrick Hoving and I are working on the first two points. One of our challenging questions is the following: "Is it possible to integrate a mainframe in your IT landscape in such a way that IT professionals without specific knowledge can deal with it?"

The solution we chose is an open-source-based environment, enabling fully automated CI/CD pipelines, in which developers can use all the modern tools they want to create software that will run on the mainframe.

The VPN-protected laboratory environment that we constructed contains the following elements:

- Mainframe emulating software (ADCD): released by IBM and running on Linux, it contains all the features of a "real" mainframe.

- GitLab: a web-based platform built around the distributed version control system Git, used for version management and running CI/CD pipelines.

- Robot Framework: used for automatic testing.

What can we do in this lab?

Develop

To be able to develop, test, and deploy, we started by creating a small but useful application: one that creates mainframe user accounts using RACF, the z/OS access management application. While working for several customers, we noticed that requests for mainframe access usually take a while, and we wanted to be able to create "our" users automatically and fast.

Our starting point for building applications was that no development should be done on the mainframe itself. We chose to build our user provisioning application in bash shell commands on a local Linux machine because that was easy for us. But we could have picked any other language, such as Python, Java, or C++, or even built a mobile app, as long as it supported API calls. In our bash scripts, we used curl to make those calls to IBM's built-in z/OSMF API.

# base curl command for calls to z/OSMF

Ccurlcmdbase="curl --silent --insecure --header 'Authorization: Basic $(cat ${Czoscredentialfile})'"

# adding the parameters for the GET request

curlcmd=${Ccurlcmdbase}
curlcmd+=" --request \"GET\""
curlcmd+=" \"${Capi_files}/ds?dslevel=${accountnaamupper}\""

With simple GET and POST commands, it is possible to have code executed and data transferred to and from the mainframe. The whole application can be executed by typing three commands: one to add a user on the mainframe, one to delete a user, and one to display a list of available users.
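Building the command as one long string works, but it depends on nested quoting. As an illustration only, the same GET request could be sketched as two small shell functions. The function names, the example host, and the `X-CSRF-ZOSMF-HEADER` line are assumptions and do not come from the scripts above. The credential file is assumed to hold the base64-encoded `user:password` string, as in the snippet above.

```shell
# Hypothetical helpers: the names and arguments are illustrative assumptions.

# Print the REST URL that lists the data sets under an account name.
# $1: base URL of the z/OSMF files API   $2: account name (any case)
zosmf_ds_list_url() (
  account_upper=$(printf '%s' "$2" | tr '[:lower:]' '[:upper:]')
  printf '%s/ds?dslevel=%s\n' "$1" "$account_upper"
)

# Send the GET request with curl.
# $1: base URL   $2: credential file   $3: account name
zosmf_list_datasets() (
  credentials=$(cat "$2") || exit 1
  # --insecure skips TLS certificate checks: acceptable in a lab only.
  # The X-CSRF-ZOSMF-HEADER line is an assumption; z/OSMF REST services
  # commonly expect it, so check what your installation requires.
  curl --silent --insecure \
    --header "Authorization: Basic ${credentials}" \
    --header "X-CSRF-ZOSMF-HEADER: true" \
    --request GET \
    "$(zosmf_ds_list_url "$1" "$3")"
)
```

A call such as `zosmf_list_datasets "https://zos.example/zosmf/restfiles" ~/.zoscred newuser` would then query the data sets under `NEWUSER`. Keeping the URL construction in its own function means it can be checked without touching the mainframe.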

Below is an overview of our laboratory environment, indicating the application and the architecture. The red arrows represent the manual actions that are present in a "traditional" production environment. The blue arrows indicate the automated flow as it runs over the parts of our environment.

Test

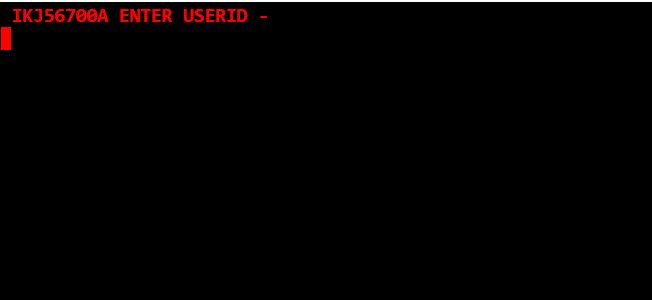

Since we did not want to test the application by typing in the commands over and over again with test user credentials, we looked for a tool that could do this for us. We decided to use Robot Framework. This Python-based tool meets our "open-source" requirement, and it runs on several platforms. With Robot Framework you can test just about anything, and many libraries are available. For the tests on the mainframe (scenarios like "user tries to log in with the credentials they just received") we used the Mainframe3270 library. This library allows us to walk through the mainframe screens. It also creates screenshots, so after testing, it is perfectly clear what happened. Below are some code snippets and screenshots from the test execution.

TC_logon
    # On the VTAM screen: choose TSO
    Write Bare    L TSO
    Send Enter
    Wait Field Detected
    Take Screenshot
    Sleep    ${g_demodelay}

    # Test whether the login prompt has appeared. If so, provide the user name
    ${l_3270output}    Read    1    2    22
    Should Be True    "${l_3270output}" == "IKJ56700A ENTER USERID"
    Write Bare    ${user_name}
    Send Enter
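A test case like this can be started from the command line, which is also how a pipeline job would run it. The wrapper below is a minimal sketch, not our actual script: the function name, the suite path `tests/logon.robot`, the `results` directory, and the `ROBOT_BIN` override are all assumptions. It passes the user name to the suite through Robot Framework's `--variable` option, so that `${user_name}` in the test case receives its value.

```shell
# Hypothetical wrapper around the Robot Framework command-line runner.
# $1: user name handed to the suite as ${user_name}
# $2: suite file (optional; the default path is an assumption)
# ROBOT_BIN can be set to another executable, for example "echo" for a dry run.
run_logon_test() (
  user_name=$1
  suite=${2:-tests/logon.robot}
  "${ROBOT_BIN:-robot}" \
    --variable "user_name:${user_name}" \
    --outputdir results \
    "$suite"
)
```

Setting `ROBOT_BIN=echo` before calling `run_logon_test TESTUSR` prints the arguments instead of starting a test run, which is a cheap way to inspect the command without a mainframe session.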

Deploy

While developing and testing, we initially used a Git repository for version control of both the software and the test assets, and we ran manual tests before merging our changes to the master branch. After a number of those manual operations, we looked for a tool that would enable continuous integration and continuous deployment (CI/CD). GitLab seemed a natural choice: it met our conditions, it has a large user base, and it is well supported. Moreover, we found it easy to build pipelines to deploy the application and the testware. Now, when we make a change in our user provisioning application, we simply merge the changes to our master branch and run the deployment pipelines, which show the tests and their results. Along the way, the mainframe is "hit" by several requests and a new user is created on it, but even though we know the mainframe is there, we never have to type a single command on a "green screen." If we handed over our application to a new colleague, they would not have to know that there is a mainframe behind it.
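To give an idea of what a pipeline job could run, here is a minimal shell sketch of an "add user, test logon, delete user" sequence. It is an assumption about how such a job might be organized, not a copy of our pipeline: `ADD_CMD`, `TEST_CMD`, and `DELETE_CMD` are hypothetical hooks that stand for the provisioning commands and the test runner.

```shell
# Hypothetical job step: create a test user, run the test, always clean up.
# ADD_CMD, TEST_CMD and DELETE_CMD name the executables to call (assumptions).
# $1: name of the temporary test user
run_provisioning_check() (
  user=$1
  # Without a user there is nothing to test, so stop right away.
  "$ADD_CMD" "$user" || exit 1
  # Remember the test result, but do not stop yet: the user must be removed.
  status=0
  "$TEST_CMD" "$user" || status=$?
  "$DELETE_CMD" "$user" || exit 1
  # The job fails exactly when the test failed (or cleanup failed above).
  exit "$status"
)
```

Because the function returns the test's exit status, a CI job that calls it is marked as failed when the test fails, while the temporary user is still removed from the mainframe.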

