Mastering Scalability in Spring Boot

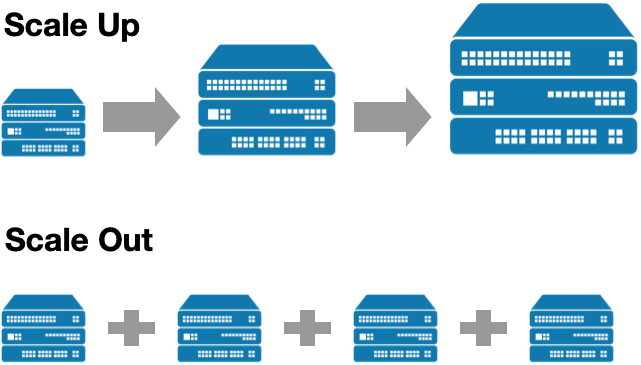

Scalability ensures systems handle growth in traffic or data while maintaining performance. This can be achieved via vertical scaling or horizontal scaling.


Scalability is a fundamental concept in both technology and business. It refers to the ability of a system, network, or organization to handle a growing amount of work, or its potential to grow to accommodate that work. This characteristic is crucial for maintaining performance and efficiency as demand increases.

In this article, we will explore the definition of scalability, its importance, types, methods to achieve it, and real-world examples.

What Is Scalability in System Design?

Scalability is the capacity of a system to grow and manage increasing workloads without compromising performance. As user traffic, data volume, or computational demands rise, a scalable system can maintain or even improve its performance. The essence of scalability is the ability to adapt to growth without requiring a complete redesign or a significant resource investment.

Why This Is Important

- Managing growth. Scalable systems can efficiently handle more users and data without sacrificing speed or reliability. This is particularly important for businesses aiming to expand their customer base.

- Performance enhancement. By distributing workloads across multiple servers or resources, scalable systems can improve overall performance, leading to faster processing times and better user experiences.

- Cost-effectiveness. Scalable solutions allow businesses to adjust resources according to demand, helping avoid unnecessary expenditures on infrastructure.

- Availability assurance. Scalable systems, especially horizontally scaled ones with redundant instances, help keep services operational during unexpected traffic spikes or component failures. This is essential for mission-critical applications.

- Encouraging innovation. A scalable architecture supports the development of new features and services by minimizing infrastructure constraints.

Types of Scalability in General

- Vertical scaling (scaling up). This involves enhancing the capacity of existing hardware or software components. For example, upgrading a server's CPU or adding more RAM allows it to handle increased loads without changing the overall architecture.

- Horizontal scaling (scaling out). This method involves adding more machines or instances to distribute the workload. For instance, cloud services allow businesses to quickly add more servers as needed.

Challenges

- Complexity. Designing scalable systems can be complex and may require significant planning and expertise.

- Cost. Initial investments in scalable technologies can be high, although they often pay off in the long run through improved efficiency.

- Performance bottlenecks. As systems scale, new bottlenecks may emerge that need addressing, such as database limitations or network congestion.

Scalability in Spring Boot Projects

Scalability refers to the ability of an application to handle growth — whether in terms of user traffic, data volume, or transaction loads — without compromising performance. In the context of Spring Boot, scalability can be achieved through both vertical scaling (enhancing existing server capabilities) and horizontal scaling (adding more instances of the application).

Key Strategies

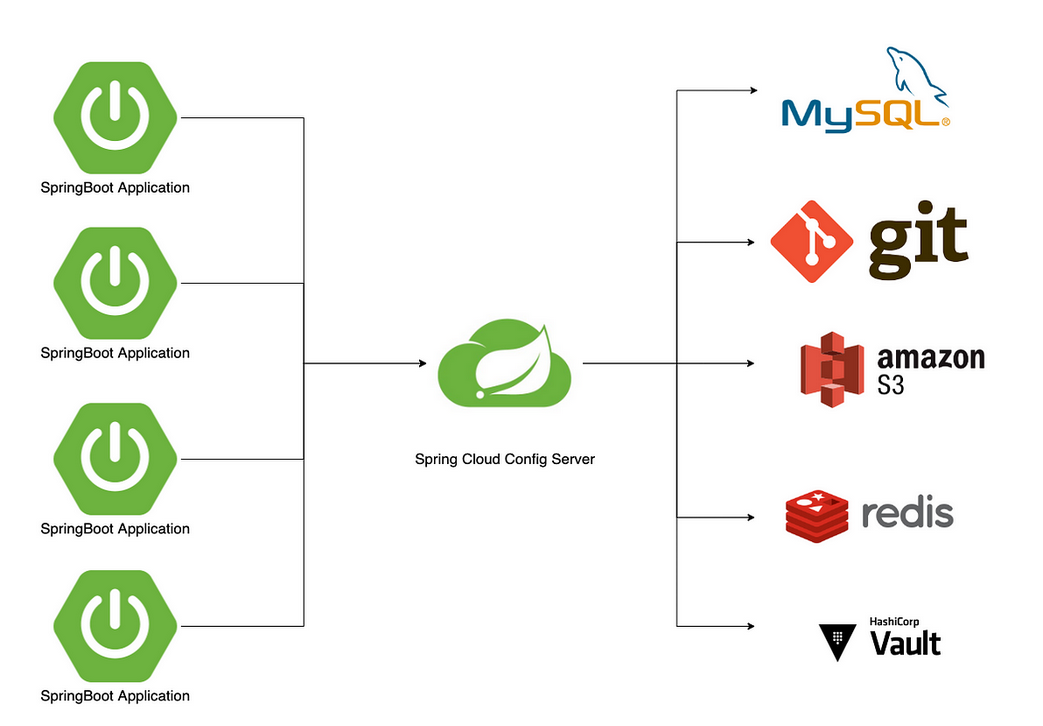

Microservices Architecture

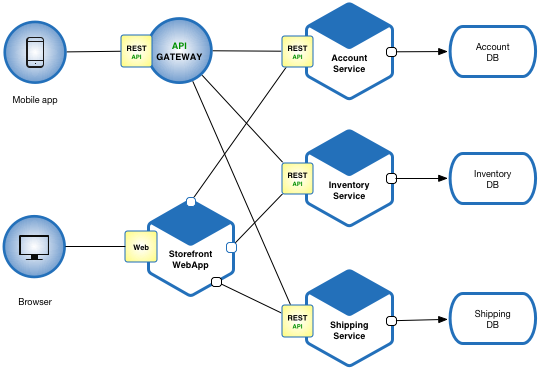

- Independent services. Break your application into smaller, independent services that can be developed, deployed, and scaled separately. This approach allows for targeted scaling; if one service experiences high demand, it can be scaled independently without affecting others.

- Spring Cloud integration. Use Spring Cloud to simplify microservices development. It provides tools for service discovery, load balancing, and circuit breakers, which improve resilience and performance under load.
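The following plain-Java sketch shows the circuit-breaker idea concretely. The class SimpleCircuitBreaker and its parameters are hypothetical and do not reflect the API of Spring Cloud Circuit Breaker or Resilience4j. It only illustrates the state machine such libraries implement:

- After a configured number of consecutive failures, the breaker opens and returns a fallback immediately.
- After a cooldown, it lets a trial call through.

```java
import java.util.function.LongSupplier;
import java.util.function.Supplier;

// Hypothetical minimal circuit breaker; not the Spring Cloud Circuit Breaker or Resilience4j API.
class SimpleCircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int failureThreshold; // consecutive failures before the circuit opens
    private final long openMillis;      // how long to fail fast before allowing a trial call
    private final LongSupplier clock;   // injectable time source in milliseconds (eases testing)
    private State state = State.CLOSED;
    private int consecutiveFailures = 0;
    private long openedAt = 0;

    SimpleCircuitBreaker(int failureThreshold, long openMillis, LongSupplier clock) {
        this.failureThreshold = failureThreshold;
        this.openMillis = openMillis;
        this.clock = clock;
    }

    synchronized State state() {
        return state;
    }

    // Runs remoteCall unless the circuit is open; returns the fallback when skipped or failed.
    // Synchronized for simplicity; a production breaker would avoid holding a lock during the call.
    synchronized <T> T call(Supplier<T> remoteCall, Supplier<T> fallback) {
        if (state == State.OPEN) {
            if (clock.getAsLong() - openedAt < openMillis) {
                return fallback.get();   // fail fast while open
            }
            state = State.HALF_OPEN;     // cooldown elapsed: allow a trial call
        }
        try {
            T result = remoteCall.get();
            state = State.CLOSED;        // success closes the circuit
            consecutiveFailures = 0;
            return result;
        } catch (RuntimeException e) {
            consecutiveFailures++;
            if (state == State.HALF_OPEN || consecutiveFailures >= failureThreshold) {
                state = State.OPEN;      // trip (or re-trip) the breaker
                openedAt = clock.getAsLong();
            }
            return fallback.get();
        }
    }

    public static void main(String[] args) {
        long[] now = {0};
        SimpleCircuitBreaker breaker = new SimpleCircuitBreaker(3, 1_000, () -> now[0]);
        for (int i = 0; i < 3; i++) {
            breaker.call(() -> { throw new IllegalStateException("downstream down"); }, () -> "fallback");
        }
        System.out.println(breaker.state()); // OPEN
        now[0] += 1_500;                     // cooldown elapsed
        System.out.println(breaker.call(() -> "ok", () -> "fallback") + " " + breaker.state()); // ok CLOSED
    }
}
```

Failing fast while the circuit is open keeps a struggling downstream service from tying up threads in the caller. In a Spring Cloud application you would normally use a library implementation rather than hand-rolling this logic.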

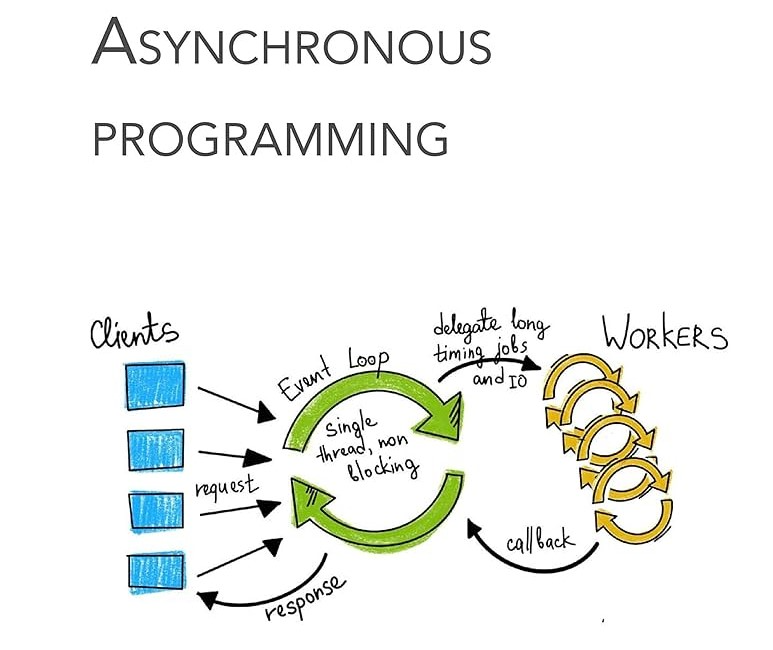

Asynchronous Processing

- Implement asynchronous processing to avoid blocked threads and improve response times. Use features like CompletableFuture or message queues (e.g., RabbitMQ) to handle long-running tasks without blocking the main application thread.

- Asynchronous processing allows tasks to be executed independently of the main program flow. This means that tasks can run concurrently, enabling the system to handle multiple operations simultaneously. Unlike synchronous processing, where tasks are completed one after another, asynchronous processing helps in reducing idle time and improving efficiency. This approach is particularly advantageous for tasks that involve waiting, such as I/O operations or network requests. By not blocking the main execution thread, asynchronous processing ensures that systems remain responsive and performant.
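Here is a minimal sketch of the CompletableFuture approach using only the JDK. Two simulated I/O calls run concurrently on a dedicated executor and are combined with thenCombine. The calls are the hypothetical fetchProfile and fetchOrders, which just sleep for 200 ms. Because they overlap, the total wait is roughly one call's latency instead of two.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class AsyncAggregation {
    // Hypothetical blocking calls; each sleeps 200 ms to simulate a remote service or database.
    static String fetchProfile(String userId) {
        pause(200);
        return "profile:" + userId;
    }

    static List<String> fetchOrders(String userId) {
        pause(200);
        return List.of("order-1", "order-2");
    }

    // Starts both calls concurrently on the given executor and combines the results.
    static String loadDashboard(String userId, ExecutorService ioPool) {
        CompletableFuture<String> profile =
                CompletableFuture.supplyAsync(() -> fetchProfile(userId), ioPool);
        CompletableFuture<List<String>> orders =
                CompletableFuture.supplyAsync(() -> fetchOrders(userId), ioPool);
        return profile
                .thenCombine(orders, (p, o) -> p + " orders=" + o.size())
                .join(); // blocks only for this demo; a controller could return the future instead
    }

    private static void pause(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        ExecutorService ioPool = Executors.newFixedThreadPool(4);
        long start = System.nanoTime();
        System.out.println(loadDashboard("42", ioPool)); // profile:42 orders=2
        System.out.println("elapsed ms: " + (System.nanoTime() - start) / 1_000_000); // about 200, not 400
        ioPool.shutdown();
    }
}
```

The join() call blocks the calling thread to keep the demo simple. In a Spring MVC controller, you can instead return the CompletableFuture itself, which lets the servlet container release the request thread until the result is ready. A dedicated executor, rather than the default ForkJoinPool.commonPool(), keeps blocking I/O from starving other asynchronous work.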

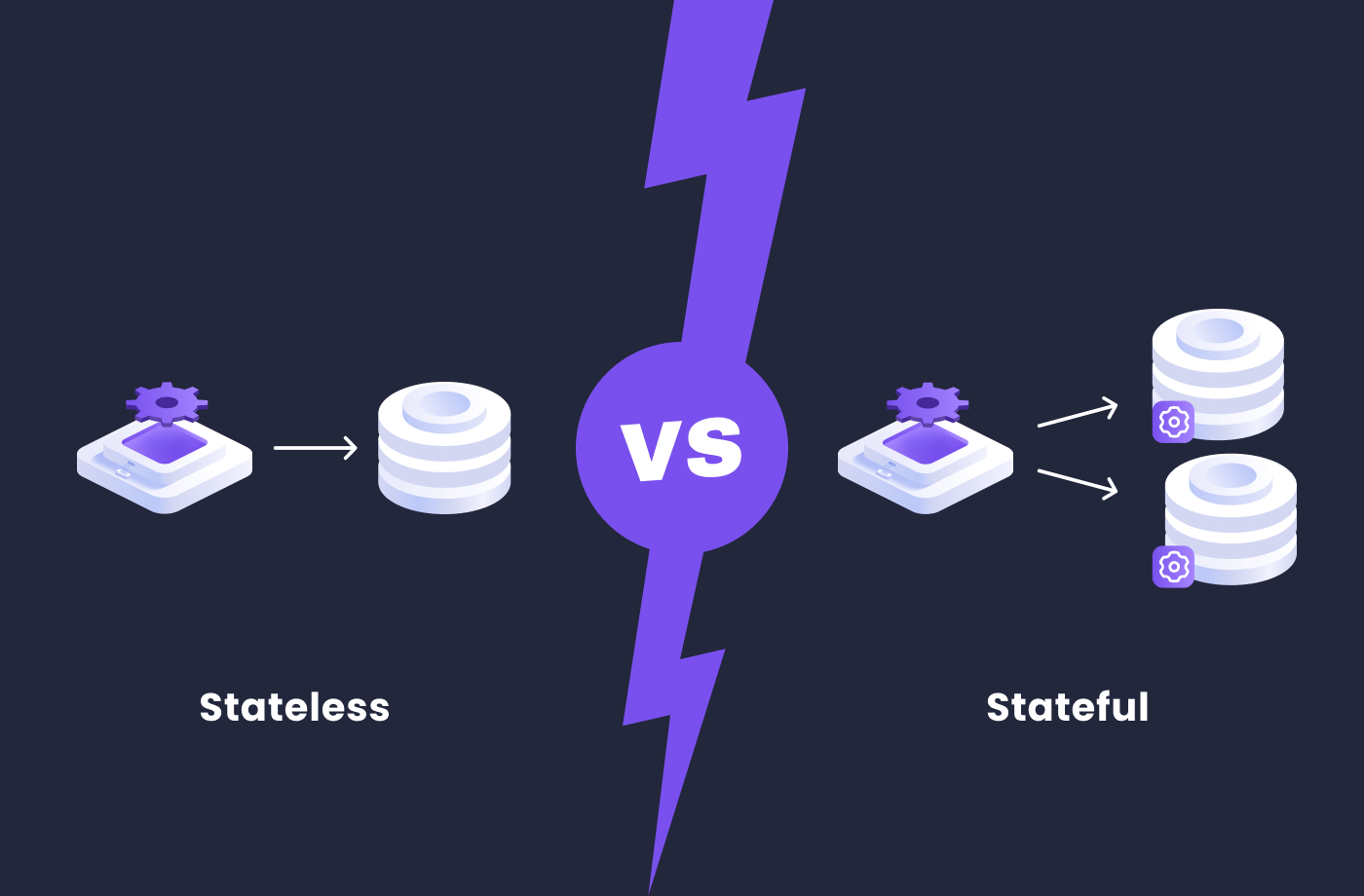

Stateless Services

- Design your services to be stateless, meaning they do not retain any client data between requests. This simplifies scaling since any instance can handle any request without needing session information.

- There is no stored knowledge of, or reference to, past transactions; each transaction is handled as if from scratch, for the first time. Stateless applications typically provide a single service or function and handle short-lived requests, like those served by content delivery networks (CDNs), web servers, or print servers.
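The difference is easiest to see in code. In this plain-Java sketch, CartHandler keeps no per-client fields. Cart state lives in a shared store, so two handler instances see the same data; they stand in for two application instances behind a load balancer. SessionStore and InMemorySharedStore are hypothetical stand-ins for an external store such as Redis or a database.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

class StatelessDemo {
    // Hypothetical external store; in production this might be Redis, a database, or Spring Session.
    interface SessionStore {
        Map<String, Integer> cartSizes();
    }

    static class InMemorySharedStore implements SessionStore {
        private final Map<String, Integer> carts = new ConcurrentHashMap<>();

        public Map<String, Integer> cartSizes() {
            return carts;
        }
    }

    // Stateless handler: it holds no per-client data, so any instance can serve any request.
    static class CartHandler {
        private final SessionStore store;

        CartHandler(SessionStore store) {
            this.store = store;
        }

        // Adds one item to the session's cart and returns the new cart size.
        int addItem(String sessionId) {
            return store.cartSizes().merge(sessionId, 1, Integer::sum);
        }
    }

    public static void main(String[] args) {
        SessionStore shared = new InMemorySharedStore();
        CartHandler instanceA = new CartHandler(shared); // e.g. first application instance
        CartHandler instanceB = new CartHandler(shared); // e.g. second application instance
        instanceA.addItem("session-1");
        int size = instanceB.addItem("session-1");      // next request lands on the other instance
        System.out.println("cart size = " + size);      // cart size = 2
    }
}
```

Suppose CartHandler instead kept a map of carts in its own field. A request routed to the second instance would then see an empty cart. That is what forces sticky sessions and makes horizontal scaling harder.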

Database Scalability

Database scalability is the ability of a database to handle increasing amounts of data, numbers of users, and types of requests without sacrificing performance or availability. A scalable database meets these growing demands in one of three ways: by adding resources (hardware or software), by optimizing its design and configuration, or by combining both strategies.

Types of Databases

1. SQL Databases (Relational Databases)

- Characteristics: SQL databases are known for robust data integrity and support complex queries.

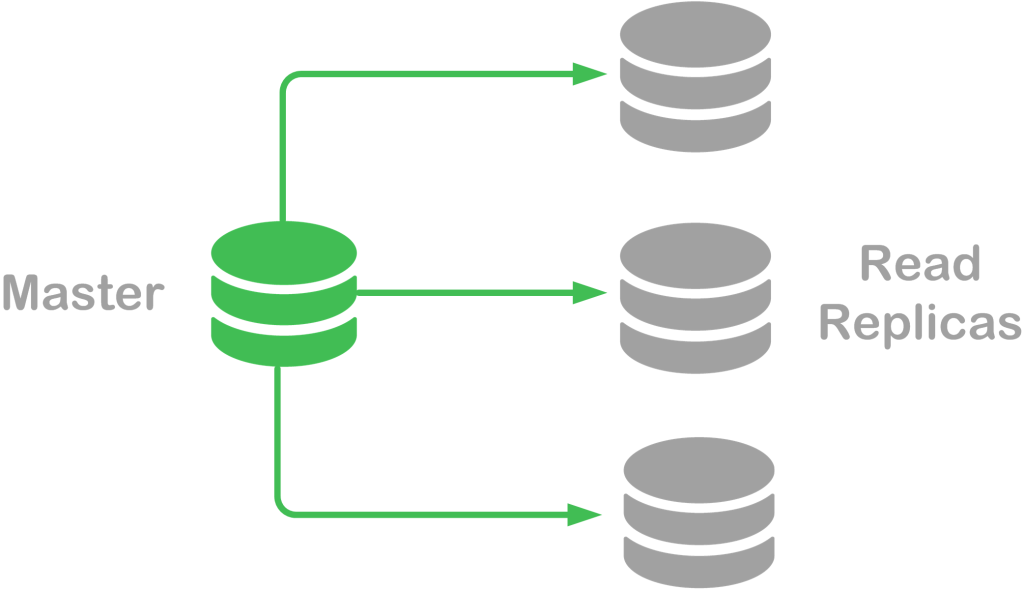

- Scalability: They can be scaled both vertically by upgrading hardware and horizontally through partitioning and replication.

- Examples: PostgreSQL supports advanced features like indexing and partitioning.

2. NoSQL Databases

- Characteristics: Flexible schema designs allow for handling unstructured or semi-structured data efficiently.

- Scalability: Designed primarily for horizontal scaling using techniques like sharding.

- Examples: MongoDB uses sharding to distribute large datasets across multiple servers.

Below are some techniques that enhance database scalability:

- Use indexes. Indexes speed up queries by letting the database locate matching rows without scanning the entire table. This can significantly improve performance, particularly for large databases. In TimescaleDB, indexes work just like PostgreSQL indexes.

- Partition your data. Partitioning divides a large table into smaller, more manageable parts. This can improve performance because queries that filter on the partition key only need to read the relevant partitions. Partition size matters, so test candidate sizes against your own workload.

- Use buffer cache. In PostgreSQL, the buffer cache keeps frequently accessed data pages in memory, which can significantly improve performance. This is particularly useful for read-heavy workloads. The cache is always enabled, but its size can be tuned (for example, through the shared_buffers setting).

- Consider data distribution. In distributed databases, data distribution or sharding is an extension of partitioning. It turns the database into smaller, more manageable partitions and then distributes (shards) them across multiple cluster nodes. This can improve scalability by allowing the database to handle more data and traffic. However, sharding also requires more design work up front to work correctly.

- Use a load balancer. Load balancing distributes traffic across multiple database servers to improve performance and scalability. A load balancer can route each query to an appropriate server based on the workload. With replicated databases, however, this typically works only for read-only queries, because writes must go to the primary. Combining load balancing with sharding usually requires additional tooling, since each query must also reach the shard that holds its data.

- Optimize queries. Optimizing queries involves tuning them to improve performance and reduce the load on the database. This can include rewriting queries, creating indexes, and partitioning data.
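The sharding technique from the list above can be sketched as a small routing function. It hashes a shard key (for example, a customer ID) and maps it to one of N shards, so the same key always lands on the same node. ShardRouter and the shard names are hypothetical; real systems such as MongoDB perform this routing internally.

```java
import java.util.List;

class ShardRouter {
    private final List<String> shards; // hypothetical shard names or connection URLs

    ShardRouter(List<String> shards) {
        if (shards.isEmpty()) {
            throw new IllegalArgumentException("at least one shard is required");
        }
        this.shards = List.copyOf(shards);
    }

    // Same shard key -> same shard, as long as the shard list does not change.
    String shardFor(String shardKey) {
        // floorMod keeps the index non-negative even when hashCode() is negative.
        int index = Math.floorMod(shardKey.hashCode(), shards.size());
        return shards.get(index);
    }

    public static void main(String[] args) {
        ShardRouter router = new ShardRouter(List.of("shard-0", "shard-1", "shard-2"));
        for (String customerId : List.of("customer-17", "customer-42", "customer-99")) {
            System.out.println(customerId + " -> " + router.shardFor(customerId));
        }
    }
}
```

Simple modulo hashing remaps most keys when the number of shards changes, which forces a large data migration. Production systems therefore typically rely on consistent hashing, or on range-based chunks that can be split and moved incrementally.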

Caching Strategies

Caching is vital for improving the performance and resilience of microservices. It stores frequently or recently used data in a separate, faster storage layer, known as a cache, so the data can be retrieved more quickly than from its primary source. When caching is incorporated correctly into the system architecture, the microservice's performance improves markedly and the load on other systems decreases.

When implementing caching:

- Identify frequently accessed data that doesn't change often; these are ideal candidates for caching.

- Use the appropriate annotations (@Cacheable, @CachePut, @CacheEvict, etc.) based on your needs.

- Choose a suitable cache provider depending on whether you need distributed capabilities (like Hazelcast) or simple local storage (like ConcurrentHashMap).

- Monitor performance after implementing caches to confirm they are effective and are not introducing extra overhead or stale-data issues.
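To illustrate what Spring's @Cacheable and @CacheEvict do behind the scenes, here is a cache-aside sketch backed by a local ConcurrentHashMap. ProductCache and its loader function are hypothetical. computeIfAbsent loads a missing entry once and reuses it afterward, and evict removes an entry after the underlying data changes.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

// Hypothetical cache-aside helper that mimics @Cacheable / @CacheEvict with a local map.
class ProductCache {
    private final Map<Long, String> cache = new ConcurrentHashMap<>();
    private final Function<Long, String> loader; // e.g. a repository lookup

    ProductCache(Function<Long, String> loader) {
        this.loader = loader;
    }

    // Like @Cacheable: return the cached value, or load it once and store it.
    String get(long id) {
        return cache.computeIfAbsent(id, loader);
    }

    // Like @CacheEvict: drop the entry after an update so readers do not see stale data.
    void evict(long id) {
        cache.remove(id);
    }

    public static void main(String[] args) {
        AtomicInteger dbHits = new AtomicInteger();
        ProductCache products = new ProductCache(id -> {
            dbHits.incrementAndGet(); // counts simulated database reads
            return "product-" + id;
        });
        products.get(1);
        products.get(1);
        products.get(2);
        System.out.println("database hits: " + dbHits.get()); // database hits: 2
        products.evict(1);
        products.get(1);
        System.out.println("database hits: " + dbHits.get()); // database hits: 3
    }
}
```

This local map has no size limit or expiry. Providers such as Caffeine, Redis, or Hazelcast add eviction policies and time-to-live settings, and a distributed provider shares entries across instances. Spring Boot falls back to a similar ConcurrentHashMap-based cache when no other provider is configured.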

Performance Optimization

Optimize your code by avoiding blocking operations and minimizing database calls. Techniques such as batching queries or using lazy loading can improve efficiency. Regularly monitor your application with tools like Spring Boot Actuator, and profile it to identify bottlenecks and optimize performance accordingly.

Steps to Identify Bottlenecks

Monitoring Performance Metrics

- Use tools like Spring Boot Actuator combined with Micrometer for collecting detailed application metrics.

- Integrate with monitoring systems such as Prometheus and Grafana for real-time analysis.

Profiling CPU and Memory Usage

- Utilize profilers like VisualVM, YourKit, or JProfiler to analyze CPU usage, memory leaks, and thread contention.

- These tools help identify methods that consume excessive resources.

Database Optimization

- Analyze database queries using tools like Hibernate statistics or database monitoring software.

- Optimize SQL queries by adding indexes, avoiding N+1 query problems, and optimizing connection pool usage.
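The N+1 problem is easiest to recognize by counting round trips. This sketch uses a hypothetical in-memory stand-in for the database; NPlusOneDemo and its finder methods are illustrative, not a JPA API. Loading orders customer by customer issues one query per customer, while a batched IN query fetches the same data in a single round trip.

```java
import java.util.Collection;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory stand-in for an orders table that counts database round trips.
class NPlusOneDemo {
    static int queries = 0;
    static final Map<Long, List<String>> ORDERS_BY_CUSTOMER =
            Map.of(1L, List.of("o1", "o2"), 2L, List.of("o3"), 3L, List.of());

    // Simulates: SELECT ... FROM orders WHERE customer_id = ?
    static List<String> findOrdersByCustomer(long customerId) {
        queries++;
        return ORDERS_BY_CUSTOMER.getOrDefault(customerId, List.of());
    }

    // Simulates: SELECT ... FROM orders WHERE customer_id IN (...)
    static Map<Long, List<String>> findOrdersByCustomers(Collection<Long> customerIds) {
        queries++;
        Map<Long, List<String>> result = new HashMap<>();
        for (Long id : customerIds) {
            result.put(id, ORDERS_BY_CUSTOMER.getOrDefault(id, List.of()));
        }
        return result;
    }

    // N+1 pattern: one query per customer (plus the query that loaded the customers).
    static int countOrdersOneByOne(List<Long> customerIds) {
        int total = 0;
        for (long id : customerIds) {
            total += findOrdersByCustomer(id).size();
        }
        return total;
    }

    // Batched pattern: a single query for all customers.
    static int countOrdersBatched(List<Long> customerIds) {
        return findOrdersByCustomers(customerIds).values().stream().mapToInt(List::size).sum();
    }

    public static void main(String[] args) {
        List<Long> customerIds = List.of(1L, 2L, 3L);
        queries = 0;
        int total = countOrdersOneByOne(customerIds);
        System.out.println("one-by-one: " + total + " orders, " + queries + " queries"); // 3 orders, 3 queries
        queries = 0;
        total = countOrdersBatched(customerIds);
        System.out.println("batched: " + total + " orders, " + queries + " queries");    // 3 orders, 1 queries
    }
}
```

In JPA and Hibernate, the usual fixes are a fetch join (JOIN FETCH), Spring Data JPA's @EntityGraph, or Hibernate's @BatchSize. Hibernate statistics will then show the reduced query count.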

Thread Dump Analysis for Thread Issues

- Use the jstack <pid> command or visual analysis tools like yCrash to debug deadlocks or blocked threads in multi-threaded applications.
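Deadlocks can also be detected programmatically through the JDK's ThreadMXBean, which reports the same kind of lock cycle a thread dump reveals. This sketch works in three steps:

1. It deliberately deadlocks two daemon threads by acquiring two locks in opposite order.
2. It calls findDeadlockedThreads().
3. It prints which thread is blocked on which lock.

DeadlockDetectionDemo and its helper methods are illustrative names.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;
import java.util.concurrent.CountDownLatch;

class DeadlockDetectionDemo {
    // Starts a daemon thread that holds 'first' and then tries to acquire 'second'.
    static void lockInOrder(Object first, Object second, CountDownLatch bothHoldFirst, String name) {
        Thread t = new Thread(() -> {
            synchronized (first) {
                bothHoldFirst.countDown();
                try {
                    bothHoldFirst.await(); // wait until the other thread holds its first lock
                } catch (InterruptedException e) {
                    return;
                }
                synchronized (second) {
                    System.out.println(name + " acquired both locks"); // never printed
                }
            }
        }, name);
        t.setDaemon(true); // daemon threads let the JVM exit despite the deadlock
        t.start();
    }

    // Creates a deadlock, then polls the JVM's detector; returns the number of deadlocked threads.
    static int createAndDetectDeadlock() {
        Object lockA = new Object();
        Object lockB = new Object();
        CountDownLatch latch = new CountDownLatch(2);
        lockInOrder(lockA, lockB, latch, "worker-1");
        lockInOrder(lockB, lockA, latch, "worker-2"); // opposite order -> lock cycle
        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        for (int attempt = 0; attempt < 50; attempt++) {
            long[] ids = threads.findDeadlockedThreads(); // null when no deadlock exists
            if (ids != null) {
                for (ThreadInfo info : threads.getThreadInfo(ids)) {
                    System.out.println(info.getThreadName() + " is blocked on " + info.getLockName()
                            + ", held by " + info.getLockOwnerName());
                }
                return ids.length;
            }
            try {
                Thread.sleep(100);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
        }
        return 0;
    }

    public static void main(String[] args) {
        System.out.println("deadlocked threads: " + createAndDetectDeadlock());
    }
}
```

The fix for this particular deadlock is to acquire the locks in one consistent order in every thread.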

Distributed Tracing (If Applicable)

- For microservices architecture, use distributed tracing tools such as Zipkin or Elastic APM to trace latency issues across services.

Common Bottleneck Scenarios

High Latency

Analyze each layer of the application (e.g., controller, service) for inefficiencies.

| Scenario | Tools/Techniques |
|---|---|
| High CPU Usage | VisualVM, YourKit |
| High Memory Usage | Eclipse MAT, VisualVM |
| Slow Database Queries | Hibernate Statistics |
| Network Latency | Distributed Tracing Tools |

Monitoring and Maintenance

Continuously monitor your application's health using tools like Prometheus and Grafana alongside Spring Boot Actuator. Monitoring helps identify performance issues early and ensures that the application remains responsive under load.

Load Balancing and Autoscaling

Use load balancers to distribute incoming traffic evenly across multiple instances of your application, so that no single instance becomes a bottleneck. Implement autoscaling, which adjusts the number of active instances based on current demand and lets your application scale dynamically.
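Autoscalers typically compare a measured metric with a target and adjust the instance count proportionally. The sketch below uses the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current * currentMetric / targetMetric), clamped to configured minimum and maximum bounds. desiredReplicas is a hypothetical helper. The real HPA also applies a tolerance and stabilization windows to avoid flapping, which are omitted here.

```java
class AutoscalerSketch {
    // desired = ceil(currentReplicas * currentMetric / targetMetric), clamped to [min, max].
    static int desiredReplicas(int currentReplicas, double currentMetric, double targetMetric,
                               int minReplicas, int maxReplicas) {
        if (targetMetric <= 0) {
            throw new IllegalArgumentException("target metric must be positive");
        }
        int desired = (int) Math.ceil(currentReplicas * (currentMetric / targetMetric));
        return Math.max(minReplicas, Math.min(maxReplicas, desired));
    }

    public static void main(String[] args) {
        // 4 instances averaging 90% CPU against a 60% target: scale out to 6.
        System.out.println(desiredReplicas(4, 90, 60, 2, 10)); // 6
        // 6 instances averaging 20% CPU: scale in, but never below the minimum of 2.
        System.out.println(desiredReplicas(6, 20, 60, 2, 10)); // 2
    }
}
```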

Handling 100 TPS in Spring Boot

1. Optimize Thread Pool Configuration

Configuring your thread pool correctly is crucial for handling high TPS. You can set the core and maximum pool sizes based on your expected load and system capabilities.
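To turn an expected load into a starting pool size, Little's Law is a useful back-of-the-envelope check. It states that the average number of requests in flight (L) equals the arrival rate (λ) times the average time each request spends in the system (W). For illustration only, assume an average request latency of 200 ms:

$$
L = \lambda W = 100\ \text{requests/s} \times 0.2\ \text{s} = 20\ \text{requests in flight}
$$

At 100 TPS and 200 ms latency, about 20 requests are being processed at any moment on average. A pool somewhat larger than that leaves headroom for bursts. Because required concurrency scales linearly with latency, a slowdown to 400 ms would double it to about 40.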

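Example configuration:

```properties
spring.task.execution.pool.core-size=20
spring.task.execution.pool.max-size=100
spring.task.execution.pool.queue-capacity=200
spring.task.execution.pool.keep-alive=120s
```

These properties configure the task executor that Spring Boot auto-configures for @Async methods and other background work. The threads that serve incoming HTTP requests are sized separately by the web server. With these values, the executor behaves as follows:

- It runs 20 core threads.
- It queues up to 200 additional tasks.
- It grows toward the 100-thread maximum only once that queue is full; beyond that, new tasks are rejected.
- Idle threads are released after 120 seconds.

How many threads a CPU core can usefully serve depends on the workload. CPU-bound tasks gain little beyond roughly one thread per core, while I/O-bound tasks that spend most of their time waiting can benefit from many more. Size the pool from your hardware and measured load rather than from a fixed ratio.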
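To see what those numbers mean at runtime, the sketch below builds a java.util.concurrent.ThreadPoolExecutor with the same values; Spring's ThreadPoolTaskExecutor wraps this class. It then fills the pool with tasks that block until released. TaskPoolSketch is a hypothetical demo class. It uses AbortPolicy, so the pool throws RejectedExecutionException once all threads are busy and the queue is full.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class TaskPoolSketch {
    // Same numbers as the properties: core 20, max 100, queue 200, keep-alive 120 s.
    static ThreadPoolExecutor newExecutor() {
        return new ThreadPoolExecutor(20, 100, 120, TimeUnit.SECONDS,
                new LinkedBlockingQueue<>(200),
                new ThreadPoolExecutor.AbortPolicy()); // reject once threads and queue are exhausted
    }

    // Submits tasks that block until released and counts how many the pool accepts.
    static int acceptedBeforeRejection() {
        ThreadPoolExecutor executor = newExecutor();
        CountDownLatch release = new CountDownLatch(1);
        int accepted = 0;
        try {
            while (true) {
                executor.execute(() -> {
                    try {
                        release.await(); // keep the worker busy until the demo ends
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
                accepted++;
            }
        } catch (RejectedExecutionException e) {
            // Reached when 100 threads are busy and 200 tasks are already queued.
        } finally {
            release.countDown(); // let all blocked tasks finish
            executor.shutdown();
        }
        return accepted;
    }

    public static void main(String[] args) {
        System.out.println("accepted before rejection: " + acceptedBeforeRejection()); // 300
    }
}
```

The pool accepts exactly max-size plus queue-capacity blocked tasks (100 + 200 = 300) before rejecting work. It starts threads beyond the 20 core threads only after the 200-slot queue is full. With a large queue, a burst therefore waits in line rather than immediately getting more threads.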

2. Use Asynchronous Processing

Implement asynchronous request handling with @Async, which offloads work to a separate thread pool, or with Spring WebFlux for non-blocking I/O. Both can improve throughput by freeing request threads while they wait for I/O operations to complete.

3. Enable Caching

Utilize caching mechanisms (e.g., Redis or EhCache) to store frequently accessed data, reducing the load on your database and improving response times.

4. Optimize Database Access

Use connection pooling (e.g., HikariCP) to manage database connections efficiently. Optimize your SQL queries and consider using indexes where appropriate.
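HikariCP's "About Pool Sizing" page cites a formula from the PostgreSQL community that gives a starting point for HikariCP's maximum pool size (spring.datasource.hikari.maximum-pool-size in Spring Boot): connections = (core_count * 2) + effective_spindle_count. Here, core_count refers to the database server's CPU cores. The helper below is a hypothetical one-liner that applies the formula. Treat the result as an initial value to validate with load testing, not as a definitive answer.

```java
class PoolSizing {
    // Starting-point heuristic: connections = (core_count * 2) + effective_spindle_count,
    // where core_count is the number of CPU cores on the database server.
    static int suggestedPoolSize(int databaseCores, int effectiveSpindles) {
        if (databaseCores < 1 || effectiveSpindles < 0) {
            throw new IllegalArgumentException("cores must be >= 1 and spindles >= 0");
        }
        return databaseCores * 2 + effectiveSpindles;
    }

    public static void main(String[] args) {
        // e.g. a 4-core database server with one effective spindle
        System.out.println("maximum-pool-size starting point: " + suggestedPoolSize(4, 1)); // 9
    }
}
```

A pool much larger than this rarely helps. Extra connections mostly compete for the same database CPU and disk, adding contention instead of throughput.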

5. Load Testing and Monitoring

Regularly perform load testing using tools like JMeter or Gatling to simulate traffic and identify bottlenecks. Monitor application performance using Spring Boot Actuator and Micrometer.

Choosing the Right Server

Choosing the right web server for a Spring Boot application to ensure scalability involves several considerations, including performance, architecture, and specific use cases. Here are key factors and options to guide your decision:

1. Apache Tomcat

- Type: Servlet container

- Use case: Ideal for traditional Spring MVC applications.

- Strengths:

- Robust and widely used with extensive community support.

- Simple configuration and ease of use.

- Well-suited for applications with a straightforward request-response model.

- Limitations:

- May face scalability issues under high loads because of its thread-per-request model: each concurrent request occupies a thread, and each thread consumes memory for its stack.

2. Netty

- Type: Asynchronous event-driven framework

- Use case: Best for applications that require high concurrency and low latency, especially those using Spring WebFlux.

- Strengths:

- Non-blocking I/O allows handling many connections with fewer threads, making it highly scalable.

- Superior performance in I/O-bound tasks and real-time applications.

- Limitations:

- More complex to configure, and requires a different programming model than traditional servlet-based applications.

3. Undertow

- Type: Lightweight web server

- Use case: Suitable for both blocking and non-blocking applications; often used in microservices architectures.

- Strengths:

- High performance with low resource consumption.

- Supports both traditional servlet APIs and reactive programming models.

- Limitations:

- Less popular than Tomcat, so fewer community resources are available.

4. Nginx (As a Reverse Proxy)

- Type: Web server and reverse proxy

- Use case: Often used in front of application servers like Tomcat or Netty for load balancing and serving static content.

- Strengths:

- Excellent at handling high loads and serving static files efficiently.

- Can distribute traffic across multiple instances of your application server, improving scalability.

Using the Right JVM Configuration

1. Heap Size Configuration

The Java heap size determines how much memory is allocated for your application. Adjusting the heap size can help manage large amounts of data and requests.

```
-Xms1g -Xmx2g
```

- -Xms sets the initial heap size (1 GB in this example).

- -Xmx sets the maximum heap size (2 GB in this example).
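To confirm which heap limits and garbage collectors a running JVM actually picked up, you can read them at runtime through standard JDK APIs. This hypothetical JvmSettingsCheck class prints three values:

- The maximum heap, which tracks -Xmx.
- The currently committed heap, which is close to -Xms right after startup.
- The names of the active garbage collectors.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.List;
import java.util.stream.Collectors;

class JvmSettingsCheck {
    private static final long MIB = 1024L * 1024L;

    // Maximum heap the JVM will try to use; tracks -Xmx.
    static long maxHeapMiB() {
        return Runtime.getRuntime().maxMemory() / MIB;
    }

    // Heap currently committed; close to -Xms right after startup.
    static long committedHeapMiB() {
        return Runtime.getRuntime().totalMemory() / MIB;
    }

    // Names of the garbage collectors active in this JVM.
    static List<String> collectorNames() {
        return ManagementFactory.getGarbageCollectorMXBeans().stream()
                .map(GarbageCollectorMXBean::getName)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println("max heap (MiB): " + maxHeapMiB());
        System.out.println("committed heap (MiB): " + committedHeapMiB());
        System.out.println("collectors: " + collectorNames());
    }
}
```

Run it with the same flags as your application, for example java -Xms1g -Xmx2g JvmSettingsCheck. Depending on the collector, the reported maximum may be slightly below the -Xmx value.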

2. Garbage Collection

Choosing the right garbage collector can improve performance. G1, the default collector since JDK 9, is usually a good choice. For low-latency requirements, you can experiment with others such as ZGC or Shenandoah; which collectors are available depends on your JDK version and vendor.

```
-XX:+UseG1GC
```

For low-latency applications, consider one of the following:

```
-XX:+UseZGC           # Z Garbage Collector
-XX:+UseShenandoahGC  # Shenandoah Garbage Collector
```

3. Thread Settings

Adjusting the number of threads can help handle concurrent requests more efficiently.

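Set the maximum number of Tomcat request-handling threads in the Spring Boot application properties. Before Spring Boot 2.3, this property was named server.tomcat.max-threads.

```properties
server.tomcat.threads.max=200
```

Adjust the JVM's thread stack size if necessary. A smaller stack reduces memory per thread but risks StackOverflowError in deeply recursive code.

```
-Xss512k
```

4. Enable JIT Compiler Options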

JIT (Just-In-Time) compilation can optimize the performance of your code during runtime.

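```
-XX:+TieredCompilation
-XX:CompileThreshold=1000
```

-XX:CompileThreshold controls how many times a method must be invoked before it is considered for compilation. Tiered compilation has been enabled by default since Java 8. HotSpot ignores CompileThreshold while tiered compilation is on, so the option only takes effect together with -XX:-TieredCompilation. Adjust it according to profiling metrics.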

Hardware Requirements

To support 100 TPS, the underlying hardware infrastructure must be robust. Key hardware considerations include:

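- High-performance servers. Use servers with powerful multi-core CPUs and ample RAM (for example, 64 GB or more for memory-intensive workloads) to handle concurrent requests effectively. Derive the exact sizing from load testing, since requirements vary widely by workload.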

- Fast storage solutions. Implement SSDs for faster read/write operations compared to traditional hard drives. This is crucial for database performance.

- Network infrastructure. Ensure high bandwidth and low latency networking equipment to facilitate rapid data transfer between clients and servers.

Conclusion

Performance optimization in Spring Boot applications is not just about tweaking code snippets; it's about creating a robust architecture that scales with growth while maintaining efficiency. By implementing caching, asynchronous processing, and scalability strategies — alongside careful JVM configuration — developers can significantly enhance their application's responsiveness under load.

Moreover, leveraging monitoring tools to identify bottlenecks allows for targeted optimizations that ensure the application remains performant as user demand increases. This holistic approach improves user experience and supports business growth by ensuring reliability and cost-effectiveness over time.


Opinions expressed by DZone contributors are their own.
