Merge Policy Internals in Solr


Last week, a colleague asked me a really simple question about segment merging in Solr. After discussing the answer for a few minutes while playing around with Solr, I realized that there are a lot of subtleties in this subject. So I started to look at the code and discovered some interesting things, which I’m going to summarize in this post.

What is a merge policy?

The process of choosing which segments are going to be merged, and how, is carried out by an abstract class named MergePolicy. This class builds a MergeSpecification, an object composed of a list of OneMerge objects. Each one of them represents a single merge operation, defined by the segments that will be merged into a new one.

After a change in the index, the IndexWriter invokes the MergePolicy to obtain a MergeSpecification. Next, it invokes the MergeScheduler, which is in charge of determining when the merges will be executed. There are mainly two implementations of MergeScheduler: the ConcurrentMergeScheduler, which runs each merge in a separate thread, and the SerialMergeScheduler, which runs all the merges sequentially in the current thread. Finally, when the time to do a merge comes, the IndexWriter does part of the job and delegates the other part to a SegmentMerger.
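To make this division of labor concrete, here is a minimal Python sketch of the objects described above. It is not Lucene’s Java API: the class names only mirror Lucene’s, the `do_merge` callback is a hypothetical stand-in for the work the IndexWriter and SegmentMerger actually do, and, for simplicity, the concurrent scheduler waits for all its threads before returning, whereas the real ConcurrentMergeScheduler lets merges run in the background.

```python
import threading
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class OneMerge:
    """One merge operation: the segments that will be combined into a new one."""
    segments: List[str]


@dataclass
class MergeSpecification:
    """What a merge policy returns: a list of OneMerge objects."""
    merges: List[OneMerge] = field(default_factory=list)


# Hypothetical stand-in for the actual merging work.
MergeFn = Callable[[OneMerge], None]


class SerialMergeScheduler:
    """Runs all the merges sequentially in the calling thread."""

    def run(self, spec: MergeSpecification, do_merge: MergeFn) -> None:
        for merge in spec.merges:
            do_merge(merge)


class ConcurrentMergeScheduler:
    """Runs each merge in a separate thread (simplified: waits for all of them)."""

    def run(self, spec: MergeSpecification, do_merge: MergeFn) -> None:
        threads = [threading.Thread(target=do_merge, args=(m,)) for m in spec.merges]
        for t in threads:
            t.start()
        for t in threads:
            t.join()


if __name__ == "__main__":
    # Hypothetical segment names, just for illustration.
    spec = MergeSpecification([OneMerge(["_0", "_1", "_2"]), OneMerge(["_3", "_4"])])
    SerialMergeScheduler().run(spec, lambda m: print("merging", m.segments))
```

The policy only decides *what* to merge; swapping the scheduler changes *when and where* the same MergeSpecification is executed.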

So, if we want to know when a set of segments is going to be merged, why one segment is being merged and another one isn’t, and similar questions, we should take a look at the MergePolicy.

There are many implementations of MergePolicy, but I’m going to focus on one of them, LogByteSizeMergePolicy, because it’s Solr’s default and I believe it’s the one most people use. MergePolicy defines three abstract methods to construct a MergeSpecification:

- findMerges is invoked whenever there is a change in the index. This is the method I’ll study in this post.

- findMergesForOptimize is invoked whenever an optimize operation is called.

- findMergesToExpungeDeletes is invoked whenever an expunge deletes operation is called.
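This contract can be sketched in Python as an abstract base class with those three hooks. It is only an illustration, not Lucene’s API: the snake_case method names are hypothetical translations of findMerges, findMergesForOptimize and findMergesToExpungeDeletes, segments are reduced to their names, and the parameters are simplified (the real Java methods receive a SegmentInfos object and, for optimize, additional arguments).

```python
from abc import ABC, abstractmethod
from typing import List, Optional

# Simplifications for this sketch: a segment is just its name, and a merge
# specification is a list of merges, each merge being a list of segment names.
Segments = List[str]
MergeSpec = List[Segments]


class MergePolicy(ABC):
    @abstractmethod
    def find_merges(self, segments: Segments) -> Optional[MergeSpec]:
        """Invoked whenever there is a change in the index."""

    @abstractmethod
    def find_merges_for_optimize(self, segments: Segments,
                                 max_segment_count: int) -> Optional[MergeSpec]:
        """Invoked when an optimize operation is called."""

    @abstractmethod
    def find_merges_to_expunge_deletes(self, segments: Segments) -> Optional[MergeSpec]:
        """Invoked when an expunge deletes operation is called."""


class NeverMergePolicy(MergePolicy):
    """Hypothetical trivial policy: never proposes any merge (None = nothing to do)."""

    def find_merges(self, segments):
        return None

    def find_merges_for_optimize(self, segments, max_segment_count):
        return None

    def find_merges_to_expunge_deletes(self, segments):
        return None
```

A concrete policy such as LogByteSizeMergePolicy fills in these hooks; the rest of this post looks only at the first one.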

Step by step

I’ll start by giving a brief, conceptual description of how the merge policy works, which you can follow by looking at the figure below:

- Sort the segments by name.

- Group the existing segments into levels. Each level is a contiguous set of segments.

- For each level, determine the interval of segments that are going to be merged.

Parameters

Let’s define a few parameters involved in the process of merging the segments that compose an index:

- mergeFactor: this parameter determines many things, such as how many segments are merged into a new one, the maximum number of segments that can be in a level, and the span of each level. It can be set in solrconfig.xml.

- minMergeSize: all segments smaller than this parameter’s value will belong to the same level. This value is fixed.

- maxMergeSize: segments larger than this parameter’s value will never be merged. This value is fixed.

- maxMergeDocs: segments containing more documents than this parameter’s value won’t be merged. It can be set in solrconfig.xml.
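A small Python sketch can hold these four parameters together with the eligibility rule they imply for a single segment. The class and field names are hypothetical. The mergeFactor default of 10 is the value used in this post’s examples, the 1.6 MiB and 2 GiB values are the fixed ones described at the end of this post, and the maxMergeDocs default (the largest 32-bit signed integer, i.e. effectively no limit) is my assumption.

```python
from dataclasses import dataclass

MIB = 1024 * 1024  # bytes in a mebibyte


@dataclass(frozen=True)
class MergeParams:
    merge_factor: int = 10              # value used in the post's examples
    min_merge_size: float = 1.6 * MIB   # bytes; fixed at 1.6 MiB per the post
    max_merge_size: float = 2048 * MIB  # bytes; fixed at 2 GiB per the post
    max_merge_docs: int = 2**31 - 1     # assumption: effectively unlimited

    def may_be_merged(self, size_bytes: float, doc_count: int) -> bool:
        """True if a segment is within both limits and can take part in a merge."""
        return size_bytes <= self.max_merge_size and doc_count <= self.max_merge_docs


if __name__ == "__main__":
    params = MergeParams(max_merge_docs=1_000_000)  # e.g. a value from solrconfig.xml
    print(params.may_be_merged(842 * 1024, 5_000))      # → True
    print(params.may_be_merged(3 * 1024 * MIB, 5_000))  # → False (bigger than 2 GiB)
```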

Constructing the levels

Let’s see how levels are constructed. To define the first level, the algorithm finds the maximum segment size. Let’s call this value levelMaxSize. If this value is less than minMergeSize, then all the segments are considered to be at the same level. Otherwise, let’s define levelMinSize as:

$$\text{levelMinSize} = \max\left(\frac{\text{levelMaxSize}}{\text{mergeFactor}^{0.75}},\ \text{minMergeSize}\right)$$

That’s quite an ugly arithmetic formula, but it means something like this: levelMinSize will be about mergeFactor^0.75 times smaller than levelMaxSize (it would be exactly mergeFactor times smaller if the exponent were 1 instead of 0.75; for mergeFactor=10, the ratio is about 5.6), but if that value goes below minMergeSize, minMergeSize is used instead.
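As a quick sanity check, the formula fits in a small Python function. The function name is hypothetical, and sizes can be in any unit as long as both size arguments use the same one. The sketch also covers the special case mentioned above: when levelMaxSize is below minMergeSize there is no lower bound, which it expresses by returning 0.

```python
def level_min_size(level_max_size: float, merge_factor: int,
                   min_merge_size: float) -> float:
    """Lower size bound of a level, as described in the post."""
    if level_max_size < min_merge_size:
        # All remaining segments are small: no lower bound, a single level.
        return 0.0
    # Divide by mergeFactor**0.75 (about 5.6 for mergeFactor=10),
    # but never go below minMergeSize.
    return max(level_max_size / merge_factor ** 0.75, min_merge_size)


if __name__ == "__main__":
    print(round(level_min_size(200, 10, 1.6), 1))  # sizes in MiB → 35.6
```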

After this calculation, the algorithm chooses which segments belong to the current level. To do this, it finds the newest segment whose size is greater than or equal to levelMinSize, and considers this segment, and all the segments older than it, to be part of this level.

The next levels are constructed using the same algorithm, but restricting the set of available segments to the ones newer than the newest segment of the previous level.
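The level construction can be sketched in a few lines of Python. This is my own simplification rather than Lucene’s code: the function name is hypothetical, it takes segment sizes ordered from oldest to newest, and it compares sizes directly, whereas Lucene, as far as I can tell, works with their logarithms in base mergeFactor, which yields the same ordering.

```python
from typing import List, Tuple


def build_levels(sizes: List[float], merge_factor: int = 10,
                 min_merge_size: float = 1.6) -> List[Tuple[int, int]]:
    """Group segments, ordered oldest to newest, into levels.

    Returns one (first, last) index pair per level, both ends inclusive.
    Sizes and min_merge_size must use the same unit (MiB by default here).
    """
    levels = []
    start = 0
    while start < len(sizes):
        level_max = max(sizes[start:])  # biggest segment not yet assigned
        if level_max < min_merge_size:
            threshold = 0.0  # everything left is small: one single level
        else:
            threshold = max(level_max / merge_factor ** 0.75, min_merge_size)
        # The newest segment at or above the threshold closes the level;
        # every older unassigned segment belongs to it as well.
        last = len(sizes) - 1
        while sizes[last] < threshold:
            last -= 1
        levels.append((start, last))
        start = last + 1  # next level: only segments newer than this one
    return levels


if __name__ == "__main__":
    # Sizes in MiB, oldest first, each segment roughly 10x smaller than the previous.
    print(build_levels([1000, 100, 10, 1, 0.5]))  # → [(0, 0), (1, 1), (2, 2), (3, 4)]
```

Note how a single large segment near the newest end pulls every older segment into the same level, which is exactly what the next example shows.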

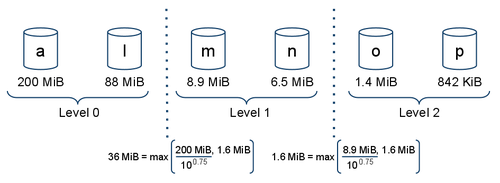

In the next figure you can see a simple example with mergeFactor=10 and minMergeSize=1.6 MiB. The intention behind using a mergeFactor of 10 is to obtain levels whose segment sizes drop by roughly an order of magnitude from one level to the next.

But in some cases, this algorithm can produce a structure of levels that you won’t expect if you don’t know the internals. Take, for example, the segments in the following table, listed from oldest to newest, assuming mergeFactor=10 and minMergeSize=1.6 MiB:

| Segment | Size |
|---|---|
| a | 200 MiB |
| l | 88 MiB |
| m | 8.9 MiB |
| n | 6.5 MiB |
| o | 1.4 MiB |
| p | 842 KiB |
| q | 842 KiB |
| r | 842 KiB |
| s | 842 KiB |
| t | 842 KiB |
| u | 842 KiB |
| v | 842 KiB |
| w | 842 KiB |
| x | 160 MiB |

How many levels are there in this case? Let’s see: the maximum segment size is 200 MiB, so levelMinSize is about 35.6 MiB (200 / 10^0.75). The newest segment with a size greater than levelMinSize is x, so the first level will include x and all the segments older than it. This means that, in this case, there’s only one level!
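The arithmetic for this example can be checked with a short Python snippet. It only reproduces the first step of the level construction (the threshold and the newest segment above it), using the sizes from the table converted to MiB; the variable names are my own.

```python
# Sizes from the table, oldest (a) to newest (x), in MiB (842 KiB = 842/1024 MiB).
names = list("almnopqrstuvwx")
sizes = [200, 88, 8.9, 6.5, 1.4] + [842 / 1024] * 8 + [160]
assert len(names) == len(sizes) == 14

merge_factor, min_merge_size = 10, 1.6

level_max_size = max(sizes)  # 200 MiB, segment a
level_min_size = max(level_max_size / merge_factor ** 0.75, min_merge_size)

# Scan from the newest segment backwards for the first one >= levelMinSize.
newest = next(i for i in range(len(sizes) - 1, -1, -1) if sizes[i] >= level_min_size)

print(f"levelMinSize = {level_min_size:.1f} MiB")       # → levelMinSize = 35.6 MiB
print("newest segment in first level:", names[newest])  # → x
print("segments in first level:", newest + 1)           # → 14
```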

Choosing which segments to merge

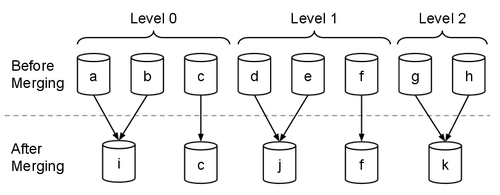

After defining the levels, the MergePolicy chooses which segments to merge. To do this, it analyzes each level separately. If a level has fewer than mergeFactor segments, it’s skipped. Otherwise, starting from the oldest segment of the level, each group of mergeFactor contiguous segments is merged into a new one; any segments left over at the end of the level, fewer than mergeFactor, are not merged. If at least one of the segments in a group is bigger than maxMergeSize, or has more than maxMergeDocs documents, then the group is skipped.
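A minimal Python sketch of this selection step follows. The function and parameter names are hypothetical; it takes the level boundaries as (first, last) index pairs, plus per-segment sizes (in MiB here) and document counts. The maxMergeDocs default is an assumption meaning "no limit", and, following the post’s wording, a segment is too large only when it is strictly bigger than a limit.

```python
from typing import List, Tuple


def select_merges(levels: List[Tuple[int, int]], sizes: List[float],
                  doc_counts: List[int], merge_factor: int = 10,
                  max_merge_size: float = 2048.0,   # MiB, i.e. 2 GiB
                  max_merge_docs: int = 2**31 - 1   # assumption: no limit
                  ) -> List[Tuple[int, int]]:
    """Return (first, last) inclusive index ranges of the segments to merge."""
    merges = []
    for first, last in levels:
        start = first
        # Non-overlapping windows of merge_factor contiguous segments, oldest first.
        # A level with fewer than merge_factor segments never enters the loop,
        # and leftover segments at the end of a level are not merged.
        while start + merge_factor - 1 <= last:
            end = start + merge_factor - 1
            too_large = any(sizes[i] > max_merge_size or doc_counts[i] > max_merge_docs
                            for i in range(start, end + 1))
            if not too_large:
                merges.append((start, end))
            start = end + 1
    return merges


if __name__ == "__main__":
    # The example table: one level covering all 14 segments (sizes in MiB).
    names = list("almnopqrstuvwx")
    sizes = [200, 88, 8.9, 6.5, 1.4] + [842 / 1024] * 8 + [160]
    docs = [1000] * len(sizes)  # hypothetical document counts
    for first, last in select_merges([(0, 13)], sizes, docs):
        print("merge", names[first:last + 1])
```

For the example table this prints a single merge covering a through t and leaves u, v, w and x alone, which matches the result table that follows.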

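Returning to the second example of the previous section, where only one level is present, the ten oldest segments (a through t) are merged into a new segment, y, while u, v, w and x are left untouched because fewer than mergeFactor segments remain after them. The resulting segments are: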

| Segment | Size |
|---|---|
| u | 842 KiB |
| v | 842 KiB |
| w | 842 KiB |
| x | 160 MiB |
| y | 311 MiB |

minMergeSize and maxMergeSize

Throughout this post, I’ve talked about these two parameters, which are involved in the merging process. It’s worth noting that their values are hard-coded in Lucene’s source code. They are the following:

- minMergeSize has a fixed value of 1.6 MiB. This means that any segment smaller than 1.6 MiB will be included in the last level.

- maxMergeSize has a fixed value of 2 GiB. This means that any segment larger than 2 GiB will never be merged.

Conclusion

While the algorithm itself isn’t extremely complex, you need to understand the internals if you want to predict what will happen to your index as more documents are added. Also, knowing how the merge policy works will help you choose values for parameters like mergeFactor and maxMergeDocs.

Published at DZone with permission of Juan Grande, DZone MVB. See the original article here.
