NewRelic Logs With Logback on Azure Cloud

An example of a Logback appender, built with Logstash Logback Encoder, that sends logs from Azure-managed services to the NewRelic Log API.

Those who work with NewRelic logs for Java applications probably know that using an agent is perhaps the most flexible and convenient way to collect log data and gather application metrics such as CPU and memory usage and execution time. However, to run a Java application with the agent, we need access to the application's execution unit (like a jar or Docker image) and control over the execution runtime (e.g., an image with a JVM on Kubernetes). With such access, we can set Java command-line arguments, including the necessary agent configuration.

However, Azure Cloud-managed services, such as Azure Data Factory (ADF) or Logic Apps, have neither "tangible" executable artifacts nor execution environments. Such services are fully managed, and their execution runtimes are virtual. For these services, Azure suggests using Application Insights and Azure Monitor to collect logs, metrics, and events and to create alerts. But if your company has a separate solution for centralized log collection, like NewRelic, integrating managed services with an external logging framework can be a challenge.

In this article, I will show how to send logs to NewRelic from Azure-managed services.

Solution

NewRelic provides a rather simple Log API, which imposes few restrictions and can be used by any registered user authenticated with a NewRelic API key. The only mandatory field is message, and some fields are expected in a predefined format; for example, the timestamp must be given as milliseconds or seconds since the epoch, or as an ISO8601-formatted string. Messages are accepted in JSON format.
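As a rough illustration of the contract described above, the following sketch builds a one-entry JSON payload and prepares an HTTP request using the JDK's built-in `java.net.http` API. The class and method names are made up for this example. The `Api-Key` header name and the US endpoint are taken from NewRelic's public Log API documentation and should be checked against its current version. The request is only built here, never sent.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.time.Instant;

public class LogApiRequestSketch {

    // Escapes the characters that must not appear raw inside a JSON string.
    static String escapeJson(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"' -> sb.append("\\\"");
                case '\\' -> sb.append("\\\\");
                case '\n' -> sb.append("\\n");
                case '\r' -> sb.append("\\r");
                case '\t' -> sb.append("\\t");
                default -> {
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
                }
            }
        }
        return sb.toString();
    }

    // Builds a minimal log entry: "message" is the mandatory field,
    // "timestamp" is written as milliseconds since the epoch.
    static String toJson(String message, Instant timestamp) {
        return "{\"timestamp\":" + timestamp.toEpochMilli()
                + ",\"message\":\"" + escapeJson(message) + "\"}";
    }

    // Prepares (but does not send) a POST request to the Log API.
    // The "Api-Key" header name is an assumption based on NewRelic's documentation.
    static HttpRequest buildRequest(String endpoint, String apiKey, String json) {
        return HttpRequest.newBuilder()
                .uri(URI.create(endpoint))
                .header("Content-Type", "application/json")
                .header("Api-Key", apiKey)
                .POST(HttpRequest.BodyPublishers.ofString(json))
                .build();
    }

    public static void main(String[] args) {
        String json = toJson("User [555] logged in", Instant.now());
        HttpRequest request = buildRequest(
                "https://log-api.newrelic.com/log/v1", "set-your-valid-api-key", json);
        System.out.println(request.method() + " " + request.uri());
        System.out.println(json);
    }
}
```

Passing the request to `HttpClient.send` would perform the actual call; it is left out so the sketch runs without network access or a real key.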

Many Azure-managed services can send messages over HTTP. For instance, ADF provides an HTTP connector. However, if every service calls the Log API directly, it becomes troublesome to maintain consistent logging with the same log message structure, the same configuration, and a shared API key.

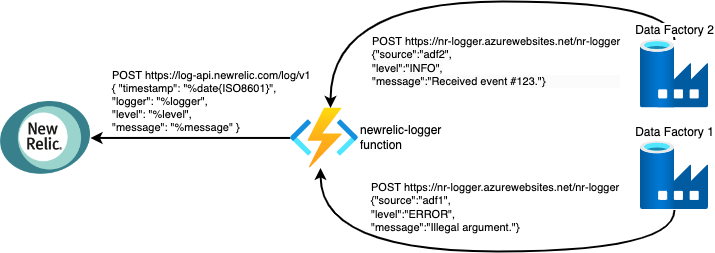

It is much more convenient to have a single service that is responsible for collecting messages from other Azure services and for communicating with NewRelic. All messages then go through this service, where they are transformed and sent to NewRelic's Log API.

A good candidate for such a logging service is an Azure Function with an HTTP trigger. An Azure Function can execute code to receive, validate, transform, and send log messages to NewRelic, and it becomes the single place where the NewRelic API key is used.

Implementation

Depending on the log structure, a logger function may expect different message formats. Azure Functions can process a JSON payload and automatically deserialize it into an object, which helps to maintain a unified log format. The definition of the function's HTTP trigger would look like this:

```java
public HttpResponseMessage log(
        @HttpTrigger(name = "req",
                methods = {HttpMethod.POST},
                authLevel = AuthorizationLevel.ANONYMOUS)
        HttpRequestMessage<Optional<LogEvent>> request,
        ExecutionContext context) {...}
```

Here, LogEvent is a POJO class that is used to convert JSON strings into Java objects.
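The article does not show `LogEvent` itself. A minimal sketch of what it might look like is given below, assuming a Java record with the two fields the logger function reads (`level` and `message`). The record form and the normalization in the compact constructor are assumptions made for illustration; whether that constructor runs during deserialization depends on how the runtime's JSON binding instantiates the type.

```java
import java.util.Locale;

public class LogEventSketch {

    // Hypothetical shape of the incoming payload: {"level": "INFO", "message": "..."}
    record LogEvent(String level, String message) {
        // Compact constructor: normalizes the level so that " warn " becomes "WARN"
        // and replaces missing values with empty strings.
        LogEvent {
            level = level == null ? "" : level.trim().toUpperCase(Locale.ROOT);
            message = message == null ? "" : message;
        }
    }

    public static void main(String[] args) {
        LogEvent event = new LogEvent(" warn ", "Pipeline finished with warnings");
        System.out.println(event.level() + ": " + event.message());
    }
}
```

A record provides the `level()` and `message()` accessors without any boilerplate; a conventional class with getters would serve the same purpose.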

Implementation complexity can be reduced by splitting the functionality into two parts: a "low-level" protocol component and the function itself, which receives and logs input events. This is a separation of concerns: the function does its main job, the logging, while a separate component implements the NewRelic Log API.

Logging in the function can be delegated to Logback or Log4j through Slf4j, so the process looks like conventional logging. The code needs no logging dependency other than the familiar Slf4j API:

```java
import java.util.Optional;

import com.microsoft.azure.functions.ExecutionContext;
import com.microsoft.azure.functions.HttpMethod;
import com.microsoft.azure.functions.HttpRequestMessage;
import com.microsoft.azure.functions.HttpResponseMessage;
import com.microsoft.azure.functions.HttpStatus;
import com.microsoft.azure.functions.annotation.AuthorizationLevel;
import com.microsoft.azure.functions.annotation.FunctionName;
import com.microsoft.azure.functions.annotation.HttpTrigger;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.slf4j.event.Level;

public class NewRelicLogger {

    private static final Logger LOG =
            LoggerFactory.getLogger("newrelic-logger-function");

    @FunctionName("newrelic-logger")
    public HttpResponseMessage log(
            @HttpTrigger(name = "req",
                    methods = {HttpMethod.POST},
                    authLevel = AuthorizationLevel.ANONYMOUS)
            HttpRequestMessage<Optional<LogEvent>> request,
            ExecutionContext context) {
        // input parameter checks are skipped for simplicity
        var body = request.getBody().get();
        var level = body.level();
        var message = body.message();
        switch (level) {
            // known levels are logged at the requested level
            case "TRACE", "DEBUG", "INFO", "WARN", "ERROR" ->
                    LOG.atLevel(Level.valueOf(level)).log(message);
            // anything else falls back to INFO
            default ->
                    LOG.info("Unknown level [{}]. Message: [{}]", level, message);
        }
        return request
                .createResponseBuilder(HttpStatus.OK)
                .body("")
                .build();
    }
}
```

The second part of the implementation is a custom appender that Logback uses to send log messages to the NewRelic Log API. Logstash Logback Encoder is well suited to generating JSON strings within a Logback appender. With it, we only need to extend the abstract AppenderBase class, and LoggingEventCompositeJsonLayout takes care of generating the JSON. The layout is highly configurable, so a message can be given any structure. A skeleton of an AppenderBase implementation with the mandatory fields can look like this:

```java
import ch.qos.logback.classic.spi.ILoggingEvent;
import ch.qos.logback.core.AppenderBase;
import ch.qos.logback.core.Layout;

public class NewRelicAppender extends AppenderBase<ILoggingEvent> {

    private Layout<ILoggingEvent> layout;
    private String url;
    private String apiKey;

    // setters and getters are skipped

    @Override
    public void start() {
        if (!validateSettings()) { // check mandatory fields
            return;
        }
        super.start();
    }

    @Override
    protected void append(ILoggingEvent loggingEvent) {
        // the layout generates the JSON message
        var event = layout.doLayout(loggingEvent);
        // the message size needs to be checked, as NewRelic allows messages < 1 MB;
        // NewRelic also accepts messages compressed with GZip
        var payload = compressIfNeeded(event);
        // send the message to NewRelic with some HTTP client
        sendPayload(payload);
    }

    // compression and message sending methods are skipped
}
```

The appender can be added to the Azure function project and built and packaged together with it. A better approach, however, is to build the appender separately, so that other projects can reuse it by adding it as a dependency rather than copy-pasting the code.
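The compression step that the skeleton leaves out could look roughly like the sketch below. It assumes a hypothetical size threshold and a small `Payload` holder, neither of which comes from the actual appender, and uses only the JDK's `java.util.zip` classes. A caller would be expected to add a `Content-Encoding: gzip` header whenever the payload comes back compressed.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

public class PayloadCompressionSketch {

    // Hypothetical holder: the bytes to send and whether they are gzip-compressed.
    record Payload(byte[] body, boolean gzipped) {}

    // Compresses the JSON only when its UTF-8 size exceeds the given threshold.
    static Payload compressIfNeeded(String json, int thresholdBytes) {
        byte[] raw = json.getBytes(StandardCharsets.UTF_8);
        if (raw.length <= thresholdBytes) {
            return new Payload(raw, false);
        }
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        // closing the stream writes the gzip trailer, so read the buffer afterwards
        try (GZIPOutputStream gzip = new GZIPOutputStream(buffer)) {
            gzip.write(raw);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new Payload(buffer.toByteArray(), true);
    }

    // Helper used only to show that the compressed bytes round-trip correctly.
    static String gunzip(byte[] data) {
        try (GZIPInputStream in = new GZIPInputStream(new ByteArrayInputStream(data))) {
            return new String(in.readAllBytes(), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        String json = "{\"message\":\"" + "x".repeat(5000) + "\"}";
        Payload payload = compressIfNeeded(json, 1024); // 1024 is an arbitrary threshold
        System.out.println("gzipped=" + payload.gzipped() + ", bytes=" + payload.body().length);
        System.out.println("round trip ok: " + gunzip(payload.body()).equals(json));
    }
}
```

A real appender would also need to decide what to do when even the compressed payload exceeds the size limit, for example by truncating or splitting the message.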

An example of such an appender can be found in the NewRelic Logback Appender repository. The next section shows how to use the appender with the logging function.

Usage

If the NewRelic appender is built as a separate artifact, it needs to be added to a project as a dependency. In our case, the project is the Azure logging function, which uses the Logback framework for logging. The Maven dependencies look like this:

```xml
<dependencies>
    <dependency>
        <groupId>ch.qos.logback</groupId>
        <artifactId>logback-classic</artifactId>
        <version>1.4.6</version>
    </dependency>
    <dependency>
        <groupId>ua.rapp</groupId>
        <artifactId>nr-logback-appender</artifactId>
        <version>0.1.0</version>
        <scope>runtime</scope>
    </dependency>
</dependencies>
```

The appender uses LoggingEventCompositeJsonLayout, which is documented in the Composite Encoder/Layout section of the Logstash Logback Encoder documentation. The layout is configured with the mandatory message field and the common attributes timestamp, level, and logger. Custom attributes can be added to the resulting JSON via Slf4j MDC.

The appender also requires the following properties to post log messages to NewRelic:

| Property name | Description |
|---|---|
| apiKey | Valid API key, sent as an HTTP header. Register a NewRelic account or use an existing one. |
| host | NewRelic address, like https://log-api.eu.newrelic.com for Europe. See Introduction to the Log API for details. |
| url | URL path to the API, like /log/v1. |
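To illustrate how these properties fit together, here is a minimal sketch of a settings check and of combining host and url into a single endpoint. The article does not show how the real appender validates or joins these values, so the class, the method names, and the slash handling below are assumptions.

```java
import java.net.URI;

public class AppenderSettingsSketch {

    static boolean isBlank(String s) {
        return s == null || s.isBlank();
    }

    // All three properties from the table are treated as mandatory.
    static boolean validateSettings(String host, String url, String apiKey) {
        return !isBlank(host) && !isBlank(url) && !isBlank(apiKey);
    }

    // Joins host and path with exactly one slash between them.
    static URI endpoint(String host, String url) {
        String base = host.endsWith("/") ? host.substring(0, host.length() - 1) : host;
        String path = url.startsWith("/") ? url : "/" + url;
        return URI.create(base + path);
    }

    public static void main(String[] args) {
        String host = "https://log-api.eu.newrelic.com";
        String url = "/log/v1";
        if (validateSettings(host, url, "set-your-valid-api-key")) {
            System.out.println(endpoint(host, url));
        }
    }
}
```

Failing fast on a missing property in start() keeps a misconfigured appender from silently dropping every log message later.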

Here is a full example of the appender's configuration used by the NewRelic logger function:

```xml
<?xml version="1.0" encoding="UTF-8" ?>
<configuration>
    <appender name="NEWRELIC" class="ua.rapp.logback.appenders.NewRelicAppender">
        <host>https://log-api.newrelic.com</host>
        <url>/log/v1</url>
        <apiKey>set-your-valid-api-key</apiKey>
        <layout class="net.logstash.logback.layout.LoggingEventCompositeJsonLayout">
            <providers>
                <timestamp/>
                <version/>
                <uuid/>
                <pattern>
                    <pattern>
                        {
                        "timestamp": "%date{ISO8601}",
                        "logger": "%logger",
                        "level": "%level",
                        "message": "%message"
                        }
                    </pattern>
                </pattern>
                <mdc/>
                <tags/>
            </providers>
        </layout>
    </appender>
    <root level="TRACE">
        <appender-ref ref="NEWRELIC"/>
    </root>
</configuration>
```

With the Slf4j Logger and MDC, the logger function logs a message like this:

```java
private static final Logger LOG = LoggerFactory.getLogger("newrelic-logger-function");
// ...
MDC.put("invocationId", UUID.randomUUID().toString());
MDC.put("app-name", "console");
MDC.put("errorMessage", "Missed ID attribute!");
LOG.error("User [555] logged in failed.");
```

This results in the following JSON log record:

```json
{
  "@timestamp": "2023-04-22T14:09:07.2011033Z",
  "@version": "1",
  "app-name": "console",
  "errorMessage": "Missed ID attribute!",
  "invocationId": "0d16c981-d342-43f1-8b89-4e3e3fde8974",
  "level": "ERROR",
  "logger": "newrelic-logger-function",
  "message": "User [555] logged in failed.",
  "newrelic.source": "api.logs",
  "timestamp": 1682172547201,
  "uuid": "24212215-c075-4644-a122-15c075f6447f"
}
```

Here, the attributes @timestamp, @version, and uuid are added by the Logstash Logback layout providers <timestamp/>, <version/>, and <uuid/>. The attributes app-name, invocationId, and errorMessage are added by the <mdc/> provider.

Conclusion

This article described how to create, without much effort, a custom Logback appender that an Azure function can use to send log messages to NewRelic. The Azure function, with its HTTP trigger, runs inside Azure Cloud and provides an API for handling logs from managed cloud services without NewRelic's agent.
