Node.js Performance Showdown

Let's pit different frameworks against each other and see who comes out on top.

Earlier this year I followed up on a post comparing the performance of a variety of Node.js web frameworks. With numerous updates released for these frameworks, and for Node.js itself (currently v7.2.1), we thought it was time for another Node.js performance showdown.

This time I’m adding another environment to the Node.js performance test. In addition to testing within VirtualBox running Debian, I’m also testing on a VM (provisioned via Digital Ocean) running Ubuntu. These two environments should give a better idea of how these frameworks perform when they have different resources available to them.

I’ve also included an additional framework, total.js, in these tests, as requested on the previous post.

The environment specifications and software versions are:

- Ubuntu 16.04.1 x64 – Hosted on Digital Ocean 2 GB RAM option

- i7 4790 (3.6GHz) Workstation – Windows 10 running Debian 8.5 in VirtualBox

The versions of each framework are:

- Runtime: Node.js v7.2.1

- Node.js v7.2.1 built-in http library (labelled “nodejs” in the tests)

- hapi – 16.0.1

- express – 4.14.0

- koa – 1.2.4

- restify – 4.3.0

- total.js – 2.3.0

The Node.js Performance Test

Similar to the last test (detailed in this article here), ApacheBench was used to fire requests against the endpoint each framework was configured to listen on.

Each request was simply a GET request to the endpoint with no query string parameters. Each framework was configured to return “Hello World!”.
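As a rough illustration of what the baseline “nodejs” case might look like, here is a minimal sketch using only Node's built-in http module. The function names and the port in the usage comment are assumptions for illustration; the actual servers are in the repository linked from this article and may differ.

```javascript
// Minimal sketch of a framework-free "Hello World!" endpoint.
// handleRequest and createHelloServer are illustrative names, not the author's.
const http = require('http');

// Answer every request with the same plain-text body.
function handleRequest(req, res) {
  res.writeHead(200, { 'Content-Type': 'text/plain' });
  res.end('Hello World!');
}

// Build the server without listening, so the caller chooses the port.
function createHelloServer() {
  return http.createServer(handleRequest);
}

// Usage (port 3000 is an assumption):
// createHelloServer().listen(3000);
```

Each framework under test would expose an equivalent route, so the measured difference is mostly the overhead the framework adds around this handler.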

ApacheBench was configured to send 50,000 requests at a concurrency level of 100.

The entire test was scripted so that each server was started and allowed five seconds to stabilize before ab was run against it. After each test completed, a further five seconds was allowed before the next framework was tested.
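The procedure above could be scripted roughly as follows. This is a sketch under assumptions, not the author's script: the helper names, the server script path, and the URL are hypothetical, and it assumes the `ab` binary is on the PATH. The `-n` (total requests) and `-c` (concurrency) flags are standard ApacheBench options.

```javascript
const { spawn, execFile } = require('child_process');

// Build ApacheBench arguments: -n is total requests, -c is concurrency.
function buildAbArgs(url, requests = 50000, concurrency = 100) {
  return ['-n', String(requests), '-c', String(concurrency), url];
}

// Promise-based delay used for the settle periods.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Run ab against the URL and resolve with its text report.
function runAb(url) {
  return new Promise((resolve, reject) => {
    execFile('ab', buildAbArgs(url), { maxBuffer: 10 * 1024 * 1024 },
      (err, stdout) => (err ? reject(err) : resolve(stdout)));
  });
}

// Start one framework's server, wait, benchmark it, stop it, wait again.
async function benchmarkOne(serverScript, url, settleMs = 5000) {
  const server = spawn(process.execPath, [serverScript], { stdio: 'ignore' });
  try {
    await sleep(settleMs); // let the server stabilize
    return await runAb(url);
  } finally {
    server.kill();
    await sleep(settleMs); // pause before the next framework
  }
}

// Usage (script path and URL are assumptions; ab needs a path, hence the trailing slash):
// benchmarkOne('./servers/express.js', 'http://localhost:3000/').then(console.log);
```

Running each server in its own child process keeps one framework's state from leaking into the next measurement.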

The entire test script was repeated five times in each environment and the results for each framework/environment combination were averaged.
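One way to do the averaging is to pull the “Requests per second” figure out of each ab report and take the mean across runs. The sketch below assumes ab's usual text output, which includes a line beginning `Requests per second:`; the function names are illustrative and not taken from the original script.

```javascript
// Extract the "Requests per second" figure from ab's text report.
function parseRequestsPerSecond(abOutput) {
  const match = /Requests per second:\s+([\d.]+)/.exec(abOutput);
  if (!match) {
    throw new Error('"Requests per second" line not found in ab output');
  }
  return parseFloat(match[1]);
}

// Arithmetic mean of the per-run figures.
function mean(values) {
  if (values.length === 0) {
    throw new Error('no values to average');
  }
  return values.reduce((sum, v) => sum + v, 0) / values.length;
}

// Usage: given the five ab reports for one framework/environment pair,
// const average = mean(reports.map(parseRequestsPerSecond));
```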

The entire script can be found in the GitHub repository here.

Results

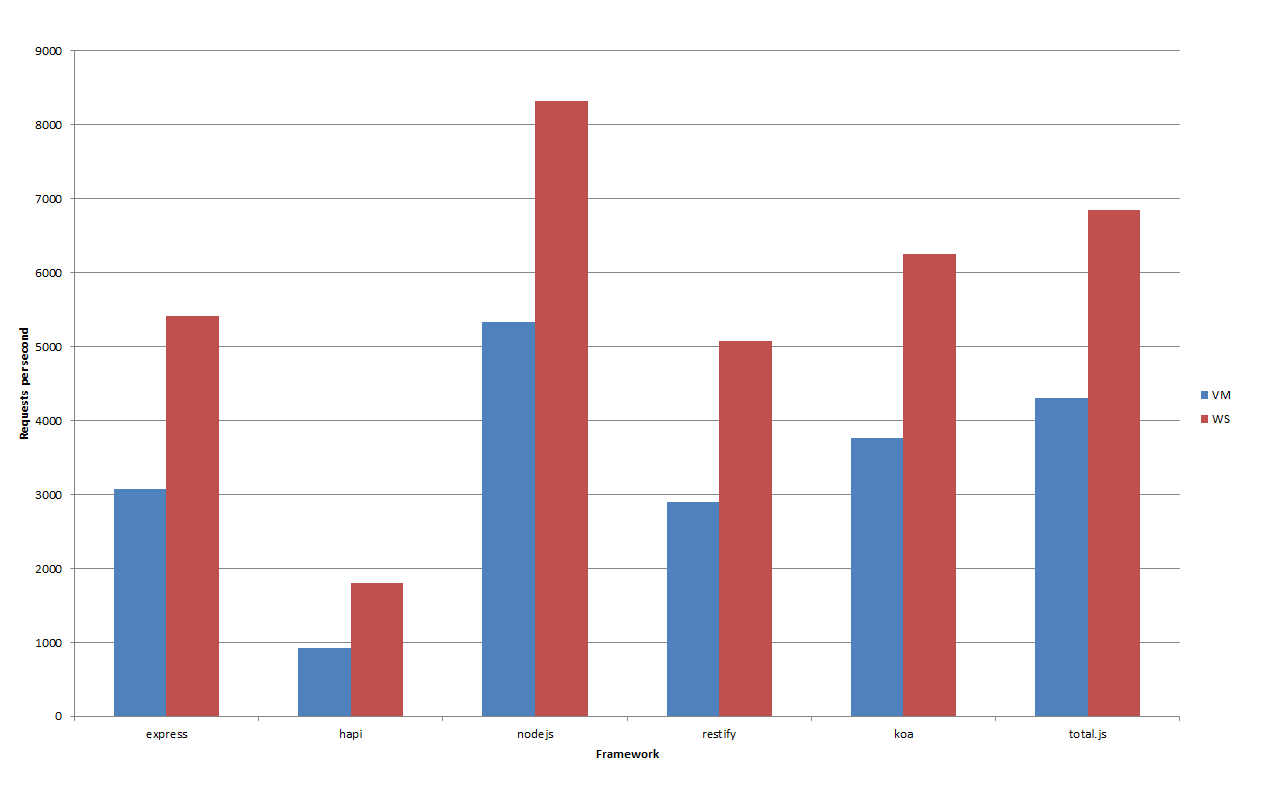

Our results show the number of requests per second on both the virtual machine provisioned from Digital Ocean (“VM”) and my workstation running Debian in VirtualBox (“WS”).

Environment

In each case, the tests ran faster on the workstation, as expected. This is likely because the VM has little or no dedicated hardware provisioned for it. Regardless, we learned a bit about the capabilities of the frameworks when they have less headroom. Hapi ran almost 2x faster on the higher-powered machine, whereas the native Node.js HTTP library ran only 1.55x faster. This may be because, at higher request counts, more pressure is put on the network layer itself than on the framework.
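For clarity, the “2x” and “1.55x” figures are ratios of workstation throughput to VM throughput for the same framework. A small sketch of that calculation, with an illustrative function name and placeholder inputs that are not the measured results:

```javascript
// Ratio of workstation (WS) throughput to VM throughput for one framework.
function speedup(wsReqPerSec, vmReqPerSec) {
  if (vmReqPerSec <= 0) {
    throw new Error('VM throughput must be positive');
  }
  return wsReqPerSec / vmReqPerSec;
}

// Placeholder numbers only, NOT the measured results:
// a framework serving 200 req/s on WS and 100 req/s on the VM has a 2x speedup.
const exampleSpeedup = speedup(200, 100);
```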

History

Relative to previous tests, all frameworks performed marginally better than they used to. (This comparison is based on the results from the Debian VM running on the workstation, which was used for the previous tests.) However, relative to one another, the frameworks performed very similarly to the historic results.

In Absolute Terms

Overall the results were largely as expected, apart from the newcomer, for which I had no previous data to base a judgment on. As it turned out, total.js was the fastest of the frameworks tested, only 15% slower than the raw Node.js HTTP library. The other frameworks, koa, restify, and express, all performed similarly, and hapi performed the worst.
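A figure such as “15% slower” expresses a framework's throughput shortfall as a percentage of the baseline's throughput. A minimal sketch of that arithmetic, using an illustrative function name and placeholder inputs rather than the measured numbers:

```javascript
// Percentage by which a framework's throughput falls short of the baseline.
function percentSlower(baselineReqPerSec, frameworkReqPerSec) {
  if (baselineReqPerSec <= 0) {
    throw new Error('baseline throughput must be positive');
  }
  return ((baselineReqPerSec - frameworkReqPerSec) / baselineReqPerSec) * 100;
}

// Placeholder numbers only, NOT the measured results:
// 85 req/s against a 100 req/s baseline is 15% slower.
const exampleSlowdown = percentSlower(100, 85);
```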

Conclusion

In conclusion, total.js seems to be the most performant framework out of the box when it comes to simple request/response capacity.

Motivation for the Node.js Performance Test

The reason we were initially interested in testing the capability of Node.js frameworks was for use within the data ingestion layer of our application, Raygun. We receive data from a huge number of sources each day, from web servers to users browsing websites, so ensuring that these requests are responded to and terminated quickly, and that the payload is persisted reliably to our processing services, is paramount. We still use express in our ingestion layer, though in the future we may switch to a different language entirely. If and when this happens, it’s likely we’ll follow up with an analysis that expands to include other languages.

Published at DZone with permission of Alex Ogier, DZone MVB. See the original article here.
