Optimize AWS Solution Architecture for Performance Efficiency

Follow best practices for designing and operating reliable, secure, efficient, and cost-effective cloud solutions and applications that offer high performance.

Amazon Web Services (AWS) offers various resources and services to help you build SaaS and PaaS solutions. The challenge is to achieve and maintain performance efficiency, which plays an important part in delivering business value. This article highlights some of the best practices for designing and operating reliable, secure, efficient, and cost-effective cloud applications that offer performance efficiency. There are two primary areas to focus on:

- Select and Configure cloud resources for higher performance

- Review and Monitor Performance

Select and Configure Cloud Resources for Higher Performance

Compute

In AWS, compute is available in three forms: instances, containers, and functions. You can choose the form of compute for each component in your architecture based on its workload. When architecting your use of compute, take advantage of the AWS elastic compute options in the following ways:

- Select the optimal instance families and types so that the compute, memory, network, and storage capabilities and their configuration best match your workload needs

- Optimize operating system settings such as volume management, RAID, and block sizes

- When building containers with Amazon ECS, use data to select the optimal type for your workload, and consider container configuration options such as memory, CPU, and tenancy
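The first guideline above, matching an instance type to workload needs, can be sketched as a simple filter-and-pick step. This is only an illustration: the `InstanceType` class, the `CATALOG` entries, their names, and their prices are hypothetical placeholders, not real AWS instance types or quotes.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: float
    hourly_cost: float  # illustrative price, not a real AWS quote


# Hypothetical catalog: the names and numbers are placeholders, not AWS data.
CATALOG = [
    InstanceType("general.large", 2, 8.0, 0.10),
    InstanceType("compute.xlarge", 4, 8.0, 0.17),
    InstanceType("memory.large", 2, 16.0, 0.13),
    InstanceType("memory.xlarge", 4, 32.0, 0.25),
]


def cheapest_fit(vcpus: int, memory_gib: float,
                 catalog=CATALOG) -> Optional[InstanceType]:
    """Return the lowest-cost instance type that meets both requirements."""
    candidates = [i for i in catalog
                  if i.vcpus >= vcpus and i.memory_gib >= memory_gib]
    return min(candidates, key=lambda i: i.hourly_cost, default=None)


if __name__ == "__main__":
    print(cheapest_fit(vcpus=2, memory_gib=12))  # memory-bound workload
    print(cheapest_fit(vcpus=4, memory_gib=4))   # CPU-bound workload
```

In practice the requirements would come from measured utilization rather than guesses, and network and storage capabilities would be additional filter criteria.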

Key AWS Compute Services to achieve performance:

- AWS Lambda: To deliver optimal performance with AWS Lambda, choose the amount of memory for your function; CPU power is allocated in proportion to memory. For example, choosing 256 MB of memory allocates approximately twice as much CPU power to your Lambda function as requesting 128 MB. You can also control how long each function is allowed to run (up to a maximum of 900 seconds)

- Amazon EC2 Spot Instances: Use Spot Instances to generate load at low cost and discover bottlenecks before they are experienced in production
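The Lambda memory example above can be turned into a rough estimate. The sketch below assumes that CPU share scales linearly with memory and that the function is purely CPU-bound, so its duration shrinks in inverse proportion. Both are simplifying assumptions, and `relative_cpu` and `estimate` are illustrative helpers, not part of any AWS SDK.

```python
def relative_cpu(memory_mb: int, baseline_mb: int = 128) -> float:
    """CPU share relative to the baseline, assuming linear scaling with memory."""
    return memory_mb / baseline_mb


def estimate(memory_mb: int, baseline_seconds: float, baseline_mb: int = 128):
    """Estimate duration and GB-seconds for a purely CPU-bound function.

    Assumption: duration shrinks in inverse proportion to the CPU share.
    Functions that mostly wait on I/O will see smaller gains.
    """
    duration = baseline_seconds / relative_cpu(memory_mb, baseline_mb)
    gb_seconds = (memory_mb / 1024) * duration
    return duration, gb_seconds


if __name__ == "__main__":
    for mb in (128, 256, 512, 1024):
        duration, gb_s = estimate(mb, baseline_seconds=8.0)
        print(f"{mb:>5} MB  ~{duration:.2f} s  ~{gb_s:.2f} GB-s")
```

Under these assumptions the GB-seconds stay constant while the duration drops, which is why it is worth measuring a function at several memory settings before settling on one.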

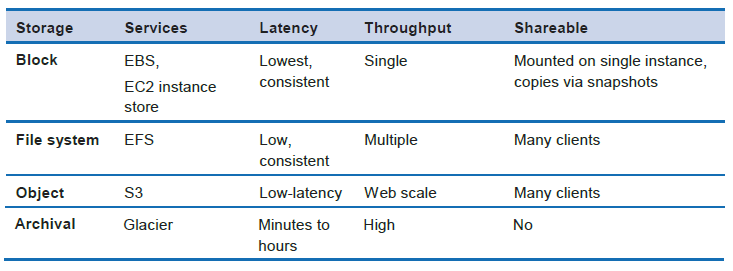

Storage

AWS offers block, file, object, and archival storage solutions. Choose among them by considering characteristics such as the ability to share, file size, cache size, latency requirements, expected throughput, and persistence of data.

You can choose the AWS service that best fits your needs from Amazon S3, Amazon Glacier, Amazon Elastic Block Store (Amazon EBS), Amazon Elastic File System (Amazon EFS), or Amazon EC2 instance store. Here is a summary of AWS storage services and their characteristics.

Databases

In AWS, there are four approaches to address database requirements: relational Online Transaction Processing (OLTP), non-relational databases (NoSQL), data warehouse and Online Analytical Processing (OLAP), and data indexing and searching. While managing your data, consider the following guidelines.

- Select the database solution that aligns best with your access patterns, or consider changing your access patterns (for example, indexes, key distribution, partitioning, or horizontal scaling) to align with the storage solution and maximize performance

- Identify different characteristics (for example, availability, consistency, partition tolerance, latency, durability, scalability, and query capability) you may require for your solution so that you can select the appropriate database and storage technologies such as relational, NoSQL, or warehouse

- Consider the configuration options for your selected database approach such as storage optimization, database-level settings, memory, and cache
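Key distribution, mentioned in the first guideline above, is easy to check before committing to a key design. The sketch below hashes keys onto a fixed number of partitions and reports how far the busiest partition is from an even share. The hash-modulo scheme and the `partition_for` and `skew` helpers are illustrative assumptions, not how any particular AWS database assigns partitions.

```python
import hashlib
from collections import Counter


def partition_for(key: str, partitions: int) -> int:
    """Map a key to a partition with a stable hash (used only for spreading)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partitions


def skew(keys, partitions: int) -> float:
    """Ratio of the busiest partition's load to an even share (1.0 = even)."""
    counts = Counter(partition_for(k, partitions) for k in keys)
    ideal = len(keys) / partitions
    return max(counts.values()) / ideal


if __name__ == "__main__":
    hot = ["tenant-1"] * 1000                              # one hot key
    spread = [f"tenant-1#order-{i}" for i in range(1000)]  # composite keys
    print("hot key skew:", skew(hot, 4))
    print("composite key skew:", round(skew(spread, 4), 2))
```

A single hot key sends every request to one partition, while a composite key spreads the same traffic much more evenly.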

Key AWS Database Services to achieve performance:

- Amazon RDS: Consider using read replicas (availability depends on the database engine and edition) to scale out read performance, along with SSD-backed storage options and provisioned IOPS. Read replicas allow read-heavy workloads to use multiple servers instead of overloading a central database

- Amazon DynamoDB and DynamoDB Accelerator (DAX): DynamoDB supports throughput auto scaling, and DAX adds a read-through/write-through distributed caching tier in front of the database, providing sub-millisecond latency for entities that are in the cache

- Amazon Redshift: Use Amazon Redshift when you need SQL operations that will scale. Amazon Redshift will provide the best performance if you specify sort keys, distribution keys, and column encodings as these can significantly improve storage, I/O, and query performance

- Amazon Athena: It offers a fully managed Presto service that can run queries on data lakes holding large amounts of less structured data

- Amazon ElastiCache: It works as an in-memory data store and cache to support the most demanding applications requiring sub-millisecond response times, high throughput and low latency
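The read-through/write-through pattern mentioned for the caching services above can be sketched in a few lines. This is a conceptual model only: a plain dict stands in for the database, the `ReadWriteThroughCache` class is hypothetical, and it omits eviction, TTLs, and distribution, which real caching tiers provide.

```python
class ReadWriteThroughCache:
    """In-memory cache in front of a slower store (here a plain dict)."""

    def __init__(self, store: dict):
        self.store = store  # stand-in for the database
        self.cache = {}
        self.hits = 0
        self.misses = 0

    def get(self, key):
        # Read-through: serve from the cache, otherwise load from the store.
        if key in self.cache:
            self.hits += 1
            return self.cache[key]
        self.misses += 1
        value = self.store[key]  # raises KeyError if the item does not exist
        self.cache[key] = value
        return value

    def put(self, key, value):
        # Write-through: update the store first, then the cache, so they agree.
        self.store[key] = value
        self.cache[key] = value


if __name__ == "__main__":
    db = {"user:1": "Ada"}
    cache = ReadWriteThroughCache(db)
    cache.get("user:1")          # miss, loaded from the store
    cache.get("user:1")          # hit
    cache.put("user:2", "Lin")   # written to both store and cache
    cache.get("user:2")          # hit, populated by the write
    print("hits:", cache.hits, "misses:", cache.misses)
```

Only items already in the cache benefit from the lower latency, so the hit ratio is the metric to watch.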

Networking

In AWS, networking is available in several different types and configurations through compute, storage, network, routing, and edge services.

- Choose the appropriate Region or Regions for your deployment based on user location, data location, and security and compliance requirements

- Use edge services such as a content delivery network (CDN) and the Domain Name System (DNS) to reduce latency. To gain the most benefit, make sure cache control is configured correctly for both DNS and HTTP/HTTPS

- Use Application Load Balancer for HTTP and HTTPS traffic request routing for modern application architectures using microservices and containers

- Use the Network Load Balancer for load balancing of TCP traffic where extreme performance is required. It is capable of handling millions of requests per second while maintaining ultra-low latencies, and it is also optimized to handle sudden and volatile traffic patterns
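For the HTTP side of the cache-control guideline above, the sketch below builds a `Cache-Control` header value from a few standard directives. The `cache_control` helper and its parameters are illustrative, and the right values depend on how often your content changes.

```python
from typing import Optional


def cache_control(max_age: int, shared_max_age: Optional[int] = None,
                  public: bool = True, immutable: bool = False) -> str:
    """Build a Cache-Control header value from a few common directives."""
    if max_age < 0 or (shared_max_age is not None and shared_max_age < 0):
        raise ValueError("ages must be non-negative")
    parts = ["public" if public else "private", f"max-age={max_age}"]
    if shared_max_age is not None:
        # s-maxage applies to shared caches such as a CDN edge.
        parts.append(f"s-maxage={shared_max_age}")
    if immutable:
        parts.append("immutable")
    return ", ".join(parts)


if __name__ == "__main__":
    # Short browser lifetime, longer lifetime at the CDN edge.
    print(cache_control(60, shared_max_age=86400))
    # Fingerprinted static asset that never changes under the same URL.
    print(cache_control(31536000, immutable=True))
```

Setting a longer `s-maxage` than `max-age` lets the edge absorb most requests while browsers still revalidate fairly often.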

Key AWS Network Services to achieve performance:

- Amazon EBS-optimized instances: They offer an optimized configuration stack that minimizes contention between Amazon EBS I/O and other traffic from your instance

- Amazon S3 Transfer Acceleration: This Amazon S3 feature lets external users benefit from the networking optimizations of CloudFront when uploading data to Amazon S3

- Amazon Elastic Network Adapters (ENA): They provide further optimization by delivering 20 Gbps of network capacity for your instances within a single placement group

- Amazon CloudFront: It is a global CDN whose network of edge locations caches content and provides high-performance network connectivity to end users

- Amazon Route 53: It offers latency-based routing (LBR) to help improve your application's performance by routing your customers to the AWS endpoint (for EC2 instances, Elastic IP addresses, or ELB load balancers) that provides the lowest latency

- Amazon Virtual Private Cloud (Amazon VPC): Using VPC endpoints, the data between your VPC and another AWS service is transferred within the Amazon network, helping protect your instances from internet traffic and improve performance

- AWS Direct Connect: It provides dedicated connectivity to the AWS environment, from 50 Mbps up to 10 Gbps
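The idea behind latency-based routing in the list above is to send each client to the endpoint that responds fastest for it. The sketch below shows that selection step only. The `pick_endpoint` helper and the sample measurements are hypothetical, and this is not how Route 53 itself is implemented or configured.

```python
from statistics import median


def pick_endpoint(latency_samples_ms: dict) -> str:
    """Return the endpoint whose median measured latency is lowest."""
    if not latency_samples_ms:
        raise ValueError("no endpoints to choose from")
    return min(latency_samples_ms,
               key=lambda name: median(latency_samples_ms[name]))


if __name__ == "__main__":
    # Hypothetical measurements from one client location, in milliseconds.
    samples = {
        "us-east-1": [82, 79, 90],
        "eu-west-1": [24, 31, 27],
        "ap-south-1": [140, 133, 151],
    }
    print(pick_endpoint(samples))
```

Using the median rather than the mean keeps a single slow measurement from changing the choice.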

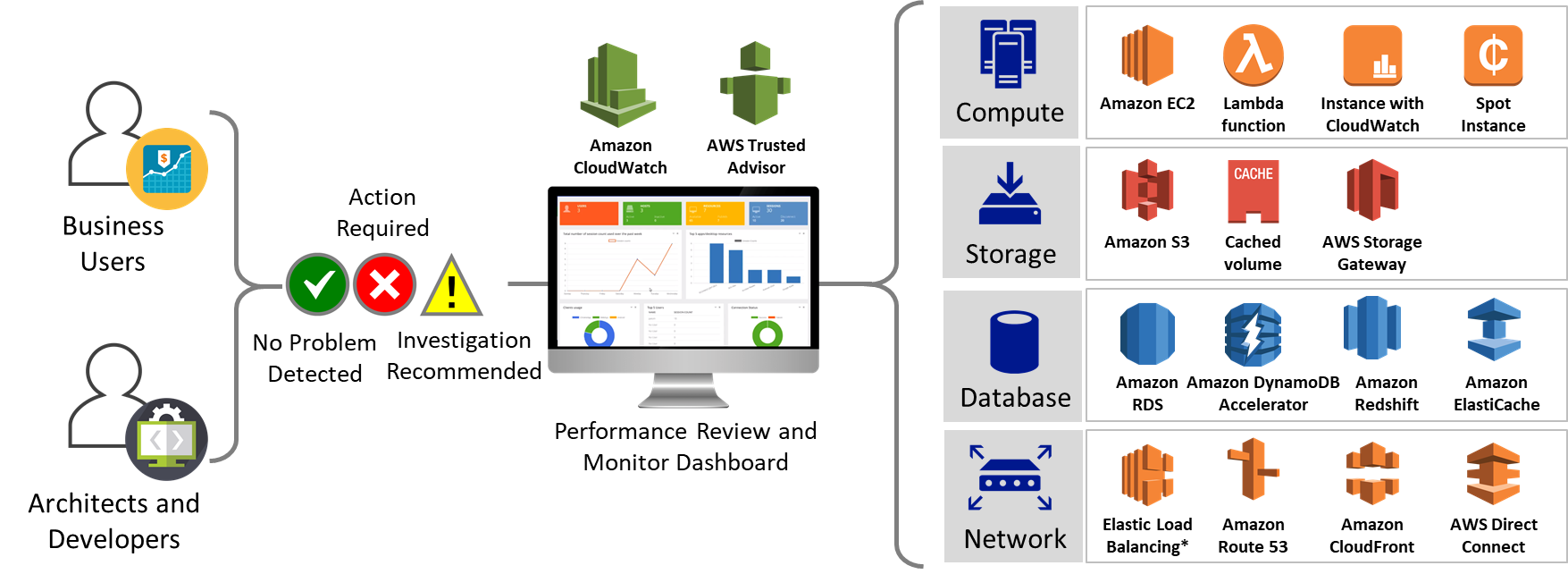

Review and Monitor Performance

Once you have identified your approach to a high-performance AWS architecture for your solution, set up an automated process to measure, monitor, and review performance, so you can confirm that your chosen resource types and configuration options perform as expected against your custom and industry benchmarks.

- Performance Measurement Metrics and Benchmarks: Build your own custom benchmarks or use industry-standard ones, such as TPC-DS (to benchmark your data warehousing workloads). It is also recommended that you use both technical and business metrics. For example, key technical metrics for a website or mobile app are rendering time, thread count, garbage collection rate, and wait states, while business metrics include the aggregate cumulative cost per request, requests per user and per microservice, alerts that help drive down costs, and more

- Automated Performance Testing: Set up a CI/CD process that automatically triggers performance tests after the functional tests have passed for each build. The automation should create a new environment, set up initial conditions, load test data, and then execute performance and load tests. Results from these tests should be tied back to the build so you can track performance changes over time

- Automated Testing: Create a cloud solution test automation framework that runs a series of test scripts replicating pre-recorded user journeys and data access patterns, so that you can predict the behavior of AWS resource usage in production as closely as possible. You can also use software or software-as-a-service (SaaS) solutions to generate the required load

- Performance Monitoring Dashboard: Key technical and business metrics should be visible to your team for each build version to ensure performance efficiency. Create visualizations to monitor performance issues, hot spots, wait states, or low utilization.
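Tying performance results back to each build, as described above, usually ends in a pass/fail gate. The sketch below compares a candidate build's 95th-percentile latency with a baseline and flags a regression beyond a tolerance. The `percentile` and `regressed` helpers, the nearest-rank method, and the 10% tolerance are illustrative choices, not a prescribed standard.

```python
import math


def percentile(samples, pct: float) -> float:
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def regressed(baseline_ms, candidate_ms, pct: float = 95,
              tolerance: float = 0.10) -> bool:
    """True if the candidate percentile exceeds the baseline by more than tolerance."""
    base = percentile(baseline_ms, pct)
    cand = percentile(candidate_ms, pct)
    return cand > base * (1 + tolerance)


if __name__ == "__main__":
    baseline = list(range(1, 101))             # hypothetical latencies in ms
    slower = [x * 1.2 for x in baseline]       # candidate build, 20% slower
    print("regression detected:", regressed(baseline, slower))
```

A CI/CD step could fail the build when `regressed` returns `True` and store both percentile values alongside the build number for trend tracking.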

Key AWS performance review and monitoring services:

- Amazon CloudWatch: It helps you collect and publish custom and business metrics, and set alarms that notify you when a threshold is breached, indicating that a test is outside of expected performance

- AWS Trusted Advisor: It checks EC2 utilization, service limits, security groups with a large number of rules (which can degrade performance), resource record sets, overutilized EBS volumes, HTTP request headers for CloudFront, EC2-to-EBS throughput, incorrectly configured DNS settings, and many more performance checkpoints, and it recommends the actions required
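The threshold alarms described above can be modeled as "alarm when enough of the most recent datapoints breach the threshold". The sketch below is a simplified model of that idea, not the CloudWatch API: the `alarm_state` helper and its parameters are illustrative, and it ignores details such as how missing data is treated.

```python
def alarm_state(datapoints, threshold: float,
                evaluation_periods: int, datapoints_to_alarm: int) -> str:
    """Return "ALARM" if enough of the most recent datapoints breach the threshold."""
    if not 1 <= datapoints_to_alarm <= evaluation_periods:
        raise ValueError(
            "datapoints_to_alarm must be between 1 and evaluation_periods")
    recent = datapoints[-evaluation_periods:]
    if len(recent) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    breaching = sum(1 for value in recent if value > threshold)
    return "ALARM" if breaching >= datapoints_to_alarm else "OK"


if __name__ == "__main__":
    # Hypothetical p95 latency per minute, in milliseconds.
    latencies = [100, 120, 510, 530, 540]
    print(alarm_state(latencies, threshold=500,
                      evaluation_periods=3, datapoints_to_alarm=3))
```

Requiring several breaching datapoints instead of one reduces noise from short spikes, at the cost of a slower reaction.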
