Optimized Metrics Generation With Metadata-Driven Dynamic SQL

Learn about a metadata-driven approach to generate metrics with dynamic SQL, enhancing scalability, flexibility, and efficiency in auditing and reporting.

Join the DZone community and get the full member experience.

Join For FreeThe creation of precise and adaptable metrics remains crucial for successful monitoring, auditing, and reporting in present-day data-centric settings. This article describes an optimized method to create metrics and dynamic SQL with metadata-based techniques using Oracle packages. This process combines configuration tables, dynamic SQL templates, and merge operations to create a metrics generation framework that scales well and remains easy to maintain and adjust.

Background

The process is built around an audit package and a set of Data Definition Language (DDL) statements that define critical tables for storing audit facts, dynamic SQL templates, and metric results.

Key tables include:

AUDIT_REPORTING_FCT: Stores audit fact records.AUDIT_REPORTING_QUERY: Contains SQL templates with placeholders.AUDIT_REPORTING_METADATA: Provide metadata (e.g., audit column mappings and descriptions).AUDIT_OVERALL_METRICS,AUDIT_DAILY_METRICS: These are different group of the metrics which can be defined based on nature of their reporting. For example, we are using overall metrics which can aggregate the data across the batch while we have daily metrics which are reported based on daily batch processing.AUDIT_DWH: Captures data warehouse metrics such as row counts. This can be partition by the day to compare daily stats on the datawarehouse tables.

This metadata-driven design allows the system to generate dynamic SQL on the fly, adapting to changing business rules without modifying core code.

Data Model and Architecture

Core Components

1. Batch and Execution Metadata

- Batch: Contains run identifiers (e.g.,

BATCH_ID) and cycle information. - Batch execution: Ties each execution (

EXECUTION_ID) to a batch, ensuring traceability.

2. File Processing and Validation

- Batch files and file segments: Track data files, their segments, and required validations.

- Lookup rules: Define validations for not-null checks and referential integrity.

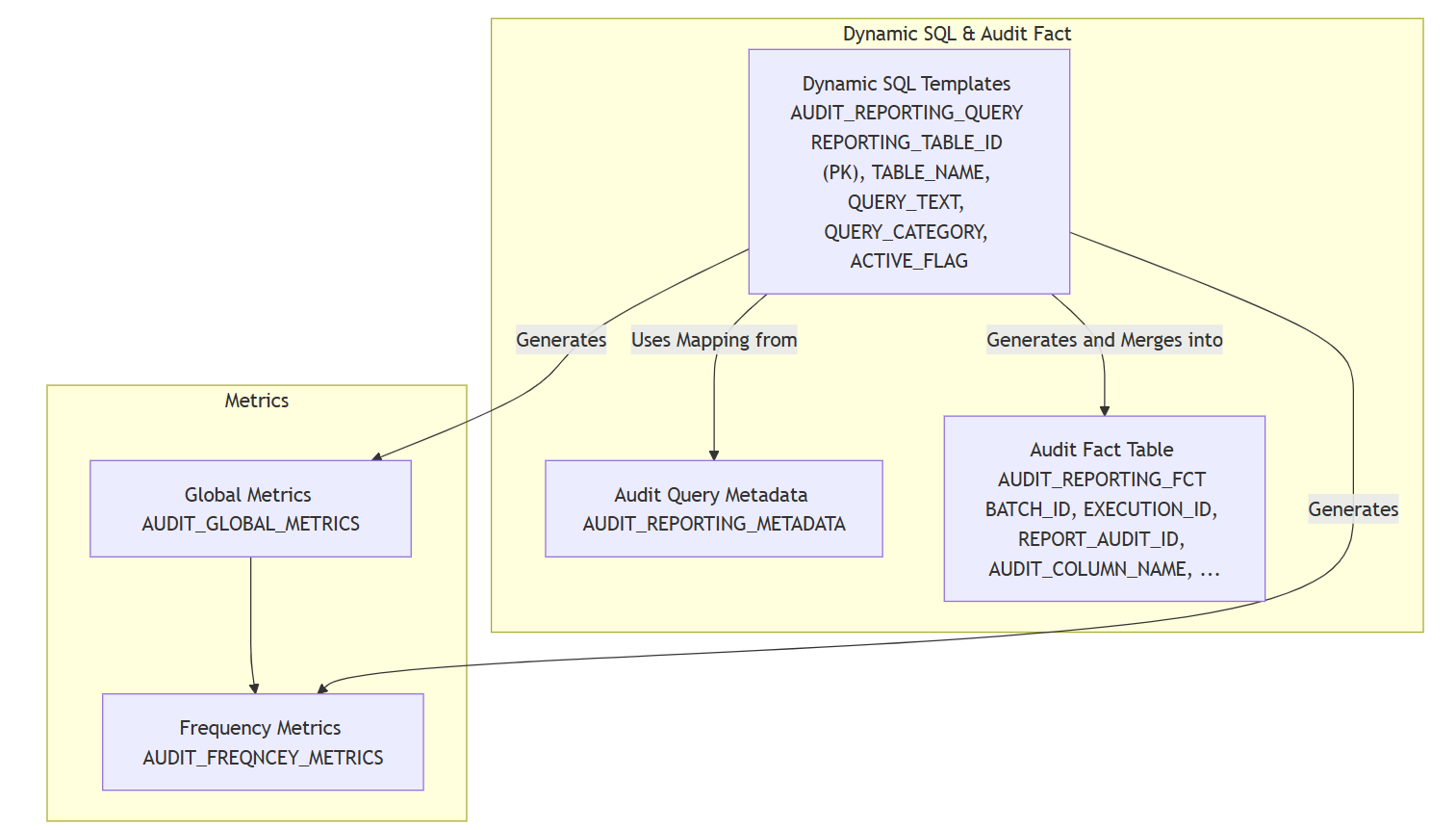

3. Dynamic SQL and Audit Fact Tables

- Dynamic SQL templates: Stored in

AUDIT_REPORTING_QUERYwith associated metadata. - Audit fact table (

AUDIT_REPORTING_FCT): Merges dynamically generated audit results.

4. Metrics Storage

- Overall metrics (

AUDIT_OVERALL_METRICS): Aggregates metrics across the batch. - Daily metrics (

AUDIT_DAILY_METRICS): Captures metrics at defined intervals (e.g., daily). - Metrics master (

AUDIT_REPORTING_METADATA): Defines metric codes, frequencies, and descriptions.

5. Data Warehouse Metrics

-

DWH metrics (

AUDIT_DWH): Records row counts and update statistics for warehouse tables.

Textual diagram for dynamic SQL generation:

End-to-End Process of Generating Metrics

1. Batch Initialization and Data Loading

A new batch is initiated, generating a unique BATCH_ID and EXECUTION_ID. If the batch process is set to run on a daily basis, a new BATCH_ID is generated on a daily basis. If we need to reload the same-day data, we can re-use the existing IDs, or we can generate a new one depending on the downstream table data.

Once BATCH_ID is created, data files are loaded into staging tables, and any prior batch validation records are cleaned up to ensure a fresh start. The staging tables are set for truncate and reload for every run.

2. Data Validation

In the data validation step, we will run the validation store proc, which executes to clean up stale validation records and perform not-null and lookup validations using dynamically constructed SQL. Each validation step logs its actions for future troubleshooting.

3. Metrics Extraction from Source Systems

Multiple store procedures are executed to generate the aggregated metrics by querying data warehouse and staging environments. These metrics are first populated in temporary or staging tables before final consolidation.

4. Dynamic SQL Generation for Audit Reporting

Dynamic SQL is built using active query templates from AUDIT_REPORTING_QUERY. Placeholders (e.g., <execution_dt>, <batch_id>) are replaced with actual runtime values.

After assembly, the SQL is executed, and its results are merged into the audit fact table (AUDIT_REPORTING_FACT) using efficient MERGE operations.

Example PL/SQL code snippet:

DECLARE

v_sql_clob CLOB;

v_cycle_dt DATE := SYSDATE;

v_batch_id NUMBER;

v_execution_id NUMBER := 1001;

v_record_cnt NUMBER;

BEGIN

-- Retrieve current batch ID from the batch table

SELECT MAX(batch_id)

INTO v_batch_id

FROM some_batch_table; -- Replace with the actual batch table

-- Assemble dynamic SQL using active query templates for the 'P0_REPORT' category

SELECT TRIM(XMLCAST(XMLAGG(XMLELEMENT(e, QUERY_TEXT)) AS CLOB))

INTO v_sql_clob

FROM AUDIT_REPORTING_QUERY

WHERE ACTIVE_FLAG = 'Y'

AND QUERY_CATEGORY = 'P0_REPORT';

-- Replace placeholders with actual runtime values

v_sql_clob := REPLACE(v_sql_clob, '<cycle_dt>',

'TO_DATE(''' || TO_CHAR(v_cycle_dt, 'YYYY-MM-DD') || ''',''YYYY-MM-DD'')');

v_sql_clob := REPLACE(v_sql_clob, '<batch_id>', TO_CHAR(v_batch_id));

-- Optional: Log the generated dynamic SQL for debugging

DBMS_OUTPUT.PUT_LINE('Generated Dynamic SQL:');

DBMS_OUTPUT.PUT_LINE(v_sql_clob);

-- Execute the dynamic SQL (typically returns results to be merged into the audit fact table)

EXECUTE IMMEDIATE v_sql_clob;

-- Capture the number of processed rows

v_record_cnt := SQL%ROWCOUNT;

DBMS_OUTPUT.PUT_LINE('Records processed: ' || v_record_cnt);

-- Example merge into the audit fact table

MERGE INTO AUDIT_REPORTING_FACT tgt

USING (

SELECT v_batch_id AS batch_id,

v_exec_id AS exec_id,

101 AS rpt_audit_id, -- Example audit ID; in practice, derived from metadata

'COLUMN_X' AS audit_column_name,

'Display Name for Column X' AS audit_display_name,

v_cycle_dt AS audit_date_value_1,

123 AS audit_value_1

FROM dual

) src

ON (tgt.BATCH_ID = src.batch_id AND tgt.RPT_AUDIT_ID = src.rpt_audit_id)

WHEN MATCHED THEN

UPDATE SET tgt.AUDIT_VALUE_1 = src.audit_value_1,

tgt.UPDATED_DT = SYSTIMESTAMP

WHEN NOT MATCHED THEN

INSERT (BATCH_ID, EXECUTION_ID, RPT_AUDIT_ID, AUDIT_COLUMN_NAME,

AUDIT_DISPLAY_NAME, AUDIT_DATE_VALUE_1, AUDIT_VALUE_1,

CYCLE_DT, CREATED_DT, UPDATED_DT)

VALUES (src.batch_id, src.exec_id, src.rpt_audit_id, src.audit_column_name,

src.audit_display_name, src.audit_date_value_1, src.audit_value_1,

v_cycle_dt, SYSTIMESTAMP, SYSTIMESTAMP);

COMMIT;

EXCEPTION

WHEN OTHERS THEN

DBMS_OUTPUT.PUT_LINE('Error encountered: ' || SQLERRM);

ROLLBACK;

RAISE;

END;

5. Metrics Aggregation

As mentioned in the previous section, frequency metrics and global metrics are generated using the metadata stored in their respective tables. The composite keys are added to the tables to ensure data integrity.

6. Logging, Exception Handling, and Finalization

Throughout the process, information can be logged using separate logging proc, which records key actions and any errors encountered. Robust error handling also ensures that failures trigger appropriate rollbacks and detailed error messages.

Finally, once all steps complete successfully, the process commits the data into the final audit and metric tables.

Advantages and Disadvantages

Advantages

Flexibility and Configurability

- SQL templates and mapping metadata allow changes without altering core code.

- New metrics or audit rules are implemented by updating configuration records.

Dynamic Adaptability

-

The system adapts to varying data structures and reporting needs at runtime.

Centralized Control

-

All dynamic queries originate from a central repository, ensuring consistency.

Efficient Merging

-

The use of

MERGEoperations minimizes locking and efficiently handles both inserts and updates.

Detailed Logging

-

Extensive logging enhances traceability and simplifies troubleshooting.

Disadvantages

Complexity

-

Dynamic SQL is inherently harder to debug and maintain due to concatenation and placeholder replacements.

Performance Overhead

-

Runtime construction of SQL (especially with XML aggregation) may introduce additional CPU/memory overhead.

Security Concerns

-

Although typically in controlled environments, dynamic SQL can increase the risk of SQL injection if not properly managed.

Testing Challenges

-

The distributed logic between metadata and dynamic code can complicate comprehensive testing.

Alternative Solutions

While the dynamic, metadata-driven approach offers significant flexibility, alternatives exist:

1. Parameterized Stored Procedures or Views

Instead of constructing SQL dynamically, develop stored procedures or views with fixed SQL that accepts parameters.

Advantages

- Improved performance due to precompiled SQL and reusable execution plans.

- Easier to test and debug.

Disadvantages

-

Reduced flexibility; changes may require code deployments.

Example: Parameterized stored procedure.

CREATE OR REPLACE PROCEDURE generate_audit_metrics (

p_batch_id IN NUMBER,

p_cycle_dt IN DATE

)

AS

v_record_cnt NUMBER;

BEGIN

MERGE INTO AUDIT_REPORTING_FACT tgt

USING (

SELECT p_batch_id AS batch_id,

1001 AS execution_id, -- Could be a parameter

101 AS rpt_audit_id,

'COLUMN_X' AS audit_column_name,

'Display Name for Column X' AS audit_display_name,

p_cycle_dt AS audit_date_value_1,

123 AS audit_value_1

FROM dual

) src

ON (tgt.BATCH_ID = src.batch_id AND tgt.RPT_AUDIT_ID = src.rpt_audit_id)

WHEN MATCHED THEN

UPDATE SET tgt.AUDIT_VALUE_1 = src.audit_value_1,

tgt.UPDATED_DT = SYSTIMESTAMP

WHEN NOT MATCHED THEN

INSERT (BATCH_ID, EXECUTION_ID, RPT_AUDIT_ID, AUDIT_COLUMN_NAME,

AUDIT_DISPLAY_NAME, AUDIT_DATE_VALUE_1, AUDIT_VALUE_1,

CYCLE_DT, CREATED_DT, UPDATED_DT)

VALUES (src.batch_id, src.execution_id, src.rpt_audit_id, src.audit_column_name,

src.audit_display_name, src.audit_date_value_1, src.audit_value_1,

p_cycle_dt, SYSTIMESTAMP, SYSTIMESTAMP);

v_record_cnt := SQL%ROWCOUNT;

DBMS_OUTPUT.PUT_LINE('Records processed: ' || v_record_cnt);

COMMIT;

EXCEPTION

WHEN OTHERS THEN

DBMS_OUTPUT.PUT_LINE('Error: ' || SQLERRM);

ROLLBACK;

RAISE;

END generate_audit_metrics;

2. ETL/ELT Tools and Materialized Views

ETL/ELT Platforms

Use dedicated data integration tools (e.g., Oracle Data Integrator, Informatica) to handle transformations and loading, separating business logic from the database.

Materialized Views

Pre-aggregate complex queries in materialized views that refresh on a schedule, reducing the need for runtime dynamic SQL.

Conclusion

The metadata-driven dynamic SQL approach for generating metrics offers significant flexibility and centralized control, making it an attractive solution for environments with evolving reporting requirements. This architecture ensures scalability and easier maintenance by dynamically assembling SQL from configuration tables and leveraging merge operations. However, it comes with challenges such as increased complexity, performance overhead, and testing difficulties.

Alternative solutions, such as parameterized stored procedures, ETL tools, or materialized views, provide a more static and often more performant environment at the expense of flexibility. The choice between these approaches depends on your organization’s priorities in terms of agility, performance, and maintainability.

This comprehensive overview and sample implementation serve as a guide for designing an optimized metrics generation process that can be adapted to diverse business needs while ensuring accurate and efficient reporting.

Opinions expressed by DZone contributors are their own.

Comments