Parent Document Retrieval (PDR): Useful Technique in RAG

PDR helps a RAG system give accurate, nuanced answers to complex queries. This step-by-step walkthrough implements it with LangChain, OpenAI, and Chroma.

What Is Parent Document Retrieval (PDR)?

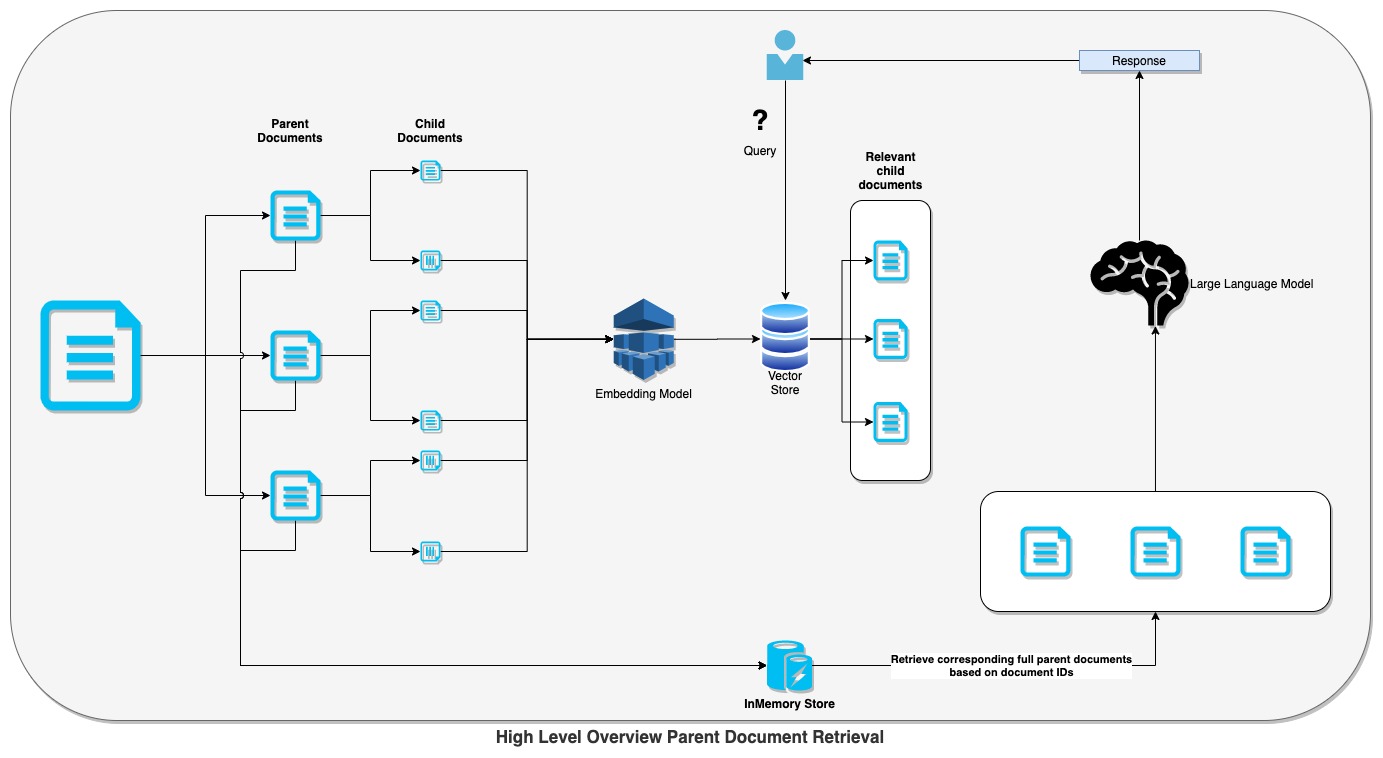

Parent Document Retrieval is a method used in RAG systems: the retriever matches a query against small child passages or snippets, then returns the full parent documents those passages were extracted from. This enriched context is passed on to the RAG model, which can then give more comprehensive, information-rich responses to complex or nuanced questions.

Major steps in parent document retrieval in RAG models include:

- Data preprocessing: Breaking very long documents into manageable pieces

- Create embeddings: Convert pieces into numerical vectors for efficient search

- User query: User submits a question

- Chunk retrieval: Model retrieves the pieces most similar to the query embedding

- Find parent document: Retrieve original documents or bigger pieces of them from which these pieces were taken

- Parent Document Retrieval: Retrieve full parent documents to provide more context for the response
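The steps above can be sketched in plain Python before bringing in any library. This is only a toy illustration under stated assumptions: `embed` is a bag-of-words stand-in for a real embedding model, `split` is a naive fixed-width splitter, and `ToyParentRetriever` and the sample texts are hypothetical names and data invented for this example. It is not how LangChain implements the technique internally.

```python
import math
import re
import uuid
from collections import Counter


def embed(text):
    """Toy embedding: a bag-of-words count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def split(text, chunk_size):
    """Naive fixed-width splitter; real splitters respect text boundaries."""
    return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]


class ToyParentRetriever:
    def __init__(self, chunk_size=60):
        self.chunk_size = chunk_size
        self.parents = {}    # parent_id -> full document text
        self.children = []   # (chunk embedding, parent_id)

    def add_documents(self, docs):
        # Store each parent once, and index its chunks with a pointer back to it.
        for doc in docs:
            parent_id = str(uuid.uuid4())
            self.parents[parent_id] = doc
            for chunk in split(doc, self.chunk_size):
                self.children.append((embed(chunk), parent_id))

    def retrieve(self, query, k=2):
        # Rank chunks by similarity, then return each matching parent only once.
        q = embed(query)
        ranked = sorted(self.children, key=lambda c: cosine(q, c[0]), reverse=True)
        seen, results = set(), []
        for _, parent_id in ranked[:k]:
            if parent_id not in seen:
                seen.add(parent_id)
                results.append(self.parents[parent_id])
        return results


# Hypothetical sample documents, used only to exercise the sketch.
docs = [
    "Green tea is brewed with water below boiling. "
    "Steep the leaves for two minutes and serve.",
    "A bicycle chain needs regular oil. "
    "Wipe off the excess so that dust does not stick.",
]
retriever = ToyParentRetriever(chunk_size=60)
retriever.add_documents(docs)
hits = retriever.retrieve("brew green tea", k=1)
print(hits[0])
```

The search runs over small chunks, but the caller receives the whole parent text, and two chunks from the same parent yield that parent only once.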

Step-By-Step Implementation

The steps for implementing parent document retrieval comprise four different stages:

1. Prepare the Data

We first set up the environment and preprocess the data for our parent document retrieval system.

A. Import Necessary Modules

We will import the required modules from the installed libraries to set up our PDR system:

from langchain.schema import Document
from langchain.vectorstores import Chroma
from langchain.retrievers import ParentDocumentRetriever
from langchain.chains import RetrievalQA
from langchain_openai import OpenAI
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain.storage import InMemoryStore
from langchain.document_loaders import TextLoader
from langchain.embeddings.openai import OpenAIEmbeddings

These libraries and modules form a major part of the steps that follow. Depending on your LangChain version, some of these import paths may emit deprecation warnings; newer releases expose Chroma and TextLoader through langchain_community and OpenAIEmbeddings through langchain_openai.

B. Set Up the OpenAI API Key

We are using an OpenAI LLM for response generation, so we will need an OpenAI API key. Set the OPENAI_API_KEY environment variable with your key:

import os

# Read the key from the environment instead of hard-coding it in the source
OPENAI_API_KEY = os.environ.get("OPENAI_API_KEY", "")
if not OPENAI_API_KEY:
    raise ValueError("Please set the OPENAI_API_KEY environment variable")

C. Define the Text Embedding Function

We will leverage OpenAI's embeddings to represent our text data:

embeddings = OpenAIEmbeddings()

D. Load Text Data

Now, read in the text documents you would like to retrieve. You can leverage the class TextLoader for reading text files:

loaders = [
    TextLoader('/path/to/your/document1.txt'),
    TextLoader('/path/to/your/document2.txt'),
]
docs = []
for loader in loaders:
    docs.extend(loader.load())

2. Retrieve Full Documents

Here, we will set up the system to retrieve the full parent documents whose child passages are relevant to a query.

A. Full Document Splitting

We'll use RecursiveCharacterTextSplitter to split the loaded documents into smaller text chunks of a desired size. These child documents will allow us to search efficiently for relevant passages:

child_splitter = RecursiveCharacterTextSplitter(chunk_size=400)
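To give a feel for what a recursive character splitter does with `chunk_size`, here is a simplified sketch. It assumes a fixed separator list and ignores chunk overlap and the other options of the real class, so treat `recursive_split` as a hypothetical helper for illustration, not as LangChain's algorithm. The idea is to try the coarsest separator first and fall back to finer ones only for pieces that are still too long.

```python
def recursive_split(text, chunk_size, separators=("\n\n", "\n", " ", "")):
    """Split text into pieces of at most chunk_size characters,
    preferring the coarsest separator that keeps pieces small enough."""
    if len(text) <= chunk_size:
        return [text] if text else []
    sep, rest = separators[0], separators[1:]
    if sep == "":
        # Last resort: hard cut every chunk_size characters.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    chunks, current = [], ""
    for part in text.split(sep):
        candidate = part if not current else current + sep + part
        if len(candidate) <= chunk_size:
            # Keep merging neighbouring parts while they still fit.
            current = candidate
            continue
        if current:
            chunks.append(current)
            current = ""
        if len(part) <= chunk_size:
            current = part
        else:
            # This part alone is too long: retry it with a finer separator.
            chunks.extend(recursive_split(part, chunk_size, rest))
    if current:
        chunks.append(current)
    return chunks


sample = (
    "First paragraph about retrieval.\n\n"
    "Second paragraph, which is a little longer than the first one.\n\n"
    "Third."
)
chunks = recursive_split(sample, chunk_size=40)
for chunk in chunks:
    print(len(chunk), repr(chunk))
```

Short paragraphs survive intact, while the long middle paragraph is broken at word boundaries rather than mid-word.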

B. Vector Store and Storage Setup

In this section, we will use a Chroma vector store to hold the embeddings of the child documents and an InMemoryStore to keep track of the full parent documents associated with them:

vectorstore = Chroma(
    collection_name="full_documents",
    embedding_function=OpenAIEmbeddings()
)
store = InMemoryStore()

C. Parent Document Retriever

Now, let us instantiate the ParentDocumentRetriever class. It handles the core logic of retrieving full parent documents based on child document similarity.

full_doc_retriever = ParentDocumentRetriever(
    vectorstore=vectorstore,
    docstore=store,
    child_splitter=child_splitter
)

D. Adding Documents

These loaded documents will then be fed into the ParentDocumentRetriever using the add_documents method as follows:

full_doc_retriever.add_documents(docs)

print(list(store.yield_keys())) # List document IDs in the store

E. Similarity Search and Retrieval

Now that the retriever is implemented, you can retrieve relevant child documents given a query and fetch the relevant full parent documents:

sub_docs = vectorstore.similarity_search("What is LangSmith?", k=2)
print(len(sub_docs))
print(sub_docs[0].page_content)
retrieved_docs = full_doc_retriever.invoke("What is LangSmith?")
print(len(retrieved_docs[0].page_content))
print(retrieved_docs[0].page_content)

3. Retrieve Larger Chunks

Sometimes it may not be desirable to fetch the full parent document; for instance, in cases where documents are extremely big. Here is how you would fetch bigger pieces from the parent documents:

- Text splitting for chunks and parents:

  - Use two instances of RecursiveCharacterTextSplitter:

    - One creates larger parent documents of a certain size.

    - Another, with a smaller chunk size, creates text snippets (child documents) from the parent documents.

- Vector store and storage setup (like full document retrieval):

  - Create a Chroma vector store that indexes the embeddings of the child documents.

  - Use InMemoryStore, which holds the chunks of the parent documents.
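The two-level arrangement in the list above can be sketched with plain dictionaries. This is a minimal illustration under assumptions: `fixed_split` is a naive fixed-width splitter standing in for RecursiveCharacterTextSplitter, `index_two_levels` is a hypothetical helper name, and the document is synthetic filler text. The point is only the bookkeeping: each child chunk remembers which parent chunk it came from.

```python
def fixed_split(text, size):
    """Naive fixed-width splitter used as a stand-in for a real text splitter."""
    return [text[i:i + size] for i in range(0, len(text), size)]


def index_two_levels(document, parent_size=2000, child_size=400):
    docstore = {}      # parent_id -> parent chunk text (what gets returned)
    child_index = []   # (child chunk text, parent_id) pairs (what gets searched)
    for parent_id, parent in enumerate(fixed_split(document, parent_size)):
        docstore[parent_id] = parent
        for child in fixed_split(parent, child_size):
            child_index.append((child, parent_id))
    return docstore, child_index


# Synthetic 5000-character document, used only to show the chunk counts.
document = "".join(str(i % 10) for i in range(5000))
docstore, child_index = index_two_levels(document)

print(len(docstore))      # number of parent chunks
print(len(child_index))   # number of child chunks

# A match on any child chunk resolves to its (larger) parent chunk.
child_text, parent_id = child_index[7]
print(len(child_text), len(docstore[parent_id]))
```

Searching over the small children keeps matches precise, while returning the parent chunk bounds how much text reaches the LLM.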

A. Parent Document Retriever

This retriever addresses a fundamental tension in RAG: whole documents are often too large to retrieve and embed well, while small chunks may not contain sufficient context on their own. It chops documents into small chunks and indexes those chunks for retrieval. After a query, however, it returns not the small chunks themselves but the parent documents (or larger parent chunks) they came from, providing richer context for generation.

parent_splitter = RecursiveCharacterTextSplitter(chunk_size=2000)
child_splitter = RecursiveCharacterTextSplitter(chunk_size=400)
vectorstore = Chroma(
    collection_name="split_parents",
    embedding_function=OpenAIEmbeddings()
)
store = InMemoryStore()
big_chunks_retriever = ParentDocumentRetriever(
    vectorstore=vectorstore,
    docstore=store,
    child_splitter=child_splitter,
    parent_splitter=parent_splitter
)
# Adding documents
big_chunks_retriever.add_documents(docs)
print(len(list(store.yield_keys()))) # Number of parent chunks in the store

B. Similarity Search and Retrieval

The process is the same as for full document retrieval: we look for relevant child documents and then take the corresponding larger chunks from the parent documents.

sub_docs = vectorstore.similarity_search("What is LangSmith?", k=2)
print(len(sub_docs))
print(sub_docs[0].page_content)
retrieved_docs = big_chunks_retriever.invoke("What is LangSmith?")
print(len(retrieved_docs))
print(len(retrieved_docs[0].page_content))
print(retrieved_docs[0].page_content)

4. Integrate With RetrievalQA

Now that you have a parent document retriever, you can integrate it with a RetrievalQA chain to perform question answering over the retrieved parent documents. The "stuff" chain type used here places all retrieved documents into a single prompt for the LLM.
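Conceptually, "stuffing" means concatenating every retrieved document into one prompt. The sketch below shows that idea only; `build_stuff_prompt`, the prompt wording, and the sample texts are assumptions made for illustration and are not LangChain's actual template.

```python
def build_stuff_prompt(question, documents):
    """Concatenate all retrieved documents into a single prompt string."""
    context = "\n\n".join(documents)
    return (
        "Use the following context to answer the question.\n\n"
        f"{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Hypothetical retrieved parent chunks.
retrieved = [
    "Parent chunk one: background on the topic.",
    "Parent chunk two: further details.",
]
prompt = build_stuff_prompt("What is the topic?", retrieved)
print(prompt)
```

Because every retrieved document lands in one prompt, larger parent chunks consume more of the model's context window, which is one reason to prefer bounded parent chunks over very large full documents. The LangChain call itself follows.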

qa = RetrievalQA.from_chain_type(
    llm=OpenAI(),
    chain_type="stuff",
    retriever=big_chunks_retriever
)
query = "What is LangSmith?"
response = qa.invoke(query)
print(response) # A dict; the generated answer is under the "result" key

Conclusion

PDR helps RAG models produce accurate, context-rich responses. By searching over small chunks but returning their parent documents, it lets the model answer complex questions with both precision and depth.
