Quick and Simple Logging Framework Using JSON Logger

Mule 4's JSON Logger is a low-code solution for creating a global logging framework. We cover its implementation and uses in this article. Let's get started!

Introduction

In MuleSoft implementations, we often need a robust logging framework that helps development and operations teams monitor and debug transactions faster and more effectively.

In Mule 4, the JSON Logger makes creating such a logging framework faster and easier. Thanks to Andres Ramirez for releasing it; it is much more powerful than the out-of-the-box Mule logging component. In this blog we will explore some of its features. The intent of this blog is to demonstrate how the JSON Logger can make implementing a standard logging framework simple.

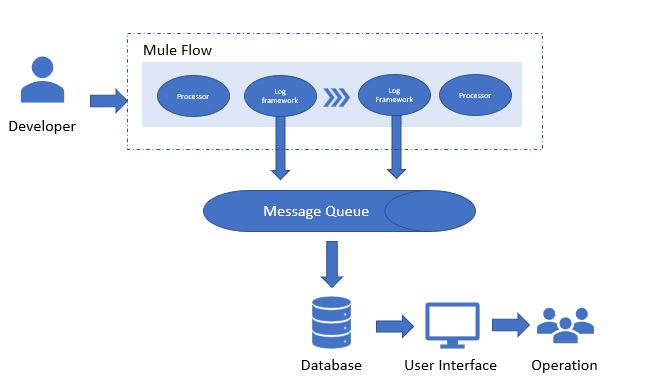

Customers often look for a centralized logging solution in which log messages can easily be forwarded to storage for future reference. For one of our customers, we recently decided to create a Mule logging framework along these lines:

Let us now dive deeper into the implementation.

Logging Framework With JSON Logger

Since the JSON Logger has out-of-the-box capabilities, it reduced the need for custom coding and made this implementation much faster and easier. The out-of-the-box capabilities of the JSON Logger that helped us most with our requirement are as follows:

- Publish message on Destination Queue (Without writing a single line of code)

- Data masking: obfuscate sensitive data

- Trace Point: flag the position in the flow from where logging is done

- Elapsed Time: indicate the time elapsed between two logging activities in a flow

We have created a simple logging framework using the JSON logger as the heart of it and parameterized the configuration. Developers can control the logging behavior by setting some variables and properties before calling the framework in the implementation flow.

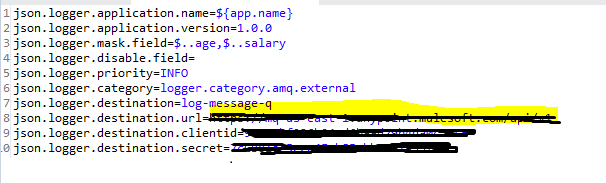

For our purpose, we built the framework so that developers set the trace point and the message (an OOTB JSON Logger feature for recording a trace message) through variables. For the rest of the configuration, developers set up a property file that configures the JSON Logger inside the logging framework. The sample property file is as follows:

Which settings are controlled through properties and which through variables can vary from enterprise to enterprise. As a rule of thumb, settings that need to change dynamically within a Mule implementation flow should be controlled through variables, while settings that are more static in nature can be controlled through property files.
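As a rough sketch of the keys such a property file would carry, the same settings can be written as Mule global properties. The key names are taken from the placeholders used in the framework configuration; every value is an illustrative assumption, including the queue name, the Anypoint MQ URL region, and the masked field names.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<mule xmlns="http://www.mulesoft.org/schema/mule/core"
      xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
      xsi:schemaLocation="http://www.mulesoft.org/schema/mule/core http://www.mulesoft.org/schema/mule/core/current/mule.xsd">

    <!-- Environment name reported in each log entry (placeholder value) -->
    <global-property name="mule.env" value="dev"/>

    <!-- Comma-separated fields to mask in the logged content (hypothetical field names) -->
    <global-property name="json.logger.mask.field" value="password,cardNumber"/>

    <!-- Destination queue and Anypoint MQ connection details (all placeholders) -->
    <global-property name="json.logger.destination" value="logging-queue"/>
    <global-property name="json.logger.destination.url" value="https://mq-us-east-1.anypoint.mulesoft.com/api/v1"/>
    <global-property name="json.logger.destination.clientid" value="REPLACE_WITH_CLIENT_ID"/>
    <global-property name="json.logger.destination.secret" value="REPLACE_WITH_CLIENT_SECRET"/>

    <!-- Category and priority applied by the framework's logger (assumed values) -->
    <global-property name="json.logger.category" value="logger.category.amq.external"/>
    <global-property name="json.logger.priority" value="INFO"/>
</mule>
```

In a real project the client ID and secret would more likely come from an encrypted or externally supplied property source than from plain values like these.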

Here is the code snippet of the Logging Framework using the JSON Logger:

<?xml version="1.0" encoding="UTF-8"?>
<mule xmlns:json-logger="http://www.mulesoft.org/schema/mule/json-logger" xmlns="http://www.mulesoft.org/schema/mule/core"
    xmlns:doc="http://www.mulesoft.org/schema/mule/documentation"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://www.mulesoft.org/schema/mule/core http://www.mulesoft.org/schema/mule/core/current/mule.xsd
http://www.mulesoft.org/schema/mule/json-logger http://www.mulesoft.org/schema/mule/json-logger/current/mule-json-logger.xsd">

    <json-logger:config name="JSON_Logger_Config" doc:name="JSON Logger Config" doc:id="b643623e-507d-46a7-a55b-6cd638ce2f18" environment="${mule.env}" disabledFields="${json.logger.mask.field}" contentFieldsDataMasking="${json.logger.mask.field}">
        <json-logger:external-destination >
            <json-logger:amq-destination queueOrExchangeDestination="${json.logger.destination}" url="${json.logger.destination.url}" clientId="${json.logger.destination.clientid}" clientSecret="${json.logger.destination.secret}" >
                <json-logger:log-categories >
                    <json-logger:log-category value="logger.category.amq.external" />
                </json-logger:log-categories>
            </json-logger:amq-destination>
        </json-logger:external-destination>
    </json-logger:config>

    <flow name="jsonlogger-logging-frameworkFlow" doc:id="b7fcd83e-2fad-45e4-a253-fb47894befbf" >
        <json-logger:logger doc:name="Logger" doc:id="ca5b85b1-ad6e-4f80-bf89-6914144c6620" config-ref="JSON_Logger_Config" message="#[vars.logMessage default 'No Message defined']" tracePoint="#[vars.tracepoint]" category="${json.logger.category}" priority="${json.logger.priority}"/>
    </flow>

</mule>

We also set up a Category (refer to the code snippet above) to control message publication to the destination MQ. Please note it is not advisable to publish all log messages from a flow to a destination queue, as doing so can create a performance bottleneck.

We can therefore set up a Category, and the JSON Logger will publish a log message to the destination queue only when its Category matches a predefined value in the JSON Logger configuration. For example, we can set the Category to logger.category.amq.external for the tracePoints START and END. For all other tracePoints, the logging framework can be used without passing any value for Category. This way, the logging framework will publish log messages to the destination queue only at the start and at the end of the flow. This is one more very powerful feature of the JSON Logger.

Please note from the above code snippet and property file that we can also mask sensitive data in the logged payload. Because the fields to mask are set through a property, different flows can mask different sensitive data.

Because the JSON Logger is such a powerful component, we can create a flexible logging framework with very minimal coding (just setting the JSON Logger configuration parameters in a parameterized way).

Publish Logging Framework in Exchange

Once the framework is ready, it is time to publish it to Exchange as a Mule plugin. For that, we need some additional setup in the POM file and the local .m2 settings.xml. As a first step, add the code snippet below to the local .m2 settings.xml:

<servers>
    <server>
        <id>Exchange2</id>
        <username>Username to log in Anypoint platform</username>
        <password>Password to log in Anypoint platform</password>
    </server>
</servers>

Please note you can use any ID for your repository (the same ID must be used in the Logging Framework POM file). I have used the name "Exchange2".

Next, open the POM file for the logging framework and add a classifier of mule-plugin. This is very important; otherwise the asset will not be published to Exchange.

<plugin>
    <groupId>org.mule.tools.maven</groupId>
    <artifactId>mule-maven-plugin</artifactId>
    <version>${mule.maven.plugin.version}</version>
    <extensions>true</extensions>
    <configuration>
        <classifier>mule-plugin</classifier>
    </configuration>
</plugin>

Then add a distributionManagement section with your repository details (the Exchange where you want to publish the asset):

<distributionManagement>

<repository>

<id>Exchange2</id>

<name>Corporate Repository</name>

<url>https://maven.anypoint.mulesoft.com/api/v1/organizations/{organization ID}/maven</url>

<layout>default</layout>

</repository>

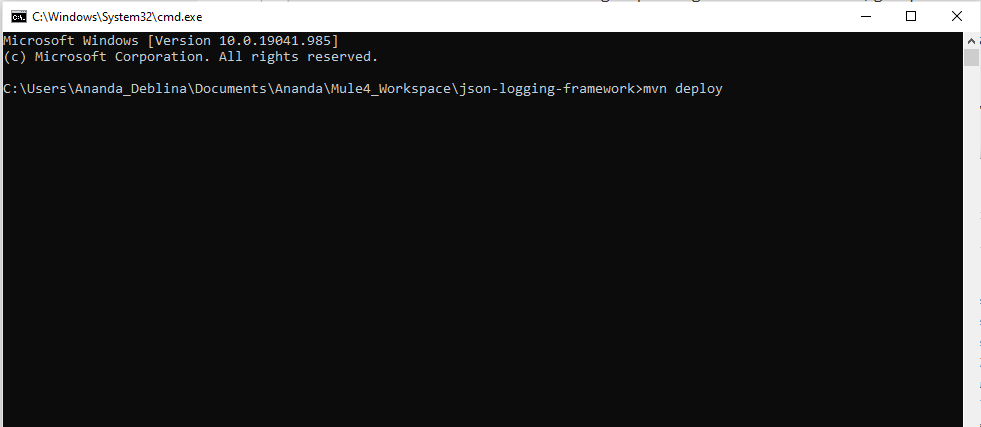

</distributionManagement>Now you can open the command prompt from the Logging Framework project folder and run the command mvn deploy to publish your asset on exchange -

Once the build completes successfully, the logging framework will be visible on Exchange (you may need to refresh the page).
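If the deploy is rejected, one thing worth checking is the project's coordinates: Exchange generally expects the groupId to be the Anypoint organization ID, the same one used in the repository URL. A minimal sketch of the coordinates under that assumption follows; the organization ID, artifactId, and version are placeholders, not values from the original project.

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0">
    <modelVersion>4.0.0</modelVersion>

    <!-- Placeholder: replace with your own Anypoint organization ID -->
    <groupId>00000000-0000-0000-0000-000000000000</groupId>

    <!-- Hypothetical asset name and version for the logging framework -->
    <artifactId>jsonlogger-logging-framework</artifactId>
    <version>1.0.0</version>

    <!-- Packaged as a Mule application; the mule-plugin classifier is set in the plugin configuration -->
    <packaging>mule-application</packaging>
</project>
```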

Add Logging Framework To Mule Implementation

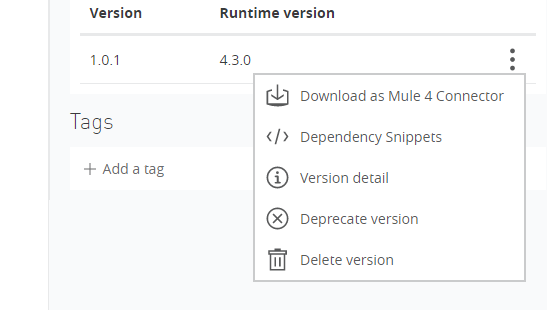

The next step is to add the framework as a Maven dependency to the Mule implementation flow. To do this, copy the dependency snippet from Exchange.

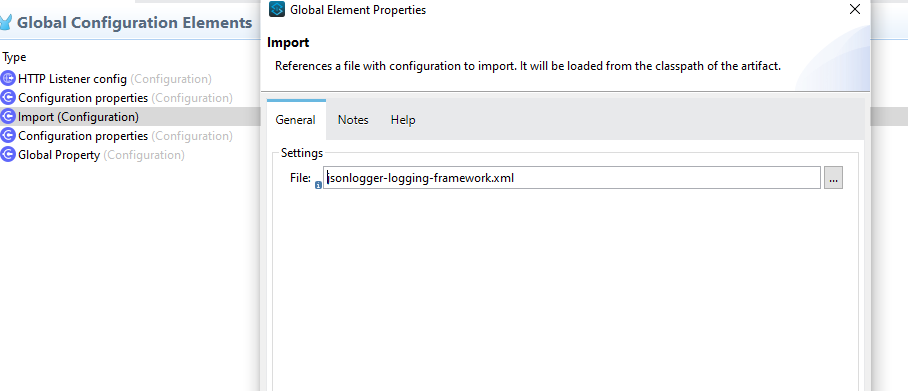
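The copied snippet typically has the shape sketched below. The groupId, artifactId, and version are placeholders standing in for whatever Exchange shows for your asset; the mule-plugin classifier matches the one configured when publishing.

```xml
<dependencies>
    <dependency>
        <!-- Placeholder: the Anypoint organization ID that owns the asset -->
        <groupId>00000000-0000-0000-0000-000000000000</groupId>
        <!-- Hypothetical asset name and version; copy the real ones from Exchange -->
        <artifactId>jsonlogger-logging-framework</artifactId>
        <version>1.0.0</version>
        <!-- Same classifier that was set in the framework's mule-maven-plugin configuration -->
        <classifier>mule-plugin</classifier>
    </dependency>
</dependencies>
```

The consuming project also has to be able to resolve artifacts from Exchange, which usually means a matching repository entry in its POM and the corresponding server credentials in settings.xml.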

Add the dependency snippet as a dependency in the POM file of the Mule application. You can then import the logging framework XML file from the global configuration:
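A minimal sketch of that import, assuming the framework's configuration file is named jsonlogger-logging-framework.xml (a hypothetical name; use the actual file name packaged in the plugin):

```xml
<?xml version="1.0" encoding="UTF-8"?>
<mule xmlns="http://www.mulesoft.org/schema/mule/core"
      xmlns:doc="http://www.mulesoft.org/schema/mule/documentation"
      xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
      xsi:schemaLocation="http://www.mulesoft.org/schema/mule/core http://www.mulesoft.org/schema/mule/core/current/mule.xsd">

    <!-- Makes the flows and configurations defined in the framework file available to this application -->
    <import doc:name="Import" file="jsonlogger-logging-framework.xml"/>

</mule>
```

Once the file is imported, the application can call the framework flow by name with a flow-ref.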

For demo purposes, we have created a Mule application flow as seen below:

<flow name="demo-json-loggerFlow" doc:id="cdaf7b4e-a533-4f99-b93b-6acf0bbc47a4" >
    <http:listener doc:name="Listener" doc:id="921b2d22-682b-42e8-a20d-91b5695f8055" config-ref="HTTP_Listener_config" path="${api.path}"/>
    <set-variable value="#['START']" doc:name="Set Start tracepoint" doc:id="d5d7a148-030d-4146-acfa-a210c8747083" variableName="tracepoint"/>
    <set-variable value="#['Message Defined - '++ vars.tracepoint]" doc:name="Set Start Log Message" doc:id="b99a75f9-d8fb-40b6-9df5-52e688b5fa64" variableName="logMessage"/>
    <flow-ref doc:name="Refer Log Framework-Start" doc:id="79e85682-ad40-4635-9ba2-0ca29c0f3d61" name="jsonlogger-logging-frameworkFlow"/>
    <set-variable value="#['END']" doc:name="Set End tracepoint" doc:id="2fcb6f4e-ce54-425d-a66d-c38b631a8ef7" variableName="tracepoint"/>
    <set-variable value="#['Message Defined - '++ vars.tracepoint]" doc:name="Set End Log Message" doc:id="bcc2f5c9-02da-4f46-a896-634ae7134513" variableName="logMessage"/>
    <flow-ref doc:name="Refer Log Framework-End" doc:id="afe47c95-ed48-44e2-8230-619e21c67beb" name="jsonlogger-logging-frameworkFlow"/>
</flow>

We also added a property file to control the logging framework behavior as shown in Diagram 1.

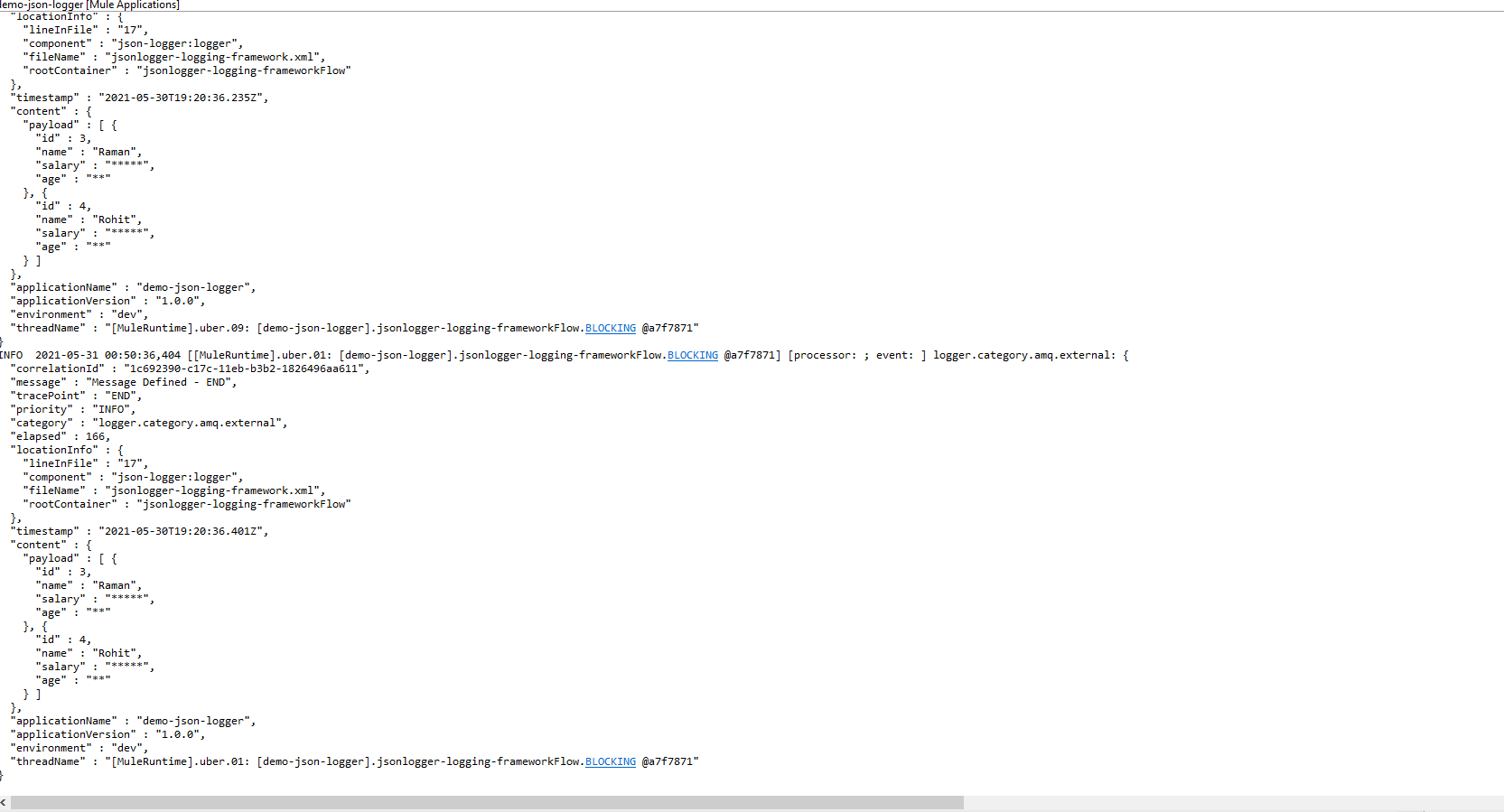

Once we run the demo flow and send a request through Postman, we can see the log messages from the underlying JSON Logger in the console:

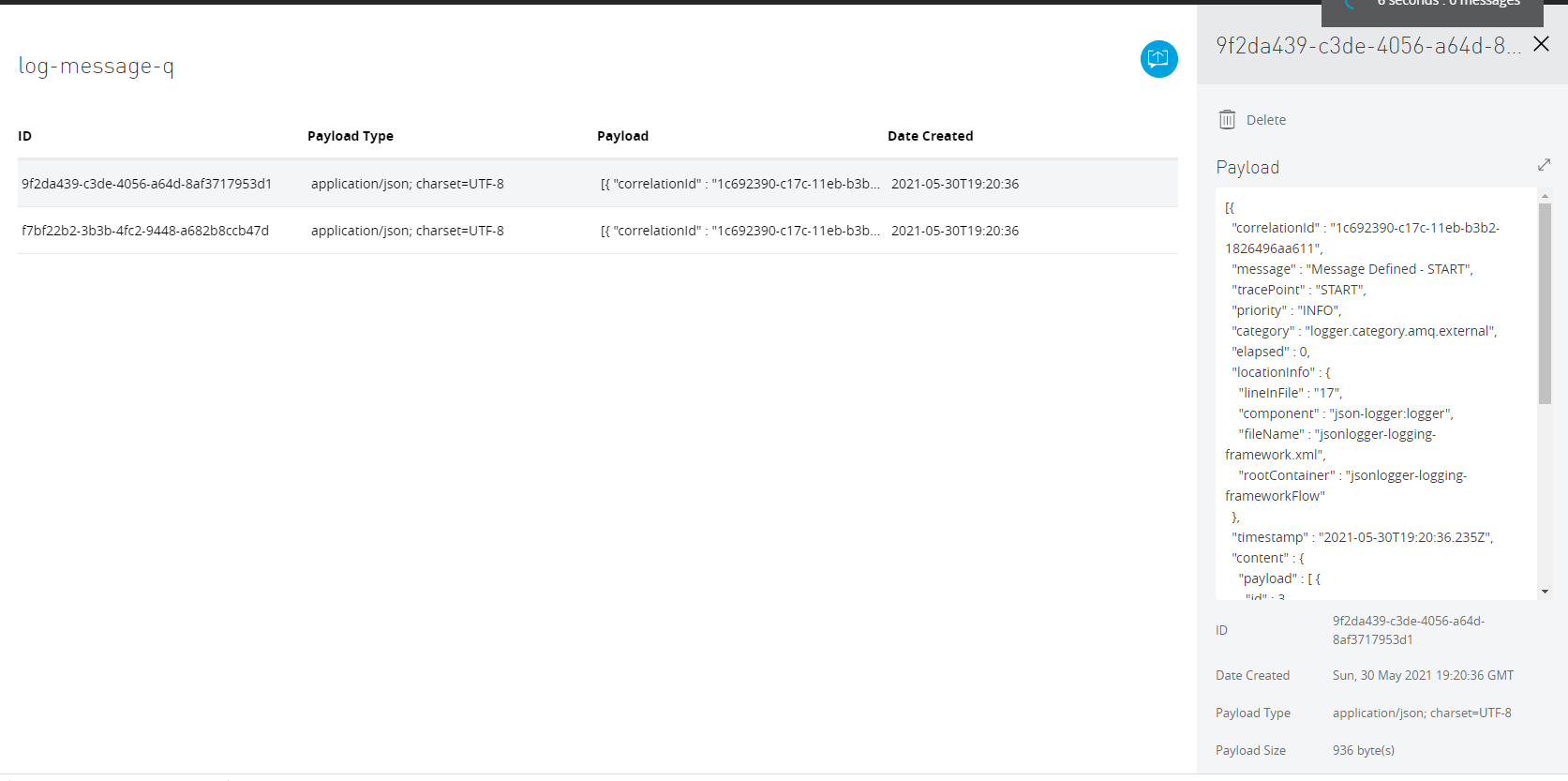

Since we have added an Anypoint MQ queue as the destination, the same message will be published to that destination queue.

We have created another Mule application that subscribes to the log messages from Anypoint MQ and publishes the key information to a database table on Snowflake. At a high level, the Mule flow looks as below (this is a simple flow used for demo purposes and is outside the logging framework):

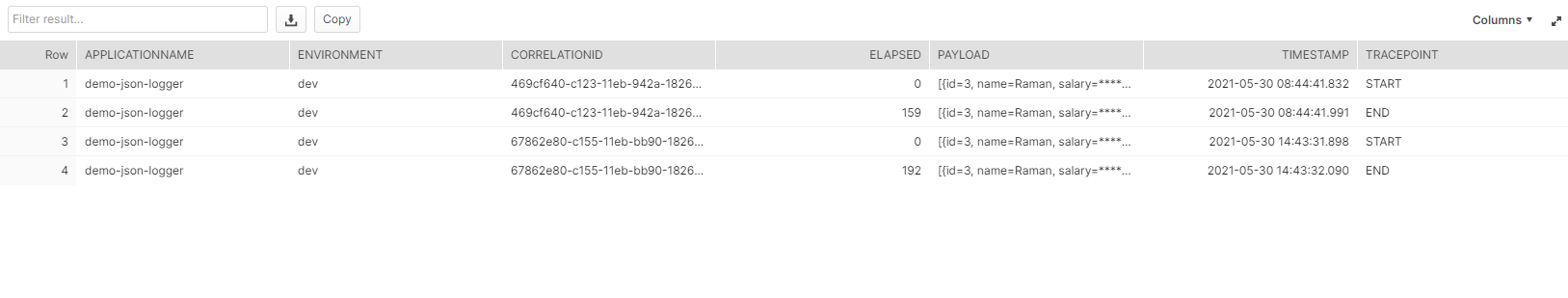

Finally, we can see the key information published to the Snowflake database for further use by the operations team:

Note how the JSON Logger also publishes the elapsed time (under the elapsed column) for executing the flow, which is very useful for performance benchmarking and does not require writing a single line of code.

I hope this gives an idea of how easily we can create a robust logging framework for Mule implementations by leveraging the JSON Logger's out-of-the-box capabilities.
