Streamlining Event Data in Event-Driven Ansible

Learn how ansible.eda.json_filter and ansible.eda.normalize_keys streamline event data for Ansible automation. Simplify, standardize, and speed up workflows.

In Event-Driven Ansible (EDA), event filters play a crucial role in preparing incoming data for automation rules. They help streamline and simplify event payloads, making it easier to define conditions and actions in rulebooks. Previously, we explored the ansible.eda.dashes_to_underscores filter, which replaces dashes in keys with underscores to ensure compatibility with Ansible's variable naming conventions.

In this article, we will explore two more event filters: ansible.eda.json_filter and ansible.eda.normalize_keys.

Together, these filters give more control over incoming event data. With ansible.eda.json_filter, we can pick and choose which keys to keep or drop from the payload, so we only work with the information we need. A smaller payload means less data for rule conditions and playbooks to handle, and fewer mistakes caused by extra, unneeded fields.

The ansible.eda.normalize_keys filter addresses the challenge of inconsistent key formats by converting keys containing non-alphanumeric characters into a standardized format using underscores. This normalization ensures that all keys conform to Ansible's variable naming requirements, facilitating seamless access to event data within rulebooks and playbooks. Using these filters, we can create more robust and maintainable automation workflows in Ansible EDA.

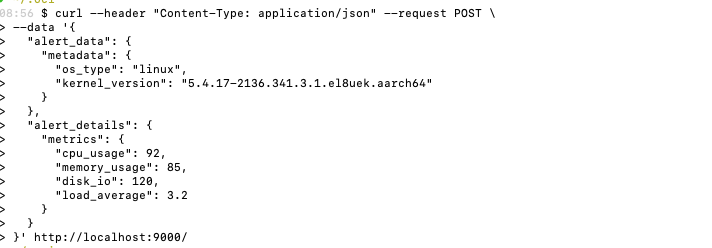

Testing the json_filter Filter With a Webhook

To demonstrate how the ansible.eda.json_filter works, we’ll send a sample JSON payload to a webhook running on port 9000 using a simple curl command. This payload includes both host metadata and system alert metrics. The metadata section provides details like the operating system type (linux) and kernel version (5.4.17-2136.341.3.1.el8uek.aarch64).

The metrics section reports key system indicators, such as CPU usage (92%), memory usage (85%), disk I/O (120), and load average (3.2). Here's the command used to post the data:

    curl --header "Content-Type: application/json" --request POST \
      --data '{
        "alert_data": {
          "metadata": {
            "os_type": "linux",
            "kernel_version": "5.4.17-2136.341.3.1.el8uek.aarch64"
          }
        },
        "alert_details": {
          "metrics": {
            "cpu_usage": 92,
            "memory_usage": 85,
            "disk_io": 120,
            "load_average": 3.2
          }
        }
      }' http://localhost:9000/

webhook.yml

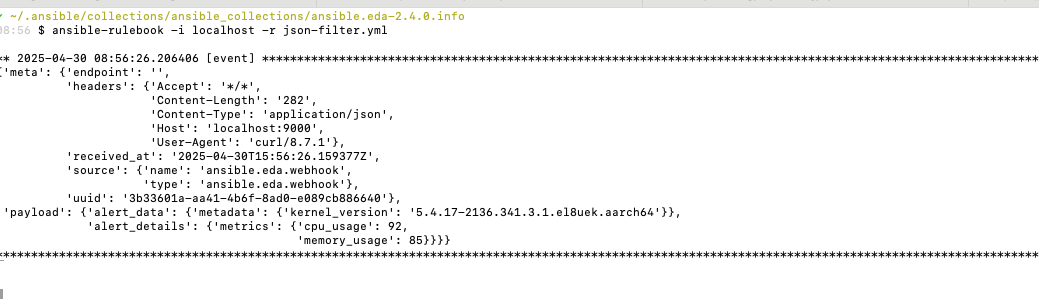

Here’s a demo rulebook that shows how to use ansible.eda.json_filter to remove unwanted fields from a JSON event. In this setup, a webhook listens on port 9000 and receives alert data. The filter is set to exclude the keys os_type, disk_io, and load_average, which are not required for further processing.

This helps focus only on the important metrics like CPU and memory usage. The filtered event is then printed to the console, making it easy to understand how the filter works.

    - name: Event Filter json_filter demo
      hosts: localhost
      sources:
        - ansible.eda.webhook:
            port: 9000
            host: 0.0.0.0
          filters:
            - ansible.eda.json_filter:
                exclude_keys: ['os_type', 'disk_io', 'load_average']
      rules:
        - name: Print the event details
          condition: true
          action:
            print_event:
              pretty: true

Screenshots

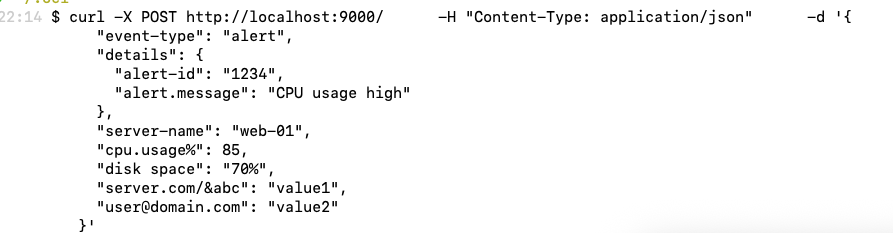
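To make the filtering step concrete, here is a minimal Python sketch of the kind of traversal a key filter like this performs. It is an illustration under stated assumptions, not the collection's source code: the function name `filter_keys` is hypothetical, and both the shell-style wildcard matching and the rule that include patterns take priority over exclude patterns are assumptions about how ansible.eda.json_filter behaves. The sample event is the payload from the curl command above.

```python
import fnmatch


def filter_keys(event, exclude_keys=None, include_keys=None):
    """Return a copy of event without excluded keys (hypothetical helper).

    Assumption: a key that matches include_keys is always kept, even if it
    also matches an exclude pattern.
    """
    exclude_keys = exclude_keys or []
    include_keys = include_keys or []

    def matches(key, patterns):
        # Shell-style wildcards, so "*_usage" would match "cpu_usage".
        return any(fnmatch.fnmatch(key, pattern) for pattern in patterns)

    if not isinstance(event, dict):
        return event  # scalars and lists are passed through unchanged

    result = {}
    for key, value in event.items():
        if not matches(key, include_keys) and matches(key, exclude_keys):
            continue  # excluded key: drop it together with its subtree
        result[key] = filter_keys(value, exclude_keys, include_keys)
    return result


payload = {
    "alert_data": {
        "metadata": {
            "os_type": "linux",
            "kernel_version": "5.4.17-2136.341.3.1.el8uek.aarch64",
        }
    },
    "alert_details": {
        "metrics": {
            "cpu_usage": 92,
            "memory_usage": 85,
            "disk_io": 120,
            "load_average": 3.2,
        }
    },
}

filtered = filter_keys(payload, exclude_keys=["os_type", "disk_io", "load_average"])
print(filtered)
```

In this sketch the excluded keys are removed wherever they appear in the nested structure, so only kernel_version, cpu_usage, and memory_usage remain under their parent keys. Under the same assumptions, calling `filter_keys` with `exclude_keys=["*_usage"]` and `include_keys=["cpu_usage"]` keeps cpu_usage and drops memory_usage.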

Testing the normalize_keys Filter With a Webhook

To demonstrate the functionality of the ansible.eda.normalize_keys filter, we are sending a JSON payload containing keys with various special characters to a locally running webhook endpoint.

This test demonstrates how the filter transforms keys with non-alphanumeric characters into a standardized format using underscores, ensuring compatibility with Ansible's variable naming conventions.

curl -X POST http://localhost:9000/ \

-H "Content-Type: application/json" \

-d '{

"event-type": "alert",

"details": {

"alert-id": "1234",

"alert.message": "CPU usage high"

},

"server-name": "web-01",

"cpu.usage%": 85,

"disk space": "70%",

"server.com/&abc": "value1",

"user@domain.com": "value2"

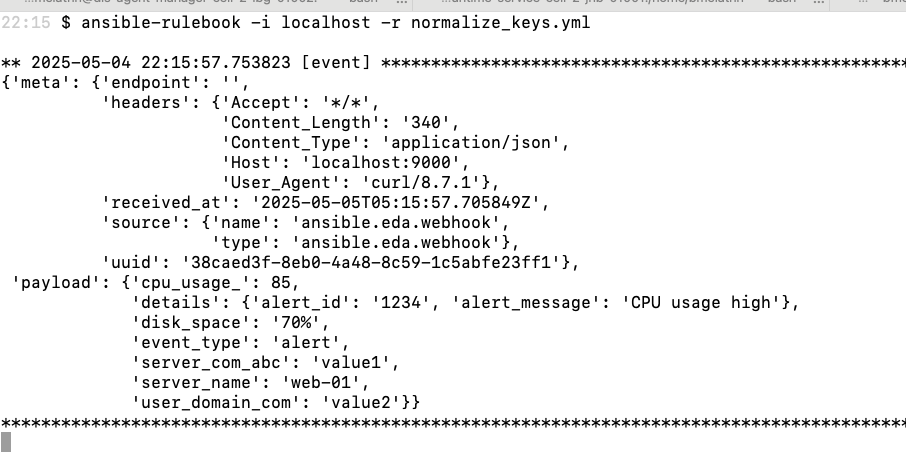

}'In this payload, keys such as event-type, alert-id, alert.message, server-name, cpu.usage%, disk space, server.com/&abc, and user@domain.com include characters like hyphens, periods, spaces, slashes, ampersands, and at symbols. When processed through the ansible.eda.normalize_keys filter, these keys are transformed by replacing sequences of non-alphanumeric characters with single underscores. For example, server.com/&abc becomes server_com_abc, and user@domain.com becomes user_domain_com.

This normalization process simplifies the handling of event data, reduces the likelihood of errors due to invalid variable names, and enhances the robustness and maintainability of your automation workflows in Ansible EDA.
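The transformation described above can be sketched in a few lines of Python. This is a simplified illustration, not the filter's actual implementation: the function name `normalize_keys`, the regular expression, and the choice to descend into lists are assumptions made for the example. It only rewrites keys and leaves values such as web-01 and 70% untouched.

```python
import re

# Assumption: any run of characters other than letters, digits, and
# underscores is collapsed into a single underscore.
NON_ALNUM_RUN = re.compile(r"[^0-9a-zA-Z_]+")


def normalize_keys(obj):
    """Return a copy of obj with every dictionary key normalized (sketch)."""
    if isinstance(obj, dict):
        return {
            NON_ALNUM_RUN.sub("_", key): normalize_keys(value)
            for key, value in obj.items()
        }
    if isinstance(obj, list):
        return [normalize_keys(item) for item in obj]
    return obj  # values are never modified, only keys


event = {
    "event-type": "alert",
    "details": {"alert-id": "1234", "alert.message": "CPU usage high"},
    "server-name": "web-01",
    "cpu.usage%": 85,
    "disk space": "70%",
    "server.com/&abc": "value1",
    "user@domain.com": "value2",
}

normalized = normalize_keys(event)
for key in normalized:
    print(key)
```

With this regular expression, a key that ends in a special character keeps a trailing underscore, so cpu.usage% becomes cpu_usage_. Two different keys can also normalize to the same name; in this sketch the later one silently wins, which is worth checking for when payloads come from sources you do not control.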

webhook.yml

Here’s a demo rulebook that shows how to use ansible.eda.normalize_keys to standardize the keys of a JSON event. In this setup, a webhook listens on port 9000 and receives the payload shown above. The filter is used without any arguments, and it rewrites every key that contains non-alphanumeric characters, such as event-type and alert.message.

The normalized event is then printed to the console, making it easy to understand how the filter works.

    - name: Event Filter normalize_keys demo
      hosts: localhost
      sources:
        - ansible.eda.webhook:
            port: 9000
            host: 0.0.0.0
          filters:
            - ansible.eda.normalize_keys:
      rules:
        - name: Webhook rule to print the event data
          condition: true
          action:
            print_event:
              pretty: true

Conclusion

In the demos above, we saw how two simple but powerful filters, ansible.eda.json_filter and ansible.eda.normalize_keys, can transform incoming event data into exactly what the automation needs. Using the exclude_keys option of json_filter, we removed unnecessary details. Its include_keys option can be used to ensure that important information is retained. This approach makes automation more focused and easier to manage, and it helps prevent issues that can occur when events contain too much extra data.

Similarly, the ansible.eda.normalize_keys filter is an invaluable addition to any Event-Driven Ansible workflow. Converting keys with special characters into clean, underscore‑only names removes a common source of errors and confusion when accessing event data. This simple normalization step not only makes rulebooks and playbooks more readable, but also ensures that your automation logic remains robust and maintainable across diverse data sources.

Normalized keys streamline variable handling and let developers concentrate on building effective automation rather than dealing with inconsistent payload formats. Together, these filters let you focus on the real work of building reliable, efficient EDA workflows without getting bogged down in messy data.

Note: The views expressed in this article are my own and do not necessarily reflect the views of my employer.
