AI Meets Vector Databases: Redefining Data Retrieval in the Age of Intelligence

Learn how AI and vector databases enable searches by meaning, powering smarter recommendations, chatbots, and tools for handling unstructured data.


Ever wish we could search the internet not just by keywords, but by actual meaning, by what we really meant to say? That’s the magic happening when AI teams up with vector databases.

Traditional databases are great for clean, structured stuff, like spreadsheets. But most of the data we create today? It’s messy and unstructured: think tweets, photos, voice notes, even memes. That’s where AI comes in. It’s amazing at understanding all that chaos, but it needs a smart system to store and search through it.

Enter vector databases. They're built to handle this new kind of searching, by meaning, not just matching words.

In this post, we’ll break down how this powerful duo is changing the way we find and understand information. We’ll show some real-world examples, peek at a bit of code, and walk through how it all fits together.

So, What Exactly Is a Vector Database?

In the age of intelligence, we generate and interact with large amounts of complex, often unstructured data (text, pictures, audio, video). Traditional databases and search methods, which mainly rely on exact keyword matching or predefined structures (e.g., SQL tables), struggle to understand the meaning and context behind this data. This is where the powerful combination of artificial intelligence (AI) and vector databases comes in.

Problem

How do you search for data based on semantic similarity, or meaning, instead of only keywords? For example, how can a system understand that "best dog breeds for apartment living" is semantically related to "small, calm canines," even though the exact words do not match?
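To make the problem concrete, here is a small sketch that measures pure keyword overlap (Jaccard similarity of the two word sets) between a query such as "best dog breeds for apartment living" and a description such as "small, calm canines". The phrase pair is illustrative and `keyword_overlap` is a hypothetical helper, not part of any library; the point is only that a keyword-based score sees nothing in common between them.

```python
import re


def keyword_overlap(a: str, b: str) -> float:
    """Jaccard overlap of lowercase word sets: shared words / all distinct words."""
    words_a = set(re.findall(r"[a-z]+", a.lower()))
    words_b = set(re.findall(r"[a-z]+", b.lower()))
    if not words_a or not words_b:
        return 0.0
    return len(words_a & words_b) / len(words_a | words_b)


query = "best dog breeds for apartment living"
document = "small, calm canines"

# No word appears in both phrases, so a keyword matcher scores them as unrelated.
print(keyword_overlap(query, document))
```

A human immediately sees that both phrases are about small dogs suited to small spaces; closing that gap is exactly what embeddings are for.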

Solution

AI (Embedding Model)

AI models, especially deep learning models (such as those used in LLMs, or specialized ones like Sentence-BERT for text and CLIP for images and text), learn to convert complex data into dense numerical representations called vector embeddings. The key property of these vectors is that items with similar meanings or features are mapped to points that lie close together in a high-dimensional "vector space".
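To illustrate the "close together" property, here is a minimal sketch using cosine similarity, one common way to measure closeness between embeddings. The three-dimensional vectors are made up by hand for illustration; real embedding models output hundreds or thousands of dimensions, and their values are learned rather than chosen.

```python
import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = unrelated."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hand-made 3-dimensional "embeddings" (illustrative values, not model output).
toy_embeddings = {
    "puppy": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "spreadsheet": [0.1, 0.2, 0.9],
}

print(cosine_similarity(toy_embeddings["puppy"], toy_embeddings["dog"]))          # close to 1
print(cosine_similarity(toy_embeddings["puppy"], toy_embeddings["spreadsheet"]))  # much lower
```

"Puppy" and "dog" point in nearly the same direction, while "spreadsheet" points elsewhere; that geometric closeness is what a vector database searches on.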

Vector Database

These are specialized databases designed to store, index, and search high-dimensional vector embeddings efficiently. They use approximate nearest neighbor (ANN) search algorithms (e.g., HNSW, IVF) to quickly find the vectors (and thus the original data they represent) that are closest, and therefore most similar, to a given query vector.
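The ANN idea can be sketched in a few lines. The example below shows only the IVF (inverted file) approach, in memory, with two hand-picked centroids standing in for the k-means training a real IVF index performs; `ToyIVFIndex`, `exact_top_k`, and `nprobe` are illustrative names, not any database's API. Exact search compares the query with every stored vector, while IVF scans only the buckets whose centroids are closest to the query, trading a little recall for speed.

```python
import math


def exact_top_k(query, vectors, k):
    """Brute force: compare the query with every stored vector (exact, but O(n))."""
    ranked = sorted(vectors.items(), key=lambda item: math.dist(query, item[1]))
    return [item_id for item_id, _ in ranked[:k]]


class ToyIVFIndex:
    """Toy inverted-file index: vectors are bucketed by their nearest centroid,
    and a query scans only the buckets of its `nprobe` nearest centroids.
    Real IVF indexes learn centroids with k-means; here they are supplied by hand."""

    def __init__(self, centroids):
        self.centroids = centroids
        self.buckets = {i: {} for i in range(len(centroids))}

    def _nearest_centroids(self, vector, n):
        order = sorted(range(len(self.centroids)),
                       key=lambda i: math.dist(vector, self.centroids[i]))
        return order[:n]

    def add(self, item_id, vector):
        bucket = self._nearest_centroids(vector, 1)[0]
        self.buckets[bucket][item_id] = vector

    def search(self, query, k, nprobe=1):
        candidates = {}
        for bucket in self._nearest_centroids(query, nprobe):
            candidates.update(self.buckets[bucket])
        # Exact ranking, but only over the probed buckets, so results are approximate.
        return exact_top_k(query, candidates, k)


vectors = {
    "a": [0.1, 0.2], "b": [0.2, 0.1], "c": [0.15, 0.25],
    "d": [0.9, 0.8], "e": [0.8, 0.9], "f": [0.85, 0.95],
}
index = ToyIVFIndex(centroids=[[0.2, 0.2], [0.8, 0.8]])
for item_id, vec in vectors.items():
    index.add(item_id, vec)

query = [0.12, 0.18]
print(exact_top_k(query, vectors, k=2))    # scans all 6 vectors
print(index.search(query, k=2, nprobe=1))  # scans only the 3 vectors in one bucket
```

Here both searches agree, but with `nprobe=1` a true neighbor sitting in another bucket could be missed; raising `nprobe` scans more buckets and recovers accuracy at the cost of speed.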

The AI Vector DB Team-Up: How They Play Together

So, how does this tag team actually work? Think of it like this:

- AI understands: An AI model "reads" or "looks at" a piece of data (text, image, etc.).

- AI creates coordinates: Based on its understanding, the AI generates a vector embedding—numerical coordinates that pinpoint the data's meaning on our conceptual map.

- Vector DB stores: The vector database takes these coordinates (the vector) and the data's ID, stores them, and builds super-fast shortcuts (indexes) to navigate the map efficiently.

- Ask a question: We provide a query (text, maybe even an image).

- AI gets coordinates for query: The same AI model figures out the coordinates for our query on the map.

- Vector DB finds neighbors: We ask the vector database, "What's nearby these coordinates?" The database uses its speedy shortcuts (those ANN searches) to find the closest points on the map in milliseconds.

- Get relevant stuff: The database tells us the IDs of the closest items, and voilà — we get results based on meaning and similarity!
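The whole loop above can be sketched end to end in memory. This is a toy under loud assumptions: `toy_embed` is a hypothetical stand-in for a real AI model that maps a few hand-listed words onto hand-picked "concept" dimensions, and `InMemoryVectorStore` is a hypothetical stand-in for a vector database that searches exhaustively instead of using an ANN index. What the sketch does show faithfully is the flow: the same embedding function is used for stored data and for the query, and the store returns IDs that are then used to fetch the original data.

```python
import math

# Hypothetical stand-in for an AI embedding model: each known word adds weight to a
# hand-picked "concept" dimension. A real model learns such associations from data.
CONCEPTS = ["footwear", "running", "food"]
WORD_TO_CONCEPT = {
    "shoes": "footwear", "sneakers": "footwear", "boots": "footwear",
    "running": "running", "jogging": "running", "marathon": "running",
    "pizza": "food", "pasta": "food",
}


def toy_embed(text):
    """"AI creates coordinates": map text to one dimension per concept."""
    vector = [0.0] * len(CONCEPTS)
    for word in text.lower().split():
        concept = WORD_TO_CONCEPT.get(word)
        if concept is not None:
            vector[CONCEPTS.index(concept)] += 1.0
    return vector


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norms = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norms if norms else 0.0


class InMemoryVectorStore:
    """Minimal stand-in for a vector database: stores (id, vector) pairs and
    answers top-k queries by exhaustive cosine similarity (no ANN index)."""

    def __init__(self):
        self.vectors = {}

    def upsert(self, item_id, vector):  # "Vector DB stores"
        self.vectors[item_id] = vector

    def query(self, vector, top_k):  # "Vector DB finds neighbors"
        scored = [(cosine(vector, v), item_id) for item_id, v in self.vectors.items()]
        scored.sort(reverse=True)
        return [(item_id, score) for score, item_id in scored[:top_k]]


documents = {
    "doc1": "great sneakers for jogging",
    "doc2": "leather boots for winter",
    "doc3": "quick pasta recipes",
}
store = InMemoryVectorStore()
for doc_id, text in documents.items():
    store.upsert(doc_id, toy_embed(text))

# "Ask a question" and "AI gets coordinates for query": same embedding function.
results = store.query(toy_embed("comfy shoes for running"), top_k=2)

# "Get relevant stuff": use the returned IDs to look up the original text.
for doc_id, score in results:
    print(f"{documents[doc_id]} ({score:.2f})")
```

Note that "comfy shoes for running" shares no content words with "great sneakers for jogging", yet it is the top match, because both land on the same coordinates. With a real model, the hand-written word table would be replaced by learned embeddings.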

Why Is This Team-Up So Important?

- Gets the meaning: AI embeddings capture what something means, not just the words used.

- Super speedy: Vector DBs are built for finding similar items incredibly fast, even with billions of data points.

- Handles huge amounts of data: They're designed for the massive, complex datasets AI works with.

So, what can we do with this?

- Search that understands us: Imagine searching for "comfy shoes for running" and getting results for "great sneakers for jogging", because the system understands the intent.

- Spot-on recommendations: Ever wonder how Netflix or Spotify uncannily knows what we'll like next? They often compare our preferences (as a vector) to movies or songs (also vectors) to find great matches.

- Smarter chatbots: Chatbots that actually understand our questions and pull relevant answers from their knowledge base, because they're matching meaning, not just keywords.
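The recommendation idea, comparing our preferences as a vector to items that are also vectors, can be sketched with one simple technique: average the vectors of items a user already liked into a "taste" vector, then rank the remaining items by cosine similarity to it. The catalog, titles, and the readable [action, comedy, documentary] dimensions are hypothetical; real embedding dimensions are not human-readable, and production recommenders combine many more signals than this.

```python
import math


def mean_vector(vectors):
    """Average several vectors component-wise to summarize a user's taste."""
    return [sum(component) / len(vectors) for component in zip(*vectors)]


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


# Hypothetical 3-D item vectors, dimensions loosely [action, comedy, documentary].
catalog = {
    "Heist Movie": [0.9, 0.2, 0.0],
    "Space Battle": [0.8, 0.1, 0.1],
    "Car Chase": [0.7, 0.3, 0.0],
    "Buddy Comedy": [0.2, 0.9, 0.0],
    "Ocean Documentary": [0.0, 0.1, 0.9],
}
watched = ["Heist Movie", "Space Battle"]

user_vector = mean_vector([catalog[title] for title in watched])
candidates = [title for title in catalog if title not in watched]
ranked = sorted(candidates, key=lambda t: cosine(user_vector, catalog[t]), reverse=True)
print(ranked)  # unwatched titles, most similar to the user's taste first
```

Averaging is the simplest way to turn a history into one query vector; it blurs users with several distinct tastes, which is one reason real systems often query with multiple vectors instead.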

Code Sneak Peek: Finding Similar Stuff (Conceptual Example)

Let's peek under the hood. Imagine we've already used an AI model to create vector coordinates for lots of sentences and stored them in a vector database index (like Pinecone). Now, we want to find sentences similar to a new one:

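# Setup (assumed): the Pinecone Python client and sentence-transformers are installed,
# and an existing index already holds sentence vectors with the original text stored
# in metadata under "text". The API key, index name, and model name are placeholders.
from pinecone import Pinecone
from sentence_transformers import SentenceTransformer

pc = Pinecone(api_key="YOUR_API_KEY")
index = pc.Index("sentences")  # hypothetical index name
model = SentenceTransformer("all-MiniLM-L6-v2")  # must match the model used to embed the stored sentences

# Our question, or "query"
query_sentence = "AI is amazing in the world"

# 1. Ask the AI model for the coordinates of our query
query_embedding = model.encode([query_sentence])[0].tolist()

# 2. Ask the Vector DB (index) to find the 2 closest neighbors
results = index.query(vector=query_embedding, top_k=2, include_metadata=True)

# 3. Look at what it found!
print(f"We asked about: \"{query_sentence}\"\n")
print("Here's what sounds similar:")
for match in results["matches"]:
    original_text = match.get("metadata", {}).get("text", "N/A")  # Get the original text if stored
    print(f" - Found: \"{original_text}\" (Similarity Score: {match['score']:.2f})")  # Show score

What Just Happened?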

We turned our sentence ("AI is amazing...") into vector coordinates. Then, we asked the vector database to find the two stored sentences whose coordinates were closest to our query's coordinates. The database returned those sentences (like maybe "I love artificial intelligence technology" or "The capabilities of AI are truly incredible") along with a score showing how similar they were. Pretty neat, right? It's finding things based on shared meaning!

Why This Matters: Stuff We Can Use Today

It's already making everyday tech better:

- Search that just gets it: Search for "pictures of happy dogs outside" and find great photos, even if they weren't tagged with those exact words. The system understands the concept of joyful pups in the park.

- Recommendations that feel like magic: How does our favorite streaming service or shopping site know our taste so well? Often, they're using this tech to match our preferences to items based on deep similarity.

- Finding that photo or video clip: Go beyond filenames! Search our media library for visually similar content – like finding all photos with a beach sunset, regardless of how they're named.

- Helpful chatbots and Q&A: Get useful answers faster because the chatbot understands the meaning behind our question and finds relevant info stored as vectors.

- Spotting the odd one out: This tech is great for security, too. By mapping out normal patterns (like financial transactions), it can instantly spot unusual activity (like potential fraud) that looks different on the "meaning map."
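One simple way to turn the "meaning map" into an anomaly detector is a nearest-neighbor distance rule: score each new item by its distance to the closest known-normal vector and flag it when that distance exceeds a threshold. The transaction vectors and the threshold below are hypothetical values chosen for illustration; a real fraud system would use learned embeddings, far more history, and a threshold tuned on labeled data.

```python
import math


def anomaly_score(vector, normal_vectors):
    """Distance to the closest known-normal vector; larger means more unusual."""
    return min(math.dist(vector, normal) for normal in normal_vectors)


# Hypothetical 2-D transaction vectors, e.g. [scaled amount, scaled hour of day].
normal_transactions = [[0.10, 0.40], [0.12, 0.45], [0.08, 0.50], [0.11, 0.42]]
THRESHOLD = 0.2  # assumed cut-off; in practice it would be tuned on real data

incoming = {"txn-1": [0.10, 0.44], "txn-2": [0.95, 0.05]}
for txn_id, vector in incoming.items():
    score = anomaly_score(vector, normal_transactions)
    print(txn_id, round(score, 3), "FLAG" if score > THRESHOLD else "ok")
```

The nearest-normal lookup is itself a nearest-neighbor query, so at scale it is exactly the kind of search a vector database accelerates.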

The Basic Journey: Data In, Answers Out

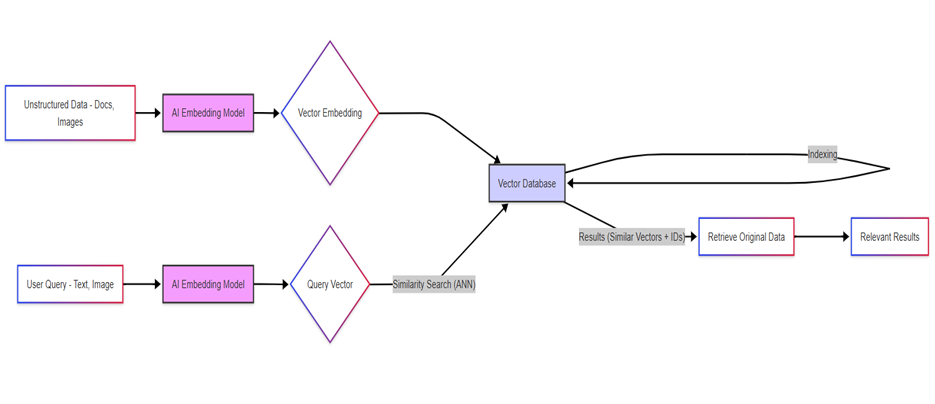

Here is the workflow the diagram shows, step by step:

- Stuff comes in: Raw data (text, pics, audio) enters the system.

- AI figures it out: An AI model looks at the data and gives it meaning-based coordinates (the vector embedding).

- Coordinates get stored: These vectors (and links back to the original data) are saved and organized in the vector database.

- We ask: We type a query, upload an image, etc.

- Query gets coordinates: The same AI model gives our query its own coordinates.

- Database finds neighbors: The vector database rapidly searches its "map" to find stored data with coordinates closest to our query's coordinates.

- Similar stuff returned: The database points to the most similar items it found.

- Original data pulled: The system grabs the actual text, image, or product info using the pointers from the database.

- Results delivered: We get the relevant information!

Real Example: Shopping Sites

Ever notice how, when we browse an online store and click on, say, a cool pair of running shoes, we instantly see suggestions for other similar shoes? That's often AI + Vector DBs at work!

How It Works (Behind the Scenes)

- Prep work (done beforehand): The store uses an AI model to create vector "coordinates" for every single product based on descriptions, features, maybe even pictures. These coordinates are all stored in a vector database.

- Visit (happens in real-time):

  - We click on those red running shoes.

  - The website instantly grabs the pre-calculated coordinates for that specific shoe.

  - It asks the vector database: "Quick! Find me other shoes with coordinates close to these!"

  - The Vector DB does its super-fast search and returns the IDs of the most similar shoes (maybe blue trail runners, lightweight marathon shoes, etc.).

  - The website pulls the pictures and prices for those similar shoes.

  - Boom! They appear on our page under "We Might Also Like."
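The real-time part of this flow can be sketched as an item-to-item lookup. This is a minimal in-memory sketch: the product IDs and vector values are hypothetical stand-ins for precomputed embeddings, and a plain sort replaces the vector database's ANN search. The one detail worth copying is that the clicked product is excluded, since it would otherwise always be its own best match.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


# "Prep work": precomputed product vectors (hypothetical values standing in for
# embeddings of each product's description and pictures).
product_vectors = {
    "red-running-shoe": [0.9, 0.8, 0.1],
    "blue-trail-runner": [0.85, 0.7, 0.2],
    "lightweight-marathon-shoe": [0.95, 0.9, 0.05],
    "leather-office-shoe": [0.3, 0.1, 0.9],
    "coffee-mug": [0.0, 0.05, 0.1],
}


def you_might_also_like(clicked_id, k=2):
    """Look up the clicked product's stored vector and return the IDs of the k most
    similar *other* products (the clicked item itself is excluded)."""
    query = product_vectors[clicked_id]
    others = [pid for pid in product_vectors if pid != clicked_id]
    others.sort(key=lambda pid: cosine(query, product_vectors[pid]), reverse=True)
    return others[:k]


print(you_might_also_like("red-running-shoe"))
```

Because the product vectors are computed ahead of time, the only work at click time is one lookup and one similarity search; the returned IDs are then used to fetch pictures and prices.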

It feels seamless, but it's this clever tech making relevant suggestions based on deep similarity, helping us discover products we might actually love.

Conclusion

Teaming up AI and vector databases is a huge leap forward. It lets us move beyond simple keyword searches to finding information based on what it truly means. This is powering smarter search engines, recommendation systems that feel psychic, and countless other applications that understand us better.

Things are still being refined (e.g., to make it even faster and cheaper), but make no mistake: this combination is becoming central to how we interact with information. Whether we are building apps or just curious about the future of technology, understanding how AI and vector databases work together is important for navigating our increasingly intelligent world. Get ready to see a lot more of it!

Opinions expressed by DZone contributors are their own.
