Reasons Your Database Might Crash

This article helps database administrators prevent unexpected behavior and crashes by exploring the reasons databases crash, to optimize their performance.

Join the DZone community and get the full member experience.

Join For FreeA Database Is Part of a Dynamic Environment

A database setup consists of the system server hardware and software stack that runs the OS and other needed software packages. On top of it runs the database server, its associated plugins, containers (if any), etc. These will be connected to the in-house and outside worlds through network hardware and software like routers, switches, and firewalls. Ensuring such a complex setup will run 24/7 without any issues or downtime is an ideal but not a well-desired scenario for a system or database administrator. The reason is: software, hardware, networks – these are not 100% fault proof or at least are not expected like that. The internal and external environments are constantly changing – new software arriving, existing software being updated, memory being consumed, the database growing, I/O and traffic increasing, log files and caches, buffers growing, and all those challenges applicable to a dynamic environment are related to a database, too.

That said, this is not the bail for letting a database or its host go on strike or crash, abruptly or along expected lines. A database, its host system, and the network should undergo several security and maintenance routines and protocols that guarantee a 99.xx% uptime, or something near to that. Even if some unexpected happens, the DBA must be able to restore and reinstate the database as early as possible. This article discusses the reasons that can cause a database to crash, and also some administration guidelines for preventing unexpected behavior that affects the robustness of a database.

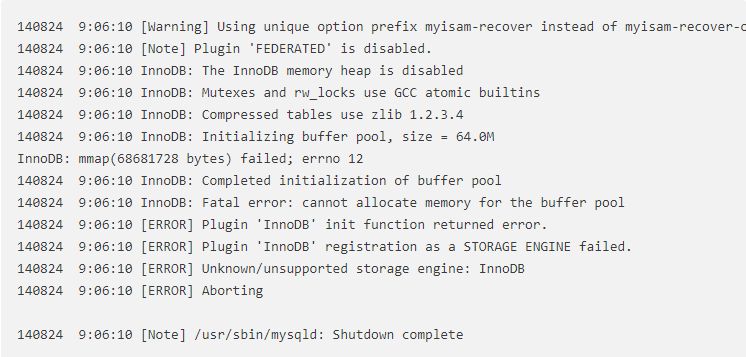

An example error log for a database server shutdown.

You Have Not Taken Care of Pre-Deployment Considerations

Below are certain issues that could have been addressed well before the database's installation and deployment to ensure its smooth operation from the ground level:

- You need a Big Data database without much transaction integrity, but you went for a transaction secure and complex technology with little flexibility. On the other hand, you need a host level, transactional system but you used a mass data NoSQL-based technology.

- The database is not well normalized with the needed keys and indexes to eliminate redundancy and improve response time. Searchable fields are not indexed to speed up retrieval, especially when the database starts to grow. Schemas that separate master data and dependent records in different tables for performance and query optimization are not utilized. Primary and foreign key constraints are not used effectively to relate masters and dependents and to help efficient join queries.

- You did not use the latest stable release of both the system software packages including the OS and the database. This should have avoided an initial rush for emergency upgrades in case of a minor version release so the DBA and developers can focus on the database performance side.

- You did not plan your database configuration in advance and documented it. Or, this was not preceded by data quantity and traffic estimation. The configuration parameters like query cache size and limit, log sizes, flush time, compression level, I/O capacity, page size, what to log, maximum connections, open files limit, sort buffer size, and wait for timeout were not pre-calculated. After deployment and monitoring the load and traffic, these parameters could be optimized further to match the current system requirements.

Your Database Is on the Wrong Server

Nowadays, hosting server competition is so high that you can be easily tempted to go for a configuration at a cheaper rate, planning to upgrade after the need for a scale-out actually happens. You can end up buying a shared hosting space or a VPS with beginner level resources. What probably happens after a few days or weeks of deployment is: any database hosted on the same server causes a spike in the shared resource usage, denying the right for others. This may be due to reasons like a poor configuration or query, inefficient application coding, or even a compromised application or database. The net effect is your database struggling due to lack of resources, including memory and processing, causing down time as well as DOS. Lack of memory for processing and internal database operations like caching and sorting can crash the database. The easy solution is to monitor your database’s growth in terms of data storage as well as traffic and scale up/upgrade your hosting.

Your Application and Queries Can Be Unfriendly

It can happen that your application’s data access layer (if it exists) is not programmed efficiently, and results in issuing too many queries or slow queries. Such queries often result from under/overutilization of indexes, table joins that are bi-directional, causing indexes to be ineffective, absent, or querying a non-indexed field, causing a built-in database function like date, count, or sum to be calculated for each and every row in the output, rather than using a variable to pre-calculate the needed value. The application’s ineffectiveness may be due to a poor design pattern, lack of standards, poor code organization and optimization, or recursive queries. A bad query or recursive querying can hang the server or cause buffer flow that crashes the server. The Slow Query Logs and Query Optimizer tools like EXPLAIN in MySQL and error logs can be useful in identifying and rectifying these issues.

You Are Facing Hardware and/or Software Failures

This applies mainly to in-house deployments. A host server hardware failure (processor, memory disks, RAM, motherboard, network hardware, etc.) or power failure and subsequent server crash can cause the database server to stop abruptly, causing a crash. The case is the same with a software failure that affects the threads and dependency package processes of the database server. Ensuring quality hardware, a power backup plan, and proactive and efficient system administration are the remedies for this issue.

You Are Running out of Resources, Especially Memory and Swap Space

A database very frequently uses memory based buffers, caches, and log files along with the major ones, data files and index files. The database buffer cache is the place where the database server copies data from data files and place for processing. With a growing data volume on the database, the information placed on the file system will increase, and if a proportionate additional memory allocation is not done for the in-memory resources, the database will try to access the SWAP memory (memory allocated on the disk to be used by processes once RAM is being completely used up). If enough swap space is not available at any point in time, then the database server may abort the operation or abruptly crash due to lack of memory. The solution is to allocate sufficient RAM and swap space on the host server for future scale outs, and continuously monitor the database usage and data to ensure the allocated resources are always at least one step ahead of the requirement. Similarly, log and error files must be periodically cleaned and the file system defragmented for maximum utilization of memory resources.

Have You Heard About Buffer Overflow and SQL Injection Attacks?

Buffers are fixed sized memory blocks used to place data during processing (READS/WRITES and other Function Computing). Many attacks are targeted to exploit the buffer overflow vulnerability of many applications and programming languages. The database is also a nice target for attackers to trigger a buffer overflow sequence by exploiting the applications' loose security, or through SQL Injections. Buffer overflow also can occur unintentionally due to poorly written database queries or misconfigurations. When an operation injects or causes data that may not be contained in a buffer, the adjacent buffer block is used to store the data. If the original data contains pointers to the malicious code injected through SQL attack, or if a wrong data type based field gets a data value that’s binary format exceeds the allocated buffer size, the process flow is altered in the former case and buffer overflow occurs in the latter case, crashing the database. Using signed data type modifiers, securing the application, patching up code vulnerabilities, and preventing SQL injection are the remedies to prevent buffer overflow database attacks.

Under-Usage of Cached Results

If your queries are unnecessarily broadening the row domain using wide queries, like:

“SELECT * FROM products WHERE price > 1000”

Where you need only the product id, name, and actual price, and you are fetching other unnecessary product details like manufacturer, SKU/MPN, stock, etc., you are drawing a pond instead of a glass of water. An already cached resultset for a similar query in some other parts of the application, like:

“SELECT product_id, product_name, product_price FROM products WHERE price > 1000”

will not be used by your first jumbo query. Additionally, the first broad result set will be cached, wasting precious cache space. A high number of such queries will cache to become irrelevant and overload the database with searching/sorting and processing overheads. Eventually, there is a good chance for cache and slow query logs to build up and the database server will start showing under-performance, eventually leading to a crash.

You Have File Permission and Corruptions

A significant percentage of database server crashes are caused by file permission issues, corrupted data, and index files. This can happen due to a variety of reasons:

- Some other processes are trying to modify a data or index file that is written by the database without suitable locking.

- Many database server processes are using the same data directory in the host system that does not have support for good file system locking, or external file locking is disabled for the database servers.

- The database server tries to read/write from an already crashed and thus corrupted data/index file.

- A defective piece of hardware, especially I/O corrupting a data/index file.

- The data/file permission is either not set properly initially or was changed after the database deployment, preventing the database server from accessing the files.

You Are Not Reading the Error Logs and Taking Remedial Actions

A majority of the common issues that lead to a database crash can initiate like small events and will be recorded by the database in the error log file. Your failure to constantly check the error log until something big happens can lead to an unexpected (though warned by the database) crash. Most database server technologies will record the following type of errors that need to be repaired at the earliest chance:

- Diagnostic errors related to corrupt tables, indexes, etc.

- Configuration variable values disproportionate to the database environment and workload, e.g. the buffer pool size needs to be increased.

- Duplicate entries present in key fields.

- Replication errors.

- Data packet size errors.

- Communication protocol mismatch errors.

- Etc...

All Your Growing Data Is in a Single Database

Even though you can place your thousands, if not millions of data records in a single database, it will cause you issues including an abrupt shutdown or crash due to the above reasons and other issues. The solution is to use a replicated database system that employs a master server and slave nodes to share the data and connections. A cluster deployment is also highly beneficial to share the workload and improve performance. Synchronized and container based database nodes also improve performance and scalability, and also avoid a single point of failure by providing alternate or backup nodes to replace the crashed or failed database node.

You Do Not Have an (Expert) DBA

A typical scenario of poor DBA performance is the database crash that happens during an upgrade, when the dependencies of the new release are not ensured prior to the upgrade. A DBA or team is essential for administrative and maintenance tasks for a database, unless you outsource your database needs and administration to a DBaaS provider who manages everything for you. The DBA should be proactive in monitoring logs and alerts to identify issues, scalability needs, and space for performance optimization. The solutions must be planned and implemented as a top priority so that your organization is not surprised or struck by a sudden database crash that can seriously affect your operations.

Opinions expressed by DZone contributors are their own.

Comments