Run Java Microservices Across Multiple Cloud Regions With Spring Cloud

Let’s explore how to develop and deploy multi-region Java microservices with Spring Cloud and why you want to do so.


If you want to run your Java microservices on public cloud infrastructure, you should take advantage of multiple cloud regions. There are several reasons why this is a good idea.

First, cloud availability zones and regions fail regularly due to hardware issues, bugs introduced during cloud service upgrades, or simple human error. One of the best-known S3 outages happened when an AWS employee entered an operational command incorrectly!

If a cloud region fails, so do your microservices in that region. But if you run microservice instances across multiple cloud regions, you remain up and running even if the entire US East region is melting.

Second, you may choose to deploy microservices in the US East, but the application gets traction across the Atlantic in Europe. The roundtrip latency for users from Europe to your application instances in the US East will be around 100ms. Compare this to the 5ms roundtrip latency for the user traffic originating from the US East (near the data centers running microservices), and don't be surprised when European users say your app is slow. You shouldn't hear this negative feedback if microservice instances are deployed in both the US East and Europe West regions.

Finally, suppose a Java microservice serves a user request from Europe but requests data from a database instance in the USA. In that case, you might fall foul of data residency requirements (if the requested data is classified as personal by GDPR). However, if the microservice instance runs in Europe and gets the personal data from a database instance in one of the European cloud regions, you won't have the same problems with regulators.

This was a lengthy introduction to the article's main topic, but I wanted you to see a few benefits of running Java microservices in multiple distant cloud locations. Now, let's move on to the main topic and see how to develop and deploy multi-region microservices with Spring Cloud.

High-Level Concept

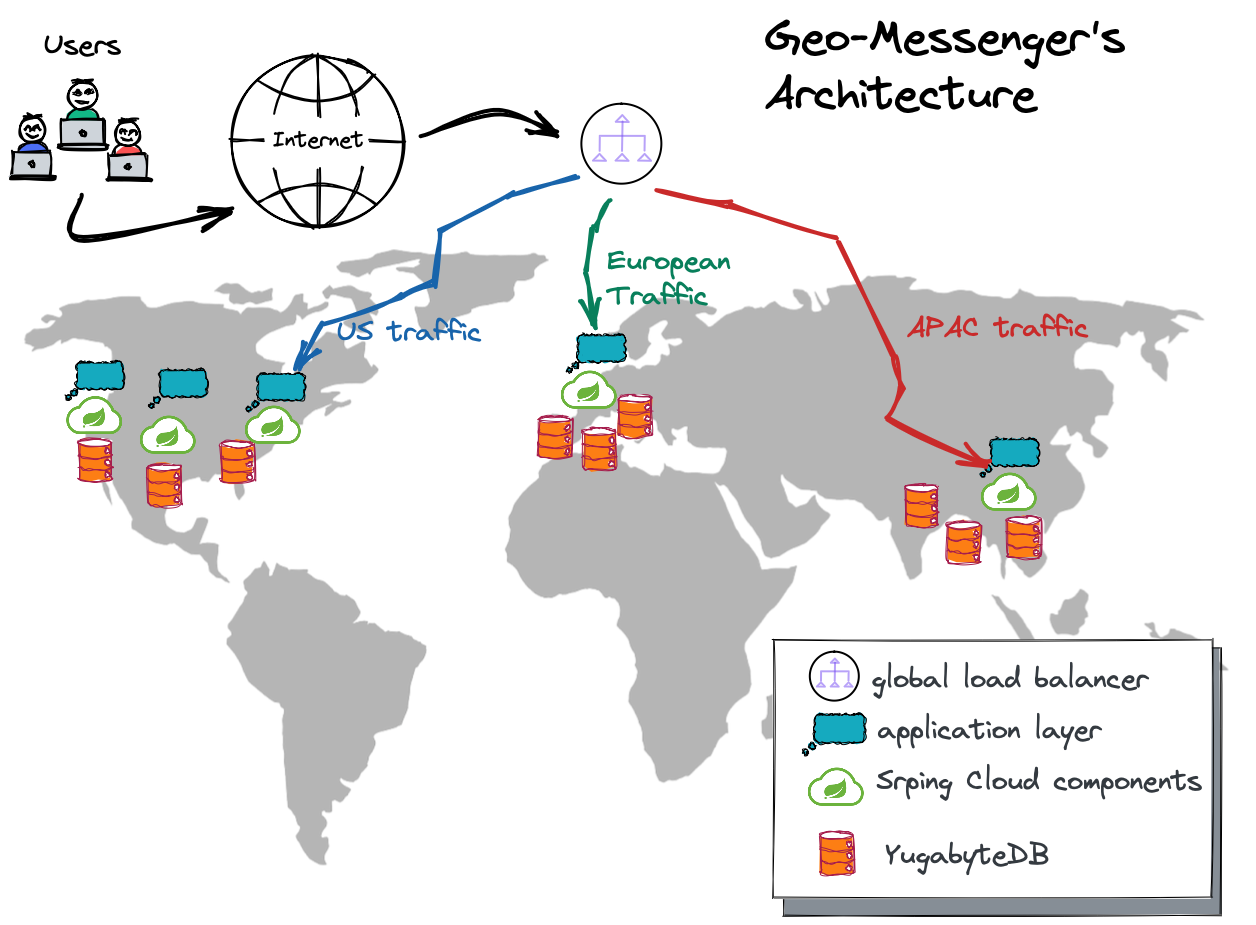

Let’s take a geo-distributed Java messenger as an example to form a high-level understanding of how microservices and Spring Cloud function in a multi-region environment.

The application (composed of multiple microservices) runs across multiple distant regions: US West, US Central, US East, Europe West, and Asia South. All application instances are stateless.

Spring Cloud components operate in the same regions as the application instances. The application uses Spring Cloud Config Server to distribute configuration settings and Spring Cloud Discovery Server for smooth, fault-tolerant inter-service communication.

YugabyteDB is selected as a distributed database that can easily function across distant locations. Plus, because it’s built on the PostgreSQL source code, it naturally integrates with Spring Data and other components of the Spring ecosystem. I’m not going to review YugabyteDB multi-region deployment options in this article. Check out this article if you’re curious about those options and how to select the best one for this geo-distributed Java messenger.

The user traffic gets to the microservice instances via a Global External Cloud Load Balancer. In short, the load balancer comes with a single IP address that can be accessed from any point on the planet. That IP address (or a DNS name that translates to the address) is given to your web or mobile front end, which uses the IP to connect to the application backend. The load balancer forwards user requests to the nearest application instance automatically. I’ll demonstrate this cloud component in greater detail below.

Target Architecture

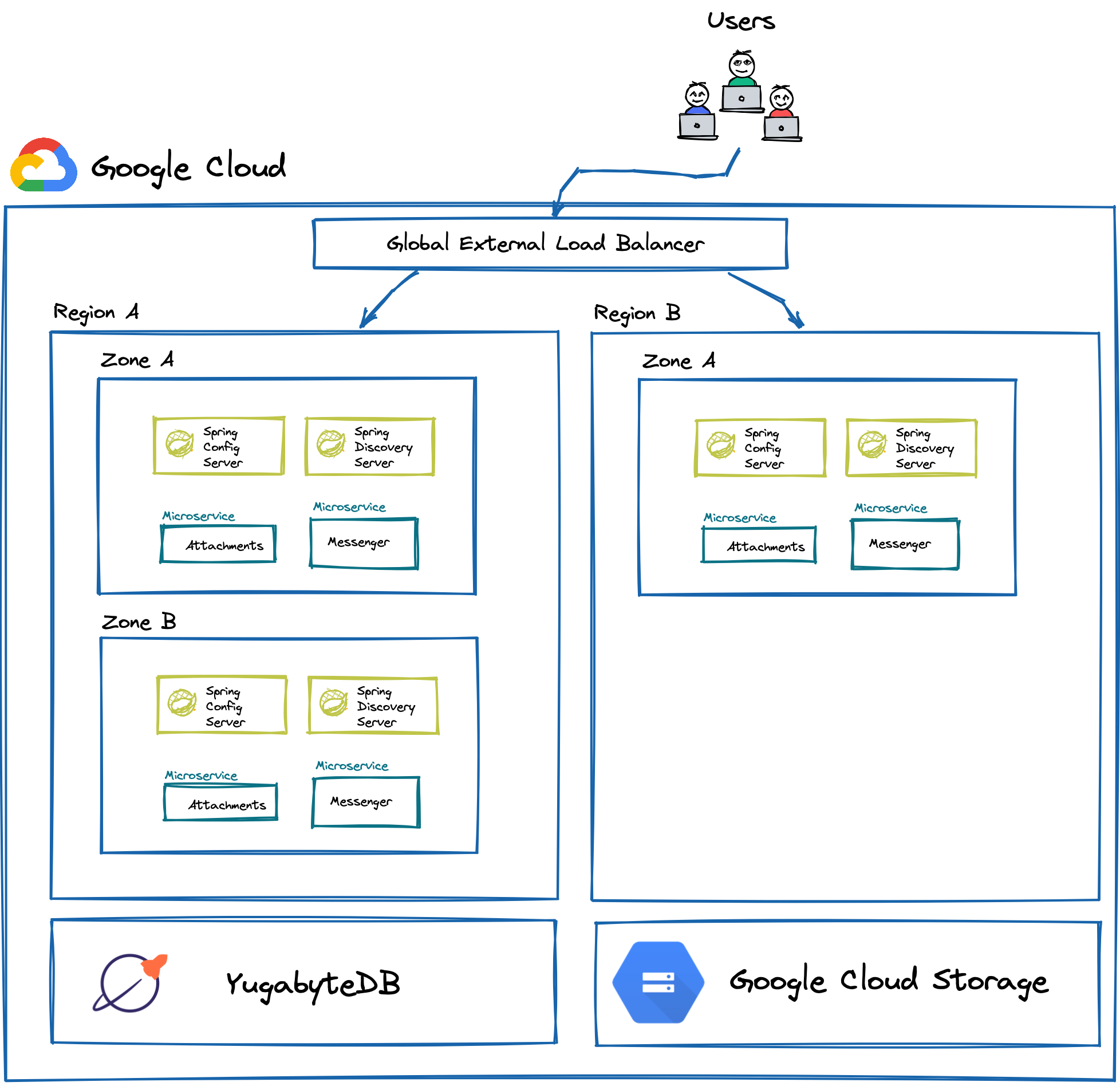

The diagram above shows the target architecture of the multi-region Java messenger.

The whole solution runs on the Google Cloud Platform. You might prefer another cloud provider, so feel free to go with it. I usually default to Google for its developer experience, abundant and reasonably priced infrastructure, fast and stable network, and other goodies I’ll be referring to throughout the article.

The microservice instances can be deployed in as many cloud regions as necessary.

In the picture above, there are two arbitrary regions: Region A and Region B. Microservice instances can run in several availability zones of a region (Zones A and B of Region A) or within a single zone (Zone A of Region B).

It’s also reasonable to run a single instance of the Spring Cloud Discovery and Config servers per region, but I purposefully run one instance of each server per availability zone to keep latency to a minimum.
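Here is a minimal, stdlib-only sketch of how each VM could end up pointing at its own zone's servers. It assumes each VM exports CONFIG_SERVER_HOST, CONFIG_SERVER_PORT, DISCOVERY_SERVER_HOST, and DISCOVERY_SERVER_PORT with zone-local values. Those are the placeholders used in the application.properties settings discussed below. The resolve method is a simplified stand-in for Spring's own placeholder resolution. The host names and ports are hypothetical.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class ZoneLocalServers {

    // Matches ${NAME} or ${NAME:default}, a simplified subset of Spring's placeholder syntax
    private static final Pattern PLACEHOLDER =
            Pattern.compile("\\$\\{([A-Za-z0-9_.]+)(?::([^}]*))?\\}");

    /** Replaces each placeholder with its value from vars, else its default, else fails. */
    static String resolve(String template, Map<String, String> vars) {
        Matcher m = PLACEHOLDER.matcher(template);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String value = vars.get(m.group(1));
            if (value == null) {
                value = m.group(2); // optional default after the colon, may be null
            }
            if (value == null) {
                throw new IllegalArgumentException("Unresolved placeholder: " + m.group(1));
            }
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        // Hypothetical values a VM in zone us-east4-a might export for its zone-local servers
        Map<String, String> usEast4a = Map.of(
                "CONFIG_SERVER_HOST", "config-us-east4-a",
                "CONFIG_SERVER_PORT", "8888",
                "DISCOVERY_SERVER_HOST", "discovery-us-east4-a",
                "DISCOVERY_SERVER_PORT", "8761");

        // prints configserver:http://config-us-east4-a:8888
        System.out.println(resolve(
                "configserver:http://${CONFIG_SERVER_HOST}:${CONFIG_SERVER_PORT}", usEast4a));
        // prints http://discovery-us-east4-a:8761/eureka
        System.out.println(resolve(
                "http://${DISCOVERY_SERVER_HOST}:${DISCOVERY_SERVER_PORT}/eureka", usEast4a));
    }
}
```

On a real VM you would pass System.getenv() instead of the hard-coded map. Spring Boot performs this substitution on its own, so the sketch only illustrates how the same properties file can resolve to different servers in different zones.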

Who decides which microservice instance will serve a user request? Well, the Global External Load Balancer is the decision-maker!

Suppose a user picks up her phone, opens the Java messenger, and sends a message. The request with the message goes to the load balancer, which might forward it this way:

- Region A is the closest to the user, and it’s healthy at the time of the request (no outages). The load balancer selects this region based on those conditions.

- In that region, microservice instances are available in both Zone A and B. So, the load balancer can pick any zone if both are live and healthy. Let’s suppose that the request went to Zone B.
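The decision described in the two bullets above can be modeled in a few lines. This is a simplified sketch, not Google's actual algorithm, since the real load balancer also takes backend capacity into account. It picks the healthy region with the lowest round-trip time to the user, then a random healthy zone within that region. The Region and Zone records and the latency values are hypothetical.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;
import java.util.Random;

final class NearestHealthyRouting {

    // A zone is considered healthy if its instances pass health checks
    record Zone(String name, boolean healthy) {}

    // rttMillis is the round-trip time from the user to this region
    record Region(String name, int rttMillis, List<Zone> zones) {
        boolean healthy() {
            return zones.stream().anyMatch(Zone::healthy);
        }
    }

    /** Picks the closest region with a healthy zone, then a random healthy zone in it. */
    static Optional<String> route(List<Region> regions, Random random) {
        return regions.stream()
                .filter(Region::healthy)
                .min(Comparator.comparingInt(Region::rttMillis))
                .map(region -> {
                    List<Zone> healthyZones =
                            region.zones().stream().filter(Zone::healthy).toList();
                    Zone zone = healthyZones.get(random.nextInt(healthyZones.size()));
                    return region.name() + "/" + zone.name();
                });
    }

    public static void main(String[] args) {
        List<Region> regions = List.of(
                new Region("region-a", 20,
                        List.of(new Zone("zone-a", true), new Zone("zone-b", true))),
                new Region("region-b", 90, List.of(new Zone("zone-a", true))));
        System.out.println(route(regions, new Random()).orElse("no healthy backend"));
    }
}
```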

I’ll explain what each microservice is responsible for in the next section. For now, all you need to know is that the Messenger microservice stores all application data (messages, channels, user profiles, etc.) in a multi-region YugabyteDB deployment, and the Attachments microservice uses globally distributed Google Cloud Storage for user pictures.

Microservices and Spring Cloud

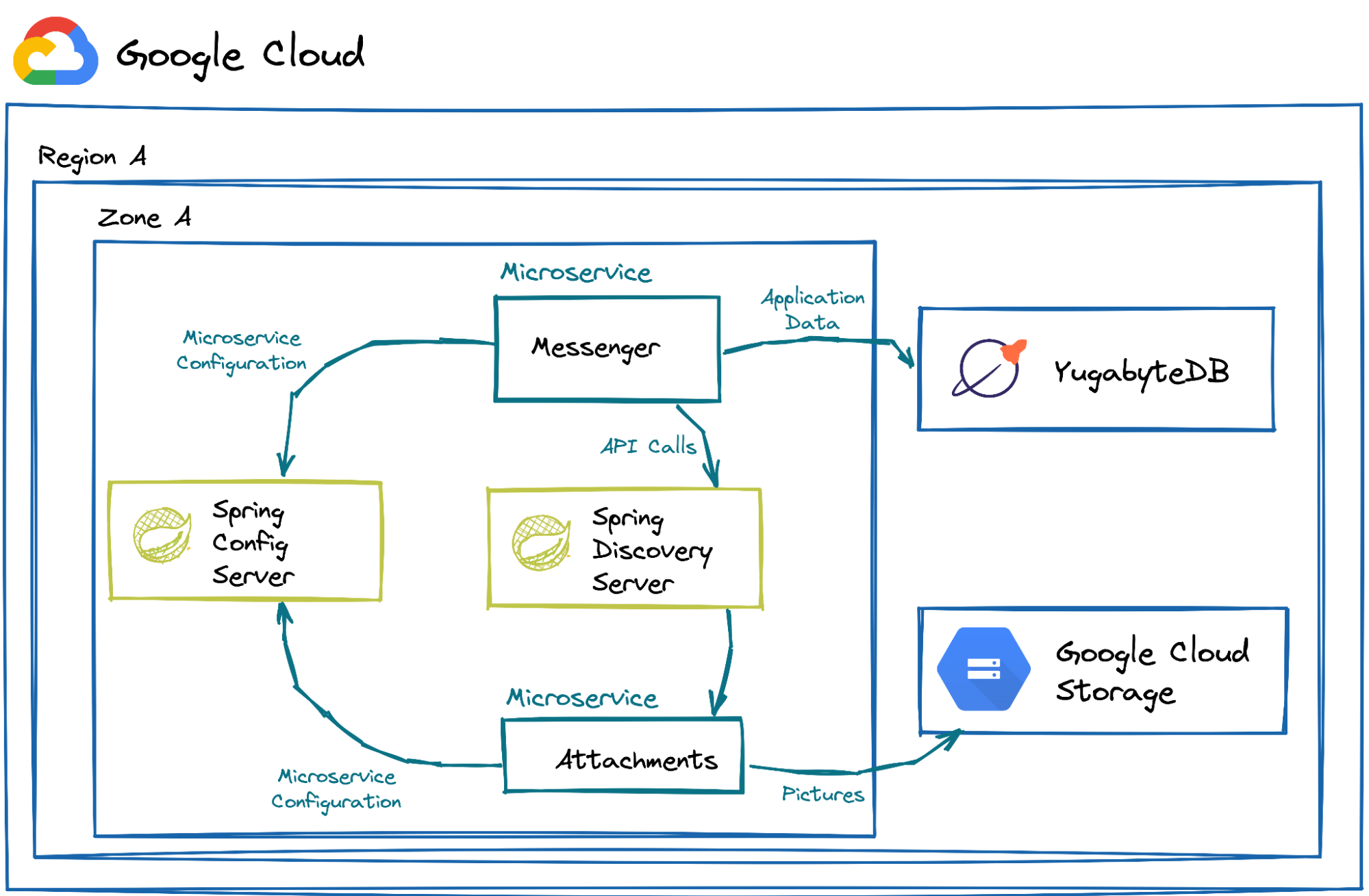

Let’s talk more about microservices and how they utilize Spring Cloud.

The Messenger microservice implements the key functionality that every messenger app must have: the ability to send messages across channels and workspaces. The Attachments microservice uploads pictures and other files. You can check their source code in the geo-messenger’s repository.

Spring Cloud Config Server

Both microservices are built on Spring Boot. When they start, they retrieve configuration settings from the Spring Cloud Config Server, which is an excellent option if you need to externalize the config files in a distributed environment.

The config server can host and pull your configuration from various backends, including a Git repository, Vault, and a JDBC-compliant database. The Java geo-messenger uses the Git option, and the following line from the application.properties file of both microservices instructs Spring Boot to load the settings from the Config Server:

```properties
spring.config.import=configserver:http://${CONFIG_SERVER_HOST}:${CONFIG_SERVER_PORT}
```

Spring Cloud Discovery Server

Once the Messenger and Attachments microservices are booted, they register with their zone-local instance of the Spring Cloud Discovery Server (part of the Spring Cloud Netflix project).
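Conceptually, the Discovery Server keeps a registry keyed by application name. Each registered instance holds a lease that it must keep renewing with heartbeats. The toy model below illustrates that idea with the standard library only. It is not Eureka's implementation: the lease duration and addresses are arbitrary, and heartbeats are modeled as re-registrations with an explicit clock so the behavior is easy to test.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

final class ToyServiceRegistry {

    record Instance(String instanceId, String uri, long leaseExpiresAtMillis) {}

    private final long leaseMillis;
    // application name -> (instance id -> instance)
    private final Map<String, Map<String, Instance>> apps = new ConcurrentHashMap<>();

    ToyServiceRegistry(long leaseMillis) {
        this.leaseMillis = leaseMillis;
    }

    /** Registers or renews an instance; in this model a heartbeat is a re-registration. */
    void register(String appName, String instanceId, String uri, long nowMillis) {
        apps.computeIfAbsent(key(appName), k -> new ConcurrentHashMap<>())
                .put(instanceId, new Instance(instanceId, uri, nowMillis + leaseMillis));
    }

    /** Returns the instances of an application whose leases have not expired yet. */
    List<Instance> getInstances(String appName, long nowMillis) {
        List<Instance> live = new ArrayList<>();
        for (Instance i : apps.getOrDefault(key(appName), Map.of()).values()) {
            if (i.leaseExpiresAtMillis() > nowMillis) {
                live.add(i);
            }
        }
        return live;
    }

    // Application names are compared case-insensitively in this toy model
    private static String key(String appName) {
        return appName.toUpperCase(Locale.ROOT);
    }

    public static void main(String[] args) {
        ToyServiceRegistry registry = new ToyServiceRegistry(90_000);
        registry.register("attachments", "attachments-1", "http://10.0.0.5:8081", 0);
        System.out.println(registry.getInstances("ATTACHMENTS", 60_000));  // lease still valid
        System.out.println(registry.getInstances("ATTACHMENTS", 120_000)); // lease expired
    }
}
```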

The location of a Discovery Server instance is defined in the following configuration setting that is transferred from the Config Server instance:

```properties
eureka.client.serviceUrl.defaultZone=http://${DISCOVERY_SERVER_HOST}:${DISCOVERY_SERVER_PORT}/eureka
```

You can also open the Discovery Server's HTTP address in a browser to confirm that the services have registered successfully:

The microservices register with the server under the name you pass via the spring.application.name setting in the application.properties file. As the above picture shows, I’ve chosen the following names:

- spring.application.name=messenger for the Messenger microservice

- spring.application.name=attachments for the Attachments service

The microservice instances use those names to locate and send requests to each other via the Discovery Server. For example, when a user wants to upload a picture in a discussion channel, the request goes to the Messenger service first. But then, the Messenger delegates this task to the Attachments microservice with the help of the Discovery Server.

First, the Messenger service gets an instance of the Attachments counterpart:

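```java
// Ask the Discovery Server for every registered instance of the Attachments service
List<ServiceInstance> serviceInstances = discoveryClient.getInstances("ATTACHMENTS");

if (serviceInstances.isEmpty()) {
    // Without this check, 'instance' below would never be assigned
    throw new IllegalStateException("No Attachments service instances are registered");
}

// Spread requests across the available instances by picking one at random
ServiceInstance instance = serviceInstances
        .get(ThreadLocalRandom.current().nextInt(0, serviceInstances.size()));

System.out.printf("Connected to service %s with URI %s%n",
        instance.getInstanceId(), instance.getUri());
```

Next, the Messenger microservice creates an HTTP client and a request that streams the picture from an InputStream to the Attachments instance's URI: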

```java
HttpClient httpClient = HttpClient.newBuilder().build();

// URL-encode the file name so spaces or special characters don't break the URI
String encodedFileName = URLEncoder.encode(fileName, StandardCharsets.UTF_8);

HttpRequest request = HttpRequest.newBuilder()
        .uri(URI.create(instance.getUri() + "/upload?fileName=" + encodedFileName))
        .header("Content-Type", mimeType)
        // Stream the picture body instead of loading it fully into memory
        .POST(HttpRequest.BodyPublishers.ofInputStream(() -> inputStream))
        .build();
```

The Attachments service receives the request via a REST endpoint and eventually stores the picture in Google Cloud Storage, returning a picture URL to the Messenger microservice:

```java
public Optional<String> storeFile(String filePath, String fileName, String contentType) {
    // Lazily create the Google Cloud Storage client on first use
    if (client == null) {
        initClient();
    }

    String objectName = generateUniqueObjectName(fileName);
    BlobId blobId = BlobId.of(bucketName, objectName);
    // Store the MIME type so browsers render the picture instead of downloading it
    BlobInfo blobInfo = BlobInfo.newBuilder(blobId).setContentType(contentType).build();

    try {
        // Upload the file's bytes as a new object in the bucket
        client.create(blobInfo, Files.readAllBytes(Paths.get(filePath)));
    } catch (IOException e) {
        System.err.println("Failed to load the file: " + fileName);
        e.printStackTrace();
        return Optional.empty();
    }

    System.out.printf(
            "File %s uploaded to bucket %s as %s %n", filePath, bucketName, objectName);

    // Public URL of the object (readable only if the bucket allows public access)
    String objectFullAddress = "http://storage.googleapis.com/" + bucketName + "/" + objectName;
    System.out.println("Picture public address: " + objectFullAddress);

    return Optional.of(objectFullAddress);
}
```

If you’d like to explore a complete implementation of the microservices and how they communicate via the Discovery Server, visit the GitHub repo linked earlier in this article.
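The storeFile method above calls generateUniqueObjectName, which isn't shown in this article. Here is one possible implementation, and it is purely hypothetical. It prefixes the original file name with a random UUID, so two users who both upload photo.png never overwrite each other's objects. The bucket name in main is also made up.

```java
import java.util.UUID;

final class ObjectNames {

    /** Hypothetical helper: prefixes the file name with a random UUID to avoid collisions. */
    static String generateUniqueObjectName(String fileName) {
        // Keep only the last path segment, then replace characters that are awkward in URLs
        int lastSeparator = Math.max(fileName.lastIndexOf('/'), fileName.lastIndexOf('\\'));
        String baseName = fileName.substring(lastSeparator + 1);
        String safeName = baseName.replaceAll("[^A-Za-z0-9._-]", "_");
        return UUID.randomUUID() + "-" + safeName;
    }

    /** Builds the public address the same way the storeFile method does. */
    static String publicAddress(String bucketName, String objectName) {
        return "http://storage.googleapis.com/" + bucketName + "/" + objectName;
    }

    public static void main(String[] args) {
        String objectName = generateUniqueObjectName("holiday photo.png");
        System.out.println(publicAddress("geo-messenger-bucket", objectName));
    }
}
```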

Deploying on Google Cloud Platform

Now, let’s deploy the Java geo-messenger on GCP across three geographies and five cloud regions: North America (us-west2, us-central1, and us-east4), Europe (europe-west3), and Asia (asia-east1).

Follow these deployment steps:

- Create a Google project.

- Create a custom premium network.

- Configure Google Cloud Storage.

- Create Instance Templates for VMs.

- Start VMs with application instances.

- Configure Global External Load Balancer.

I’ll skip the detailed instructions for the steps above. You can find them here. Instead, let me use the illustration below to clarify why the premium Google network was selected in step #2:

Suppose an application instance is deployed in the USA on GCP, and the user connects to the application from India. There are slow and fast routes to the app from the user’s location.

The slow route is taken if you select the Standard Network for your deployment. In this case, the user request travels over the public Internet, entering and exiting the networks of many providers before getting to the USA. Eventually, in the USA, the request gets to Google’s PoP (Point of Presence) near the application instance, enters the Google network, and gets to the application.

The fast route is taken if your deployment uses the Premium Network. In this case, the user request enters the Google network at the PoP closest to the user and never leaves it. That PoP is in India, and the request travels to the application instance in the USA over a fast and stable connection. Plus, the Global External Load Balancer requires the Premium Tier; otherwise, you won’t be able to intercept user requests at the nearest PoP and forward them to nearby application instances.

Testing Fault Tolerance

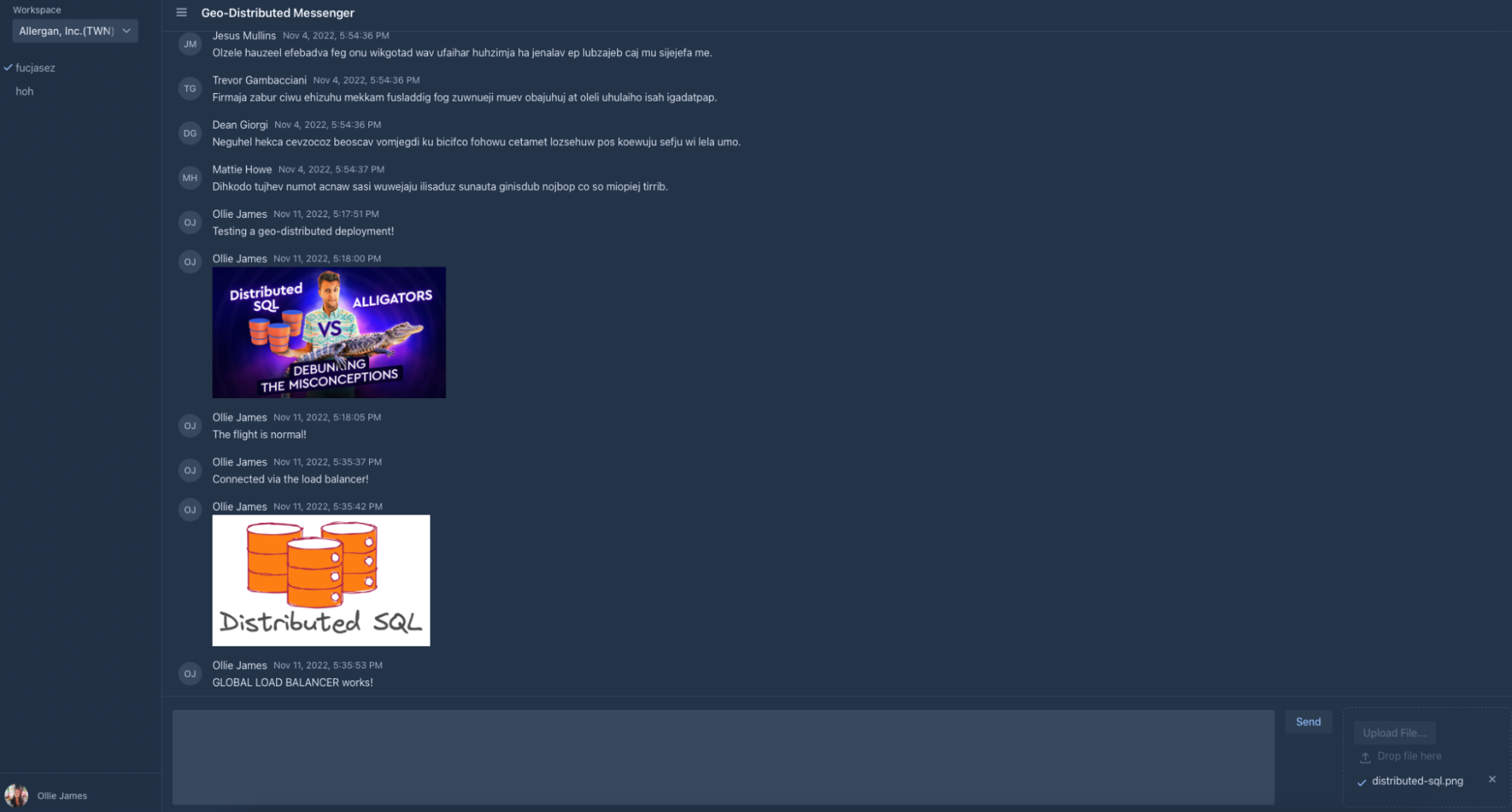

Once the microservices are deployed across continents, you can witness how the Cloud Load Balancer functions at normal times and during outages.

Open the load balancer's IP address in your browser and send a few messages with photos in one of the discussion channels:

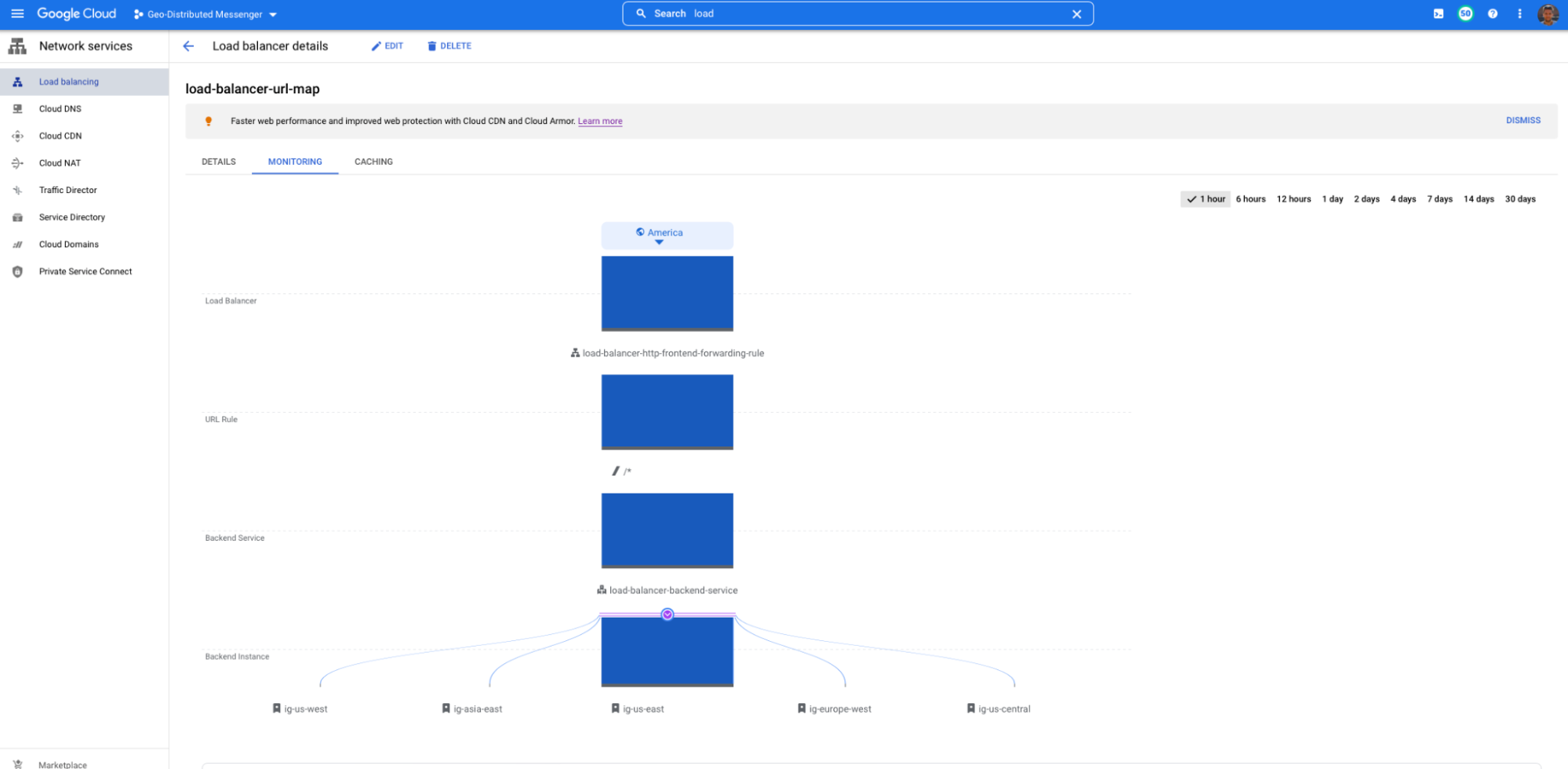

Which instance of the Messenger and Attachments microservices served your last requests? Well, it depends on where you are in the world. In my case, the instances from the US East (ig-us-east) serve my traffic:

What would happen with the application if the US East region became unavailable, bringing down all microservices in that location?

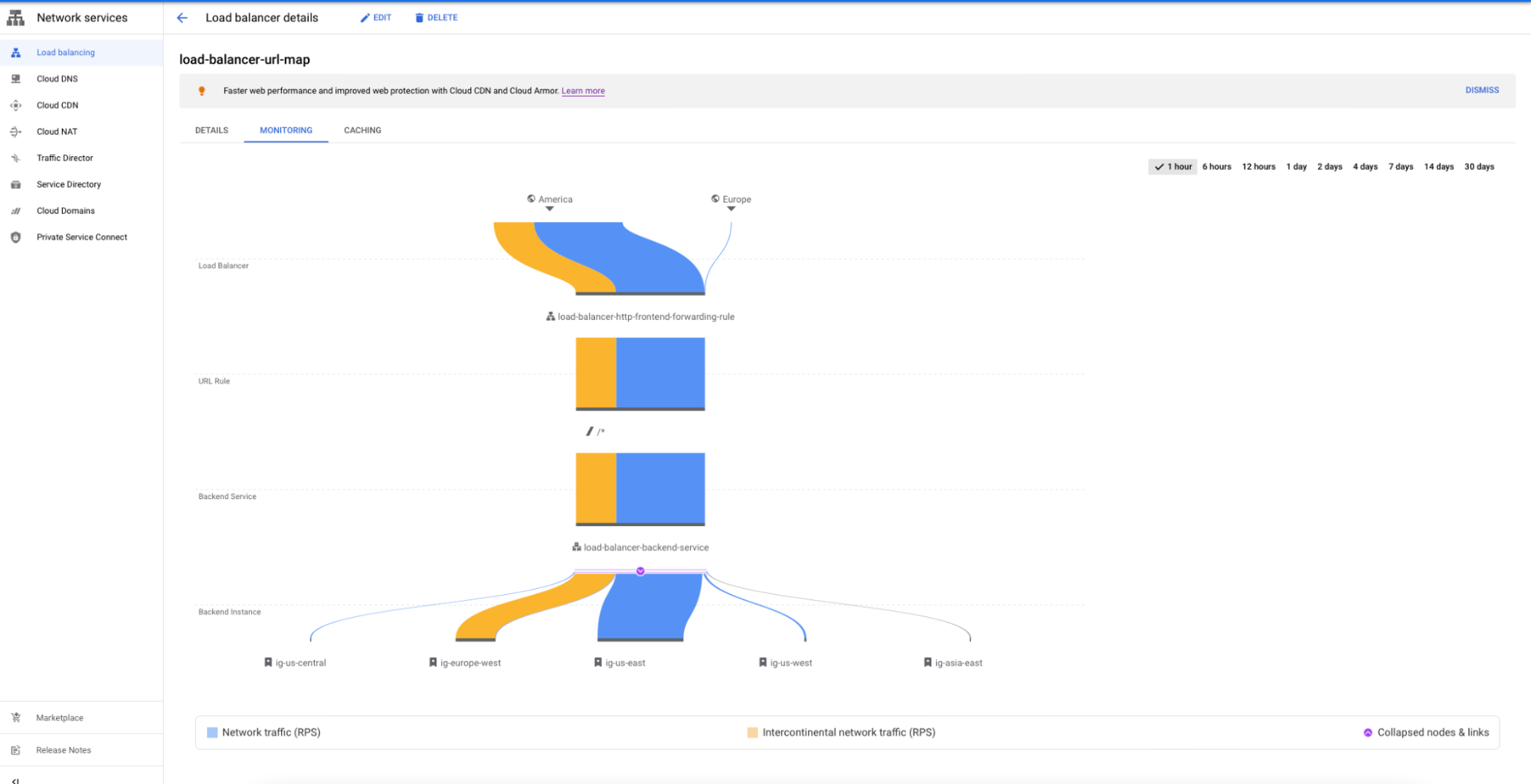

Not a problem for my multi-region deployment. The load balancer will detect issues in the US East and forward my traffic to the next closest location. In this case, the traffic is forwarded to Europe because I live on the US East Coast near the Atlantic Ocean:

To emulate the US East region outage, I connected to the VM in that region and shut down all of the microservices. The load balancer detected that the microservices no longer responded in that region and started forwarding my traffic to a European data center.

Enjoy the fault tolerance out of the box!
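The load balancer's reaction to the outage comes from health checks. A backend is taken out of rotation after a number of consecutive failed probes, and it is returned after a number of consecutive successful ones. Below is a simplified sketch of that threshold logic. The class name and the threshold values of 2 are illustrative. Real health checks also involve probe intervals and timeouts, which this model leaves out.

```java
final class HealthTracker {

    private final int unhealthyThreshold;
    private final int healthyThreshold;
    private int consecutiveFailures;
    private int consecutiveSuccesses;
    private boolean healthy = true;

    HealthTracker(int unhealthyThreshold, int healthyThreshold) {
        this.unhealthyThreshold = unhealthyThreshold;
        this.healthyThreshold = healthyThreshold;
    }

    /** Records one probe result and returns the backend's health state afterwards. */
    boolean record(boolean probeSucceeded) {
        if (probeSucceeded) {
            consecutiveSuccesses++;
            consecutiveFailures = 0;
            if (!healthy && consecutiveSuccesses >= healthyThreshold) {
                healthy = true;
            }
        } else {
            consecutiveFailures++;
            consecutiveSuccesses = 0;
            if (healthy && consecutiveFailures >= unhealthyThreshold) {
                healthy = false;
            }
        }
        return healthy;
    }

    public static void main(String[] args) {
        HealthTracker usEast = new HealthTracker(2, 2);
        // The microservices are shut down, so probes start failing
        System.out.println(usEast.record(false)); // true: a single failure is tolerated
        System.out.println(usEast.record(false)); // false: traffic now goes elsewhere
        // The microservices are restarted
        System.out.println(usEast.record(true));  // false: not trusted yet
        System.out.println(usEast.record(true));  // true: back in rotation
    }
}
```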

Testing Performance

Apart from fault tolerance, if you deploy Java microservices across multiple cloud regions, your application can serve user requests at low latency regardless of their location.

To make this happen, first, you need to deploy the microservice instances in the cloud locations where most of your users live and configure the Global External Load Balancer that can do routing for you. This is what I discussed in "Automating Java Application Deployment Across Multiple Cloud Regions."

Second, you need to arrange your data properly in those locations. Your database needs to function across multiple regions, the same as microservice instances. Otherwise, the latency between microservices and the database will be high and overall performance will be poor.
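A back-of-the-envelope estimate shows why. When a request makes several sequential queries, each one pays the microservice-to-database round trip, so a distant database multiplies the cross-region latency. The sketch below uses hypothetical numbers:

- 5 ms from the user to a nearby microservice instance.
- Three sequential queries per request.
- Either a 100 ms round trip to a database in the US East (the transatlantic figure from the introduction) or 2 ms to a database in the same region.

```java
final class LatencyBudget {

    /**
     * Rough estimate: one user-to-service round trip plus one service-to-database
     * round trip per sequential query. Ignores processing time and parallel queries.
     */
    static int estimateMillis(int userToServiceRtt, int serviceToDbRtt, int sequentialQueries) {
        return userToServiceRtt + sequentialQueries * serviceToDbRtt;
    }

    public static void main(String[] args) {
        // Hypothetical: a European user is served by a Europe West instance
        int remoteDb = estimateMillis(5, 100, 3); // data lives only in the US East
        int localDb = estimateMillis(5, 2, 3);    // data is kept in a European region
        // prints "remote database: 305 ms, local database: 11 ms"
        System.out.printf("remote database: %d ms, local database: %d ms%n", remoteDb, localDb);
    }
}
```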

In the discussed architecture, I used YugabyteDB as it is a distributed SQL database that can be deployed across multiple cloud regions. The article, "Geo-Distributed Microservices and Their Database: Fighting the High Latency" shows how latency and performance improve if YugabyteDB stores data close to your microservice instances.

Think of that article as the continuation of this story, but with a focus on database deployment. As a spoiler, I improved latency from 450ms to 5ms for users who used the Java messenger from South Asia.

Wrapping Up

If you develop Java applications for public cloud environments, you should use the global cloud infrastructure by deploying application instances across multiple regions. This will make your solution more resilient, more performant, and compliant with data residency requirements.

Keep in mind that creating microservices that function and coordinate across distant cloud locations isn’t that difficult. The Spring ecosystem provides the Spring Cloud framework, and public cloud providers like Google offer the infrastructure and services needed to make things simple.

Opinions expressed by DZone contributors are their own.
