Service Discovery With Docker and Consul: Part 1

Service discovery is vital in large system deployments. Learn how to use Docker and Consul to help your deployments run more smoothly and get home at a decent hour.


During the last year, I've become a big fan of using Consul for all things related to service discovery. If you're doing microservices, you've probably run into the issue that as the number of services you create increases, it becomes more and more difficult to manage the communication between them. Consul provides a perfect solution for this problem. It offers an easy-to-use, open-standards-based (opinionated) approach to service discovery (and also provides a large set of other functions).

I recently gave a presentation on how to do service discovery in a microservices architecture using Consul and got a couple of requests to explain a bit more about it. So in this article, and a couple of follow-ups, I'll explain a bit more about how you can use Consul. I won't focus purely on the service discovery part provided by Consul, but will also show you a couple of other features provided by either Consul or one of the tools surrounding it. Note that all the samples, Dockerfiles, etc. can be found in the following repo: https://github.com/josdirksen/next-build-consul. So instead of copying and pasting from this article, just clone the repository.

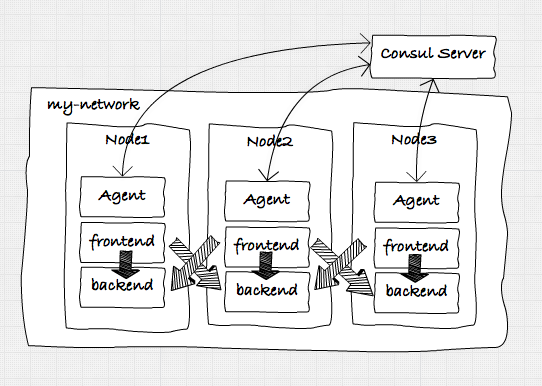

In this first article, we'll create a simple Docker-based architecture with a number of services that communicate with one another using simple HTTP calls and discover each other using Consul. Our initial target architecture looks a bit like this:

To accomplish all of this, we first need to take the following steps to set up an environment we can run the services in:

Note: I'm using docker-machine on my Mac to do all this. If you run Windows or Linux, the commands might vary slightly. Let's hope that Docker for Mac (and Windows) quickly comes out of beta (https://blog.docker.com/2016/03/docker-for-mac-windows-beta/), so we don't need this anymore.

- Create four docker-machines: one in which we'll run a Consul server, and three in which we'll run our individual services and a Consul agent.

- Start the main Consul server: we'll use a single Consul server (and multiple Consul agents, more on that later) to keep track of the running services and some Docker-related state.

- Set up Docker Swarm: to avoid having to deploy our services individually, we'll use Docker Swarm to manage the three nodes on which we'll run our services. In the rest of this article, we'll use docker-compose to start and stop the individual services.

- Set up a Docker overlay network: to let our services communicate with each other in a simple manner, we'll create an overlay network. This allows the components we deploy to Docker to easily communicate with one another (since they'll share the same subnet).

- Start the Consul agents: each node will have its own Consul agent, which will monitor the health of the services on that node and communicate with the Consul server.

So the first thing we'll do is create some docker-machines. First, we'll create the docker-machine that will hold our Consul server. We create this one first so that we can point the other docker-machines to the Consul instance running on it and use that instance for managing Docker Swarm and the overlay network we want to use.

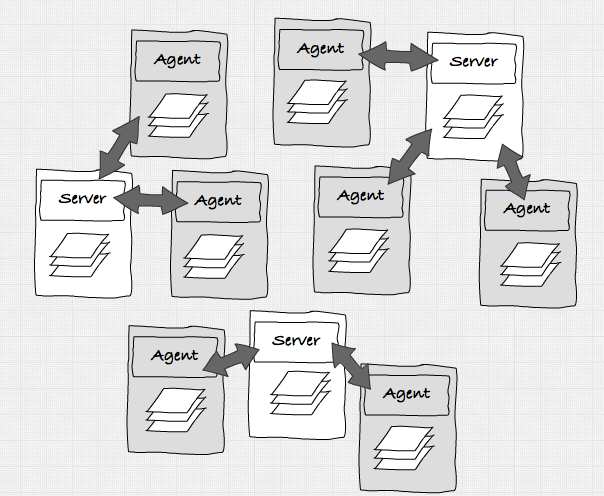

    docker-machine create nb-consul --driver virtualbox

Before we start the Consul server, let's quickly look at the architecture behind Consul.

In this image, you can see the two modes Consul can run in: server mode and agent mode. All the servers talk to each other and decide who is the leader. An agent just talks to one of the servers and normally runs on the node that is also running the services. Note that the state between all the servers and agents within a cluster is shared. So, when a service registers itself with one of the agents, that information is available to all the servers and the agents that are connected to one another.

For this set of articles, we won't set up a cluster of servers, but just use one. Now that we've got our docker-machine running, we can start the Consul server. Before we start, let me first show you a simple script that makes switching between different docker-machines easier, and the alias we use to avoid typing "docker-machine".

    # quickly switch environments, e.g.: . dm-env nb-consul
    $ cat ~/bin/dm-env
    eval `docker-machine env $2 $1`

    # avoid typing too much
    $ alias dm
    dm=docker-machine

So, with this script and alias in place, we first run ". dm-env nb-consul" to select the correct docker-machine.

Next, we get the IP address of this server, and then we can start our Consul server like this:

    # get the ip address
    $ dm ip nb-consul
    192.168.99.106

    # use this ip address in the advertise
    docker run -d --restart always -p 8300:8300 -p 8301:8301 -p 8301:8301/udp -p 8302:8302/udp \
      -p 8302:8302 -p 8400:8400 -p 8500:8500 -p 53:53/udp -h server1 progrium/consul \
      -server -bootstrap -ui-dir /ui -advertise $(dm ip nb-consul)

At this point, we have our Consul server running in a Docker container. Now let's create the other three servers on which we'll run our services.

As you can see in the following commands, we're also creating a Docker Swarm cluster at the same time, and the "nb1" node is the swarm master.

    docker-machine create -d virtualbox --swarm --swarm-master \
      --swarm-discovery="consul://$(docker-machine ip nb-consul):8500" \
      --engine-opt="cluster-store=consul://$(docker-machine ip nb-consul):8500" \
      --engine-opt="cluster-advertise=eth1:2376" nb1

    docker-machine create -d virtualbox --swarm \
      --swarm-discovery="consul://$(docker-machine ip nb-consul):8500" \
      --engine-opt="cluster-store=consul://$(docker-machine ip nb-consul):8500" \
      --engine-opt="cluster-advertise=eth1:2376" nb2

    docker-machine create -d virtualbox --swarm \
      --swarm-discovery="consul://$(docker-machine ip nb-consul):8500" \
      --engine-opt="cluster-store=consul://$(docker-machine ip nb-consul):8500" \
      --engine-opt="cluster-advertise=eth1:2376" nb3

At this point, we've got four docker-machines up and running. One is running the Consul server, and the other ones aren't doing much yet.

    $ dm ls
    NAME        ACTIVE   DRIVER       STATE     URL                         SWARM
    nb1         -        virtualbox   Running   tcp://192.168.99.110:2376   nb1 (master)
    nb2         -        virtualbox   Running   tcp://192.168.99.111:2376   nb1
    nb3         -        virtualbox   Running   tcp://192.168.99.112:2376   nb1
    nb-consul   *        virtualbox   Running   tcp://192.168.99.106:2376

Before we continue with configuring the swarm nodes, there is one more utility script that might come in handy:

    $ cat addtohost
    #!/usr/bin/env bash

    update-docker-host(){
      # clear existing <name>.local entry from /etc/hosts
      # (-i.bak works with both GNU and BSD/macOS sed and keeps a backup)
      sudo sed -i.bak "/${1}\.local$/d" /etc/hosts

      # get ip of running machine; stop if docker-machine can't find it
      docker_ip="$(docker-machine ip "$1")" || return 1

      # update /etc/hosts with docker machine ip
      sudo /bin/bash -c "echo \"${docker_ip} $1.local\" >> /etc/hosts"
    }

    update-docker-host nb1
    update-docker-host nb2
    update-docker-host nb3
    update-docker-host nb-consul

This script adds the IP addresses of the docker-machines to your local "hosts" file. This means that we can simply access the Docker hosts by name, for instance by going to http://nb-consul.local:8500.
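The delete-then-append logic in `update-docker-host` can be hard to read at a glance. As an illustration only, here is the same edit as a small Go function that works on the hosts file contents as a string; it does not read or write `/etc/hosts` itself. The function name `updateHosts` and the example IPs in `main` are hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// updateHosts applies the same edit as update-docker-host to the contents
// of a hosts file: every line ending in "<name>.local" is dropped (like
// sed "/<name>\.local$/d"), then "<ip> <name>.local" is appended.
func updateHosts(hosts, name, ip string) string {
	suffix := name + ".local"
	var lines []string
	if trimmed := strings.TrimRight(hosts, "\n"); trimmed != "" {
		lines = strings.Split(trimmed, "\n")
	}
	kept := make([]string, 0, len(lines)+1)
	for _, line := range lines {
		if strings.HasSuffix(line, suffix) {
			continue // stale entry for this machine
		}
		kept = append(kept, line)
	}
	kept = append(kept, ip+" "+suffix)
	return strings.Join(kept, "\n") + "\n"
}

func main() {
	// Hypothetical input: nb1 previously had a different IP.
	hosts := "127.0.0.1 localhost\n192.168.99.100 nb1.local\n"
	fmt.Print(updateHosts(hosts, "nb1", "192.168.99.110"))
}
```

Keeping the transformation pure makes it easy to test; writing the result back to `/etc/hosts` still needs root, just like the `sudo` calls in the script.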

In our scenario, we want all our services to be able to communicate with one another. We have multiple Docker hosts, so we need an easy way for services running on node "nb1" to communicate with services on "nb2". The easiest way to accomplish this is to create a single network that is used by all the services running in the Docker containers. To do this, we create a simple "overlay" network like this:

    # select the swarm master
    $ . dm-env nb1 --swarm

    # create an overlay network with the name my-net
    $ docker network create --driver overlay my-net

And since we created this on our swarm master, this network will be available on all the members of our swarm. When we create our services later on, we'll connect them to this network, so that they all share the same subnet.

To start the Consul agents, we're going to use docker-compose. The docker-compose file is very straightforward and is just a simple way to avoid typing in all the launch commands (especially when you're doing live demos).

    version: '2'
    services:
      agent-1:
        image: progrium/consul
        container_name: consul_agent_1
        ports:
          - 8300:8300
          - 8301:8301
          - 8301:8301/udp
          - 8302:8302
          - 8302:8302/udp
          - 8400:8400
          - 8500:8500
          - 53:53/udp
        environment:
          - "constraint:node==nb1"
        command: -ui-dir /ui -join 192.168.99.106 -advertise 192.168.99.110
        networks:
          default:
            aliases:
              - agent-1
      agent-2:
        image: progrium/consul
        container_name: consul_agent_2
        ports:
          - 8300:8300
          - 8301:8301
          - 8301:8301/udp
          - 8302:8302
          - 8302:8302/udp
          - 8400:8400
          - 8500:8500
          - 53:53/udp
        environment:
          - "constraint:node==nb2"
        command: -ui-dir /ui -join 192.168.99.106 -advertise 192.168.99.111
        networks:
          default:
            aliases:
              - agent-2
      agent-3:
        image: progrium/consul
        container_name: consul_agent_3
        ports:
          - 8300:8300
          - 8301:8301
          - 8301:8301/udp
          - 8302:8302
          - 8302:8302/udp
          - 8400:8400
          - 8500:8500
          - 53:53/udp
        environment:
          - "constraint:node==nb3"
        command: -ui-dir /ui -join 192.168.99.106 -advertise 192.168.99.112
        networks:
          default:
            aliases:
              - agent-3
    networks:
      default:
        external:
          name: my-net

Nothing too special in this file. The only thing you might notice is that we use explicit IP addresses in the commands that start the Consul agents. We could easily use an environment variable for this instead, set through a simple bash script, but for this article we just specify the IP addresses of the relevant docker-machines. Make sure your DOCKER_HOST points to the Docker Swarm master and start the agents like this:

# start the agents

$ docker-compose -f docker-compose-agents.yml up -d

creating consul_agent_3

creating consul_agent_2

creating consul_agent_1

# check what is running

$ docker ps --format '{{ .id }}\t{{ .image }}\t{{ .command }}\t{{ .names}}'

bf2000882dccprogrium/consul"/bin/start -ui-dir /"nb1/consul_agent_1

a1bc26eef516progrium/consul"/bin/start -ui-dir /"nb2/consul_agent_2

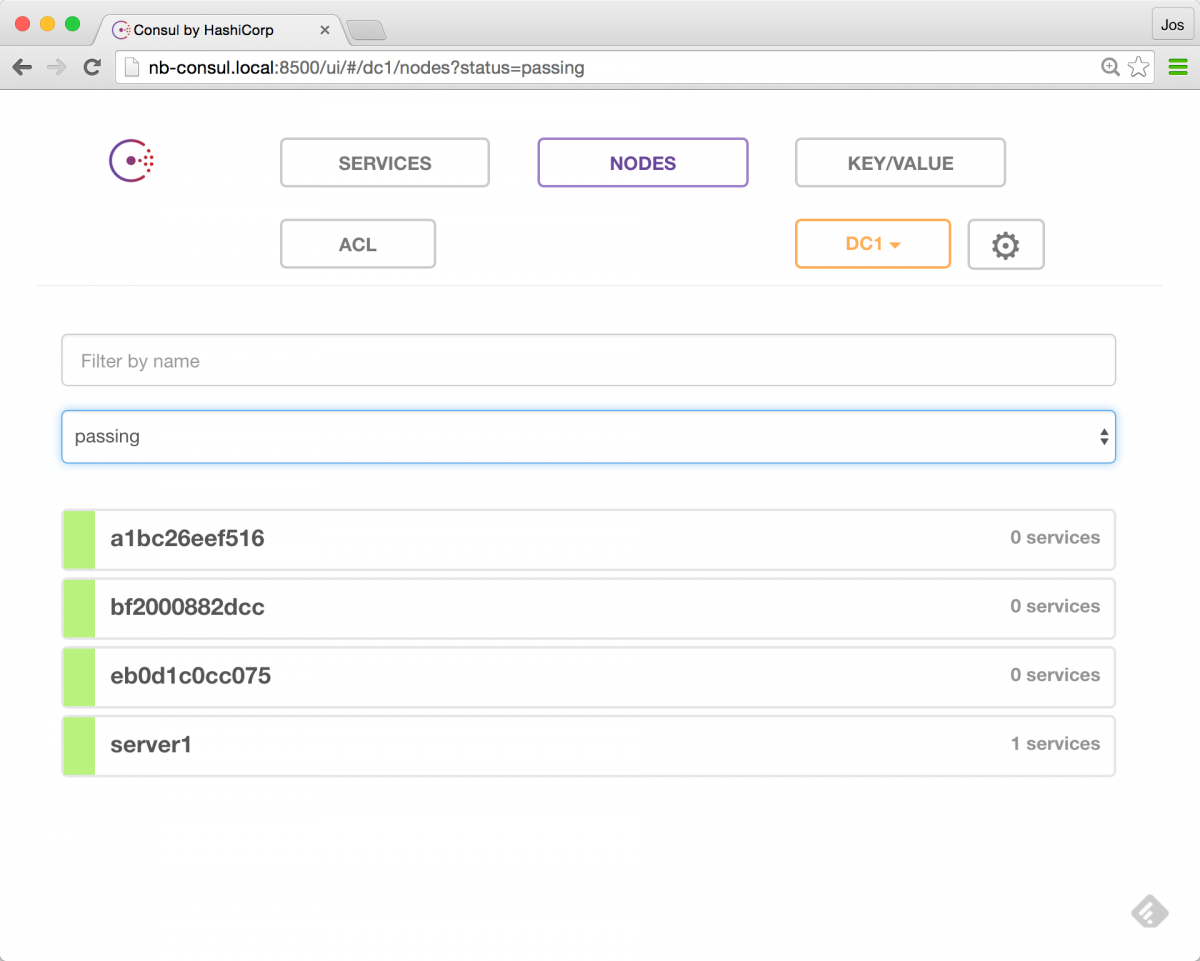

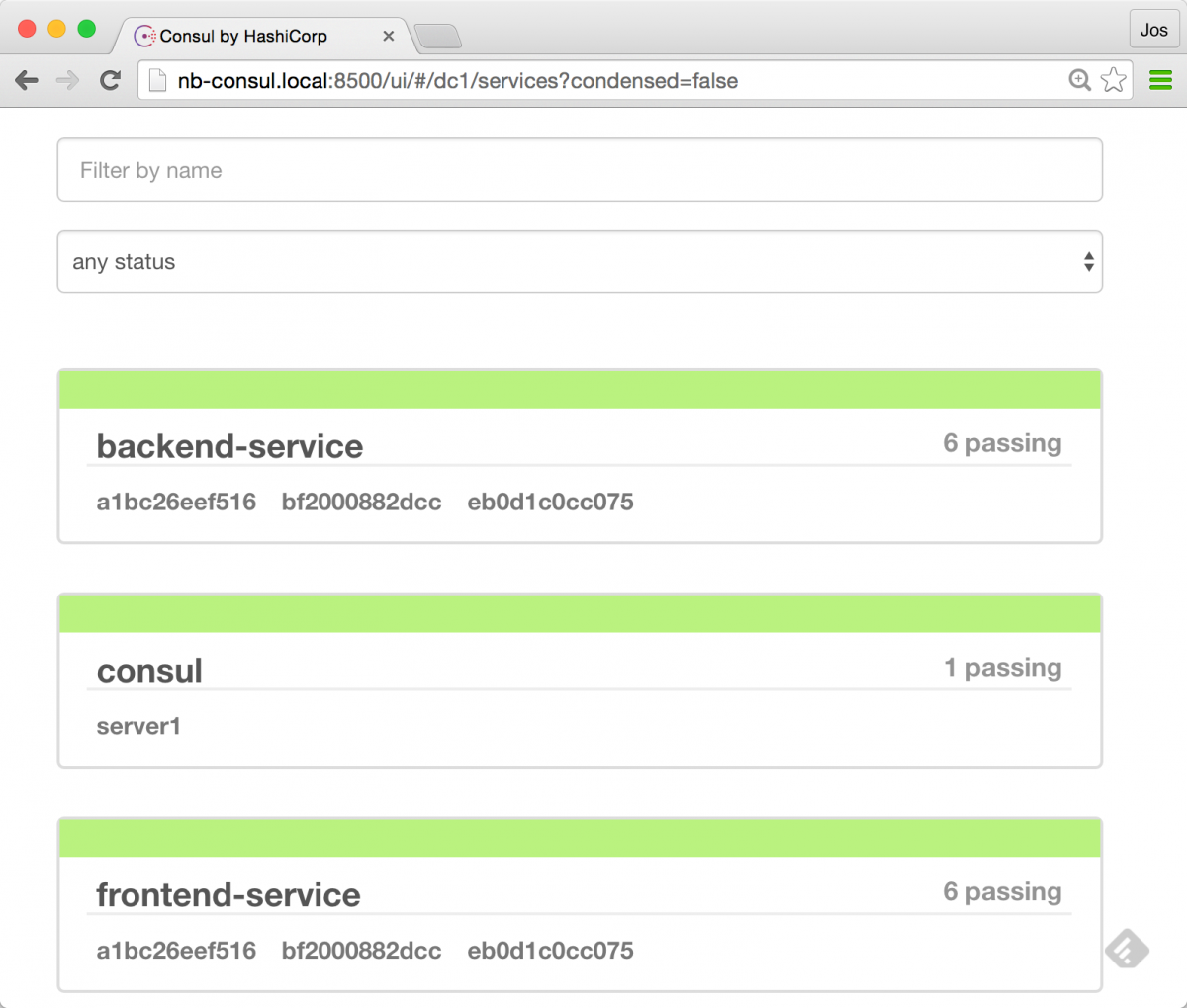

eb0d1c0cc075progrium/consul"/bin/start -ui-dir /"nb3/consul_agent_3at this point, we have a consul server running in docker-machine "nb-consul" and we've got three agents running on our nodes. to validate our setup, let's open up the consul server's interface: http://nb-consul.local:8500

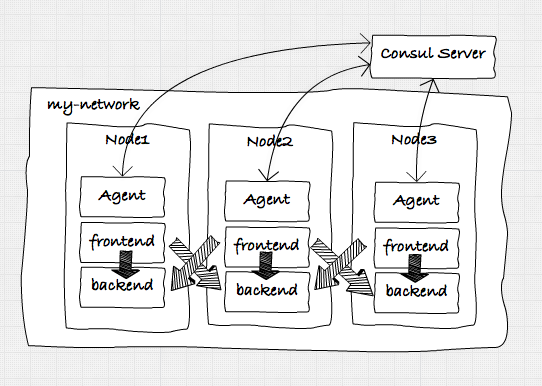

And, as you can see, we've got one server running (our Consul server) and the three agents. So at this point we can start adding our services, to get to this architecture:


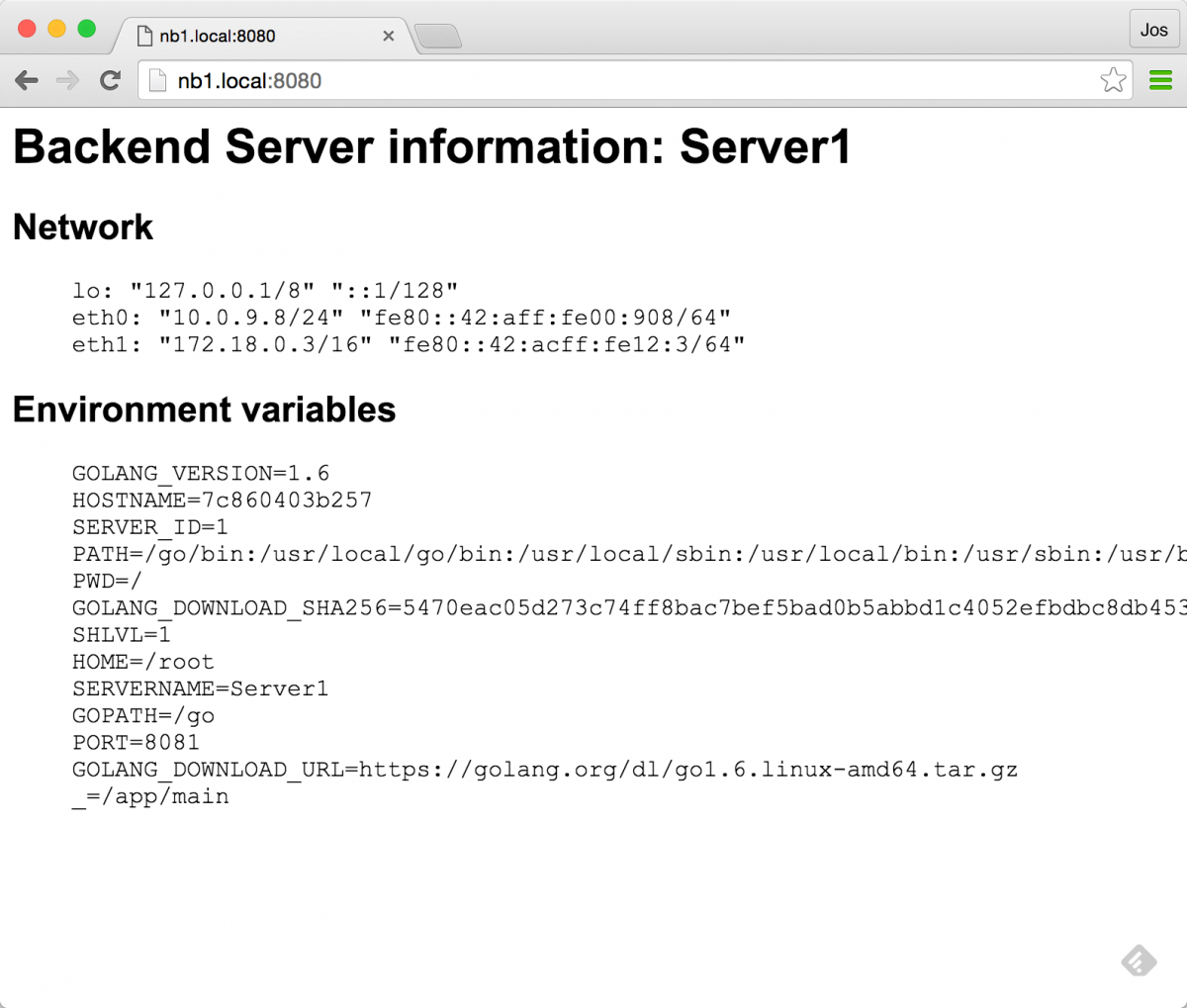

The services, in this case, are just simple Go applications. I created a simple application that can run in frontend or in backend mode. In frontend mode, it provides a minimal UI with a button to call a backend service. In backend mode, it provides a simple API that returns some information to the calling party and a simple UI showing some statistics. For convenience, I've pushed this image to Docker Hub (https://hub.docker.com/r/josdirksen/demo-service/), so you can easily use it without having to build it from the source GitHub repository.

As you can see in the previous architecture overview, we want to start a frontend and a backend service on each of the nodes. We could do this manually, but since we've got Docker Swarm, we can easily do this through docker-compose. If you want to see what the compose files look like, you can check the sources here (https://github.com/josdirksen/next-build-consul).

Let's first launch the services, and then we'll look at how they register themselves with Consul:

    # make sure you select the swarm master
    $ . dm-env nb1 --swarm

    # now use docker-compose to run the backend services
    $ docker-compose -f docker-compose-backend.yml up -d
    Creating backend2
    Creating backend3
    Creating backend1

    # and use docker-compose to run the frontend services
    $ docker-compose -f docker-compose-frontend.yml up -d
    Creating frontend1
    Creating frontend3
    Creating frontend2

    # check in docker if everything is running
    $ docker ps --format '{{ .ID }}\t{{ .Image }}\t{{ .Command }}\t{{ .Names }}'
    65846be2e367   josdirksen/demo-service   "/entrypoint.sh --typ"   nb2/frontend2
    aedd80ab0889   josdirksen/demo-service   "/entrypoint.sh --typ"   nb3/frontend3
    d9c3b1d83b5e   josdirksen/demo-service   "/entrypoint.sh --typ"   nb1/frontend1
    7c860403b257   josdirksen/demo-service   "/entrypoint.sh --typ"   nb1/backend1
    80632e910d33   josdirksen/demo-service   "/entrypoint.sh --typ"   nb3/backend3
    534da0670e13   josdirksen/demo-service   "/entrypoint.sh --typ"   nb2/backend2
    bf2000882dcc   progrium/consul           "/bin/start -ui-dir /"   nb1/consul_agent_1
    a1bc26eef516   progrium/consul           "/bin/start -ui-dir /"   nb2/consul_agent_2
    eb0d1c0cc075   progrium/consul           "/bin/start -ui-dir /"   nb3/consul_agent_3

As you can see in the last output of "docker ps", we have three frontends, three backends, and three Consul agents running. This is pretty much the architecture we're aiming for. We can also see this when we open up Consul:

As you can see, we've got three frontend services and three backend services registered in Consul. If we open one of the backends, we'll see some general information:

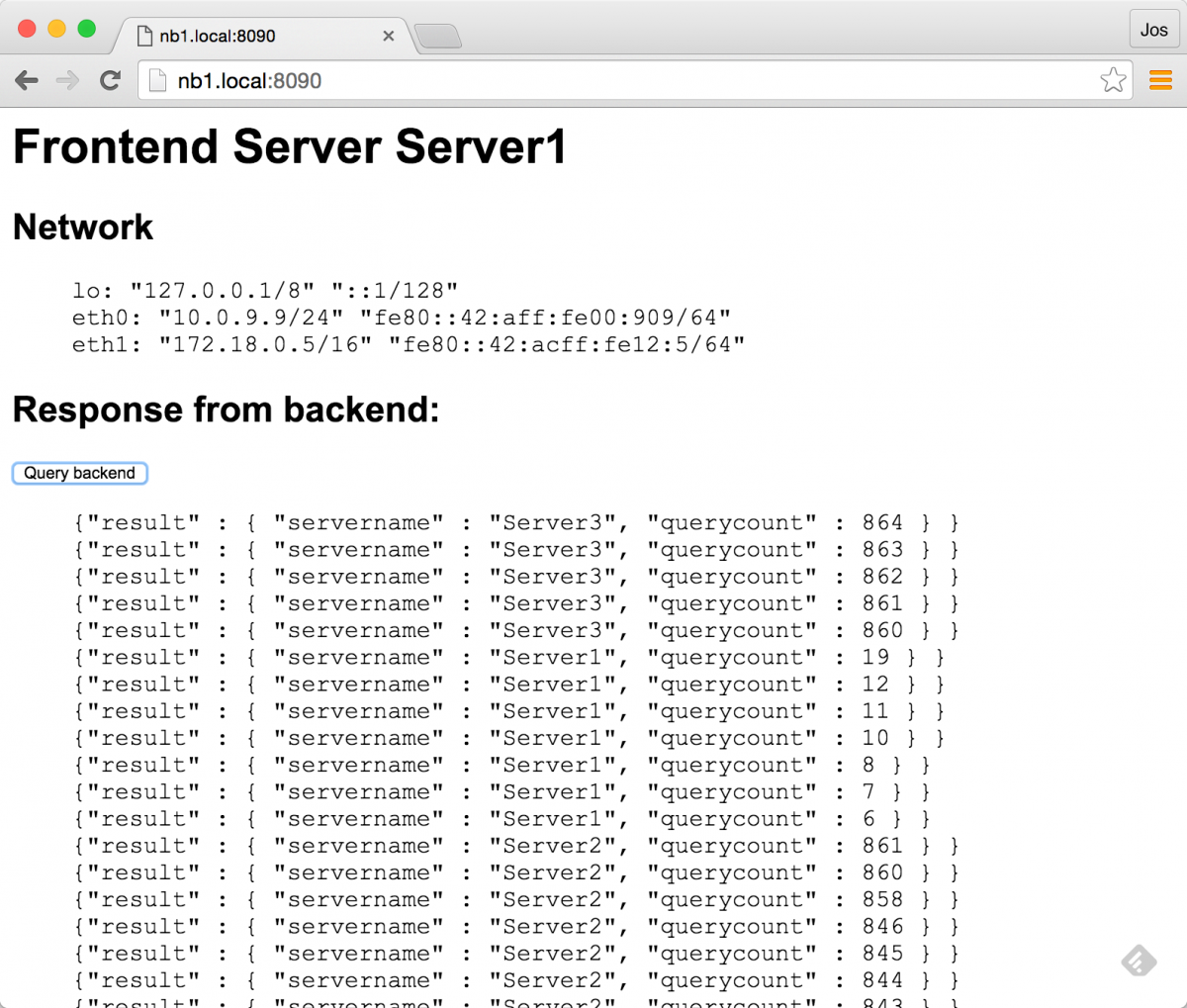

And we can use the frontend UI to call one of our backends:

There are, however, a couple of questions we need to answer:

- Service registration: when we start a backend or frontend service, we see it appear in Consul. How does that happen?

- Service discovery: when we click the button on the frontend service, a call is made to one of the backend services. How does the frontend know which service to call?

In the next sections, we'll look a bit closer at these questions.

First off, service registration. To register a service with Consul, we make a very simple REST call (a PUT to /v1/agent/service/register) to our local Consul agent, with a body that looks something like this:

    {
      "Name": "service1",
      "Address": "10.0.0.12",
      "Port": 8080,
      "Check": {
        "HTTP": "http://10.0.0.12:8080/health",
        "Interval": "5s"
      }
    }

As you can see, we specify the name, address, and port where the service can be found, and we add an additional health check. When the health check returns a status code in the 2xx range, the service is marked as healthy and can be discovered by other services. So, how do we do this for our services? If you look at the sources for this example, you can find the "script/entrypoint.sh" file, which looks like this:

    #!/usr/bin/env bash

    # find the IPv4 address of eth0 (the line after "state UP" plus two more,
    # last one is the "inet a.b.c.d/nn" line)
    ip=`ip addr | grep -E 'eth0.*state UP' -A2 | tail -n 1 | awk '{print $2}' | cut -f1 -d '/'`
    # "--type backend" results in the name "backend-service"
    name="$2-service"

    read -r -d '' msg << EOM
    {
      "Name": "$name",
      "Address": "$ip",
      "Port": $port,
      "Check": {
        "HTTP": "http://$ip:$port",
        "Interval": "5s"
      }
    }
    EOM

    # register with the local agent, then start the application
    curl -v -X PUT -d "$msg" http://consul_agent_$server_id:8500/v1/agent/service/register && /app/main "$@"

What this script does is create the JSON to be sent to the Consul agent and, before starting the main application, use "curl" to send it. So when a service is started, it automatically registers itself with the local Consul agent (note that you can also do this in a more automated way, for instance by using Registrator). This works because we can reference the local agent by its container name: both containers are attached to the same overlay network. If you look closely, you might see that we use a couple of environment variables here. These are passed in through the docker-compose file we use:

    ...
      frontend-1:
        image: josdirksen/demo-service
        container_name: frontend1
        ports:
          - 8090:8090
        environment:
          - "constraint:node==nb1"
          - server_id=1
          - servername=server1
          - port=8090
        command: /entrypoint.sh --type frontend
        dns: 192.168.99.106
        dns_search: service.consul
    ...

The interesting parts here are the dns entries. As you might remember, 192.168.99.106 is the address of our Consul server. This means that we do DNS lookups against Consul (we could also have pointed to a Consul agent), and the search domain lets us use short names like "backend-service".
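Inside the containers, the `dns` and `dns_search` settings do all the work. Code running elsewhere (for example on your Mac) can point Go's resolver at Consul explicitly instead. The following is a minimal sketch, assuming Consul's DNS is reachable on `192.168.99.106:53` as published by the `docker run` command earlier; `consulResolver` and `serviceName` are hypothetical helper names.

```go
package main

import (
	"context"
	"fmt"
	"net"
	"time"
)

// consulResolver returns a resolver that sends every DNS query to the given
// Consul DNS address (host:port), ignoring the system's configured servers.
func consulResolver(dnsAddr string) *net.Resolver {
	return &net.Resolver{
		// PreferGo selects Go's built-in resolver, which is the one that
		// uses the Dial function below.
		PreferGo: true,
		Dial: func(ctx context.Context, network, _ string) (net.Conn, error) {
			d := net.Dialer{Timeout: 2 * time.Second}
			return d.DialContext(ctx, network, dnsAddr)
		},
	}
}

// serviceName expands a short service name the way
// "dns_search: service.consul" does inside the containers.
func serviceName(service string) string {
	return service + ".service.consul"
}

func main() {
	r := consulResolver("192.168.99.106:53")
	ctx, cancel := context.WithTimeout(context.Background(), 5*time.Second)
	defer cancel()

	ips, err := r.LookupHost(ctx, serviceName("backend-service"))
	if err != nil {
		fmt.Println("lookup failed:", err)
		return
	}
	// One address per instance that isn't failing its health check.
	fmt.Println(ips)
}
```

Consul's DNS interface also answers SRV queries, which carry each instance's port as well; `net.Resolver` has a `LookupSRV` method for that case.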

With Consul acting as the DNS server, we can just reference a service by name and use DNS to resolve it. The following shows how this works:

    # check which ips are registered for the backend-service
    # called from outside the container
    $ dig @nb-consul.local backend-service.service.consul +short
    10.0.9.7
    10.0.9.8
    10.0.9.6

    # if we do this from a container, we can do just this
    $ docker exec -ti nb2/frontend2 ping backend-service
    PING backend-service.service.consul (10.0.9.8): 56 data bytes
    64 bytes from 10.0.9.8: icmp_seq=0 ttl=64 time=0.809 ms

Cool, right? We can discover the service just by using DNS. That also means that integrating this into our existing applications is really easy, since we can rely on basic DNS resolution. For example, in the frontend service we call the backend using this code:

    resp, err := http.Get("http://backend-service:8081")
    if err != nil {
        // handle error
        fmt.Println(err)
    } else {
        defer resp.Body.Close()
        body, _ := ioutil.ReadAll(resp.Body)
        w.Header().Set("Content-Type", resp.Header.Get("Content-Type"))
        w.Write(body)
    }

This calls one of the backend services using DNS. We now also have some simple failover, since Consul's DNS TTL (time to live) is set to 0. Applications might still do some caching, but it means that we already have some basic failover:

    $ curl -s backend-service:8081
    {"result" : {
      "servername" : "server1",
      "querycount" : 778
      }
    }

    # shut down server 1 and try again. Consul only drops it once its
    # health check (every 5s) fails, and the client side may also cache
    # DNS results, so you might need to wait a bit
    $ curl -s backend-service:8081
    {"result" : {
      "servername" : "server2",
      "querycount" : 770
      }
    }

    $ curl -s backend-service:8081
    {"result" : {
      "servername" : "server2",
      "querycount" : 771
      }
    }

Which, of course, also works for our frontend Go application:

In the follow-up to this article, we'll show some more advanced failover techniques by introducing HAProxy as an intermediary.

That pretty much wraps it up for this first article. So, in summary, here's what we have done:

- We've set up a simple architecture using four Docker nodes: one for the Consul server, and three for our services.

- The services register themselves with Consul on startup.

- We don't need to do anything explicit to enable service discovery. We can use standard DNS to look up a service.

- Consul uses a TTL of 0 for DNS and returns the available services using round robin. As you've seen, you can already use this for basic failover when a service instance goes down.

Stay tuned for the follow-up article in the coming weeks.

Published at DZone with permission of Jos Dirksen, DZone MVB. See the original article here.
