Spring Webflux: EventLoop vs Thread Per Request Model

In this article, we take a look at Spring Webflux to understand how we can use reactive programming to increase application performance.

Spring Webflux Introduction

Spring Webflux was introduced as part of Spring 5, bringing support for reactive programming to the framework. It uses an asynchronous, non-blocking programming model, which lets applications scale to sustain a high request load over a period of time.

What Problem It Solved Compared With Spring MVC

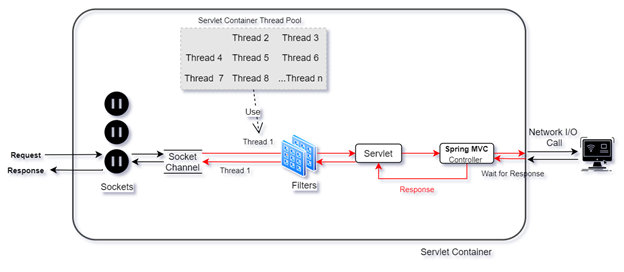

Spring MVC uses a synchronous programming model, where each request is mapped to a thread that is responsible for writing the response back to the request socket. When the application makes a blocking call, such as fetching data from a database, fetching a response from another application, or reading and writing a file, the request thread has to wait for the result.

The request thread is blocked during this wait and does no useful work on the CPU. That’s why this model relies on a large thread pool for request processing. This can be fine for applications with a low request rate, but under a high request rate the pool can be exhausted, which makes the application slow or unresponsive and directly affects the business that depends on it.

For such applications, reactive programming can be used effectively. Here, a request thread sends the required input data to the network and doesn’t wait for a response. Instead, it registers a callback function, which is executed once the blocking task has completed. The request thread is then immediately available to handle another request.
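The callback idea can be sketched with plain JDK classes. This is a minimal illustration, not Webflux code: `slowLookup` is a hypothetical stand-in for a network call, and `CompletableFuture` plays the role of the callback registration.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class CallbackSketch {
    // Hypothetical stand-in for a slow network call (database, remote service).
    static String slowLookup(String key) {
        try {
            Thread.sleep(100);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return "value-for-" + key;
    }

    // Starts the call on an I/O pool, attaches a callback, and returns at once.
    static CompletableFuture<String> handle(String key, ExecutorService ioPool) {
        return CompletableFuture
                .supplyAsync(() -> slowLookup(key), ioPool)
                .thenApply(value -> "response:" + value); // runs when the result arrives
    }

    public static void main(String[] args) {
        ExecutorService ioPool = Executors.newFixedThreadPool(2);
        CompletableFuture<String> pending = handle("user-1", ioPool);
        // The calling thread is free at this point and could accept another request.
        System.out.println("request thread released, done=" + pending.isDone());
        System.out.println(pending.join());
        ioPool.shutdown();
    }
}
```

To stay dependency-free, this sketch only moves the wait to a helper pool. A truly reactive driver would not park any thread while the response is in flight.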

Used properly, this model never leaves a thread blocked, so threads can use the CPU efficiently. Properly here means that every component on the request path behaves reactively: database drivers, inter-service communication, the web server, and so on should all be non-blocking as well.

Embedded Server

Spring Webflux uses Netty as its default embedded server. It is also supported on Tomcat, Jetty, Undertow, and other Servlet 3.1+ containers. Note that Netty and Undertow are non-servlet runtimes, while Tomcat and Jetty are well-known servlet containers.

Spring MVC has Tomcat as its default embedded server, which uses the Thread Per Request Model. With Webflux, Netty is the preferred default, and it uses the Event Loop Model. Webflux is also supported on Tomcat, but only with versions that implement the Servlet 3.1 or later APIs.

Note: Servlet 3.0 introduced asynchronous request processing, and Servlet 3.1 added non-blocking I/O, which allows the whole request to be handled asynchronously.

EventLoop

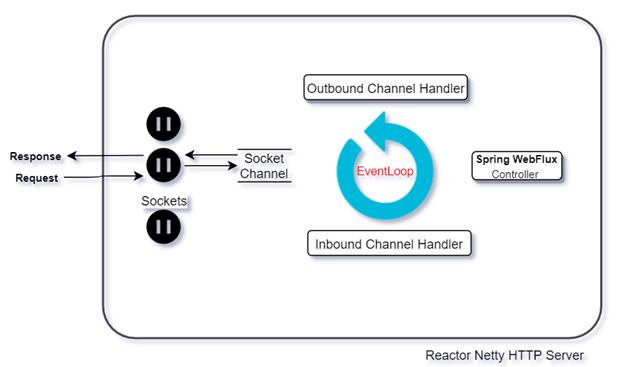

An EventLoop is a non-blocking I/O (NIO) thread that runs continuously and takes new requests from a range of socket channels. If there are multiple EventLoops, each EventLoop is assigned a group of socket channels, and all EventLoops are managed under an EventLoopGroup.

The following diagram shows how EventLoops work:

Here, the EventLoop is shown on the server side. It works the same way on the client side, where it sends requests to another server, i.e. for outbound I/O requests.

1. All requests are received on a unique socket, associated with a channel, known as SocketChannel.

2. There is always a single EventLoop thread associated with a range of SocketChannels. So, all requests to those Sockets/SocketChannels are handed over to the same EventLoop.

3. Requests on the EventLoop go through a Channel Pipeline, where a number of Inbound channel handlers or WebFilters are configured for the required processing.

4. After this, the EventLoop executes the application-specific code.

5. On its completion, the EventLoop again goes through a number of Outbound channel handlers for configured processing.

6. In the end, the EventLoop hands the response back to the same SocketChannel/Socket.

7. Repeat Step 1 to Step 6 in a loop.
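The steps above can be sketched as a single thread draining a queue of requests. This is a simplified illustration using only JDK classes, not Netty's actual implementation: `Request`, the handler lists, and the `echo:` application logic are all hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.UnaryOperator;

class EventLoopSketch {
    // Hypothetical request type: the channel it arrived on plus its payload.
    static final class Request {
        final int channelId;
        final String payload;

        Request(int channelId, String payload) {
            this.channelId = channelId;
            this.payload = payload;
        }
    }

    // Runs one event-loop thread over the given requests and returns what it wrote back.
    static List<String> run(List<Request> incoming) {
        // Steps 1-2: every channel's requests land in the queue of one loop.
        BlockingQueue<Request> channelEvents = new LinkedBlockingQueue<>(incoming);
        List<UnaryOperator<String>> inbound = List.<UnaryOperator<String>>of(
                s -> s.trim(), s -> s.toLowerCase(Locale.ROOT));
        List<UnaryOperator<String>> outbound = List.<UnaryOperator<String>>of(s -> "200 " + s);
        List<String> written = new ArrayList<>();

        Thread eventLoop = new Thread(() -> {
            Request request;
            // A real EventLoop never exits; this one stops once the queue is drained.
            while ((request = channelEvents.poll()) != null) {
                String data = request.payload;
                for (UnaryOperator<String> handler : inbound) data = handler.apply(data);  // Step 3
                data = "echo:" + data;                                                      // Step 4
                for (UnaryOperator<String> handler : outbound) data = handler.apply(data); // Step 5
                written.add("channel-" + request.channelId + " <- " + data);               // Step 6
            }
        }, "event-loop-1");
        eventLoop.start();
        try {
            eventLoop.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return written;
    }

    public static void main(String[] args) {
        List<Request> requests = List.of(new Request(1, "  Hello "), new Request(2, "WORLD"));
        run(requests).forEach(System.out::println);
    }
}
```

Because one thread does everything, the requests are handled strictly one after another, which is exactly why the application code in Step 4 must return quickly.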

That’s a simple use case, and running on a single thread certainly minimizes resource usage, but it does not solve the real problem.

What happens if an application blocks the EventLoop for a long time due to any of the following reasons?

- CPU-intensive work.

- Database operations like read/write etc.

- File read/write.

- Any call to another application over the network.

In these cases, the EventLoop is blocked at Step 4, and we are back in the same situation, where the application can quickly become slow or unresponsive. Creating additional EventLoops is not the solution. As already mentioned, a range of sockets is bound to a single EventLoop, so when one EventLoop is blocked, no other EventLoop can take over the sockets already bound to it.
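The effect of a blocked EventLoop can be reproduced with a single-threaded executor standing in for the loop. This is only a rough sketch: the sleep is a hypothetical placeholder for any of the blocking operations listed above.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;

class BlockedLoopSketch {
    // Returns how many milliseconds a trivial request waited behind a blocking one.
    static long delayBehindBlockingCall(long blockMillis) {
        ExecutorService eventLoop = Executors.newSingleThreadExecutor();
        try {
            long start = System.nanoTime();
            Runnable blockingHandler = () -> {
                try {
                    Thread.sleep(blockMillis); // e.g. a blocking database read
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            };
            Callable<Long> quickHandler = System::nanoTime; // records when it finally ran
            eventLoop.submit(blockingHandler);
            Future<Long> quickDone = eventLoop.submit(quickHandler);
            return TimeUnit.NANOSECONDS.toMillis(quickDone.get() - start);
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            eventLoop.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println("quick request waited ~" + delayBehindBlockingCall(200) + " ms");
    }
}
```

The quick request needs almost no CPU time, yet it cannot start until the blocking handler ahead of it has returned.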

Note: Multiple EventLoops should be created only if an application is running on a multi-CPU platform, as EventLoops must be kept running, and a single CPU can’t keep multiple EventLoops running simultaneously. By default, the application starts with as many EventLoops as it has CPU cores.

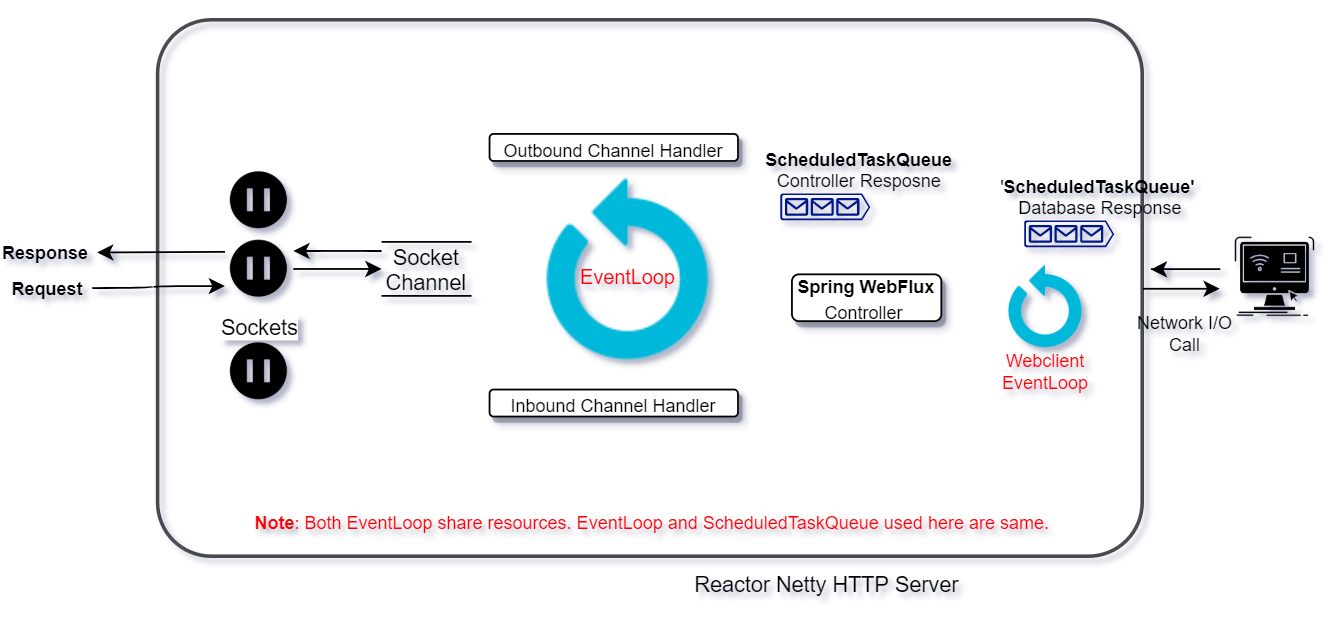

This is where the real power of the NIO EventLoop comes into the picture, and the idea behind it is simple. The application should delegate the long-running work to another thread and return the result asynchronously via a callback, which unblocks the EventLoop for new requests. The updated working steps for the EventLoop are as follows:

- Step 1 to Step 3 as above.

- The EventLoop delegates the request to a new Worker Thread.

- The Worker Thread performs the long-running task.

- After completion, it writes the response to a task and adds that task to the ScheduledTaskQueue.

- The EventLoop polls for tasks in the ScheduledTaskQueue.

  - If there are any, it performs Step 5 to Step 6 via the task’s Runnable run method.

  - Otherwise, it continues to poll for new requests at the SocketChannel.
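A rough sketch of this delegation, again with JDK classes only. Here the single-threaded executor's internal queue plays the role of the ScheduledTaskQueue, which is an assumption made for illustration, and `slowCall` is a hypothetical long-running task.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class DelegatingEventLoopSketch {
    // Hypothetical long-running task that must not run on the event loop.
    static String slowCall(String request) {
        try {
            Thread.sleep(100);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return request + ":done";
    }

    // Handles the given number of requests and returns a log of who wrote each response.
    static List<String> run(int requests) {
        ExecutorService eventLoop = Executors.newSingleThreadExecutor(r -> new Thread(r, "event-loop"));
        ExecutorService workers = Executors.newFixedThreadPool(4, r -> new Thread(r, "worker"));
        List<String> log = Collections.synchronizedList(new ArrayList<>());
        try {
            List<CompletableFuture<Void>> pending = new ArrayList<>();
            for (int i = 1; i <= requests; i++) {
                int id = i;
                pending.add(CompletableFuture
                        // The event loop accepts and pre-processes the request.
                        .supplyAsync(() -> "req-" + id, eventLoop)
                        // The slow part is handed to a worker thread.
                        .thenApplyAsync(DelegatingEventLoopSketch::slowCall, workers)
                        // The completion is queued back onto the event loop, which writes the response.
                        .thenAcceptAsync(result ->
                                log.add(Thread.currentThread().getName() + " wrote " + result), eventLoop));
            }
            CompletableFuture.allOf(pending.toArray(new CompletableFuture[0])).join();
            return log;
        } finally {
            eventLoop.shutdown();
            workers.shutdown();
        }
    }

    public static void main(String[] args) {
        run(5).forEach(System.out::println);
    }
}
```

Every response is written by the `event-loop` thread, but that thread never sleeps: it only accepts requests and runs the short completion tasks that workers queue back to it.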

Advantages:

- Lightweight request processing thread.

- Optimum utilization of hardware resources.

- Single EventLoop can be shared between an HTTP client and request processing.

- A single thread can handle requests over many sockets (i.e. from different clients).

- This model provides support for backpressure handling in case of infinite stream response.

Thread Per Request Model (Implementing Servlet 3.1+)

The Thread Per Request model has been in use since the introduction of synchronous Servlet programming. Servlet containers adopted it to handle incoming requests. Because request handling is synchronous, this model needs many threads, which increases resource usage. It does not account for network I/O, which can block these threads. To scale, the system therefore generally needs more resources, which increases its cost.

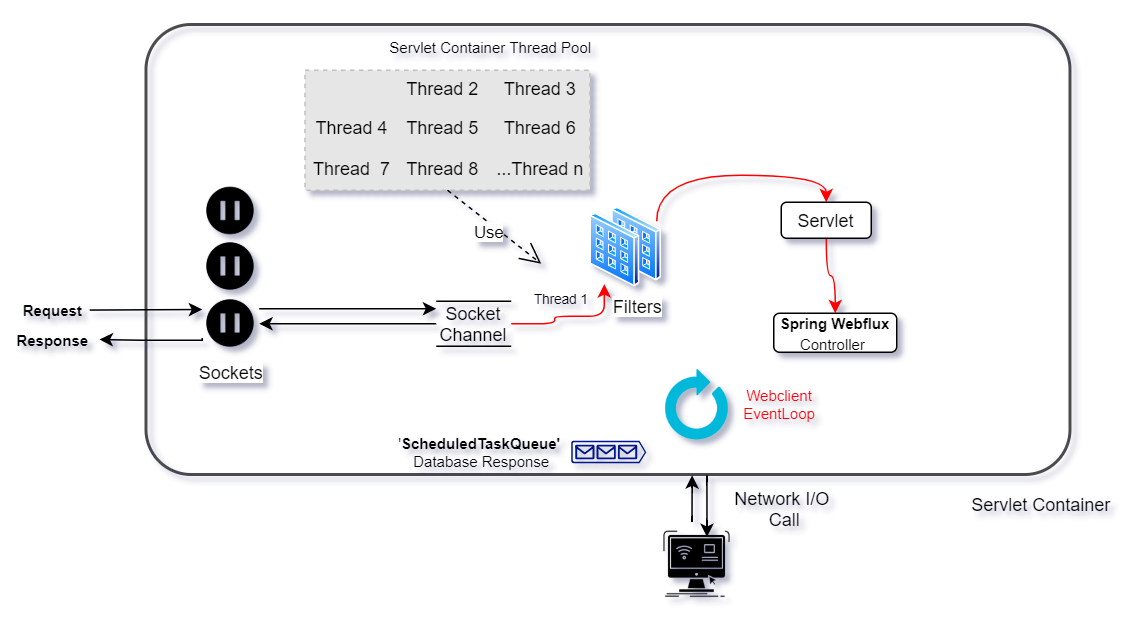

Going reactive with Spring Webflux still leaves the option of using Servlet containers, provided they implement the Servlet 3.1+ APIs. Tomcat is the most commonly used Servlet container, and it supports reactive programming as well.

When you run Spring Webflux on Tomcat, the application starts with a certain number of request processing threads in the thread pool (e.g. 10). Requests from sockets are assigned to a thread from this pool. Note that there is no permanent binding of a socket to a thread.

If a request thread is blocked on an I/O call, requests can still be handled by the other threads in the pool. However, enough such requests can block all request threads. Up to this point, the behavior is the same as in synchronous processing. The reactive approach, however, allows the application to delegate requests to another pool of worker threads, as discussed for the EventLoop. That way, request processing threads become available again and the application remains responsive.
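The first point, that one blocked request thread does not stop the others, can be illustrated with a small fixed pool. This is a hedged sketch rather than Tomcat's real connector: the pool size of 2 and the latch that simulates a slow I/O call are assumptions chosen to keep the example short.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ThreadPerRequestSketch {
    // Returns request names in the order in which they completed.
    static List<String> run() {
        ExecutorService requestThreads = Executors.newFixedThreadPool(2);
        CountDownLatch slowIoFinished = new CountDownLatch(1);
        List<String> completed = Collections.synchronizedList(new ArrayList<>());
        try {
            // This request occupies one pool thread until its simulated I/O call returns.
            Future<?> blocked = requestThreads.submit(() -> {
                try {
                    slowIoFinished.await();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
                completed.add("blocked-request");
            });
            // Any free thread may pick these up; no socket is bound to a specific thread.
            List<Future<?>> quick = new ArrayList<>();
            for (int i = 1; i <= 3; i++) {
                String name = "quick-request-" + i;
                quick.add(requestThreads.submit(() -> {
                    completed.add(name);
                }));
            }
            for (Future<?> done : quick) {
                done.get();
            }
            slowIoFinished.countDown(); // the slow I/O call finally returns
            blocked.get();
            return completed;
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            requestThreads.shutdown();
        }
    }

    public static void main(String[] args) {
        run().forEach(System.out::println);
    }
}
```

The quick requests all finish while the first request is still waiting. With two blocked requests instead of one, the pool would be exhausted, which is the case delegation to worker threads is meant to avoid.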

The following are the steps executed while handling a request in this model:

- All requests are received on a unique socket, associated with a channel known as SocketChannel.

- The request is assigned to a Thread from the Thread Pool.

- The request on the Thread goes through certain handlers (such as filters and servlets) for the necessary pre-processing.

- The request thread can delegate the request to a Worker Thread or a reactive web client when it has to execute blocking code in a Controller.

- On completion, the Worker Thread or web client (EventLoop) is responsible for writing the response back to the corresponding Socket.

Again, making the application fully reactive by choosing reactive clients (like the reactive WebClient) and reactive DB drivers lets a minimal number of threads scale the system effectively.

Advantages:

- Supports and allows the use of Servlet APIs.

- If any request thread blocks, it only blocks a single client socket, not a range of sockets as in EventLoop.

- Optimum utilization of hardware resources.

Performance Comparison

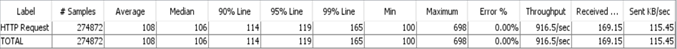

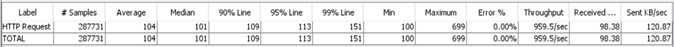

For this comparison, a sample reactive Spring Boot application using Spring Webflux was created with a deliberate delay of 100 ms. The request is delegated to another Worker Thread to unblock the request processing thread.

The following configuration has been used for this comparison:

- Single EventLoop configured for Netty performance using the property reactor.netty.ioWorkerCount=1

- Single request processing thread configured for Tomcat performance using the property server.tomcat.max-threads=1

- AWS EC2 instance of type t2.micro (1 GB RAM, 1 CPU).

- JMeter test script with 100 users and a ramp-up of 1 second, executed for 10 minutes.

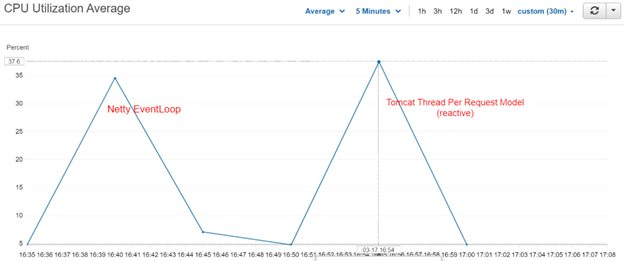

Max CPU utilization

Tomcat Thread Per Request Model: 37.6%

Netty EventLoop: 34.6%

Result: Tomcat shows about 8.67% higher maximum CPU utilization than Netty.

Performance Result

Tomcat 90th percentile response time: 114ms

Netty EventLoop 90th percentile response time: 109ms

Result: Tomcat shows about 4.59% higher 90th percentile response time than Netty.
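Both result lines are relative increases over the Netty figure. As a quick check of the arithmetic, here is a small sketch (the class and method names are only illustrative) that takes the Netty value as the baseline:

```java
import java.util.Locale;

class RelativeIncrease {
    // Percentage by which the observed value exceeds the baseline.
    static double percentIncrease(double baseline, double observed) {
        return (observed - baseline) / baseline * 100.0;
    }

    public static void main(String[] args) {
        // Netty is the baseline, Tomcat the observed value.
        System.out.println(String.format(Locale.ROOT, "CPU: %.2f%%", percentIncrease(34.6, 37.6))); // CPU: 8.67%
        System.out.println(String.format(Locale.ROOT, "p90: %.2f%%", percentIncrease(109, 114)));   // p90: 4.59%
    }
}
```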
