Test Design Guidelines for Your CI/CD Pipeline

This article reviews the challenges of software testing and best practice guidelines for automated tests.

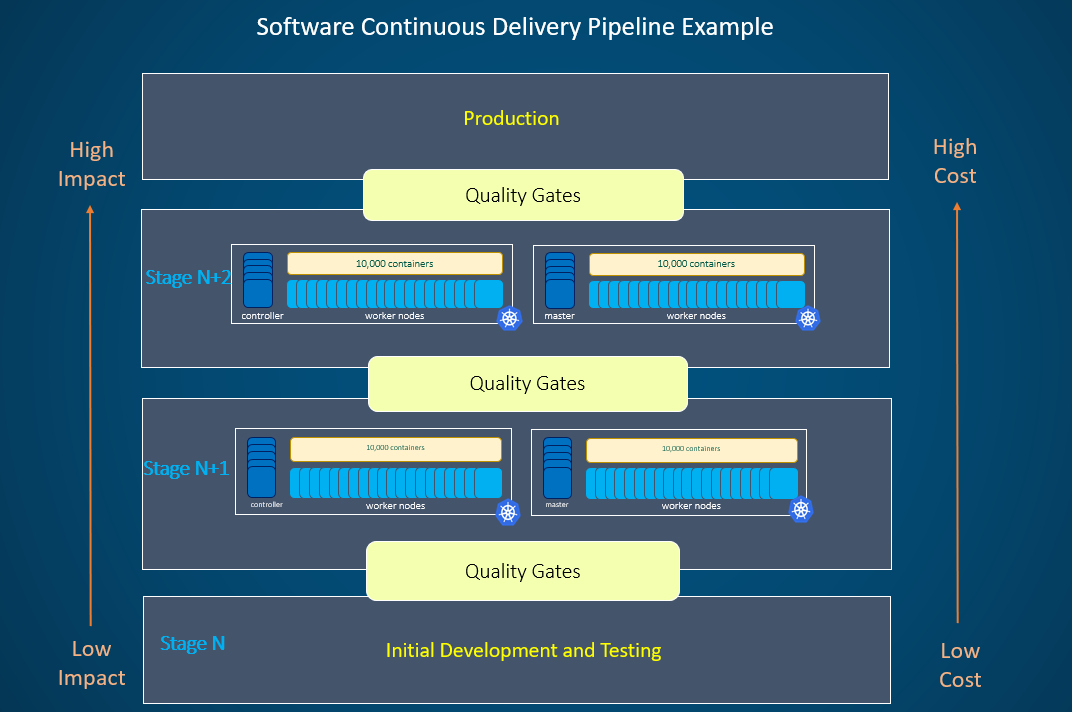

When delivering software to market faster, it is critical to onboard automated tests into your continuous delivery pipeline to verify that the software meets the standards your customers expect. Your continuous delivery pipeline may also consist of many stages, each of which should trigger automated tests that verify defined quality gates before the software can move to the next stage and eventually into production (see Figure 1). Depending on the stage of your pipeline, your automated tests may range in complexity from unit and integration tests to end-to-end and performance tests. Given the quantity and complexity of these tests, and the possibility of many stages in your pipeline, onboarding, executing, and evaluating your tests before the software is released can present many challenges.

This article describes some of these challenges. I will also provide some best practice guidelines on how your automated tests can follow a contract, which helps you deliver software to market faster while maintaining quality. Following a contract helps you onboard your tests in a timely and efficient manner. It also helps others in your organization when they need to troubleshoot issues in the pipeline.

Strive to Run Any Test, Anywhere, Anytime, by Anyone!

Figure 1: Example Software Continuous Delivery Pipeline

Challenges

Several challenges can arise when onboarding your tests into your continuous delivery pipeline, and each of them can keep your organization from delivering software to market reliably:

Quantity of Technologies

Automated tests can be developed with many technologies, such as pytest, JUnit, Selenium, and Cucumber. These technologies might have competing installation requirements, such as operating system levels, browser versions, and third-party libraries. In addition, the infrastructure that hosts your pipeline may not have enough dedicated resources or elasticity to support this variety of technologies. Ideally, you could execute tests in any environment without having to worry about competing requirements.

Test Runtime Dependencies

Tests can also depend on a variety of inputs at runtime, such as text files, images, and database tables. Accessing these inputs can be challenging because they may be persisted in an external location that your test must retrieve during execution. If these external repositories are offline at runtime, they can cause unanticipated test failures.

Different Input Parameters

When onboarding and sharing your tests in your organization's CI/CD process, it is common for your test suite to accept input parameters so that the tests can run with different values. For example, your tests may have an input parameter that tells the test suite which environment to target. One test author may name this parameter --base-URL, while another test author in your organization may name it --target. It would be advantageous to have a common signature contract, with the same parameter naming conventions, when onboarding tests into your organization's CI/CD process.

Different Output Formats

The variety of technologies being used for your testing could produce different output formats by default. For example, your pytest test suite could generate plain text output while your Selenium test suite may produce HTML output. Standardizing on a common output format will assist in collecting, aggregating, reporting, and analyzing the results of executing all the tests onboarded into your enterprise CI/CD process.

Difficulties Troubleshooting

If a test fails in your CI/CD pipeline, it may delay moving your software to market, so the failure must be debugged and remediated quickly. Informative logging for each phase of your test helps others in your organization, such as your DevOps team, triage the failure.

Guidelines

Containerize Your Tests

By now, you have likely heard of containers and their many benefits. Containerizing your tests gives you an isolated environment with all your required technologies installed. Because containers are designed to be portable, a containerized test can also run in the cloud, on any machine, or in any continuous integration (CI) environment.

Have a Common Input Contract

Having your test container follow a common contract for input parameters helps with portability. It also reduces the friction of running the test by making clear to the consumer what the test requires. When tests are containerized, the input parameters should be passed as environment variables. For example, the docker command below uses the -e option to define environment variables that are made available to the test container at runtime:

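```shell
# "my-test-container:latest" is a placeholder; substitute the name of your test image
docker run \
  -e BASE_URL=http://machine.com:80 \
  -e TEST_USER=testuser \
  -e TEST_PW=xxxxxxxxxxx \
  -e TEST_LEVEL=smoke \
  my-test-container:latest
```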

In addition, a large number of test containers may be onboarded into your pipeline and run at various stages. A standard naming convention for input parameters helps when other individuals in your organization need to run your test container for debugging or exploratory purposes. For example, if tests need an input parameter that defines the user to run the tests as, use a common name that all test authors follow, such as TEST_USER.
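As a minimal sketch, assuming a Python entrypoint, a test container might read the common input contract like this. The variable names BASE_URL, TEST_USER, TEST_PW, and TEST_LEVEL come from the docker example above; the `InputContract` class, the `load_config` helper, and the `smoke` default for TEST_LEVEL are hypothetical choices for illustration, not part of any standard.

```python
import os
from dataclasses import dataclass, field
from typing import Mapping, Optional

# Variables every test container is assumed to require under the shared contract.
REQUIRED_VARS = ("BASE_URL", "TEST_USER", "TEST_PW")


@dataclass(frozen=True)
class InputContract:
    base_url: str
    test_user: str
    test_pw: str = field(repr=False)  # kept out of repr() so it cannot leak into logs
    test_level: str = "smoke"


def load_config(env: Optional[Mapping[str, str]] = None) -> InputContract:
    """Read the input contract from environment variables, failing fast if any are missing."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise ValueError("Missing required environment variables: " + ", ".join(missing))
    return InputContract(
        base_url=env["BASE_URL"],
        test_user=env["TEST_USER"],
        test_pw=env["TEST_PW"],
        # Assumed default: run the smoke level when TEST_LEVEL is not provided.
        test_level=env.get("TEST_LEVEL", "smoke"),
    )
```

Calling `load_config()` once at startup means a missing variable stops the run before any test executes, with an error that names exactly which parameters the consumer forgot.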

Have a Common Output Contract

As mentioned earlier, the variety of technologies used by your tests could produce different output formats by default. Following a contract that standardizes the test output helps when collecting, aggregating, and analyzing the results across all test containers to determine whether the overall results meet your organization's software delivery guidelines. For example, suppose you have test containers using pytest, JUnit, Selenium, and Cucumber. If the contract specifies output in xUnit format, then the results generated by all of these containers can be collected and reported on in the same manner.
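To make the xUnit output contract concrete, the sketch below writes results in the widely used JUnit XML layout: a `testsuite` element containing `testcase` elements, with a `failure` child for failed cases. The `CaseResult` record and the `to_junit_xml` function are hypothetical names. In practice, pytest can already emit this format with its `--junitxml` option, so a helper like this is only needed for tools that cannot.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CaseResult:
    name: str
    classname: str
    time_seconds: float
    failure_message: Optional[str] = None  # None means the case passed


def to_junit_xml(suite_name: str, results: List[CaseResult]) -> str:
    """Serialize results as a single JUnit-style <testsuite> element."""
    failures = sum(1 for r in results if r.failure_message is not None)
    suite = ET.Element(
        "testsuite",
        name=suite_name,
        tests=str(len(results)),
        failures=str(failures),
        time=f"{sum(r.time_seconds for r in results):.3f}",
    )
    for r in results:
        case = ET.SubElement(
            suite, "testcase", name=r.name, classname=r.classname, time=f"{r.time_seconds:.3f}"
        )
        if r.failure_message is not None:
            # The failure element marks the case as failed for CI report parsers.
            failure = ET.SubElement(case, "failure", message=r.failure_message)
            failure.text = r.failure_message
    return ET.tostring(suite, encoding="unicode")
```

Because every container writes the same element names and attributes, a single pipeline step can count tests and failures across all of them without knowing which framework produced each file.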

Provide Usage/Help Information

When you onboard your test container into your pipeline, you are sharing your tests with others in your organization, such as the DevOps and engineering teams that support the pipeline. Others in your organization may also use your test container as an example when designing their own.

To help others run your test container, provide a common option that displays help and usage information. The help text could include:

- A description of what your test container is attempting to verify

- Descriptions of the available input parameters

- Descriptions of the output format

- One or two example command-line invocations of your test container
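One way to provide the common help option is to have the container's entrypoint recognize a `--help` flag and print a usage block covering the four items listed above. The sketch below uses Python's standard `argparse` module. The description text, the `run-tests` program name, the `/results/results.xml` output path, and the `my-test-container:latest` image name are all hypothetical.

```python
import argparse

# Hypothetical help content for an example test container.
DESCRIPTION = "Verifies the login and account workflows of the deployment at BASE_URL."

EPILOG = """\
Input parameters (environment variables):
  BASE_URL    URL of the deployment under test (required)
  TEST_USER   user name for the tests (required)
  TEST_PW     password for TEST_USER (required; never logged)
  TEST_LEVEL  test level to run, for example smoke or full (default: smoke)

Output:
  JUnit-style XML written to /results/results.xml

Example:
  docker run -e BASE_URL=http://machine.com:80 -e TEST_USER=testuser \\
      -e TEST_PW=xxxxxxxxxxx -e TEST_LEVEL=smoke my-test-container:latest
"""


def build_parser() -> argparse.ArgumentParser:
    # RawDescriptionHelpFormatter keeps the epilog's line breaks and indentation intact.
    return argparse.ArgumentParser(
        prog="run-tests",
        description=DESCRIPTION,
        epilog=EPILOG,
        formatter_class=argparse.RawDescriptionHelpFormatter,
    )
```

If the image's exec-form ENTRYPOINT runs a script that calls `build_parser().parse_args()`, then `docker run my-test-container:latest --help` passes `--help` through to the script, and argparse prints the text above and exits.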

Informative Logging

Logging captures details about what took place during test execution at that moment in time. To assist with faster remediation when there is a failure, the following logging guidelines are beneficial:

- Implement a standard record format that industry observability tooling can parse easily

- Use descriptive messages about the stages and state of the tests at that moment in time

- Ensure that there is NO sensitive data, such as passwords or keys, in the generated log files that might violate your organization's security policies

- Log API (Application Programming Interface) requests and responses to help track the workflow
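Here is a minimal sketch of the first three guidelines using Python's standard `logging` module. Each record is emitted as one JSON object per line, which most log pipelines can parse. A redaction step masks values for keys that look sensitive. The `SENSITIVE_KEYS` set and the convention of passing structured data through `extra={"fields": ...}` are assumptions made for illustration.

```python
import json
import logging
from typing import Any, Dict

# Hypothetical keys whose values must never appear in log output.
SENSITIVE_KEYS = {"password", "test_pw", "token", "api_key", "authorization"}


def redact(fields: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of fields with the values of sensitive keys masked."""
    return {k: ("***" if k.lower() in SENSITIVE_KEYS else v) for k, v in fields.items()}


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry: Dict[str, Any] = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Structured data passed as extra={"fields": {...}} is redacted before output.
        fields = getattr(record, "fields", None)
        if isinstance(fields, dict):
            entry["fields"] = redact(fields)
        return json.dumps(entry, default=str)


def get_logger(name: str = "tests") -> logging.Logger:
    """Return a logger that writes JSON lines to stderr."""
    logger = logging.getLogger(name)
    if not logger.handlers:  # avoid attaching duplicate handlers on repeated calls
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
        logger.setLevel(logging.INFO)
    return logger
```

For API logging, a call such as `get_logger().info("POST /accounts returned 201", extra={"fields": {"status": 201, "TEST_PW": pw}})` records the status code but prints `***` for the password. Note that this sketch redacts only top-level keys. Nested request bodies would need a recursive version.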

Package Test Dependencies Inside of the Container

As mentioned earlier, tests can have various runtime dependencies, such as input data, database tables, and binary inputs. When these dependencies are stored outside of the test container, they may not be available at runtime. To onboard your test container into your pipeline more efficiently, build your input dependencies directly into the container so that they are always available. However, in some use cases it may not make sense to build your dependencies into your test container. For example, your test might need an input dataset that is gigabytes in size. In that case, it may make more sense to work with your DevOps team to make the dataset available to your container on a mounted filesystem.
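The trade-off above can also be reflected in the test code. A test can look first for inputs packaged in the image and then fall back to a mounted directory for large datasets. In the sketch below, the `/opt/tests/data` bundle directory, the `TEST_DATA_DIR` environment variable, and the `resolve_input` helper are hypothetical names. The point is that a missing dependency fails immediately with a message listing every location searched, rather than failing partway through a run.

```python
import os
from pathlib import Path
from typing import Mapping, Optional

# Hypothetical location where small inputs are copied into the image at build time.
BUNDLED_DATA_DIR = Path("/opt/tests/data")


def resolve_input(
    filename: str,
    bundled_dir: Path = BUNDLED_DATA_DIR,
    env: Optional[Mapping[str, str]] = None,
) -> Path:
    """Locate an input file, preferring the copy packaged inside the image.

    Large datasets that are not packaged are looked up in the directory named by
    the (hypothetical) TEST_DATA_DIR variable, for example a mounted volume.
    """
    env = os.environ if env is None else env
    candidates = [bundled_dir / filename]
    mounted = env.get("TEST_DATA_DIR")
    if mounted:
        candidates.append(Path(mounted) / filename)
    for path in candidates:
        if path.is_file():
            return path
    searched = ", ".join(str(p) for p in candidates)
    raise FileNotFoundError(f"Input '{filename}' not found; searched: {searched}")
```

With Docker, the mounted location might be supplied as `-v /path/on/host:/mnt/data -e TEST_DATA_DIR=/mnt/data`, while small inputs are copied into the image when it is built.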

Set Up and Tear Down Resources

Automated tests may need to create resources at execution time. For example, a test might need to create multiple Account resources in your shared deployment under test and perform multiple operations on them. If other tests running in parallel against the same deployment also perform related operations on the same Account resources, unexpected errors could occur. A test design strategy that creates an Account resource with a unique name, performs the operation, asserts that it completed correctly, and then removes the Account resource at the end of the test reduces the risk of failure. This strategy ensures a known state at the beginning and end of the test.
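The create, operate, assert, and remove strategy maps naturally onto a Python context manager (or a pytest fixture that uses `yield`). The sketch below uses an in-memory `FakeAccountClient` so it can run on its own. The client and its `create_account`, `deposit`, and `delete_account` methods are hypothetical stand-ins for whatever API your deployment under test exposes. The unique name comes from `uuid4`, and the `finally` block removes the Account even when an assertion fails.

```python
import uuid
from contextlib import contextmanager
from typing import Dict, Iterator


class FakeAccountClient:
    """Hypothetical stand-in for the API of the shared deployment under test."""

    def __init__(self) -> None:
        self.accounts: Dict[str, dict] = {}

    def create_account(self, name: str) -> dict:
        if name in self.accounts:
            raise ValueError(f"Account {name} already exists")
        self.accounts[name] = {"name": name, "balance": 0}
        return self.accounts[name]

    def deposit(self, name: str, amount: int) -> None:
        self.accounts[name]["balance"] += amount

    def delete_account(self, name: str) -> None:
        self.accounts.pop(name, None)


@contextmanager
def temporary_account(client: FakeAccountClient, prefix: str = "test-acct") -> Iterator[dict]:
    """Create a uniquely named Account, yield it, and always remove it afterwards."""
    # A random suffix keeps tests running in parallel from sharing the same Account.
    name = f"{prefix}-{uuid.uuid4().hex[:12]}"
    account = client.create_account(name)
    try:
        yield account
    finally:
        client.delete_account(name)


def check_deposit(client: FakeAccountClient) -> None:
    with temporary_account(client) as account:
        client.deposit(account["name"], 100)  # the operation under test
        assert client.accounts[account["name"]]["balance"] == 100
```

Because cleanup lives in the context manager rather than in each test, every test that uses `temporary_account` leaves the shared deployment in the same state it found it.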

Have Code Review Guidelines

Code review is the process of having someone else on your team or in your organization evaluate new code before it is merged into the main branch and packaged for consumption by others. Besides finding bugs much earlier, having other engineers review your test code before it is merged has several benefits. Reviewers can:

- Verify the test is following the correct input and output contracts before it is onboarded into your CI/CD pipeline

- Ensure there is appropriate logging enabled for readability and observability

- Confirm the tests have code comments and are well documented

- Ensure the tests handle exceptions correctly and return the appropriate exit codes

- Evaluate the number of input dependencies

- Promote collaboration by reviewing whether the tests satisfy the requirements
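A reviewer checking exception handling and exit codes can ask whether the entrypoint distinguishes two cases: tests that ran and failed, and tests that could not run at all. The pipeline may need to treat these cases differently. The sketch below assumes a hypothetical convention: 0 means all tests passed, 1 means one or more tests failed, and 2 means a setup error such as a missing input. pytest documents its own exit codes (0 when all tests pass, 1 when some fail), but the exact mapping for your containers is something your contract would need to define.

```python
import sys
from typing import Callable, Sequence

# Hypothetical exit-code convention for the test container contract.
EXIT_PASSED = 0
EXIT_TESTS_FAILED = 1
EXIT_SETUP_ERROR = 2


def run_suite(tests: Sequence[Callable[[], None]]) -> int:
    """Run every test and map the outcome to an exit code."""
    failed = 0
    for test in tests:
        try:
            test()
        except Exception as exc:  # assertion failures and unexpected errors both fail the test
            failed += 1
            print(f"FAILED {test.__name__}: {exc!r}", file=sys.stderr)
    return EXIT_TESTS_FAILED if failed else EXIT_PASSED


def main(setup: Callable[[], None], tests: Sequence[Callable[[], None]]) -> int:
    """Separate setup problems (no verdict possible) from test failures."""
    try:
        setup()  # for example: validate inputs and check dependencies
    except Exception as exc:
        print(f"ERROR during setup: {exc!r}", file=sys.stderr)
        return EXIT_SETUP_ERROR
    return run_suite(tests)
```

An entrypoint would finish with `sys.exit(main(setup, tests))`, so the exit code is the last thing the pipeline sees from the container.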

Conclusion

It is important to consider how your test design could affect your CI/CD pipeline and your ability to deliver software to market in a timely manner while maintaining quality. A defined contract for your tests lets you onboard tests into your organization's software delivery pipeline more efficiently and reduces the rate of failure.

Opinions expressed by DZone contributors are their own.