The Developer’s Guide to Web Speech API: What It Is, How It Works, and More

Speech recognition and speech synthesis have had a dramatic impact on areas such as accessibility. In this post, take a deep dive into the Web Speech API.

The Web Speech API is a web technology that allows you to incorporate voice data into apps. It converts speech into text and text into speech using your web browser.

The Web Speech API was introduced in 2012 by the W3C community. A decade later, this API is still under development and has limited browser compatibility.

This API supports short pieces of input, e.g., a single spoken command, as well as lengthy, continuous input. The capability for extensive dictation makes it ideal for integration with the Applause app, while brief inputs work well for language translation.

Speech recognition has had a dramatic impact on accessibility. Users with disabilities can navigate the web more easily using their voices. Thus, this API could become key to making the web a friendlier and more efficient place.

The text-to-speech and speech-to-text functionalities are handled by two interfaces: speech synthesis and speech recognition.

Speech Recognition

In the speech recognition (SpeechRecognition) interface, you speak into a microphone, and the speech recognition service then checks your words against its own grammar.

The API protects the privacy of its users by first asking permission to access the microphone. If the page using the API is served over HTTPS, it asks for permission only once. Otherwise, the API will ask every time.

Your device probably already includes a speech recognition system, e.g., Siri for iOS or Android Speech. When using the speech recognition interface, the default system will be used. After the speech is recognized, it is converted and returned as a text string.

In "one-shot" speech recognition, the recognition ends as soon as the user stops speaking. This is useful for brief commands, like a web search for app testing sites, or making a call. In "continuous" recognition, the user must end the recognition manually by using a "stop" button.

At the moment, speech recognition in the Web Speech API is officially supported only in Chrome, on desktop and on Android. Chrome requires the use of prefixed interfaces.

However, the Web Speech API is still experimental, and specifications could change. You can check whether your current browser supports the API by searching for the webkitSpeechRecognition object.
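The support check described above can be sketched as a small helper. This is only an illustration: the function name getSpeechRecognitionCtor and its globalObj parameter are assumptions made for this example (the parameter exists so the helper can also be run outside a browser); in a page you would pass window.

```javascript
// Returns the speech recognition constructor found on the given global
// object, or null when recognition is not supported.
// `globalObj` is a hypothetical parameter used for illustration; in a
// browser, pass `window`.
function getSpeechRecognitionCtor(globalObj) {
  if (!globalObj) {
    return null;
  }
  // Prefer the unprefixed name, then fall back to Chrome's prefixed one.
  return globalObj.SpeechRecognition || globalObj.webkitSpeechRecognition || null;
}

// Typical use in a page (not executed here):
//   const Ctor = getSpeechRecognitionCtor(window);
//   if (Ctor) {
//     const recognizer = new Ctor();
//   } else {
//     // Hide the microphone button or show a fallback text input.
//   }
```

Checking for both names means the same code should keep working if a browser ships the unprefixed interface.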

Speech Recognition Properties

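Let’s start with the constructor: SpeechRecognition(). Chrome exposes it under the prefixed name webkitSpeechRecognition, so check for both:

const speechRecognition = new (window.SpeechRecognition || window.webkitSpeechRecognition)();

Now let’s examine the callbacks for certain events: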

onstart: Triggered when the speech recognizer begins to listen to and recognize your speech. A message may be displayed to notify the user that the device is now listening.

onend: Triggered each time the speech recognition ends, whether the user stopped it or the service disconnected.

onerror: Triggered whenever a speech recognition error occurs. The event uses the SpeechRecognitionErrorEvent interface (called SpeechRecognitionError in older drafts).

onresult: Triggered when the speech recognition object has obtained results. It returns both the interim results and the final results. The event uses the SpeechRecognitionEvent interface.
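As a rough sketch of how these callbacks might be wired up, the helper below attaches status handlers to a recognizer. Note that browsers expose the handler properties in lowercase (onstart, onend, onerror). The function name wireRecognizerEvents, the showStatus callback, and the message wording are all assumptions made for this example; the error codes are ones defined by the specification.

```javascript
// Human-readable messages for a few error codes defined by the spec.
// The wording of the messages is illustrative only.
const ERROR_MESSAGES = new Map([
  ["no-speech", "No speech was detected."],
  ["audio-capture", "No microphone was found."],
  ["not-allowed", "Microphone permission was denied."],
  ["network", "A network error interrupted recognition."],
]);

// Attaches start, end, and error handlers to a recognizer.
// `showStatus` is a hypothetical callback that displays a message to the user.
function wireRecognizerEvents(recognizer, showStatus) {
  recognizer.onstart = () => showStatus("Listening...");
  recognizer.onend = () => showStatus("Stopped listening.");
  recognizer.onerror = (event) => {
    // `event.error` holds a short string code such as "not-allowed".
    const message = ERROR_MESSAGES.get(event.error) || `Recognition error: ${event.error}`;
    showStatus(message);
  };
  return recognizer;
}

// Typical use in a page (not executed here):
//   wireRecognizerEvents(speechRecognition, (msg) => {
//     document.querySelector("#status").textContent = msg;
//   });
```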

The SpeechRecognitionEvent object contains the following data:

results[i]: An array-like list of the result objects of the speech recognition, each element representing a recognized word or phrase

resultIndex: The lowest index in results that has changed since the previous event

results[i][j]: The j-th alternative for a recognized word or phrase; the first alternative is the one deemed most probable

results[i].isFinal: A Boolean value that indicates whether the result is final (true) or interim (false)

results[i][j].transcript: The text representation of the recognized speech

results[i][j].confidence: The probability of the result being correct (value range from 0 to 1)
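To make the structure above concrete, here is a minimal sketch of an onresult helper that walks the results from resultIndex onward and separates final text from interim text. The function name collectTranscripts is an assumption for this example, and it always takes the first (most probable) alternative.

```javascript
// Splits the results carried by a SpeechRecognitionEvent into final and
// interim text. Only entries from `resultIndex` onward are read, because
// earlier entries have not changed since the previous event.
function collectTranscripts(event) {
  let finalText = "";
  let interimText = "";
  for (let i = event.resultIndex; i < event.results.length; i++) {
    const result = event.results[i];
    const best = result[0]; // first alternative = most probable
    if (result.isFinal) {
      finalText += best.transcript;
    } else {
      interimText += best.transcript;
    }
  }
  return { finalText, interimText };
}

// Typical use in a page (not executed here):
//   speechRecognition.onresult = (event) => {
//     const { finalText, interimText } = collectTranscripts(event);
//     // Append finalText to the document; show interimText as a grey preview.
//   };
```

An index-based loop is used because results is an array-like list rather than a true array.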

What properties should we configure on the speech recognition object? Let’s take a look.

Continuous vs One-Shot

Decide whether you need the speech recognition object to listen to you continuously until you turn it off, or whether you only need it to recognize a short phrase. This is controlled by the continuous property, which defaults to false.

Let’s say you are using the technology for note-taking, to integrate with an inventory tracking template. You need to be able to speak at length with enough time to pause without sending the app back to sleep. You can set continuous to true like so:

speechRecognition.continuous = true;

Language

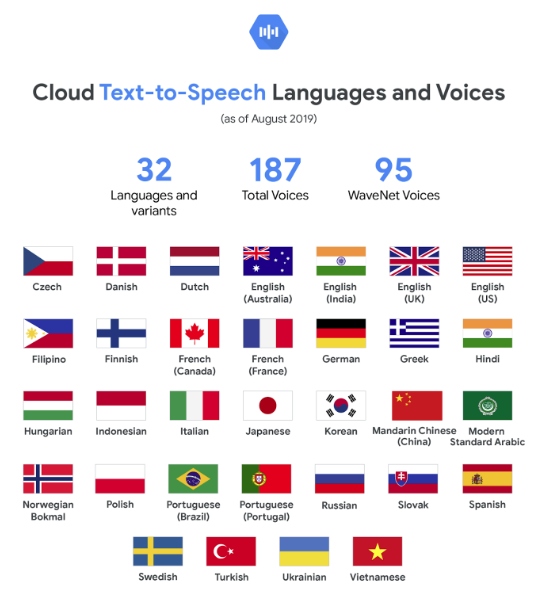

Image Sourced from Google

What language do you want the object to recognize? If your browser is set to English by default, it will automatically select English. However, you can also set the lang property to a locale code such as en-US.

Additionally, you could allow the user to select the language from a menu:

speechRecognition.lang = document.querySelector("#select_dialect").value;

Interim Results

Interim results refer to results that are not yet complete or final. You can enable the object to display interim results as feedback to users by setting this property to true:

speechRecognition.interimResults = true;

Start and Stop

If you have configured the speech recognition object to be continuous, then you will need to set the onclick property of the start and stop buttons, like so:

document.querySelector("#start").onclick = () => {
  speechRecognition.start();
};

document.querySelector("#stop").onclick = () => {
  speechRecognition.stop();
};

This will allow you to control when your browser begins "listening" to you, and when it stops.
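The three properties covered in this section can be set together. The sketch below is one possible way to do that; the helper name configureRecognizer and the shape of its options object are assumptions for this example, while continuous, interimResults, and lang are the properties described above.

```javascript
// Applies the settings discussed above to a recognizer in one call.
// Unspecified options fall back to the API defaults (false, false, and the
// browser's default language).
function configureRecognizer(recognizer, options = {}) {
  const { continuous = false, interimResults = false, lang } = options;
  recognizer.continuous = continuous;
  recognizer.interimResults = interimResults;
  if (lang) {
    recognizer.lang = lang; // a locale code such as "en-US"
  }
  return recognizer;
}

// Typical use in a page for a dictation feature (not executed here):
//   configureRecognizer(speechRecognition, {
//     continuous: true,
//     interimResults: true,
//     lang: document.querySelector("#select_dialect").value,
//   });
```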

So, we’ve taken an in-depth look at the speech recognition interface, its methods, and its properties. Now let’s explore the other side of Web Speech API.

Speech Synthesis

Speech synthesis is also known as text-to-speech or TTS. Speech synthesis means taking text from an app and converting it into speech, then playing it from your device’s speaker.

You can use speech synthesis for anything from driving directions to reading out lecture notes for online courses, to screen-reading for users with visual impairments.

In terms of browser support, speech synthesis in the Web Speech API can be used in Firefox desktop and mobile from Gecko version 42+. However, you have to enable permissions first. Firefox OS 2.5+ supports speech synthesis by default; no permissions are required. Chrome 33+ on desktop and Android also supports speech synthesis.

So, how do you get your browser to speak? The main controller interface for speech synthesis is SpeechSynthesis, but a number of related interfaces are required, e.g., for voices to be used for the output. Most operating systems will have a default speech synthesis system.

Put simply, you need to first create an instance of the SpeechSynthesisUtterance interface. The SpeechSynthesisUtterance interface contains the text the service will read, as well as information such as language, volume, pitch, and rate. After specifying these, put the instance into a queue that tells your browser what to speak and when.

Assign the text you need to be spoken to its "text" attribute, like so:

newUtterance.text =

The language will default to your app or browser’s language unless specified otherwise using the .lang attribute.
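The create-then-queue flow described above might look like the following sketch. The helper name speakText and its parameters are assumptions for this example; the synthesizer and the utterance constructor are passed in so the function can be exercised outside a browser. In a page you would pass window.speechSynthesis and SpeechSynthesisUtterance.

```javascript
// Builds an utterance for `text` and adds it to the synthesizer's queue.
// `synth` is expected to behave like window.speechSynthesis and
// `UtteranceCtor` like SpeechSynthesisUtterance; both are parameters here
// purely for illustration.
function speakText(synth, UtteranceCtor, text, options = {}) {
  const utterance = new UtteranceCtor(text);
  if (options.lang) {
    utterance.lang = options.lang; // otherwise the default language is used
  }
  synth.speak(utterance); // queued behind any utterances already pending
  return utterance;
}

// Typical use in a page (not executed here):
//   speakText(window.speechSynthesis, SpeechSynthesisUtterance,
//             "Turn left in 200 metres", { lang: "en-GB" });
```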

The list of available voices may load asynchronously after your website has loaded; when it is ready, the voiceschanged event is fired. To change your browser’s voice from its default, you can use the getVoices() method of the SpeechSynthesis object (window.speechSynthesis). This will return all of the voices available.

The variety of voices will depend on your operating system. Google has its own set of default voices, as does Mac OS X. Finally, choose your preferred voice using the Array.find() method.
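Here is one way the Array.find() step could be written. The helper name pickVoice and its fallback order (exact locale, then same base language, then the default voice) are assumptions chosen for this example, not behavior defined by the API. Each voice object is assumed to carry the lang and default properties that SpeechSynthesisVoice provides.

```javascript
// Picks a voice for the requested locale from a list of voices.
// Fallback order (an illustrative choice): exact locale match, then any
// voice with the same base language, then the default voice, else null.
function pickVoice(voices, lang) {
  const wanted = lang.toLowerCase();
  const base = wanted.split("-")[0];
  return (
    voices.find((voice) => voice.lang.toLowerCase() === wanted) ||
    voices.find((voice) => voice.lang.toLowerCase().split("-")[0] === base) ||
    voices.find((voice) => voice.default) ||
    null
  );
}

// Typical use in a page (not executed here). The voice list may be empty
// until the voiceschanged event has fired:
//   window.speechSynthesis.onvoiceschanged = () => {
//     const voice = pickVoice(window.speechSynthesis.getVoices(), "en-US");
//     if (voice) newUtterance.voice = voice;
//   };
```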

Customize your SpeechSynthesisUtterance as you wish. You can start, stop and pause the queue, or change the talking speed (“rate”).
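As a hedged sketch of that customization, the helper below sets rate, pitch, and volume on an utterance and clamps each value to the range the specification allows (rate 0.1 to 10, pitch 0 to 2, volume 0 to 1). The names clamp and applySpeechSettings are assumptions for this example.

```javascript
// Restricts `value` to the inclusive range [min, max].
const clamp = (value, min, max) => Math.min(max, Math.max(min, value));

// Sets rate, pitch, and volume on an utterance, clamped to the ranges
// allowed by the specification. Each setting defaults to 1.
function applySpeechSettings(utterance, { rate = 1, pitch = 1, volume = 1 } = {}) {
  utterance.rate = clamp(rate, 0.1, 10);
  utterance.pitch = clamp(pitch, 0, 2);
  utterance.volume = clamp(volume, 0, 1);
  return utterance;
}

// Typical use in a page (not executed here):
//   applySpeechSettings(newUtterance, { rate: 1.5 });
//   window.speechSynthesis.speak(newUtterance);
//   window.speechSynthesis.pause();   // pause the queue
//   window.speechSynthesis.resume();  // continue speaking
//   window.speechSynthesis.cancel();  // stop and empty the queue
```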

Web Speech API: The Pros and Cons

When should you use Web Speech API? It’s great fun to play with, but the technology is still in development. Still, there are plenty of potential use cases. Integrating APIs can help modernize IT infrastructure. Let’s look at what Web Speech API is able to do well, and which areas are ripe for improvement.

Boosts Productivity

Talking into a microphone is quicker and more efficient than typing a message. In today’s fast-paced working life, we may need to be able to access web pages while on the go.

It’s also fantastic for reducing the admin workload. Improvements in speech-to-text technology have the potential to significantly cut time spent on data entry tasks. STT could be integrated into audio-video conferencing to speed up note-taking in your stand-up meeting.

Accessibility

As previously mentioned, both STT and TTS can be wonderful tools for users with disabilities or support needs. Additionally, users who may struggle with writing or spelling for any reason may be better able to express themselves through speech recognition.

In this way, speech recognition technology could be a great equalizer on the internet. Encouraging the use of these tools in an office also promotes workplace accessibility.

Translation

Web Speech API could be a powerful tool for language translation due to its capability for both STT and TTS. At the moment, not every language is available; this is one area in which Web Speech API has yet to reach its full potential.

Offline Capability

One drawback is that an internet connection is necessary for the API to function. At the moment, the browser sends the input to its servers, which then return the result. This limits the circumstances in which Web Speech can be used.

Accuracy

Incredible strides have been made to refine the accuracy of speech recognizers. You may still occasionally run into difficulties, such as with technical jargon and other specialized vocabularies, or with regional accents. However, as of 2022, speech recognition software is approaching human-level accuracy.

In Conclusion

Although Web Speech API is experimental, it could be an amazing addition to your website or app. From top PropTech companies to marketing, all workplaces can use this API to supercharge efficiency. With a few simple lines of JavaScript, you can open up a whole new world of accessibility.

Speech recognition makes it easier and more efficient for your users to navigate the web. Get excited to watch this technology grow and evolve!
