The Best Way to Optimize Garbage Collection Is NOT By Tuning it

When it comes to optimizing GC, tuning is not the answer.

Join the DZone community and get the full member experience.

Join For Free

When asked: "What would you do if your Java app experiences long GC pauses?" — most people would answer: "increase the heap size and/or tune the garbage collector." That works in many but not all situations. The heap may already be close to the total memory available. And GC tuning, beyond a few most obvious and efficient flags, often becomes a black art where each new change brings a smaller improvement. Worse, hyper-tuning GC makes it tightly coupled with your current heap size, number of CPUs, and workload patterns.

Need help choosing the right GC? Check out this post!

If any of these components changes in the future, the GC may suddenly perform much worse. And (what a surprise!) at that point, it may be really hard to remember why each of the flags in the combination like the one below is there, and what its effect was supposed to be:

Is it possible to improve GC performance in some alternative way: not by tuning GC, but by making your app fundamentally more GC-friendly? It turns out that often the answer is yes. To illustrate that, let's consider the following example.

A Memory-Intensive Benchmark

The simple benchmark below models, very roughly, an application that reads incoming data (strings), performs some calculations and stores the key-value pairs in the cache:

/** Base class for all benchmarks */abstract class AbstractTest extends Thread { static final int NUM_UNIQUE_STRINGS = 400 * 1000; static final int NUM_ITERS = 10 * 1000 * 1000; static final String PREFIX = "This is a long and useless string prefix just to fill memory with some long- and short-lived garbage"; final Random random = new Random(1); // Code to initialize and run test with 4 threads omitted for brevity... int nextNumber() { return random.nextInt(); } // Simulate internal calculations // Simulate receiving a string from the outside world String nextString() { return PREFIX + random.nextInt(NUM_UNIQUE_STRINGS); } abstract void putInMap(String s, int x); public void run() { for (int i = 0; i < NUM_ITERS; i++) { String s = nextRandomString(); int x = nextRandom(); putInMap(s, x); } }}/** A concrete benchmark where we use the most basic implementation */static class Test1 extends AbstractTest { private final Map<String, Integer> map = new HashMap<>(); void putInMap(String s, int x) { map.put(s, x); }}The full code is available here.

When I run this benchmark with four parallel threads on my MacBook Pro, using Oracle JDK 1.8.0_151 with -XX:+UseG1GC -Xms2g -Xmx2g GC settings, it takes 84 sec. Over 90 percent of that time is spent in garbage collections.

Why does that happen? It's because this application creates objects (instances of String and java.lang.Integer) all the time, and then, by replacing entries in the hashmap, makes similar older objects garbage. There is a very high object turnaround here, yet most objects live for long enough so that the GC cannot discard them quickly. And when an object's lifetime exceeds a certain threshold, it's much more expensive to collect it once it becomes garbage.

Note that the number of unique strings that this app generates is limited to 400,000. This happens in real life, or at least in web and business apps, very often, since most data that they send and receive, such as customer names, product names, city/country names, URLs, etc. tend to be repetitive. Thus, both real apps and this benchmark generate many copies of the same strings over their lifetime.

That means that the number of keys in our hashmap is limited; it stops growing at some point, and the number of live objects in the heap stays roughly constant as well. However, the total number of objects, and their turnaround, is enough to create so much work for the garbage collector that even when I increase the heap size six-fold, from 2GB to 12GB, the run time improves just ~2.5 times, to 29 sec.

Can we do any better? Yes. For starters, we can avoid creating duplicate strings, or at least avoid keeping them in memory for longer than a few microseconds each.

Deduplicating Strings

Let's create another benchmark class, where everything is the same as above, except just one method that is overridden as follows:

String nextString() { return super.nextString().intern(); }We use the String.intern() method here to intern, or deduplicate, each string routed to the cache immediately after it's created. Internally, this method basically queries a JVM-managed concurrent weak hash set of strings. If a string with the same value exists, it is returned; otherwise, the string is cached. If interning is used for a duplicate string that has just been created, for which a cached copy exists, the new string copy becomes garbage immediately. This is short-lived garbage, which is the easiest for the GC. And it turns out that making long-living garbage short-living can result in a dramatic improvement: When I run the new benchmark with the same parameters, it completes in just 25 sec. That's more than 3 times faster than the original benchmark with the same heap size, and also faster than the original benchmark with 6 times the heap size!

String.intern() is the most common and convenient method of string deduplication, since, for one thing, this method is always available in any part of the code. Some authors criticize the performance and scalability of this method, but it turns out that it actually takes a few microseconds to execute. Thus, when applied judiciously (only for strings that have a high probability of being duplicate), this method is just fine for most applications. Getting rid of duplicate strings in less trivial situations, when interning is difficult to apply directly, is discussed in one of my previous articles. And if you really need to deduplicate strings with maximum performance and minimum memory requirements, you may consider, for example, writing your own small, customized FALF Interner.

Is there any other opportunity to improve GC time here? Yes.

Getting Rid of Boxed Numbers

Those who are not familiar with the internals of Java boxed numbers, such as java.lang.Integer or java.lang.Long, are encouraged to read the following article. Long story short, a boxed number instance requires several times more memory than the plain number that it wraps. Furthermore, the presence of a large number of such objects makes the GC's job much harder than when all the numbers are stored in a single object — a primitive array. So, if you need a data structure that maps objects to numbers, numbers to objects or numbers to numbers, what do you do? The good news is that there are third-party libraries, such as fastutil, that provide specialized implementations of maps and lists that internally use primitive arrays instead of boxed numbers. If we modify our benchmark as follows:

// Use specialized it.unimi.dsi.fastutil.objects.Object2IntOpenHashMap to map// Strings to int numbersObject2IntOpenHashMap<String> specializedMap = new Object2IntOpenHashMap<>();void putInMap(String s, int x) { specializedMap.put(s, x); }and rerun it with the same parameters as before, it now takes just 12 sec! That's twice faster than the previous version and 7 times faster than the original version. Getting rid of creation and GCing of a vast number of small objects clearly improves performance.

How Do We Know Which Object to Optimize?

By now, it should be clear that by making selective, often very small and low-effort optimizations in the code, we may be able to improve GC performance of the application better, and much more reliably, than if we spend a lot of time tuning the garbage collector. Furthermore, improvements made in the code work well in the long term, regardless of the GC type and possible changes in the JVM, hardware, etc. A question remains, though: How do we know which objects to optimize? In our benchmark, it was straightforward because, after all, we had only two types of objects created in large quantities. But in any real-life application, such guessing just won't work. You should use a memory analysis tool instead. And the most comprehensive way of memory analysis is by taking a heap dump.

A heap dump is essentially a full snapshot of the running JVM's heap. To obtain a heap dump with garbage (a "full" dump), one should invoke the JDK jmap utility without the -live flag. There are a number of heap dump analysis tools available, both open-source and commercial. The most popular open-source tool is Eclipse MAT; there is also VisualVM and some less powerful, lesser-known tools. The commercial tools include the general-purpose Java profilers: JProfiler and YourKit, as well as one tool built specifically for heap dump analysis called JXRay.

Unlike most other tools, JXRay analyzes a heap dump right away for a large number of common problems, such as duplicate strings and other objects, suboptimal data structures, boxed numbers, etc. The tool generates a report with all the collected information in the HTML format. The advantage of this approach is that you can view the results of analysis anywhere at any time and share it with others easily. It also means that you can run the tool on any machine, including big and powerful, but "headless" machines in a data center.

Now, let's get back to our problem. The questions that are most important when analyzing heap dumps for GC optimizations are:

How much garbage does the given dump contain?

Which object types contribute most to the garbage?

Where (and what live data structures) most likely generate this garbage?

Here is how JXRay may help to answer these questions.

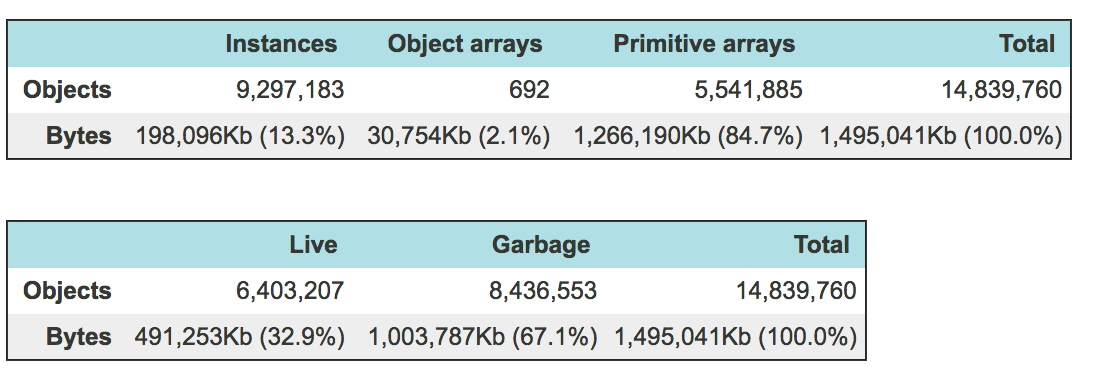

Determining the amount/percentage of garbage is straightforward: Just check the top two tables of the JXRay report. Here is the output for a heap dump generated from our benchmark when it runs with no optimizations: So, here, we immediately see that in this dump garbage takes about 1GB, or 67.1 percent, of the total used heap.

So, here, we immediately see that in this dump garbage takes about 1GB, or 67.1 percent, of the total used heap.

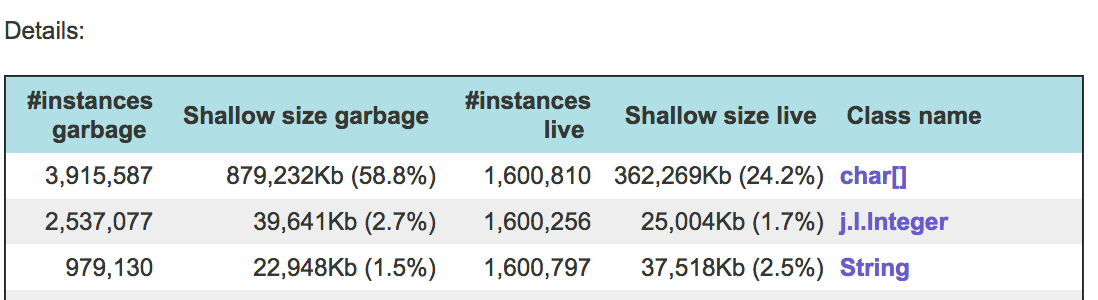

To find out what object types contribute most to garbage, let's open section 5 of the report ("Live vs Garbage objects"). Predictably, the top three entries there are the objects that makeup strings (String and char[]) and java.lang.Integer instances:

Note that the boxed number objects take an order of magnitude less memory than strings, but their quantity is just about twice smaller. That explains why getting rid of them improved our benchmark time so significantly: Time to move objects around is proportional to the object number. And now, finally, let's try to answer the most interesting question: Who is likely to generate all this garbage?

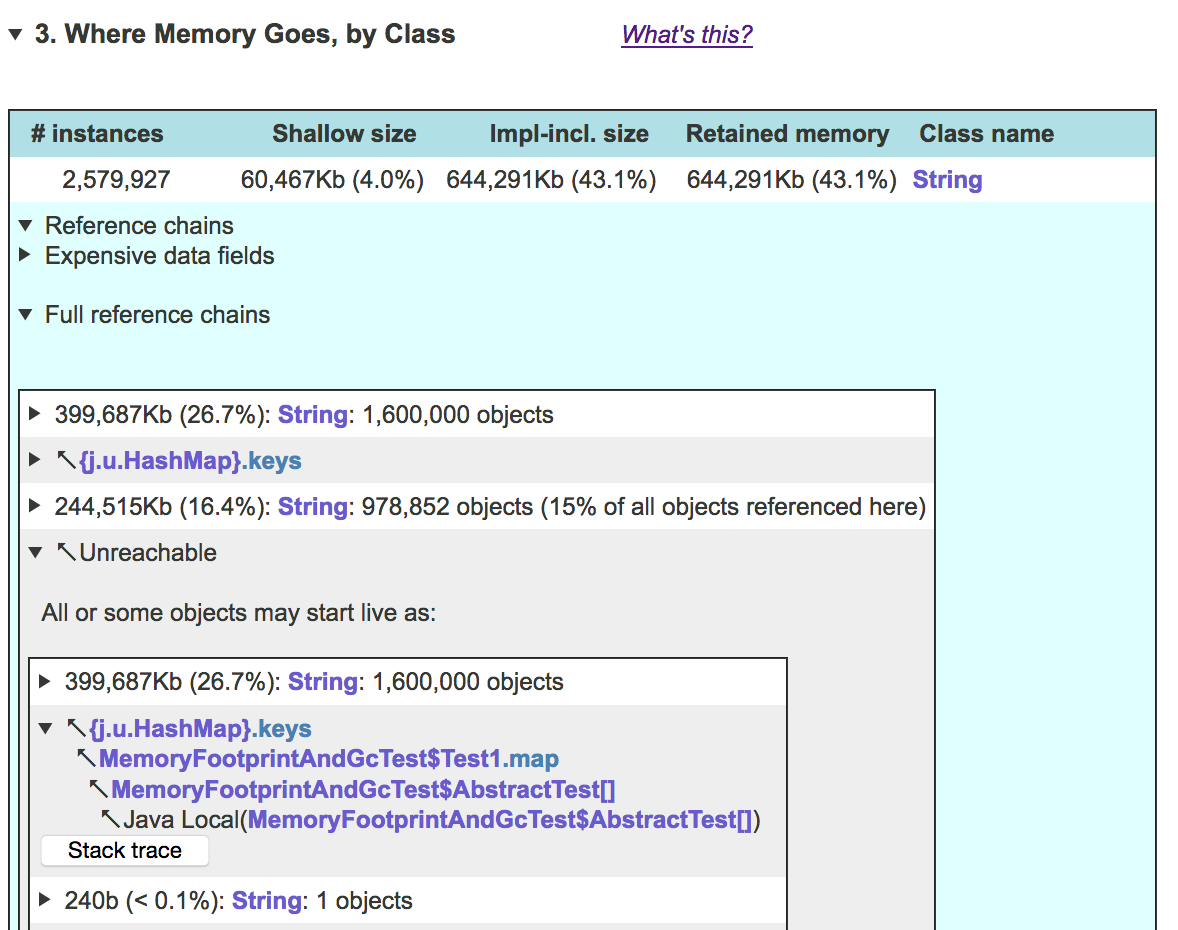

For that, let's go to section 3 of the report ("Where Memory Goes, by Class") and expand it. Then, look at the first two lines, which are for class String that takes the biggest share of memory. Expand the second line ("Reference chains"), then click on "Full reference chains".

The resulting table shows several sources of Strings (that is, groups and reference chains of objects ultimately referencing Strings). That also includes "Unreachable" (garbage) pseudo-source. Now, here is the exciting part: when you click on "Unreachable", JXRay presents its guesses on where these garbage objects most likely originate. Again, not surprisingly, here, it identified our cache as the most likely origin of the garbage strings:

Of course, this guessing has its limitations. Currently, JXRay uses some heuristics based on partial reference chains among garbage objects and similarity of contents in groups of garbage and live objects. Sometimes, however, certain garbage objects are discarded so quickly that there is literally no matching live objects for them. In this case, currently, all you can do is try to guess where they come from based on the garbage object contents. For that, JXRay presents random samples of objects in each group.

To summarize, the best way to optimize GC performance of the given application is to optimize its code so that it generates less garbage, especially medium- and long-living garbage that is the biggest problem for garbage collectors. The best way to find out whether such an optimization is possible, i.e. which objects are garbage and what code manages them, is to take and analyze a heap dump with a suitable tool.

Further Reading

Opinions expressed by DZone contributors are their own.

Comments